Abstract

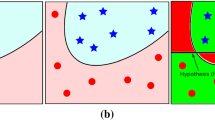

The class imbalance problem arises when a classifier must detect a rare but important class. This paper studies whether misclassifications stem not only from the imbalance itself but also from other factors acting in combination with it. In purely predictive settings, such an analysis is difficult because the data cannot be visualized. For kernel classifiers, we therefore propose a link to a kernel version of multidimensional scaling in the high-dimensional feature space. The transformed features disclose the intrinsic structure of the Hilbert space and are then used as inputs to a learning system; in the example, the prediction method is based on the SVMs-rebalance methodology. The graphical representations reveal the effects of masking, skewed distributions, and multimodal distributions, which also contribute to the poor performance. Studying the properties of the misclassifications makes it possible to develop ways of reducing them.
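The kernel version of multidimensional scaling mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it applies classical (Torgerson) scaling to the squared distances induced by a kernel matrix, and the RBF kernel, the value of `gamma`, the helper names `rbf_kernel` and `kernel_mds`, and the toy imbalanced data set are all assumptions made for illustration.

```python
import numpy as np


def rbf_kernel(X, gamma=1.0):
    """Gaussian (RBF) kernel matrix; the kernel choice is an assumption."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    return np.exp(-gamma * np.maximum(d2, 0.0))


def kernel_mds(K, n_components=2):
    """Classical MDS on the feature-space distances induced by K."""
    n = K.shape[0]
    # Squared distance in feature space: d_ij^2 = K_ii + K_jj - 2 K_ij
    diag = np.diag(K)
    D2 = diag[:, None] + diag[None, :] - 2.0 * K
    # Double centering turns squared distances into a centered Gram matrix
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ D2 @ J
    B = 0.5 * (B + B.T)  # remove round-off asymmetry before eigh
    w, V = np.linalg.eigh(B)
    idx = np.argsort(w)[::-1][:n_components]
    w_top = np.clip(w[idx], 0.0, None)  # guard against tiny negative values
    # Coordinates are the leading eigenvectors scaled by sqrt(eigenvalue)
    return V[:, idx] * np.sqrt(w_top)


# Hypothetical imbalanced toy data: 200 majority points, 10 minority points
rng = np.random.default_rng(0)
majority = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
minority = rng.normal(loc=2.5, scale=0.5, size=(10, 2))
X = np.vstack([majority, minority])
y = np.r_[np.zeros(200), np.ones(10)]

K = rbf_kernel(X, gamma=0.5)
Y = kernel_mds(K, n_components=2)  # 2-D coordinates suitable for plotting
```

Plotting the rows of `Y` colored by `y` gives a two-dimensional view of how the two classes sit relative to each other in the feature space, which is the kind of picture in which overlap, skew, or multiple modes would become visible.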

Cite this article

Hsu, CC., Wang, KS., Chung, HY. et al. A study of visual behavior of multidimensional scaling for kernel perceptron algorithm. Neural Comput & Applic 26, 679–691 (2015). https://doi.org/10.1007/s00521-014-1746-2

DOI: https://doi.org/10.1007/s00521-014-1746-2