Abstract

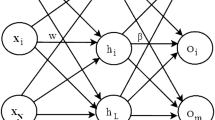

Extreme learning machine (ELM), proposed by Huang et al., is a learning algorithm for single-hidden-layer feedforward neural networks (SLFNs). Because it learns quickly and efficiently, ELM has attracted wide attention. Regularized extreme learning machine (RELM) and extreme learning machine with kernel (KELM) were subsequently developed. However, both retain the single-hidden-layer structure and are therefore limited in feature extraction. Deep learning (DL) uses a multi-layer network structure that extracts significant features by learning from lower layers to higher layers. Because DL mostly relies on gradient descent, however, adjusting its parameters is time-consuming. This paper proposes a novel model, the convolutional extreme learning machine with kernel (CKELM), which is based on DL and addresses two problems: KELM is weak at feature extraction, and DL takes too long to train. In the CKELM model, alternating convolutional and subsampling layers are added to the hidden layer of the original KELM to perform feature extraction and classification. The convolutional and subsampling layers do not adjust their parameters with a gradient algorithm, because some architectures have been shown to perform well with random weights. Finally, experiments on the USPS and MNIST databases show that CKELM achieves higher accuracy than ELM, RELM and KELM, which demonstrates the validity of the proposed model. To make the approach more convincing, we also compared it with other ELM-based methods and other DL methods, and obtained satisfactory results.
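The abstract describes CKELM only at the architectural level: untrained convolution and subsampling layers produce features, and a KELM classifies them. The following is a minimal, stdlib-only Python sketch of that pipeline, not the authors' implementation. Everything beyond the abstract is an assumption made for illustration: a single convolution/subsampling stage (the paper alternates several), filter weights drawn uniformly from [-1, 1], a tanh nonlinearity, 2×2 average pooling, a Gaussian (RBF) kernel, ±1 one-vs-all targets, and the hypothetical names `extract_features` and `KELM`. The classifier uses the standard KELM solution of Huang et al. (2012), β = (I/C + Ω)^(-1) T with Ω_ij = K(x_i, x_j), and scores a new sample as f(x) = [K(x, x_1), ..., K(x, x_N)] β.

```python
import math
import random


def conv2d_valid(img, kernel):
    """'Valid' 2-D cross-correlation of a square image with a square kernel."""
    n, k = len(img), len(kernel)
    m = n - k + 1
    return [[sum(img[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(m)]
            for i in range(m)]


def avg_pool(fmap, size=2):
    """Non-overlapping average pooling, used here as the subsampling layer."""
    m = len(fmap) // size
    return [[sum(fmap[i * size + a][j * size + b]
                 for a in range(size) for b in range(size)) / (size * size)
             for j in range(m)]
            for i in range(m)]


def make_random_filters(num_filters, k, seed=0):
    """Random filters that are never trained (assumed uniform on [-1, 1])."""
    rng = random.Random(seed)
    return [[[rng.uniform(-1.0, 1.0) for _ in range(k)] for _ in range(k)]
            for _ in range(num_filters)]


def extract_features(img, filters):
    """One convolution + tanh + subsampling stage, flattened into a vector."""
    feats = []
    for f in filters:
        fmap = [[math.tanh(v) for v in row] for row in conv2d_valid(img, f)]
        for row in avg_pool(fmap):
            feats.extend(row)
    return feats


def rbf(x, y, gamma):
    """Gaussian (RBF) kernel K(x, y) = exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def solve(A, B):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [row[n:] for row in M]


class KELM:
    """Kernel ELM: beta = (I/C + Omega)^-1 T; scores f(x) = [K(x, x_i)]_i beta."""

    def __init__(self, C=100.0, gamma=0.1):
        self.C, self.gamma = C, gamma

    def fit(self, X, labels, num_classes):
        self.X = X
        n = len(X)
        # Omega_ij = K(x_i, x_j), with 1/C added on the diagonal (regularization).
        A = [[rbf(X[i], X[j], self.gamma) + (1.0 / self.C if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        # One-vs-all targets coded as +1 / -1 (an assumption of this sketch).
        T = [[1.0 if c == y else -1.0 for c in range(num_classes)] for y in labels]
        self.beta = solve(A, T)
        return self

    def predict(self, x):
        k = [rbf(x, xi, self.gamma) for xi in self.X]
        scores = [sum(k[i] * self.beta[i][c] for i in range(len(k)))
                  for c in range(len(self.beta[0]))]
        return max(range(len(scores)), key=scores.__getitem__)
```

To use the sketch, draw one fixed set of filters with `make_random_filters`, compute `extract_features(img, filters)` for every training image, pass the vectors and labels to `KELM(C, gamma).fit(...)`, and call `predict` on the features of a test image. The filters are never updated; the only "training" is the single N×N linear solve in `fit`, which is where the speed advantage over gradient-based DL that the abstract describes would come from. The naive O(N³) solver limits this sketch to small N.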

References

Huang GB, Zhu QY, Siew CK (2004) Extreme learning machine: a new learning scheme of feedforward neural networks. In: Proceedings of the IEEE international joint conference on neural networks, vol 2, pp 985–990

Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

Vapnik VN (1995) The nature of statistical learning theory. Springer, New York

Rousseeuw PJ, Leroy A (1987) Robust regression and outlier detection. Wiley, New York

Deng W, Zheng Q, Chen L et al (2010) Research on extreme learning of neural networks. Chin J Comput 33(2):279–287

Huang GB, Zhou HM, Ding XJ, Zhang R (2012) Extreme learning machine for regression and multiclass classification. IEEE Trans Syst Man Cybern Part B Cybern 42(2):513–529

Ding SF, Xu XZ, Nie R (2014) Extreme learning machine and its applications. Neural Comput Appl 25(3):549–556

Hinton GE, Osindero S, Teh YW (2006) A fast learning algorithm for deep belief nets. Neural Comput 18(7):1527–1554

Hinton GE, Salakhutdinov RR (2006) Reducing the dimensionality of data with neural networks. Science 313(5786):504–507

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Saxe A, Koh PW, Chen Z et al (2011) On random weights and unsupervised feature learning. In: Proceedings of the 28th international conference on machine learning (ICML-11), pp 1089–1096

Jarrett K, Kavukcuoglu K, Ranzato M, LeCun Y (2009) What is the best multi-stage architecture for object recognition? In: IEEE 12th international conference on computer vision, pp 2146–2153

Guo RF, Huang GB, Lin QP et al (2009) Error minimized extreme learning machine with growth of hidden nodes and incremental learning. IEEE Trans Neural Netw 20(8):1352–1357

Zhu QY, Qin AK, Suganthan PN et al (2005) Evolutionary extreme learning machine. Pattern Recogn 38(10):1759–1763

Huang GB, Liang NY, Rong HJ et al (2005) On-line sequential extreme learning machine. In: The IASTED international conference on computational intelligence, Calgary

Huang GB, Siew CK (2005) Extreme learning machine with randomly assigned RBF Kernels. Int J Inf Technol 11(1):16–24

Huang GB, Chen L, Siew CK (2006) Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans Neural Netw 17(4):879–892

Haykin S (1999) Neural networks: a comprehensive foundation. Prentice Hall, New Jersey

Wang J, Guo C (2013) Wavelet kernel extreme learning machine classifier. Microelectron Comput 30(10):73–76

Ding S, Zhang Y, Xu X (2013) A novel extreme learning machine based on hybrid kernel function. J Comput 8(8):2110–2117

Kwolek B (2005) Face detection using convolutional neural networks and Gabor filters. In: Artificial neural networks: biological inspirations–ICANN 2005. Springer Berlin, pp 551–556

Laserson J (2011) From neural networks to deep learning: zeroing in on the human brain. ACM Crossroads Stud Mag 18(1):29–34

Neubauer C (1992) Shape, position and size invariant visual pattern recognition based on principles of recognition and perception in artificial neural networks. North Holland, Amsterdam, pp 833–837

Vincent P, Larochelle H, Bengio Y et al (2008) Extracting and composing robust features with denoising autoencoders. In: Proceedings of the 25th international conference on machine learning (ICML 2008). ACM Press, New York, pp 1096–1103

Huang FJ, LeCun Y (2006) Large-scale learning with SVM and convolutional nets for generic object categorization. In: Proceedings of IEEE computer society conference on computer vision and pattern recognition. IEEE Computer Society, Washington, pp 284–291

Tivive FHC, Bouzerdoum A (2003) A new class of convolutional neural networks (SICoNNets) and their application of face detection. Proc Int Jt Conf Neural Netw 3:2157–2162

Simard PY, Steinkraus D, Platt JC (2003) Best practices for convolutional neural networks applied to visual document analysis. In: Seventh international conference on document analysis and recognition, pp 958–962

Skittanon S, Surendran AC, Platt JC et al (2004) Convolutional networks for detection. Interspeech, Lisbon, pp 1077–1080

Chen Y, Han C, Wang C et al (2006) The application of a convolution neural network on face and license plate detection. In: 18th international conference on pattern recognition, ICPR. IEEE Computer Society, Hong Kong, pp 552–555

Poultney C, Chopra S, LeCun Y (2006) Efficient learning of sparse representations with an energy-based model. In: Advances in neural information processing systems. MIT Press, MA, pp 1137–1144

Platt JC, Steinkraus D, Simard PY (2003) Best practices for convolutional neural networks applied to visual document analysis. In: Proceedings of the international conference on document analysis and recognition, ICDAR. Edinburgh, pp 958–962

Keysers D, Deselaers T, Gollan C, Ney H (2007) Deformation models for image recognition. IEEE Trans Pattern Anal Mach Intell 29(8):1422–1435

Zhang Y, Ding S, Xu X et al (2013) An algorithm research for prediction algorithm of extreme learning machines based on rough sets. J Comput 8(5):1335–1342

Lu J, Lu Y, Cong G (2011) Reverse spatial and textual k nearest neighbor search. In: Proceedings of SIGMOD. ACM Press, Athens, pp 349–360

Shen F, Wang L, Zhang J (2014) Reduced extreme learning machine employing SVM technique. J Huazhong Univ Sci Technol (Natural Science Edition) 42(6):107–111

Zhang D, Ooi BC, Tung A (2010) Locating mapped resources in web 2.0. In: Proceedings of ICDE. Long Beach, pp 521–532

Castaño A, Fernández-Navarro F, Hervás-Martínez C (2013) PCA-ELM: a robust and pruned extreme learning machine approach based on principal component analysis. Neural Process Lett 37(3):377–392

Jirayucharoensak S, Pan-Ngum S, Israsena P (2014) EEG-based emotion recognition using deep learning network with principal component based covariate shift adaptation. Sci World J 2014:627892-1–627892-10

Acknowledgments

This work is supported by the National Natural Science Foundation of China (No. 61379101) and the National Key Basic Research Program of China (No. 2013CB329502).

Cite this article

Ding, S., Guo, L. & Hou, Y. Extreme learning machine with kernel model based on deep learning. Neural Comput & Applic 28, 1975–1984 (2017). https://doi.org/10.1007/s00521-015-2170-y

DOI: https://doi.org/10.1007/s00521-015-2170-y