Abstract

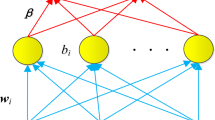

In this paper, we propose a novel hybrid text classification model based on a deep belief network (DBN) and softmax regression. A DBN is introduced to address the sparse, high-dimensional matrix computation problem of text data. After feature extraction with the DBN, softmax regression is employed to classify the text in the learned feature space. In the pre-training stage, the DBN and the softmax regression are first trained separately. Then, in the fine-tuning stage, they are combined into a single model and the system parameters are optimized with the limited-memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS) algorithm. The experimental results on the Reuters-21578 and 20-Newsgroups corpora show that the proposed model converges in the fine-tuning stage and performs significantly better than classical algorithms such as SVM and KNN.
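The two-stage procedure described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a single binary RBM layer trained with full-batch CD-1 in place of a full DBN, plain gradient descent for the softmax pre-training, a small weight-decay term, and a synthetic bag-of-words corpus. All function names (`make_toy_corpus`, `pretrain_rbm`, `fit`, `predict`) and hyperparameters are hypothetical. Only the joint fine-tuning step uses L-BFGS, via SciPy's `minimize` with `method="L-BFGS-B"`.

```python
import numpy as np
from scipy.optimize import minimize

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # shift for numerical stability
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def make_toy_corpus(n_docs=200, n_words=20, seed=0):
    """Synthetic binary bag-of-words: each class prefers one half of the vocabulary."""
    rng = np.random.default_rng(seed)
    y = rng.integers(0, 2, size=n_docs)
    p = np.full((n_docs, n_words), 0.05)
    half = n_words // 2
    p[y == 0, :half] = 0.6
    p[y == 1, half:] = 0.6
    return (rng.random((n_docs, n_words)) < p).astype(float), y

def pretrain_rbm(X, n_hidden, rng, epochs=50, lr=0.1):
    """Unsupervised CD-1 pre-training of one binary RBM (assumed stand-in for the DBN)."""
    W = 0.01 * rng.standard_normal((X.shape[1], n_hidden))
    b_h, b_v = np.zeros(n_hidden), np.zeros(X.shape[1])
    for _ in range(epochs):
        h0 = sigmoid(X @ W + b_h)                      # positive phase
        h_s = (rng.random(h0.shape) < h0).astype(float)
        v1 = sigmoid(h_s @ W.T + b_v)                  # one Gibbs step
        h1 = sigmoid(v1 @ W + b_h)                     # negative phase
        W += lr * (X.T @ h0 - v1.T @ h1) / len(X)
        b_h += lr * (h0 - h1).mean(axis=0)
        b_v += lr * (X - v1).mean(axis=0)
    return W, b_h

def unpack(theta, shapes):
    """Split a flat parameter vector into arrays with the given shapes."""
    parts, i = [], 0
    for s in shapes:
        n = int(np.prod(s))
        parts.append(theta[i:i + n].reshape(s))
        i += n
    return parts

def loss_and_grad(theta, shapes, X, Y, decay=1e-4):
    """Cross-entropy of the stacked RBM-features + softmax model, with its gradient."""
    W, b, V, c = unpack(theta, shapes)
    H = sigmoid(X @ W + b)
    logP = log_softmax(H @ V + c)
    loss = -np.sum(Y * logP) / len(X) + 0.5 * decay * (np.sum(W**2) + np.sum(V**2))
    dZ = (np.exp(logP) - Y) / len(X)
    dA = (dZ @ V.T) * H * (1.0 - H)                    # backprop through the sigmoid layer
    grads = (X.T @ dA + decay * W, dA.sum(axis=0), H.T @ dZ + decay * V, dZ.sum(axis=0))
    return loss, np.concatenate([g.ravel() for g in grads])

def fit(X, y, n_hidden=8, n_classes=2, seed=0):
    rng = np.random.default_rng(seed)
    Y = np.eye(n_classes)[y]
    # Stage 1a: pre-train the feature extractor without labels.
    W, b = pretrain_rbm(X, n_hidden, rng)
    # Stage 1b: pre-train softmax regression on the frozen features.
    H = sigmoid(X @ W + b)
    V, c = np.zeros((n_hidden, n_classes)), np.zeros(n_classes)
    for _ in range(200):
        dZ = (np.exp(log_softmax(H @ V + c)) - Y) / len(X)
        V -= 0.5 * (H.T @ dZ)
        c -= 0.5 * dZ.sum(axis=0)
    # Stage 2: treat both parts as one model and fine-tune all parameters with L-BFGS.
    params = (W, b, V, c)
    shapes = [p.shape for p in params]
    theta0 = np.concatenate([p.ravel() for p in params])
    loss_before = loss_and_grad(theta0, shapes, X, Y)[0]
    res = minimize(loss_and_grad, theta0, args=(shapes, X, Y), jac=True,
                   method="L-BFGS-B", options={"maxiter": 200})
    return unpack(res.x, shapes), loss_before, res.fun

def predict(params, X):
    W, b, V, c = params
    return np.argmax(sigmoid(X @ W + b) @ V + c, axis=1)

if __name__ == "__main__":
    X, y = make_toy_corpus()
    params, before, after = fit(X, y)
    print("loss before/after fine-tuning:", before, after)
    print("training accuracy:", (predict(params, X) == y).mean())
```

Packing all weights into one flat vector is what lets a generic quasi-Newton optimizer update the feature extractor and the classifier together, which is the sense in which the two pre-trained parts become a single model during fine-tuning.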

Acknowledgments

This work is supported by the National Natural Science Foundation of China (61163034, 61373067, 61572228, 61272207, 61472158), the 321 Talents Project of the two level of Inner Mongolia Autonomous Region (2010), the Inner Mongolia Talent Development Fund (2011), the Natural Science Foundation of Inner Mongolia Autonomous Region of China (2016MS0624), the Research Program of Science and Technology at Universities of Inner Mongolia Autonomous Region (NJZY16177), and the Science and Technology Development Program of Jilin Province (20140101195JC, 20140520070JH, 20160101247JC).

Cite this article

Jiang, M., Liang, Y., Feng, X. et al. Text classification based on deep belief network and softmax regression. Neural Comput & Applic 29, 61–70 (2018). https://doi.org/10.1007/s00521-016-2401-x

DOI: https://doi.org/10.1007/s00521-016-2401-x