Abstract

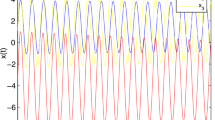

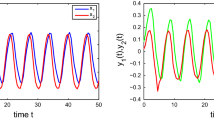

This paper is concerned with positive solutions and the global exponential stability of a positive equilibrium of inertial neural networks with multiple time-varying delays. Using the comparison principle for differential inequalities, we first derive conditions on the damping coefficients and self-excitation coefficients which ensure that, when the connection weights and inputs are nonnegative, every state trajectory of the system starting from an admissible set of initial conditions remains nonnegative. Then, using a homeomorphism-based approach, we derive conditions, formulated as linear programming problems involving M-matrices, for the existence, uniqueness, and global exponential stability of a positive equilibrium of the system. Two examples with numerical simulations illustrate the effectiveness of the obtained results.

Ethics declarations

Conflicts of interest

The authors declare that they have no potential conflicts of interest to report regarding this work.

Appendices

Appendix 1: Proof of Lemma 3

(Necessity) Let \(\eta _i>0\) and \(\xi _i>0\), \(i\in [n]\), be such that \(D_{\alpha }\succ 0\) and \(D_{\beta }\succ 0\). Then, we have

Observe that \(\xi _i(a_i-\xi _i)-b_i=\frac{1}{4}\left( a_i^2-4b_i\right) -\left( \xi _i-\frac{1}{2}a_i\right) ^2\). Thus,

(Sufficiency) Let condition (11) hold. Then, the constants \(\xi _i^l=\frac{a_i-\sqrt{a_i^2-4b_i}}{2}\) and \(\xi _i^u=\frac{a_i+\sqrt{a_i^2-4b_i}}{2}\) are well defined, \(\xi _i^l>0\), and \(\xi _i^u<a_i\). In addition, \(\xi _i(a_i-\xi _i)-b_i=(\xi _i^u-\xi _i)(\xi _i-\xi _i^l)>0\) for all \(\xi _i\in (\xi _i^l,\xi _i^u)\). Therefore,

for any \(\xi _i\in (\xi _i^l,\xi _i^u)\). It follows from (29) that \(D_{\alpha }\succ 0\) and \(D_{\beta }\succ 0\) for any \(\eta _i>0\) and \(\xi _i\in (\xi _i^l,\xi _i^u)\). This completes the proof.
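The sufficiency argument can be checked numerically. The sketch below is not the authors' code; it assumes \(\alpha _i=a_i-\xi _i\) and \(\eta _i\beta _i=\xi _i(a_i-\xi _i)-b_i\), which is consistent with the identity used in the necessity part and with \(D_{\alpha \xi }-B=D_{\beta }D_{\eta }\) in Appendix 3, and it takes \(a_i>0\), \(b_i>0\), \(a_i^2>4b_i\) as a stand-in for condition (11), whose exact statement is not reproduced here. The helper names `xi_interval` and `transformed_coeffs` are hypothetical.

```python
import math


def xi_interval(a, b):
    """Return (xi_l, xi_u) for one neuron; assumes a > 0, b > 0 and a^2 > 4b."""
    if not (a > 0 and b > 0 and a * a > 4 * b):
        raise ValueError("need a > 0, b > 0 and a^2 > 4b")
    root = math.sqrt(a * a - 4 * b)
    return (a - root) / 2, (a + root) / 2


def transformed_coeffs(a, b, xi, eta):
    """Hypothetical helper: alpha = a - xi, beta = (xi*(a - xi) - b) / eta."""
    alpha = a - xi
    beta = (xi * (a - xi) - b) / eta
    return alpha, beta


a, b, eta = 3.0, 1.0, 1.0
xi_l, xi_u = xi_interval(a, b)

# Sample points strictly inside (xi_l, xi_u): alpha and beta must both be positive,
# and xi*(a - xi) - b must match the factorization (xi_u - xi)(xi - xi_l).
for k in range(1, 10):
    xi = xi_l + k * (xi_u - xi_l) / 10
    alpha, beta = transformed_coeffs(a, b, xi, eta)
    assert alpha > 0 and beta > 0
    assert math.isclose(xi * (a - xi) - b, (xi_u - xi) * (xi - xi_l), abs_tol=1e-12)

print(round(xi_l, 4), round(xi_u, 4))
```

For \(a_i=3\), \(b_i=1\) the admissible interval is \(((3-\sqrt{5})/2,(3+\sqrt{5})/2)\), so for example \(\xi _i=1\) gives \(\alpha _i=2\) and \(\beta _i=1/\eta _i\).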

Appendix 2: Proof of Theorem 1

For any initial condition in \({\mathscr {A}}_T\), it suffices to prove that the corresponding solution \(z(t)=(x^{\top }(t),y^{\top }(t))^{\top }\) of (5) is positive. To this end, we first note that if \(y_i(t)\ge 0\), \(t\in [0,t_f)\), for some \(t_f>0\), then the first equation in (5) gives

Thus, it suffices to show that \(y(t)=(y_i(t))\succeq 0\) for all \(t\ge 0\).
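The step from \(y_i\ge 0\) to \(x_i\ge 0\) can be made explicit under an assumption: if the first equation of (5) reads \(\dot{x}_i(t)=-\xi _ix_i(t)+\eta _iy_i(t)\) (the form suggested by the first block row \((D_{\xi },-D_{\eta })\) of \({\mathscr {M}}\) in Appendix 3, but not stated in this excerpt), then variation of constants gives

```latex
x_i(t) = e^{-\xi_i t}\,\phi_i(0) + \eta_i \int_0^t e^{-\xi_i (t-s)}\, y_i(s)\, ds \;\ge\; 0,
\qquad t \in [0, t_f),
```

since \(\phi _i(0)\ge 0\), \(\eta _i>0\), and \(y_i(s)\ge 0\) on \([0,t_f)\).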

For a given \(\epsilon >0\), let \(z_{\epsilon }(t)=(x^{\top }_{\epsilon }(t),y^{\top }_{\epsilon }(t))^{\top }\) be the solution of (5) with initial functions \(\phi _i(\cdot )\) and \(\psi _{i\epsilon }(\cdot )=\psi _i(\cdot )+\epsilon\). Since \(\phi (s)=(\phi _i(s))\succeq 0\) and \(\psi _{\epsilon }(s)=(\psi _{i\epsilon }(s))\succeq \epsilon {\mathbf {1}}_n\) for all \(s\in [-\tau ^+,0]\), where \({\mathbf {1}}_n\) denotes the vector in \({\mathbb {R}}^n\) with all entries equal to one, it follows from (5) and the continuity of solutions that \(y_{\epsilon }(t)=(y_{i\epsilon }(t))\succ 0\), \(t\in [0,t_f)\), for some small \(t_f>0\). Arguing by contradiction, assume that there exist \(t_1>0\) and an index \(i\in [n]\) such that

and \(y_{\epsilon }(t)\succeq 0\) for all \(t\in [0,t_1]\). Then, multiplying by \(e^{\alpha _it}\) and using (5), we obtain

It follows from (31) and \(D_{\beta }e^{D_{\alpha }t}x_{\epsilon }(t)\succeq 0\), \(t\in [0,t_1]\), that

where \({\hat{f}}({\hat{x}}_{\epsilon }(s))=\big (f_j(x_{j\epsilon }(s-\tau _j(s)))\big )\) and \(E_n\) is the identity matrix in \({\mathbb {R}}^{n\times n}\).

Since the vector fields \(F_1(x)=Cf(x)\) and \(F_2(x)=Df(x)\) are order-preserving and \(x_{\epsilon }(t)\succeq 0\), \({\hat{x}}_{\epsilon }(t)\succeq 0\) for \(t\in [0,t_1]\), we obtain from (32) that

where \(D_{\alpha \eta }=D_{\alpha }D_{\eta }\). Letting \(t\uparrow t_1\) in (33) and noting that \(E_n-e^{-D_{\alpha }t_1}\succ 0\), we readily obtain

which contradicts (30). Therefore, \(x_{\epsilon }(t)\succeq 0\) and \(y_{\epsilon }(t)\succ 0\) for all \(t\ge 0\). Letting \(\epsilon \downarrow 0\), we obtain \(z(t)=\lim _{\epsilon \rightarrow 0}z_{\epsilon }(t)\succeq 0\). This completes the proof.
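A discrete analogue of this positivity property can be observed in simulation. The sketch below assumes, without confirmation from this excerpt, that (5) arises from \(\ddot{x}_i=-a_i\dot{x}_i-b_ix_i+\sum _jc_{ij}f_j(x_j(t))+\sum _jd_{ij}f_j(x_j(t-\tau _j(t)))+I_i\) via \(y_i=(\dot{x}_i+\xi _ix_i)/\eta _i\), giving \(\dot{x}=-D_{\xi }x+D_{\eta }y\) and \(\dot{y}=-D_{\alpha }y+D_{\beta }x+D_{\eta }^{-1}(Cf(x)+Df({\hat{x}})+I)\) with \(\alpha _i=a_i-\xi _i\), \(\beta _i=(\xi _i(a_i-\xi _i)-b_i)/\eta _i\). The activation `tanh`, the delays, the weights, and the function name `simulate` are hypothetical choices for illustration. With step size \(h\) satisfying \(h\xi _i\le 1\) and \(h\alpha _i\le 1\), each forward-Euler update is a nonnegative combination of nonnegative quantities, so the iterates stay nonnegative; this illustrates Theorem 1 but does not replace its proof.

```python
import numpy as np


def simulate(a, b, xi, eta, C, D, I, tau, phi, psi, tau_max=1.0, T=20.0, h=0.01):
    """Forward-Euler sketch of the ASSUMED first-order form of the inertial network.

    x' = -D_xi x + D_eta y
    y' = -D_alpha y + D_beta x + D_eta^{-1} (C f(x) + D f(x_delayed) + I)
    with alpha = a - xi and beta = (xi*(a - xi) - b) / eta (assumed definitions).
    """
    alpha = a - xi
    beta = (xi * (a - xi) - b) / eta
    if (h * xi > 1).any() or (h * alpha > 1).any():
        raise ValueError("step size too large for a positivity-preserving Euler step")
    f = np.tanh  # hypothetical activation: nondecreasing, f(0) = 0
    n = len(a)
    m = int(round(tau_max / h))  # number of history steps covering [-tau_max, 0]
    steps = int(round(T / h))
    x = np.zeros((m + steps + 1, n))
    y = np.zeros((m + steps + 1, n))
    for k in range(m + 1):  # fill the history grid s = -tau_max, ..., 0
        s = (k - m) * h
        x[k], y[k] = phi(s), psi(s)
    cols = np.arange(n)
    for k in range(m, m + steps):
        t = (k - m) * h
        # Per-neuron delayed state x_j(t - tau_j(t)), rounded down to the grid.
        lag = np.minimum(np.floor(tau(t) / h).astype(int), m)
        x_del = x[k - lag, cols]
        drive = C @ f(x[k]) + D @ f(x_del) + I
        # Both updates are nonnegative combinations when beta >= 0 and drive >= 0.
        x[k + 1] = (1 - h * xi) * x[k] + h * eta * y[k]
        y[k + 1] = (1 - h * alpha) * y[k] + h * beta * x[k] + h * drive / eta
    return x[m:], y[m:]


# Hypothetical data: a_i^2 > 4 b_i and xi_i inside (xi_l, xi_u), so alpha, beta > 0.
a, b = np.array([3.0, 3.0]), np.array([1.0, 1.0])
xi, eta = np.array([1.0, 1.0]), np.array([1.0, 1.0])
C = np.array([[0.1, 0.2], [0.1, 0.1]])  # nonnegative connection weights
D = np.array([[0.1, 0.1], [0.2, 0.1]])
I = np.array([0.5, 0.2])                # nonnegative inputs
tau = lambda t: np.array([0.5 + 0.3 * np.sin(t), 0.8 * abs(np.cos(t))])
phi = lambda s: np.array([0.2, 0.0])    # nonnegative initial functions
psi = lambda s: np.array([0.0, 0.1])

x, y = simulate(a, b, xi, eta, C, D, I, tau, phi, psi)
print(x.min() >= 0, y.min() >= 0)
```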

Appendix 3: Proof of Theorem 2

We define the following mappings

For any vectors \(\chi _1=\begin{bmatrix}x_1\\ y_1\end{bmatrix}\) and \(\chi _2=\begin{bmatrix}x_2\\ y_2\end{bmatrix}\) in \({\mathbb {R}}^{2n}\), we have

Therefore,

where \({\mathscr {S}}(x_1-x_2)={{\mathrm{diag}}}\{{{\mathrm{sgn}}}(x_{1i}-x_{2i})\}\).

By assumption (A1) and the fact that \(D_{\alpha \xi }-B=D_{\beta }D_{\eta }\succ 0\), we obtain, similarly to (34),

where \(L_f={{\mathrm{diag}}}\{l_i^f\}\). From (34) and (35), we have

where \({\mathscr {M}}=\begin{pmatrix}D_{\xi }&-D_{\eta }\\ B-D_{\alpha \xi }-(C+D)L_f&D_{\alpha \eta }\end{pmatrix}\). On the other hand, it is clear that

Thus, combining (36) and (37) gives

Let \(\chi _0\) be a positive vector satisfying (14). Then, from (38), we have

If \({\mathscr {H}}(\chi _1)-{\mathscr {H}}(\chi _2)=0\), then (14) and (39) imply \(\chi _1=\chi _2\). Hence, the mapping \({\mathscr {H}}(\cdot )\) is injective on \({\mathbb {R}}^{2n}\). Moreover, setting \(\chi _2=0\) in (39), we have

which ensures that \(\Vert {\mathscr {H}}(\chi _k)\Vert _{\infty }\rightarrow \infty\) for any sequence \(\{\chi _k\}\) in \({\mathbb {R}}^{2n}\) satisfying \(\Vert \chi _k\Vert _{\infty }\rightarrow \infty\). Thus, the continuous mapping \({\mathscr {H}}(\cdot )\) is proper. By Lemma 2, \({\mathscr {H}}(\cdot )\) is a homeomorphism onto \({\mathbb {R}}^{2n}\). Consequently, the equation \({\mathscr {H}}(\chi )=0\) has a unique solution \(\chi _*\in {\mathbb {R}}^{2n}\), which is the unique equilibrium point (EP) of (5).
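The matrix \({\mathscr {M}}\) above is a Z-matrix (all off-diagonal entries are nonpositive), and for a Z-matrix the existence of a vector \(\chi _0\succ 0\) with \({\mathscr {M}}\chi _0\succ 0\) (equivalently \({\mathscr {M}}^{\top }\chi _0\succ 0\)) is exactly the statement that \({\mathscr {M}}\) is a nonsingular M-matrix, which in turn holds if and only if the solution of \({\mathscr {M}}\chi ={\mathbf {1}}_{2n}\) is strictly positive. The sketch below uses this to replace the linear program by one linear solve; an LP solver such as `scipy.optimize.linprog` could be used instead. It assumes that condition (14) has this feasibility form and that \(\alpha _i=a_i-\xi _i\); the function names and all numerical data are hypothetical.

```python
import numpy as np


def build_M(a, b, xi, eta, C, D, Lf):
    """Assemble the 2n x 2n matrix M of Appendix 3 under the ASSUMED definition
    alpha_i = a_i - xi_i (so that B - D_{alpha xi} = -D_beta D_eta)."""
    alpha = a - xi
    upper = np.hstack([np.diag(xi), -np.diag(eta)])
    lower = np.hstack([np.diag(b - alpha * xi) - (C + D) @ Lf, np.diag(alpha * eta)])
    return np.vstack([upper, lower])


def m_matrix_certificate(M, tol=1e-12):
    """Return (True, chi) if M is a nonsingular M-matrix, where chi > 0 and M chi = 1.

    For a Z-matrix this is equivalent to the LP feasibility problem
    'find chi > 0 with M chi > 0', so a single linear solve suffices.
    """
    off_diag = M - np.diag(np.diag(M))
    if (off_diag > tol).any():  # not a Z-matrix: the equivalence does not apply
        return False, None
    try:
        chi = np.linalg.solve(M, np.ones(M.shape[0]))
    except np.linalg.LinAlgError:  # singular M cannot be a nonsingular M-matrix
        return False, None
    return bool((chi > tol).all()), chi


a, b = np.array([3.0, 3.0]), np.array([1.0, 1.0])
xi, eta = np.array([1.0, 1.0]), np.array([1.0, 1.0])
Lf = np.eye(2)  # Lipschitz constants l_i^f = 1, e.g. for tanh activations
C = np.array([[0.1, 0.2], [0.1, 0.1]])
D = np.array([[0.1, 0.1], [0.2, 0.1]])

ok, chi = m_matrix_certificate(build_M(a, b, xi, eta, C, D, Lf))
print(ok, chi)

strong = np.full((2, 2), 0.3)  # heavier connection weights
ok_strong, _ = m_matrix_certificate(build_M(a, b, xi, eta, strong, strong, Lf))
print(ok_strong)
```

With the lighter weights the solve returns a strictly positive \(\chi \), which can serve as \(\chi _0\); with the heavier weights the solution has negative entries, so no positive certificate exists for those data.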

Cite this article

Van Hien, L., Hai-An, L.D. Positive solutions and exponential stability of positive equilibrium of inertial neural networks with multiple time-varying delays. Neural Comput & Applic 31, 6933–6943 (2019). https://doi.org/10.1007/s00521-018-3536-8

DOI: https://doi.org/10.1007/s00521-018-3536-8