Abstract

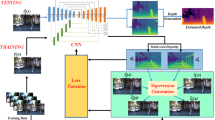

Extracting dense depth from a single image is an important yet challenging computer vision task. Compared with stereo depth estimation, sensing scene depth from monocular images is much more difficult and ambiguous because epipolar geometry constraints cannot be exploited. Recent advances in deep learning have brought significant progress in monocular depth estimation. This paper explores the effects of multi-scale structures on the performance of monocular depth estimation, and then uses the predicted depth and its corresponding uncertainty to obtain a more refined 3D reconstruction. First, we explore three multi-scale architectures and compare their qualitative and quantitative results with those of several state-of-the-art approaches. Second, to improve the robustness of the system and to quantify the reliability of the predicted depth for subsequent 3D reconstruction, we model the uncertainty of noisy data in a new loss function. Last, the predicted depth map and the corresponding depth uncertainty are incorporated into a monocular reconstruction system. The monocular depth estimation experiments are performed mainly on the widely used NYU Depth V2 dataset, on which the proposed method achieves state-of-the-art performance. For 3D reconstruction, our proposed framework can reconstruct smoother and denser models of various scenes.
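
The abstract does not give the form of the new uncertainty loss or of the fusion step, so the following is only a reference sketch of one common approach, not the authors' method. It shows a per-pixel (heteroscedastic) Laplace negative log-likelihood in the spirit of Kendall and Gal (2017), plus inverse-variance weighting as one standard way a predicted uncertainty can down-weight unreliable depth during reconstruction. The function names, the per-pixel log-scale output `log_b`, and the convention that a ground-truth depth of 0 marks a missing pixel are all hypothetical assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple


def laplace_nll_loss(pred_depth: Sequence[float],
                     log_b: Sequence[float],
                     gt_depth: Sequence[float]) -> float:
    """Mean per-pixel Laplace negative log-likelihood (constant log 2 dropped).

    Each valid pixel contributes |y - d| * exp(-s) + s, where s = log b is a
    hypothetical predicted log-scale. A large residual can be "explained away"
    by a larger b, at the cost of the +s penalty; for a residual r > 0 the term
    is minimized at s = log r.
    Pixels with gt_depth <= 0 are treated as missing and skipped (assumption).
    """
    total, count = 0.0, 0
    for d, s, y in zip(pred_depth, log_b, gt_depth):
        if y <= 0.0:
            continue  # no ground-truth depth for this pixel
        total += abs(y - d) * math.exp(-s) + s
        count += 1
    return total / count if count else 0.0


def laplace_variance(log_b: float) -> float:
    """Variance of a Laplace distribution with scale b = exp(log_b), i.e. 2 * b**2."""
    return 2.0 * math.exp(2.0 * log_b)


def fuse_depths(depths: List[float], variances: List[float]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent depth estimates of one point.

    Returns (fused depth, fused variance); low-variance estimates dominate.
    """
    weights = [1.0 / v for v in variances]
    w_sum = sum(weights)
    fused = sum(w * d for w, d in zip(weights, depths)) / w_sum
    return fused, 1.0 / w_sum


if __name__ == "__main__":
    # Two valid pixels and one missing pixel (gt = 0); unit scale everywhere.
    pred = [2.0, 3.0, 1.0]
    gt = [2.5, 3.0, 0.0]
    print(laplace_nll_loss(pred, [0.0, 0.0, 0.0], gt))  # 0.25

    # Fuse two observations of the same point with variances 1.0 and 3.0.
    fused, var = fuse_depths([2.0, 4.0], [1.0, 3.0])
    print(round(fused, 3), round(var, 3))  # 2.5 0.75
```

Predicting `log b` rather than `b` keeps the scale positive without clamping and avoids division by zero. Inverse-variance weighting is the optimal rule for independent Gaussian errors; applying it to Laplace variances, as here, is a simplification.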

Acknowledgements

This work was supported in part by Zhejiang Provincial Science Foundation of China (Grant No. LY18F010004) and Major Scientific Project of Zhejiang Lab (No. 2018DD0ZX01).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ding, Y., Lin, L., Wang, L. et al. Digging into the multi-scale structure for a more refined depth map and 3D reconstruction. Neural Comput & Applic 32, 11217–11228 (2020). https://doi.org/10.1007/s00521-020-04702-3

DOI: https://doi.org/10.1007/s00521-020-04702-3