Abstract

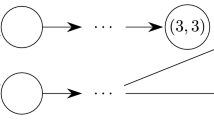

An increasing number of complex problems pose significant challenges for decision-making theory and reinforcement learning practice. These problems often involve multiple conflicting reward signals that inherently lead to poor exploration by agents seeking a specific goal. In extreme cases, the agent gets stuck in a sub-optimal solution and starts behaving harmfully. To overcome such obstacles, we introduce two actor-critic deep reinforcement learning methods, namely Multi-Critic Single Policy (MCSP) and Single Critic Multi-Policy (SCMP), which adjust agent behaviors to efficiently achieve a designated goal by adopting a weighted-sum scalarization of different objective functions. In particular, MCSP creates a human-centric policy that corresponds to a predefined priority weight of different objectives. SCMP, in contrast, generates a mixed policy based on a set of priority weights; that is, the generated policy uses the knowledge of different policies (each corresponding to one priority weight) to dynamically prioritize objectives in real time. We examine our methods using the Asynchronous Advantage Actor-Critic (A3C) algorithm, whose multithreading mechanism lets us dynamically balance the training intensity of different policies within a single network. Finally, simulation results show that MCSP and SCMP significantly outperform A3C with respect to the mean total reward in two complex problems: Food Collector and Seaquest.
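The weighted-sum scalarization mentioned above can be illustrated with a short sketch. It assumes, as the name MCSP suggests, one critic per objective, and it combines standard A3C-style n-step advantages for each objective using the priority weight. The function names `n_step_returns` and `scalarized_advantage` are hypothetical, and this is one plausible reading of the idea rather than the authors' exact update rule.

```python
import numpy as np


def n_step_returns(rewards, bootstrap_value, gamma=0.99):
    """Discounted n-step returns for a rollout of vector-valued rewards.

    rewards: shape (T, K), one column per objective.
    bootstrap_value: shape (K,), per-objective critic estimates for the
        state reached after the last step (zeros if that state is terminal).
    Returns an array of shape (T, K).
    """
    rewards = np.asarray(rewards, dtype=float)
    returns = np.zeros_like(rewards)
    running = np.asarray(bootstrap_value, dtype=float)
    # Accumulate discounted returns backwards in time, as in A3C rollouts.
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def scalarized_advantage(rewards, values, bootstrap_value, weight, gamma=0.99):
    """Weighted sum of per-objective advantages (assumed MCSP-style combination).

    values: shape (T, K), per-objective critic estimates V_k(s_t).
    weight: shape (K,), the priority weight, e.g. (0.5, 0.5).
    Returns a scalar advantage per time step, shape (T,).
    """
    returns = n_step_returns(rewards, bootstrap_value, gamma)
    per_objective_adv = returns - np.asarray(values, dtype=float)
    return per_objective_adv @ np.asarray(weight, dtype=float)
```

The scalar advantage returned here would then play the role of the usual single-objective advantage in the actor's policy-gradient term; changing `weight` changes which objective dominates that signal.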

Ethics declarations

Conflict of Interest

The authors declare that there is no conflict of interest.

Appendices

Appendix A. Agent behavior analysis

In this section, we analyze agent behaviors under MCSP and SCMP in Food Collector to understand the mechanism by which each method tackles the LiS problem. We use a priority weight of (0.5, 0.5) for both methods. Figure 11 shows how the agent's behavior differs between the two methods in Food Collector. The agent using MCSP eats chicken even when its energy is full, and it collects only rice to accumulate rewards. The agent therefore follows a mechanical strategy to find a long-term solution. In contrast, the agent using SCMP is greedier: it collects both chicken and rice to accumulate rewards (even when its energy is low) and eats chicken only when the situation becomes dangerous. Specifically, in the early stage the agent prioritizes collecting food over eating chicken because its energy decreases slowly. Once the agent achieves a high score, its energy decreases rapidly, so it prioritizes eating food over collecting it to avoid energy depletion. In other words, the SCMP agent is “smarter” than the MCSP agent because it dynamically modifies the priority of different objectives in real time to adapt to the environment. This strategy is quite similar to a human strategy and enables the agent to finish the task efficiently in a short period. To measure the efficiency of the agents, we compare the mean total number of steps per episode of each agent during training. Figure 11c shows the performance of two agents: one trained with MCSP using the priority weight (0.5, 0.5), and the other trained with SCMP using the set \(P_4\) and evaluated with the input weight (0.5, 0.5). The SCMP agent is more efficient than the MCSP agent in terms of the mean total number of steps per episode.
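A training curve of the mean number of steps per episode, like the one compared in Fig. 11c, can be computed from logged episode lengths as sketched below. The sliding-window average and the `window` size are assumptions for illustration; the text does not state how the curve is smoothed. Under the reading that finishing the task sooner is more efficient, a lower curve indicates a more efficient agent.

```python
from collections import deque


def mean_steps_curve(episode_lengths, window=100):
    """Running mean of steps per episode over the most recent `window` episodes.

    episode_lengths: iterable of step counts, one per finished training episode.
    Returns a list with one averaged value per episode.
    """
    recent = deque(maxlen=window)  # drops the oldest length once full
    curve = []
    for length in episode_lengths:
        recent.append(length)
        curve.append(sum(recent) / len(recent))
    return curve
```

Running this separately on the MCSP and SCMP episode logs gives two curves that can be plotted against training progress for comparison.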

Appendix B. SCMP with different input weights

In this appendix, we examine the performance of SCMP in Food Collector and Seaquest by varying the input weight used for evaluation. We use the same training weight sets for SCMP as in Sect. 4. Figures 12, 13, 14 and 15 present the performance of SCMP in Food Collector with different input weights for evaluation. The mean total reward for collecting food (the primary reward signal) does not depend on the input weight. This is a practical advantage of SCMP: there is no need to search for an optimal input weight. The input weight serves only to adjust how the agent prioritizes objectives. In Food Collector, for example, the weight (0, 1) maximizes the mean total reward for eating food, whereas the weight (1, 0) restricts the agent from eating food. Figures 16, 17, 18, 19 and 20 show the performance of SCMP using different input weights in Seaquest, from which we draw the same conclusion as in Food Collector.
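The effect of the weights (1, 0) and (0, 1) can be seen in how a priority weight reshapes the scalarized reward under the weighted-sum scalarization described in the abstract. The sketch below assumes Food Collector rewards are ordered as (collect-food, eat-food), which matches the description above, and uses made-up per-step reward vectors. It illustrates scalarization only; it does not model how SCMP conditions its mixed policy on the input weight.

```python
import numpy as np

# Hypothetical per-step reward vectors, ordered as
# (collect-food reward, eat-food reward); values are illustrative only.
rewards = np.array([
    [1.0, 0.0],  # collected rice
    [0.0, 1.0],  # ate chicken
    [1.0, 0.0],  # collected chicken
])


def scalarize(reward_vectors, weight):
    """Weighted-sum scalarization: one scalar reward per step."""
    return np.asarray(reward_vectors, dtype=float) @ np.asarray(weight, dtype=float)


for w in [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0)]:
    print(w, scalarize(rewards, w).sum())
```

With (1, 0) the eat-food component contributes nothing to the scalar signal, so eating earns no credit; with (0, 1) only eating is rewarded.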

Cite this article

Nguyen, N.D., Nguyen, T.T., Vamplew, P. et al. A Prioritized objective actor-critic method for deep reinforcement learning. Neural Comput & Applic 33, 10335–10349 (2021). https://doi.org/10.1007/s00521-021-05795-0

DOI: https://doi.org/10.1007/s00521-021-05795-0