Abstract

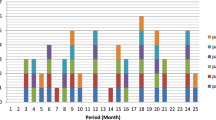

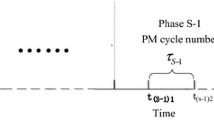

The current quality status of most machinery and equipment depends on its accumulated historical status, but the influence of past quality status on the current status of equipment is often overlooked in optimization management. This paper uses a Caputo-type fractional derivative to characterize this memory property. By refining the nature and characteristics of the equipment maintenance effect function and considering the memory characteristics of equipment quality, the existing model is improved, and a fractional-order optimal control model for equipment maintenance and replacement is constructed. Theoretical analysis verifies the effectiveness of the fractional-order equipment maintenance management model, and numerical experiments illustrate the difference between the integer-order and fractional-order models. The results show that as the order \(\alpha\) increases and the memory effect weakens, the optimal objective value of the equipment maintenance management problem also increases.

References

Sethi SP, Thompson GL (2000) Optimal control theory: Applications to management science and economics. Kluwer Academic Publishers, Boston

Kamien MI, Schwartz NL (2012) Dynamic optimization: the calculus of variations and optimal control in economics and management. Courier Corporation, USA

Wang Z, Wang Q, Zhang Z et al (2021) A new configuration of autonomous CHP system based on improved version of marine predators algorithm: a case study. Int Trans Electr Energy Syst 31(4):e12806

Tian MW, Yan SR, Han SZ et al (2020) New optimal design for a hybrid solar chimney, solid oxide electrolysis and fuel cell based on improved deer hunting optimization algorithm. J Clean Prod 249:119414

Dya B, Yong W, Hla B et al (2019) System identification of PEM fuel cells using an improved Elman neural network and a new hybrid optimization algorithm. Energy Rep 5:1365–1374

Almeida R, Brito DA, Cruz AMC, Martins N et al (2019) An epidemiological MSEIR model described by the Caputo fractional derivative. Int J Dyn Control 7(2):76–84

Binshi X (2001) History and development of equipment management. Equipment Manage 1:50–51 (in Chinese)

Degbotse AT, Nachlas JA (2003) Use of nested renewals to model availability under opportunistic maintenance policies. In: Reliability and Maintainability Symposium. IEEE

Hui W, Xiumin F, Junqi Y (2003) Optimizing Equal Risk Preventive Maintenance Strategy Considering Opportunity Maintenance. Mach Design Res 19(3):51–56 (in Chinese)

Duffuaa SO, Ben-Daya M, Al-Sultan KS et al (2001) A generic conceptual simulation model for maintenance systems. J Qual Maint Eng 7(3):207–219

Thompson GL (1968) Optimal maintenance policy and sale date of a machine. Manage Sci 14:543–550

Kamien MI, Schwartz NL (1971) Optimal maintenance and sale age for a machine subject to failure. Manage Sci 17:427–449

Sethi SP, Morton TE (1972) A mixed optimization technique for the generalized machine replacement problem. Naval Res Logis Q 19:471–481

Tapiero CS (1973) Optimal maintenance and replacement of a sequence of machines and technical obsolescence. Opsearch 19:1–13

Sethi SP, Thompson GL (1977) Christmas toy manufacturers problem: An application of the stochastic maximum principle. Opsearch 14:161–173

Sethi SP, Chand S (1979) Planning horizon procedures in machine replacement models. Manage Sci 25:140–151

Chand S, Sethi SP (1982) Planning horizon procedures for machine replacement models with several possible replacement alternatives. Naval Res Logis Q 29(3):483–493

Mehrez A, Berman N (1994) Maintenance optimal control, three machine replacement model under technological breakthrough expectations. J Optim Theory Appl 81:591–618

Mehrez A, Rabinowitz G, Shemesh E (2000) A discrete maintenance and replacement model under technological breakthrough expectations. Ann Oper Res 99:351–372

Dogramaci A, Fraiman NM (2004) Replacement decisions with maintenance under certainty: An imbedded optimal control model. Oper Res 52:785–794

Dogramaci A (2005) Hibernation durations for chain of machines with maintenance under uncertainty. In: Deissenberg C, Hartl RF (eds) Optimal control and dynamic games. Springer, New York, pp 231–238

Zhang R (2007) A limit property of the optimal control strategy for equipment maintenance and update under uncertain conditions. In: Proceedings of the 26th Chinese Control Conference. Control Theory Committee of the Chinese Society of Automation, pp 1376–1380 (in Chinese)

Love CE, Guo R (1996) Utilizing Weibull failure rates in repair limit analysis for equipment replacement/preventive maintenance decisions. J Oper Res Soc 47(11):1366–1376

Marquez AC, Heguedas AS (2002) Models for maintenance optimization: a study for repairable systems and finite time periods. Reliab Eng Syst Saf 75(3):367–377

Monahan GE (1982) Survey of partially observable Markov decision processes: theory, models and algorithms. Manage Sci 28(1):1–16

Duffuaa S, Ben-Daya M, Al-Sultan KS (2001) A generic conceptual simulation model for maintenance systems. J Qual Maint Eng 7(3):207–219

Charles AS, Floru IR, Azzaro-Pantel C et al (2003) Optimization of preventive maintenance strategies in a multipurpose batch plant: application to semiconductor manufacturing. Comput Chem Eng 27(4):449–467

Li GQ, Li JJ (2002) A semi-analytical simulation method for reliability assessments of structural systems. Reliab Eng Syst Saf 78(3):275–281

Ozekici S (1995) Optimal maintenance policies in random environments. Eur J Oper Res 283–294

Levitin G (2004) Reliability and performance analysis for fault-tolerant programs consisting of versions with different characteristics. Reliab Eng Syst Saf 86(1):75–81

Khan FI, Haddara MM (2003) Risk-based maintenance (RBM): a quantitative approach for maintenance/inspection scheduling and planning. J Loss Prev Process Ind 16(6):561–573

Bangjun H, Xiumin F, Dengzhe M (2004) Simulation and optimization of preventive maintenance control strategy for production system equipment. Comput Int Manuf Syst 7:15–19 (in Chinese)

Karamasoukis CC, Kyriakidis EG (2010) Optimal maintenance of two stochastically deteriorating machines with an intermediate buffer. Eur J Oper Res 207(1):297–308

Honggen C (2011) Implementation decision model for equipment maintenance improvement. Syst Eng Theory Practice 31(05):954–960 (in Chinese)

Caesarendra W, Widodo A, Thom PH (2011) Combined Probability approach and indirect data-driven method for bearing degradation prognostics. IEEE Trans Reliab 60(1):14–20

Yuzhong Z, Yang S (2013) Research on equipment maintenance cost model of machinery manufacturing enterprise. China Collect Econ 10:150–152 (in Chinese)

Wen Y (2015) Logistics equipment state maintenance model and its robust optimization. Dissertation, Jilin University (in Chinese)

Youtang L, Houjun L (2017) Dynamic preventive maintenance model for deteriorating equipment based on reliability constraints. J Lanzhou Univ Technol 43(05):35–39 (in Chinese)

Zhibin Z (2019) Research on joint decision of equipment maintenance and equipment replacement based on degradation system. Dissertation, South China University of Technology (in Chinese)

Podlubny I (1999) Fractional differential equations. Academic Press, San Diego

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier B.V., Amsterdam

Miller KS, Ross B (1993) An introduction to the fractional calculus and fractional differential equations. Wiley, New York

Bai Z, Lü H (2005) Positive solutions for boundary value problem of nonlinear fractional differential equation. J Math Anal Appl 311:495–505

Li CP, Deng WH (2007) Remarks on fractional derivatives. Appl Math Comput 187:777–784

Su X, Zhang S (2011) Unbounded solutions to a boundary value problem of fractional order on the half-line. Comput Math Appl 61:1079–1087

Bressan A, Piccoli B (2007) Introduction to the mathematical theory of control. American Institute of Mathematical Sciences Press, New York

Deng H, Wei W (2015) Existence and stability analysis for nonlinear optimal control problems with 1-mean equicontinuous control. J Ind Manage Opt 11:1409–1422

Kamocki R (2014) Pontryagin maximum principle for fractional ordinary optimal control problems. Math Methods Appl Sci 37:1668–1686

Yusun T (2004) Functional analysis course. Fudan University Press, Shanghai (in Chinese)

Wei G (2002) A generalization and application of Ascoli-Arzela Theorem. Syst Sci Math 22:115–122 (in Chinese)

Gongqing Z, Yuanqu L (2005) Lecture notes on functional analysis. Peking University Press, Beijing (in Chinese)

Dixon J, McKee S (1986) Weakly singular discrete Gronwall inequalities. Zeitschrift für Angewandte Mathematik und Mechanik 66:535–544

Li CP, Wu YJ, Ye RS (2013) Recent advances in applied nonlinear dynamics with numerical analysis: fractional dynamics, network dynamics, classical dynamics and fractal dynamics with their numerical simulations. World Scientific, Singapore

Wang JR, Zhou Y, Feckan M (2012) Nonlinear impulsive problems for fractional differential equations and Ulam stability. Comput Math Appl 64:3389–3405

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 71672195, 72072185, and 71872184) and the Project of Doctor of Entrepreneurship and Innovation in Jiangsu Province (Grant No. JSSCBS20211279).

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Appendices

Appendix

Important properties of fractional calculus

Lemma 6

[43] Let \(h \in C(0, 1)\bigcap L(0, 1)\) have a Riemann–Liouville type fractional derivative of order \(\nu\) \((\nu >0)\), and let this derivative also belong to \(C(0, 1)\bigcap L(0, 1)\); then,
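The displayed identity is not reproduced in this text. As a hedged reconstruction, the standard form of this result for the Riemann–Liouville operators (see, e.g., Bai and Lü in the reference list) reads as follows. The operator symbols \(I_{0+}^{\nu}\) (fractional integral) and \(D_{0+}^{\nu}\) (fractional derivative) are notation assumed here and may differ from the authors' own.

```latex
I_{0+}^{\nu} D_{0+}^{\nu} h(t) = h(t) + C_1 t^{\nu-1} + C_2 t^{\nu-2} + \cdots + C_N t^{\nu-N},
```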

where \(C_i \in {\mathbb {R}}, i=1, 2,\ldots ,N\) and N is the smallest positive integer that satisfies \(N \geqslant \nu\).

Lemma 7

[40,41,42] If \(\nu _1, \nu _2,\nu >0, t \in [0,1]\) and \(h(t) \in L [0,1],\) we have

Lemma 8

[40, 44] If \(h(t) \in C [0,1]\) and \(\nu >0\), we have

Lemma 9

[40] Let \(\nu >0\); then, for the fractional differential equation

there is a solution of the following form:

Lemma 10

[40] Let \(\nu >0\); then, we have
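The display for this lemma is not reproduced in this text. A hedged sketch of the standard identity linking the fractional integral and the Caputo derivative is given below. The integral symbol \({}_{0}I_t^{\nu}\) is an assumed notation, chosen to match the Caputo symbol \({}_{0}^{C}D_t^{\nu}\) used elsewhere in this appendix.

```latex
{}_{0}I_t^{\nu}\, {}_{0}^{C}D_t^{\nu} h(t) = h(t) + c_0 + c_1 t + c_2 t^{2} + \cdots + c_{n-1} t^{n-1},
```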

where \(c_i \in {\mathbb {R}}, i=0,1, 2,\cdots ,n-1, n=[\nu ]+1.\)

Lemma 11

[41, 42] If \(\nu _1, \nu _2,\nu >0, t \in [0,1]\) and function \(h(t) \in L [0,1]\), then we have

Lemma 12

[41, 45] Let \(h(t) \in L^1(0,+\infty )\), \(\nu _1, \nu _2,\nu >0\); then, we have

Lemma 13

[45] Let \(_{0}^C D_t^{\nu }h(t) \in L^1(0,+\infty ), \nu >0\); then, we have

where \(C_i \in {\mathbb {R}}, i=1, 2,\ldots ,N,\) and N is the smallest positive integer greater than or equal to \(\nu\).

Remark 2

If the function h in the above definitions and lemmas takes values in a Banach space E, then the integrals involved are understood in the Bochner sense. If an abstract function g is measurable and its norm is integrable in the Lebesgue sense, then g is Bochner integrable.

Important tools

Here, we mainly introduce the concept of compact sets and related theorems, some commonly used conclusions and theorems in functional analysis, several fixed point theorems, and the Gronwall inequality. These concepts are important tools for proving the main results of this article.

First, a series of concepts and theorems of compact sets are given. The relevant details can be found in [49, 51].

Definition 1

[49] Let X be a nonempty compact set, let \(\{A_{\alpha }\}\) be a family of subsets of X, and let \(A\subset X\). If \(A\subset \bigcup _{\alpha }A_{\alpha }\), then the family \(\{A_{\alpha }\}\) is said to cover A. If the intersection of any finitely many sets in \(\{A_{\alpha }\}\) is nonempty, then \(\{A_{\alpha }\}\) is said to have the finite intersection property.

Definition 2

[49] Let X be a topological space and \(A\subset X\). If from every family of open sets covering A one can always select finitely many open sets that still cover A, then A is said to be a compact set in X.

Remark 3

[49] Two small conclusions regarding compact sets are given as follows:

1. A closed subset of a compact set is a compact set.

2. A compact set in a Hausdorff space X must be a closed set.

Theorem 5

[49] Assume f is a continuous mapping from topological space \(X_1\) to topological space \(X_2\). If A is a compact set in \(X_1\), then its image f(A) is a compact set in \(X_2\).

Definition 3

[49] Assume X is a metric space and \(M\subset X\). If every sequence \(\{x_n\}\) of points in M has a subsequence \(\{x_{n_k}\}\) that converges in X, then M is called a sequentially compact (column compact) set in X. If \(\forall \epsilon >0\) there is a finite subset A of M such that \(M\subset \bigcup _{x\in A}O(x,\epsilon )\), then M is said to be totally bounded, and A is called a finite \(\epsilon\)-net of M.

Remark 4

[49] Three basic facts about sequentially compact sets are as follows:

1. The closure of a sequentially compact set is still a sequentially compact set.

2. A sequentially compact set in a metric space must be totally bounded; a totally bounded set must be bounded.

3. Assume X is a metric space and \(M\subset X\); then, M is a compact set if and only if M is a closed sequentially compact set.

Let C(X) denote the set of all continuous functions on the compact space X. For \(f\in C(X)\), define
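The norm itself is not reproduced in this text. The usual choice, assumed here, is the maximum norm, which is well defined because a continuous function attains its maximum on a compact space:

```latex
\Vert f \Vert = \max_{x \in X} |f(x)|.
```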

Under the norm \(\Vert \cdot \Vert\), C(X) is a Banach space.

Definition 4

[49] Let \(M\subset C(X)\). Suppose that for every \(\epsilon >0\) there exists \(\delta >0\) such that, whenever \(\rho (x,y)<\delta\), we have
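The inequality is not reproduced in this text. In the standard definition of equicontinuity, assumed here, the bound must hold uniformly over the whole family M, with \(\delta\) depending only on \(\epsilon\):

```latex
|f(x) - f(y)| < \epsilon \quad \text{for all } f \in M.
```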

Then, M is called an equicontinuous family of functions.

The Arzela–Ascoli theorem is given below.

Theorem 6

[49] Let E be a real Banach space, \(J_0=[a,b]\), and let

Its norm is

Then, \(C[J_0,E]\) is a Banach space.

The necessary and sufficient condition for \(H\subset C[J_0,E]\) to be a relatively compact set is that H is an equicontinuous family of functions and that for an arbitrary \(t\in J_0\), \(H(t)=\{x(t)|x\in H\}\) is a relatively compact set in E.

The generalized Arzela–Ascoli theorem is given below.

Theorem 7

[50] Let X be a compact metric space and \(M\subset C(X)\); the necessary and sufficient condition for M to be relatively compact is that M is a uniformly bounded and equicontinuous family of functions.

Definition 5

[51] Let \((X,\rho )\) be a metric space. A map \(T:(X,\rho )\rightarrow (X,\rho )\) is called a contraction map if there exists \(0<\alpha <1\) such that \(\rho (Tx,Ty)\leqslant \alpha \rho (x,y)\) \((\forall x,y \in X)\).

A commonly used fixed point theorem is given below: the Banach fixed point theorem, also known as the contraction mapping principle.

Theorem 8

[51] Let \((X,\rho )\) be a complete metric space, and let T be a contraction map from \((X,\rho )\) to itself; then, T has a unique fixed point in X. That is, there is exactly one \(x\in X\) such that \(Tx=x\).
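To make the contraction mapping principle concrete, the following minimal Python sketch runs the iteration \(x_{n+1}=Tx_n\) on the complete metric space [0, 1] with the usual distance. The map \(T(x)=\cos x\) and the helper name `fixed_point` are illustrative assumptions and do not come from the paper. This T maps [0, 1] into itself and is a contraction there because \(|T'(x)|\leqslant \sin 1<1\).

```python
import math


def fixed_point(T, x0, tol=1e-12, max_iter=10_000):
    """Iterate x_{n+1} = T(x_n) until successive iterates differ by less than tol.

    Hypothetical helper: convergence is only guaranteed when T is a
    contraction on a complete metric space containing x0.
    """
    x = x0
    for _ in range(max_iter):
        x_next = T(x)
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("iteration did not converge; T may not be a contraction")


if __name__ == "__main__":
    # Two different starting points lead to the same fixed point of cos on [0, 1].
    print(fixed_point(math.cos, 0.0))
    print(fixed_point(math.cos, 1.0))
```

Starting the iteration from different points of [0, 1] and obtaining the same limit illustrates the uniqueness part of the theorem; the existence part corresponds to the iteration converging at all.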

For details on weakly singular Gronwall inequalities, see [52,53,54].

Theorem 9

[52, 53] Let u(t) be a continuous function that is nonnegative on [0, T]. If

where \(0\leqslant \alpha <1\), \(\varphi (t)\) is a nonnegative, monotonically increasing continuous function on [0, T], and M is a positive constant, then
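Neither display of this theorem is reproduced in this text. For orientation, the commonly stated form of the weakly singular Gronwall inequality is sketched below under the theorem's assumptions on \(u\), \(\varphi\), M and \(\alpha\). This is a hedged reconstruction from the standard literature, and the constants may be arranged differently in the authors' statement. The first line is the hypothesis and the second is the conclusion.

```latex
u(t) \leqslant \varphi(t) + M \int_0^t (t-s)^{-\alpha} u(s)\, \mathrm{d}s, \quad t \in [0, T],
\\
u(t) \leqslant \varphi(t)\, E_{1-\alpha}\!\left( M\, \Gamma(1-\alpha)\, t^{1-\alpha} \right), \quad t \in [0, T],
```

For \(\alpha =0\) this reduces to the classical bound \(u(t)\leqslant \varphi (t)e^{Mt}\).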

where \(E_{1-\alpha }(z)\) is the Mittag-Leffler function, defined for \(0\leqslant \alpha <1\).
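The one-parameter Mittag-Leffler function has the series representation \(E_{\beta }(z)=\sum _{k=0}^{\infty } z^k/\Gamma (\beta k+1)\) for \(\beta >0\). The sketch below evaluates a truncated version of this series in Python. The function name `mittag_leffler` and the truncation rule are assumptions made for illustration, and the plain series is only reliable for moderate \(|z|\).

```python
import math


def mittag_leffler(beta, z, max_terms=400):
    """Truncated series for E_beta(z) = sum_k z**k / Gamma(beta*k + 1).

    Hypothetical helper for real z of moderate size and beta > 0.
    """
    total = 0.0
    for k in range(max_terms):
        arg = beta * k + 1.0
        if arg > 170.0:  # math.gamma overflows for arguments above about 171
            break
        term = z ** k / math.gamma(arg)
        total += term
        # Stop once the terms no longer change the sum at double precision.
        if k > 0 and abs(term) < 1e-16 * max(1.0, abs(total)):
            break
    return total


if __name__ == "__main__":
    # For beta = 1 the series is the exponential series.
    print(mittag_leffler(1.0, 1.0), math.e)
    # A fractional order, as in E_{1-alpha} with alpha = 0.5.
    print(mittag_leffler(0.5, 1.0))
```

Two special cases give simple sanity checks: \(E_1(z)=e^z\) and \(E_2(z)=\cosh \sqrt{z}\) for \(z\geqslant 0\).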

Theorem 10

[54] Let \(u(t)\in PC(J,{\mathbb {R}})\) satisfy the following inequality:

where \(0< q <1\), \(c_1\) is a nonnegative monotonically increasing continuous function on [0, T], and \(c_2\) and \(\theta _k\) \((0<t_k<t)\) are positive constants.

where

Proofs of selected theorems and lemmas

Proof of Lemma 1

“Necessity”. Let \(y\in PC_m[0,T]\) be the solution of equation (18). By Lemma 10, when \(t\in [0,t_1]\), we have

By \(y(0)=y^0\), we have \(c_1=y^0\); thus,

When \(t\in (t_1,t_2]\), we have

By Lemma 10, when \(t\in (t_1,t_2]\), we have

By

we have

Thus, for \(t\in (t_1,t_2]\), we have

By analogy, for \(t\in (t_k,t_{k+1}]\), we have

Therefore,

“Sufficiency”. Assume y satisfies equation (19). By Lemma 11, \(y\in PC_m[0,T]\) is a solution of equation (18). The proof is complete. \(\square\)
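Lemma 1 rests on rewriting a fractional differential equation as an equivalent integral equation. As an illustration only, the Python sketch below treats the impulse-free Caputo problem \({}_{0}^{C}D_t^{\alpha }y(t)=f(t,y(t))\), \(y(0)=y^0\), \(0<\alpha \leqslant 1\), through its Volterra form \(y(t)=y^0+\frac{1}{\Gamma (\alpha )}\int _0^t (t-s)^{\alpha -1}f(s,y(s))\,\mathrm{d}s\), discretized with an explicit product-rectangle rule. The function name `fractional_euler`, the omission of impulses, and the test right-hand sides are all assumptions; this is not the numerical method of the paper.

```python
import math


def fractional_euler(f, y0, alpha, T, n_steps):
    """Explicit product-rectangle rule for the Caputo problem D^alpha y = f(t, y).

    Hypothetical sketch for 0 < alpha <= 1 without impulses. Every step sums
    over the whole history, which is how the memory effect enters.
    """
    h = T / n_steps
    coef = h ** alpha / math.gamma(alpha + 1.0)
    t = [i * h for i in range(n_steps + 1)]
    y = [y0]
    f_hist = []
    for n in range(n_steps):
        f_hist.append(f(t[n], y[n]))
        acc = 0.0
        for j in range(n + 1):
            # Exact integral of the kernel (t_{n+1} - s)^(alpha - 1) over
            # [t_j, t_{j+1}], up to the common factor coef.
            weight = (n + 1 - j) ** alpha - (n - j) ** alpha
            acc += weight * f_hist[j]
        y.append(y0 + coef * acc)
    return t, y


if __name__ == "__main__":
    # Linear decay with memory: a smaller alpha means a stronger memory effect.
    for alpha in (0.6, 0.8, 1.0):
        _, y = fractional_euler(lambda t, y: -y, 1.0, alpha, 1.0, 200)
        print(alpha, y[-1])
```

For \(\alpha =1\) all weights equal 1 and the scheme reduces to the classical explicit Euler method. For a constant right-hand side the quadrature is exact, which gives a simple check against \(y(t)=y^0+t^{\alpha }/\Gamma (\alpha +1)\).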

Proof of Lemma 2

For convenience, let \(a_0=0\). For all \(u\in U\) and \(f \in Y_K\), by Lemma 1, \(y\in PC_m[0,T]\) is a solution of equation (20) if and only if \(y\in PC_m[0,T]\) is a solution of the following integral equation:

For a given \(u\in U\), \(f \in Y_K\), consider the following operator:

Combined with condition \((F_K)\), we have \(T:PC_m[0,T]\rightarrow PC_m[0,T]\).

Clearly, \(y\in PC_m[0,T]\) is a solution of (20) if and only if \(y\in PC_m[0,T]\) is a fixed point of the operator T on \(PC_m[0,T]\).

Next, we use the Banach fixed point theorem (Theorem 8) to prove that the operator T has a unique fixed point in the Banach space \(PC_m[0,T]\).

First, since condition \((H_{\phi })\) holds, the following equivalent norm can be defined in the Banach space \(PC_m[0,T]\):

where \(\chi _K>0\) and

In fact,

It can be seen that \(\Vert \cdot \Vert _1\) and \(\Vert \cdot \Vert\) are equivalent norms. In what follows, we work with the norm \(\Vert \cdot \Vert _1\).

For a given positive constant \(K>0\), we know that there is a constant \(D_K>0\) such that \(\forall (t,y_1,u), (t,y_2,u)\in I_K\), we have

where

and

For arbitrary \(\vartheta _1, \vartheta _2\in PC_m[0,T]\), let \(\vartheta _1\ne \vartheta _2\), and let \(\Vert \vartheta _1-\vartheta _2\Vert _*=\xi _0>0\); by the definition of \(\Vert \cdot \Vert _*\), \(\forall t\in [0,T]\), we have

and thus, \(\forall t\in [0,T]\),

When \(t\in [0,t_1]\), we have

and thus, for \(t\in [0,t_1]\),

Next, consider that for \(t\in (t_k,t_{k+1}]\), \(\forall y\in PC_m[0,T]\), we have

and we have

In summary, we have

Because we have

T is a contraction mapping on \(PC_m[0,T]\). By the contraction mapping principle (Theorem 8), the operator T has a unique fixed point in \(PC_m[0,T]\). Therefore, equation (20) has a unique solution in \(PC_m[0,T]\). The proof is complete. \(\square\)

Proof of Theorem 4

By the definition of the performance index function J, we have

and

where

and

From \(f^j\rightarrow f^*(j\rightarrow +\infty )\) and \(u^j\rightarrow u^*(j\rightarrow +\infty )\), by Lemma 4, we have \(y^j\rightarrow y^*(j\rightarrow +\infty )\).

The functions k and h satisfy the conditions \((H_k)\) and \((H_h)\), respectively. Both k and h are continuous functions, and we have

and

Therefore,

Therefore, we have

The proof is complete. \(\square\)

Cite this article

Gong, Y., Zha, M. & Lv, Z. Fractional-order optimal control model for the equipment management optimization problem with preventive maintenance. Neural Comput & Applic 34, 4693–4714 (2022). https://doi.org/10.1007/s00521-021-06624-0

DOI: https://doi.org/10.1007/s00521-021-06624-0