Abstract

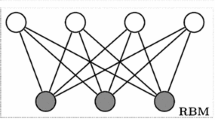

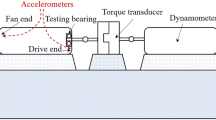

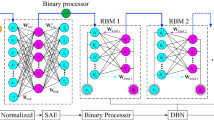

Vibration signals are widely used for fault diagnosis of rotating machinery in real-world settings. However, extracting effective fault features from noisy signals and constructing an accurate diagnosis model remain challenging. In this paper, we propose a novel Gaussian–Bernoulli deep belief network (GDBN) model for intelligent fault diagnosis, in which improved graph regularization and sparse feature learning are embedded in the GDBN. In particular, the improved graph regularization is applied to the hidden-layer representations of both the original and the reconstructed data. As a result, the model not only transforms the original data into features with improved separability but also generates discriminative features from vibration signals. Unsupervised pre-training followed by supervised fine-tuning is used in the proposed model to improve its classification capability. The effectiveness and superiority of the proposed model are validated on gearbox and bearing case studies. The results show that the model learns effective discriminative features and that the extracted features are more separable. Furthermore, the proposed model achieves higher diagnosis accuracy than the other models compared.

References

Yu JB (2019) Evolutionary manifold regularized stacked denoising autoencoders for gearbox fault diagnosis. Knowl Based Syst 178:111–122

Jin X, Zhao M, Chow TWS, Pecht M (2014) Motor bearing fault diagnosis using trace ratio linear discriminant analysis. IEEE Trans Ind Electron 61(5):2441–2451

Yu J, Liu G (2020) Knowledge-based deep belief network for machining roughness prediction and knowledge discovery. Comput Ind 121:103262

Zuev A, Zhirabok A, Filaretov V (2020) Fault identification in underwater vehicle thrusters via sliding mode observers. Int J Appl Math Comput Sci 30(4):679–688

Shao S, McAleer S, Yan R, Baldi P (2019) Highly accurate machine fault diagnosis using deep transfer learning. IEEE Trans Ind Inf 15(4):2446–2455

Cui L, Yao T, Zhang Y, Gong X, Kang C (2017) Application of pattern recognition in gear faults based on the matching pursuit of a characteristic waveform. Measurement 104:212–222

Precup RE, Angelov P, Costa BSJ, Sayed-Mouchaweh M (2015) An overview on fault diagnosis and nature-inspired optimal control of industrial process applications. Comput Ind 74:75–94

Straka O, Punčochář I (2020) Decentralized and distributed active fault diagnosis: multiple model estimation algorithms. Int J Appl Math Comput Sci 30(2):239–249

Schmidhuber J (2015) Deep learning in neural networks: an overview. Neural Netw 61:85–117

Hinton GE (2006) Reducing the dimensionality of data with neural networks. Science 313(5786):504–507

Shin HC, Orton R, Collins DJ, Doran SJ, Leach MO (2013) Stacked autoencoders for unsupervised feature learning and multiple organ detection in a pilot study using 4D patient data. IEEE Trans Pattern Anal Mach Intell 35(8):1930–1943

Ince T, Kiranyaz S, Eren L, Askar M, Gabbouj M (2016) Real-time motor fault detection by 1D convolutional neural networks. IEEE Trans Ind Electron 63(11):7067–7075

Lin FJ, Sun IF, Yang KJ, Chang JK (2016) Recurrent fuzzy neural cerebellar model articulation network fault-tolerant control of six-phase permanent magnet synchronous motor position servo drive. IEEE Trans Fuzzy Syst 24(1):153–167

Liu J, Xu Z, Zhou L, Yu W, Shao Y (2019) A statistical feature investigation of the spalling propagation assessment for a ball bearing. Mech Mach Theory 131:336–350

Chen Y, Schmidt S, Heyns PS, Zuo MJ (2021) A time series model-based method for gear tooth crack detection and severity assessment under random speed variation. Mech Syst Signal Process 156:107605

Zhu H, Cheng J, Zhang C, Wu J, Shao X (2020) Stacked pruning sparse denoising autoencoder based intelligent fault diagnosis of rolling bearings. Appl Soft Comput 88:106060

Chen Z, Gryllias K, Li W (2020) Intelligent fault diagnosis for rotary machinery using transferable convolutional neural network. IEEE Trans Ind Inf 16(1):339–349

Cheng Y, Zhu H, Wu J, Shao X (2019) Machine health monitoring using adaptive kernel spectral clustering and deep long short-term memory recurrent neural networks. IEEE Trans Ind Inf 15(2):987–997

Xing S, Lei Y, Wang S, Jia F (2021) Distribution-invariant deep belief network for intelligent fault diagnosis of machines under new working conditions. IEEE Trans Ind Electron 68(3):2617–2625

Che C, Wang H, Ni X, Fu Q (2020) Domain adaptive deep belief network for rolling bearing fault diagnosis. Comput Ind Eng 143:106427

Shi M, Zhang F, Wang S, Zhang C, Li X (2021) Detail preserving image denoising with patch-based structure similarity via sparse representation and SVD. Comput Vis Image Underst 206:103173

Zhang J, Shao M, Yu L, Li Y (2020) Image super-resolution reconstruction based on sparse representation and deep learning. Signal Process Image Commun 87:115925

Shao Y, Sang N, Li Y, Li W, Gao C (2020) Joint image restoration and matching method based on distance-weighted sparse representation prior. Pattern Recogn Lett 135:160–166

An F, Li S, Ma X, Bai L (2021) Image fusion algorithm based on unsupervised deep learning-optimized sparse representation. Biomed Signal Process Control 71:103140

Ding X, He Q (2016) Time-frequency manifold sparse reconstruction: a novel method for bearing fault feature extraction. Mech Syst Signal Process 80:392–413

Xiao Z, Wang H, Zhou J (2016) Robust dynamic process monitoring based on sparse representation preserving embedding. J Process Control 40:119–133

Lee H, Ekanadham C, Ng AY (2007) Sparse deep belief net model for visual area V2. In: Conference on advances in neural information processing systems, pp 873–880

Ji N, Zhang J, Zhang C, Yin Q (2014) Enhancing performance of restricted Boltzmann machines via log-sum regularization. Knowl Based Syst 63:82–96

Sankaran A, Goswami G, Vatsa M, Singh R, Majumdar A (2017) Class sparsity signature based restricted Boltzmann machine. Pattern Recogn 61:674–685

Rozin B, Pereira-Ferrero VH, Lopes LT, Guimarães Pedronette DC (2021) A rank-based framework through manifold learning for improved clustering tasks. Inform Sci 580:202–220

Li Q, Ding X, Huang W, He Q, Shao Y (2019) Transient feature self-enhancement via shift-invariant manifold sparse learning for rolling bearing health diagnosis. Measurement 148:106957

Chen D, Lv J, Yi Z (2018) Graph regularized restricted Boltzmann machine. IEEE Trans Neural Netw Learn Syst 29(6):2651–2659

Yang J, Bao W, Liu Y, Li X, Wang J, Niu Y (2021) A pairwise graph regularized constraint based on deep belief network for fault diagnosis. Digit Signal Process 108:102868

Choo S, Lee H (2017) Learning framework of multimodal Gaussian–Bernoulli RBM handling real-value input data. Neurocomputing 275:1813–1822

Yang J, Bao W, Liu Y, Li X, Wang J, Niu Y, Li J (2021) Joint pairwise graph embedded sparse deep belief network for fault diagnosis. Eng Appl Artif Intell 99:104149

Hinton GE, Osindero S, Teh YW (2006) A fast learning algorithm for deep belief nets. Neural Comput 18(7):1527–1554

Zhu X (2006) Semi-supervised learning literature survey. Neural Comput 2(3):4

Yan S, Xu D, Zhang B, Zhang HJ, Yang Q, Lin S (2007) Graph embedding and extensions: a general framework for dimensionality reduction. IEEE Trans Pattern Anal Mach Intell 29(1):40–51

Yuan Y, Mou L, Lu X (2015) Scene recognition by manifold regularized deep learning architecture. IEEE Trans Neural Netw Learn Syst 26(10):2222–2233

Cotter SF, Rao BD, Engan K, Kreutz-Delgado K (2005) Sparse solutions to linear inverse problems with multiple measurement vectors. IEEE Trans Signal Process 53(7):2477–2488

Zhang S, Tang J (2018) Integrating angle-frequency domain synchronous averaging technique with feature extraction for gear fault diagnosis. Mech Syst Signal Process 99:711–729

Case Western Reserve University (2015) Bearing Data Center website. https://csegroups.case.edu/bearingdatacenter/pages/welcome-case-western-reserve-university-bearing-data-center-website

Funding

This work was supported in part by the National Natural Science Foundation of China, under Grant No. 61627901.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

My Appendix

From the energy function defined by Eq. (8), the joint probability distribution of the states \(({\mathbf {v}},{\mathbf {h}})\) is [10]:

\[p({\mathbf {v}},{\mathbf {h}})=\frac{1}{Z}e^{-E({\mathbf {v}},{\mathbf {h}})},\]

where \(Z=\sum \limits _{{\mathbf {v}}}\sum \limits _{{\mathbf {h}}}e^{-E({\mathbf {v}},{\mathbf {h}})}\) is the partition function.
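The normalization by \(Z\) can be illustrated numerically with a minimal sketch. Eq. (8) is not reproduced in this section, so the code below assumes a standard Bernoulli–Bernoulli RBM energy as a stand-in, with hypothetical toy parameters `W`, `a`, and `b`. Exhaustive enumeration is only feasible for a few discrete units; it is shown here to make the definition concrete, not as the method used in the paper.

```python
import itertools
import math

# Hypothetical toy parameters (not from the paper): 2 visible, 2 hidden binary units.
W = [[0.5, -0.3],
     [0.2, 0.8]]   # W[i][j] couples visible unit i with hidden unit j
a = [0.1, -0.2]    # visible biases
b = [0.0, 0.3]     # hidden biases


def energy(v, h):
    """Standard Bernoulli-Bernoulli RBM energy, assumed as a stand-in for Eq. (8)."""
    visible_term = sum(a[i] * v[i] for i in range(len(v)))
    hidden_term = sum(b[j] * h[j] for j in range(len(h)))
    coupling = sum(v[i] * W[i][j] * h[j]
                   for i in range(len(v)) for j in range(len(h)))
    return -(visible_term + hidden_term + coupling)


def joint_distribution():
    """Return p(v, h) = exp(-E(v, h)) / Z for every state, together with Z."""
    states = [(v, h)
              for v in itertools.product((0, 1), repeat=len(a))
              for h in itertools.product((0, 1), repeat=len(b))]
    weights = {s: math.exp(-energy(*s)) for s in states}
    Z = sum(weights.values())  # partition function: sum over all (v, h)
    return {s: w / Z for s, w in weights.items()}, Z


p, Z = joint_distribution()
print(f"Z = {Z:.4f}, total probability = {sum(p.values()):.6f}")
```

Because every state is divided by the same \(Z\), the probabilities sum to one by construction, and lower-energy states receive higher probability.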

When calculating the features of the original data, the features of the reconstruction-layer data can be regarded as constants. Let \({\mathbf {h}}_{-m}^{k}=\left( h_1^k,h_2^k,\cdots ,h_{m-1}^k,h_{m+1}^k,\cdots ,h_{n_h}^k\right)\) denote the vector obtained from \({\mathbf {h}}^{k}\) by removing its \(m\)-th component.

Considering the energy function of Eq. (14), we derive \(p(v_{m}^{k}=v\mid {\mathbf {h}}^{k})\):

Therefore,

We then derive \(p(h_{m}^{k}=1\mid {\mathbf {v}}^{k})\):

The derivation for the reconstructed data is analogous to that for the original data.
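For orientation, the hidden-unit conditional can be related to a generic identity that holds for any energy function with a binary unit \(h_m^k\) when all other quantities are held fixed. This is a sketch of that general step only; it is not the closed form specific to Eq. (14), which is not reproduced in this section. The symbol \(\sigma\) for the logistic function is introduced here for illustration.

```latex
\[
p\left(h_m^k = 1 \mid \mathbf{v}^k, \mathbf{h}_{-m}^k\right)
= \frac{e^{-E(\mathbf{v}^k,\, h_m^k = 1,\, \mathbf{h}_{-m}^k)}}
       {e^{-E(\mathbf{v}^k,\, h_m^k = 0,\, \mathbf{h}_{-m}^k)} + e^{-E(\mathbf{v}^k,\, h_m^k = 1,\, \mathbf{h}_{-m}^k)}}
= \sigma\left(E(\mathbf{v}^k,\, h_m^k = 0,\, \mathbf{h}_{-m}^k) - E(\mathbf{v}^k,\, h_m^k = 1,\, \mathbf{h}_{-m}^k)\right),
\qquad
\sigma(x) = \frac{1}{1 + e^{-x}}.
\]
```

When the energy contains no coupling between hidden units, the energy difference does not depend on \(\mathbf{h}_{-m}^k\), and the expression reduces to \(p(h_m^k = 1 \mid \mathbf{v}^k)\).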

Cite this article

Yang, J., Bao, W., Li, X. et al. Improved graph-regularized deep belief network with sparse features learning for fault diagnosis. Neural Comput & Applic 34, 9885–9899 (2022). https://doi.org/10.1007/s00521-022-06972-5

DOI: https://doi.org/10.1007/s00521-022-06972-5