Abstract

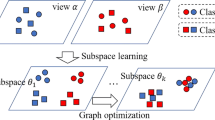

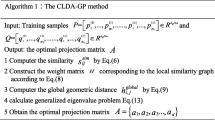

View variation is one of the greatest challenges in gait recognition. Subspace learning approaches address this issue by projecting cross-view features into a common subspace before recognition. However, similarity measures are data-dependent, which leads to low accuracy when cross-view gait samples are randomly arranged. Inspired by recent developments in data-driven similarity learning and multi-nonlinear projection, we propose a new unsupervised projection approach called multi-nonlinear multi-view locality-preserving projections with similarity learning (M2LPP-SL). M2LPP-SL learns the similarity information among cross-view samples adaptively. In addition, it preserves the complex nonlinear structure of the original data through multiple explicit nonlinear projection functions. Nevertheless, its performance depends heavily on the choice of these nonlinear projection functions. Given the ability of the kernel trick to capture nonlinear structure, we further extend M2LPP-SL into kernel space and propose a multiple-kernel version, MKMLPP-SL. As a result, our approaches capture linear and nonlinear structure more precisely and also learn the similarity information hidden in multi-view gait datasets. The proposed models can be solved efficiently by an alternating direction optimization method. Extensive experiments over various view combinations on the multi-view gait database CASIA-B demonstrate the superiority of the proposed algorithms.
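M2LPP-SL builds on locality preserving projections (LPP). For readers unfamiliar with that building block, the following is a minimal single-view, linear LPP sketch in Python with NumPy. It is not the authors' M2LPP-SL or MKMLPP-SL: the similarity graph here is a fixed k-nearest-neighbour heat-kernel graph rather than a learned one, there is only one view, and no nonlinear or kernel mapping is used. The names `heat_kernel_knn_graph` and `lpp`, the neighbour count `k`, the heat-kernel width `t` (defaulting to the median squared distance, a common heuristic assumed here), and the ridge term `reg` are illustrative assumptions. The projection solves the generalized eigenproblem \(X L X^{\text{T}} p = \lambda X D X^{\text{T}} p\) and keeps the eigenvectors with the smallest eigenvalues.

```python
import numpy as np


def heat_kernel_knn_graph(X, k=5, t=None):
    """Symmetric k-NN graph with heat-kernel weights; columns of X are samples."""
    n = X.shape[1]
    sq = np.sum(X ** 2, axis=0)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    if t is None:
        # Assumed heuristic (not from the paper): width = median nonzero squared distance.
        t = np.median(dist2[dist2 > 0])
    masked = dist2.copy()
    np.fill_diagonal(masked, np.inf)            # a point is not its own neighbour
    S = np.zeros((n, n))
    for i in range(n):
        nbrs = np.argsort(masked[i])[:k]
        S[i, nbrs] = np.exp(-dist2[i, nbrs] / t)
    return np.maximum(S, S.T)                   # symmetrize the k-NN relation


def lpp(X, dim, k=5, t=None, reg=1e-6):
    """Linear LPP: solve X L X^T p = lam X D X^T p and keep the `dim` smallest."""
    S = heat_kernel_knn_graph(X, k, t)
    D = np.diag(S.sum(axis=1))
    L = D - S                                   # graph Laplacian
    A = X @ L @ X.T
    B = X @ D @ X.T + reg * np.eye(X.shape[0])  # small ridge keeps B positive definite
    C = np.linalg.cholesky(B)                   # B = C C^T, C lower triangular
    C_inv = np.linalg.inv(C)
    M = C_inv @ A @ C_inv.T                     # equivalent standard symmetric problem
    M = (M + M.T) / 2.0                         # remove round-off asymmetry
    eigvals, V = np.linalg.eigh(M)              # eigenvalues in ascending order
    P = C_inv.T @ V[:, :dim]                    # map back: p = C^{-T} v
    return P, eigvals[:dim]


# Usage: 50 random 10-dimensional samples projected to 3 dimensions.
rng = np.random.default_rng(0)
X = rng.normal(size=(10, 50))
P, eigvals = lpp(X, dim=3)
Y = P.T @ X                                     # 3 x 50 embedding
```

The generalized eigenproblem is reduced to a standard symmetric one through a Cholesky factorization of \(X D X^{\text{T}}\), so only NumPy is needed; `scipy.linalg.eigh(A, B)` would be an equivalent choice.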

Acknowledgements

This research was supported by the National Natural Science Foundation of China (Grant nos. 71273053 and 11571074) and the Natural Science Foundation of Fujian Province (Grant no. 2018J01666).

Additional information

Communicated by B. Prabhakaran.

Appendix

Derivation of the transformation of the M2LPP-SL objective function into problem (12) when \(S^{\text{XY}}\) has been updated and is held fixed:

where \(S^{\text{X}} = [s_{ij}^{\text{X}}]_{n \times n}\) is the similarity matrix within the X-view, with degree matrix \(D^{\text{X}} = \text{diag}(d_{11}^{\text{X}}, d_{22}^{\text{X}}, \ldots, d_{nn}^{\text{X}})\) and \(d_{ii}^{\text{X}} = \sum\nolimits_{j} s_{ij}^{\text{X}}\); \(S^{\text{Y}} = [s_{ij}^{\text{Y}}]_{m \times m}\) is the similarity matrix within the Y-view, with degree matrix \(D^{\text{Y}} = \text{diag}(d_{11}^{\text{Y}}, d_{22}^{\text{Y}}, \ldots, d_{mm}^{\text{Y}})\) and \(d_{ii}^{\text{Y}} = \sum\nolimits_{j} s_{ij}^{\text{Y}}\); and \(S^{\text{XY}} = [s_{ij}^{\text{XY}}]_{n \times m}\) is the cross-view similarity matrix. \(D_{\text{r}}^{\text{XY}}\) (\(n \times n\)) and \(D_{\text{c}}^{\text{XY}}\) (\(m \times m\)) are diagonal matrices whose entries are the row sums and the column sums of \(S^{\text{XY}}\), respectively.
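To see why the row-sum and column-sum matrices of \(S^{\text{XY}}\) appear, write \(u_i\) and \(v_j\) for the projected X-view and Y-view samples (for example, the columns of \(U = P_{\text{X}}^{\text{T}}\varPhi(X)\) and \(V = P_{\text{Y}}^{\text{T}}\varPhi(Y)\)), and \(D_{\text{r}}^{\text{XY}}\), \(D_{\text{c}}^{\text{XY}}\) for the diagonal matrices of row and column sums of \(S^{\text{XY}}\). Expanding the squared norm gives \(\sum_{i,j} s_{ij}^{\text{XY}} \|u_i - v_j\|^2 = \text{tr}(U D_{\text{r}}^{\text{XY}} U^{\text{T}}) + \text{tr}(V D_{\text{c}}^{\text{XY}} V^{\text{T}}) - 2\,\text{tr}(U S^{\text{XY}} V^{\text{T}})\). Assuming the cross-view term of the objective has this weighted-distance form (the objective itself is not reproduced here), the short NumPy check below confirms the identity on random data; the function names and the random stand-ins for \(U\), \(V\) and \(S^{\text{XY}}\) are hypothetical.

```python
import numpy as np


def cross_view_term(U, V, Sxy):
    """Direct sum over i, j of s_ij^XY * ||u_i - v_j||^2 (columns of U, V are samples)."""
    diff = U[:, :, None] - V[:, None, :]        # k x n x m pairwise differences
    return float(np.sum(Sxy * np.sum(diff ** 2, axis=0)))


def cross_view_term_trace(U, V, Sxy):
    """Same quantity written with the row/column-sum degree matrices of S^XY."""
    Dr = np.diag(Sxy.sum(axis=1))               # n x n, row sums
    Dc = np.diag(Sxy.sum(axis=0))               # m x m, column sums
    return float(np.trace(U @ Dr @ U.T) + np.trace(V @ Dc @ V.T)
                 - 2.0 * np.trace(U @ Sxy @ V.T))


rng = np.random.default_rng(0)
k, n, m = 3, 6, 4
U = rng.normal(size=(k, n))                     # stand-in for projected X-view samples
V = rng.normal(size=(k, m))                     # stand-in for projected Y-view samples
Sxy = rng.random((n, m))                        # nonnegative cross-view similarities
print(np.isclose(cross_view_term(U, V, Sxy), cross_view_term_trace(U, V, Sxy)))  # True
```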

Let \(P = \begin{bmatrix} P_{\text{X}} \\ P_{\text{Y}} \end{bmatrix}\), \(\varPhi(Z) = \varPhi(X) \oplus \varPhi(Y) = \begin{bmatrix} \varPhi(X) & 0 \\ 0 & \varPhi(Y) \end{bmatrix}\), \(S = \begin{bmatrix} S^{\text{X}} & S^{\text{XY}} \\ (S^{\text{XY}})^{\text{T}} & S^{\text{Y}} \end{bmatrix}\), \(D = \text{diag}(d_{11}, d_{22}, \ldots, d_{(m+n)(m+n)})\) with \(d_{ii} = \sum\nolimits_{j} S_{ij}\), and \(L = D - S\). Because each \(d_{ii}\) sums an entire row of \(S\), the upper-left \(n \times n\) block of \(D\) equals \(D^{\text{X}}\) plus the diagonal matrix of row sums of \(S^{\text{XY}}\), and the lower-right \(m \times m\) block equals \(D^{\text{Y}}\) plus the diagonal matrix of column sums of \(S^{\text{XY}}\). Then Eq. (20) can be transformed into:
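As an illustration of how the within-view and cross-view terms can merge into a single trace (not a reconstruction of the missing equation itself): with the stacked \(P\), block-diagonal \(\varPhi(Z)\) and joint \(S\) defined above, \(W = P^{\text{T}}\varPhi(Z) = [P_{\text{X}}^{\text{T}}\varPhi(X),\ P_{\text{Y}}^{\text{T}}\varPhi(Y)]\) collects the projected samples of both views, and the standard graph-Laplacian identity \(\sum_{a,b} S_{ab}\|w_a - w_b\|^2 = 2\,\text{tr}(P^{\text{T}}\varPhi(Z) L \varPhi(Z)^{\text{T}} P)\) holds whenever \(S\) is symmetric, which is assumed here for \(S^{\text{X}}\) and \(S^{\text{Y}}\). Summing over the full \(S\) counts each cross-view pair twice; whether the paper weights its cross-view term the same way is an assumption. The sketch below uses random explicit matrices `FX`, `FY` as stand-ins for \(\varPhi(X)\), \(\varPhi(Y)\), and the helper names are hypothetical.

```python
import numpy as np


def joint_laplacian(Sx, Sy, Sxy):
    """Assemble S = [[S^X, S^XY], [(S^XY)^T, S^Y]], D = diag(row sums of S), L = D - S."""
    S = np.block([[Sx, Sxy], [Sxy.T, Sy]])
    D = np.diag(S.sum(axis=1))
    return S, D, D - S


def sym_nonneg(rng, size):
    """Random symmetric nonnegative matrix (hypothetical within-view similarities)."""
    A = rng.random((size, size))
    return (A + A.T) / 2.0


rng = np.random.default_rng(1)
dx, dy, n, m, k = 5, 4, 6, 3, 2
FX = rng.normal(size=(dx, n))                   # stand-in for Phi(X)
FY = rng.normal(size=(dy, m))                   # stand-in for Phi(Y)
PX = rng.normal(size=(dx, k))
PY = rng.normal(size=(dy, k))
Sx, Sy, Sxy = sym_nonneg(rng, n), sym_nonneg(rng, m), rng.random((n, m))

S, D, L = joint_laplacian(Sx, Sy, Sxy)
P = np.vstack([PX, PY])                         # stacked projection, (dx + dy) x k
PhiZ = np.block([[FX, np.zeros((dx, m))],
                 [np.zeros((dy, n)), FY]])      # block-diagonal Phi(Z)
W = P.T @ PhiZ                                  # equals [PX^T FX, PY^T FY]

# Weighted sum of squared distances over all (n + m)^2 ordered pairs.
pairwise = sum(S[a, b] * np.sum((W[:, a] - W[:, b]) ** 2)
               for a in range(n + m) for b in range(n + m))
print(np.isclose(pairwise, 2.0 * np.trace(W @ L @ W.T)))  # True
```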

About this article

Cite this article

Chen, X., Kang, Y. & Chen, Z. Multi-nonlinear multi-view locality-preserving projection with similarity learning for random cross-view gait recognition. Multimedia Systems 26, 727–744 (2020). https://doi.org/10.1007/s00530-020-00685-2

DOI: https://doi.org/10.1007/s00530-020-00685-2