Abstract

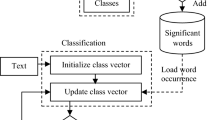

It is well known that the classification effectiveness of a text categorization system is not simply a matter of the learning algorithm; text representation factors are also at work. This paper examines how the effectiveness of text classifiers is linked to five text representation factors: “stop words removal”, “word stemming”, “indexing”, “weighting”, and “normalization”. Statistical analyses of the experimental results show that performing “normalization” always improves the effectiveness of text classifiers significantly, whereas the effects of the other factors are smaller than expected. Contrary to common belief, a simple binary indexing method can sometimes be helpful for text categorization.
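To make the five factors concrete, the following is a minimal Python sketch of a bag-of-words pipeline in which each factor can be switched on or off independently. It is not the authors' implementation: the tiny stop list, the crude suffix-stripping stemmer (a toy stand-in for a real stemmer such as Porter's algorithm), the log(N/df) idf formula, and the function names `tokenize`, `crude_stem` and `represent` are all illustrative assumptions.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny stop list; real lists hold a few hundred words.
STOP_WORDS = {"a", "an", "and", "the", "of", "in", "is", "to"}


def tokenize(text):
    """Lower-case the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def crude_stem(word):
    """Strip a few common English suffixes.

    A toy stand-in for a real stemmer such as Porter's algorithm,
    which applies many more rules and conditions.
    """
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def represent(docs, remove_stop=True, stem=True, indexing="tf",
              idf_weighting=True, normalize=True):
    """Map each document to a sparse {term: weight} vector."""
    if indexing not in ("binary", "tf"):
        raise ValueError("indexing must be 'binary' or 'tf'")
    bags = []
    for doc in docs:
        tokens = tokenize(doc)
        if remove_stop:                      # factor 1: stop words removal
            tokens = [t for t in tokens if t not in STOP_WORDS]
        if stem:                             # factor 2: word stemming
            tokens = [crude_stem(t) for t in tokens]
        counts = Counter(tokens)             # factor 3: indexing (term frequency)
        if indexing == "binary":             # ...or presence/absence only
            counts = {t: 1 for t in counts}
        bags.append(dict(counts))

    n_docs = len(docs)
    df = Counter(t for bag in bags for t in bag)   # document frequency per term
    vectors = []
    for bag in bags:
        if idf_weighting:                    # factor 4: weighting (tf-idf style)
            vec = {t: w * math.log(n_docs / df[t]) for t, w in bag.items()}
        else:
            vec = {t: float(w) for t, w in bag.items()}
        if normalize:                        # factor 5: cosine (L2) normalization
            norm = math.sqrt(sum(w * w for w in vec.values()))
            if norm > 0:
                vec = {t: w / norm for t, w in vec.items()}
        vectors.append(vec)
    return vectors


if __name__ == "__main__":
    docs = ["The markets rallied and stocks rose",
            "Stocks fell as markets slipped",
            "The team scored in the final minutes"]
    for vec in represent(docs):
        print(sorted(vec.items()))
```

Calling `represent(docs, indexing="binary")` instead of `represent(docs, indexing="tf")` switches between the binary and term-frequency indexing variants; with `normalize=True`, every non-zero vector has unit Euclidean length, so long and short documents become directly comparable.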

Notes

In some of the text categorization literature, Mutual Information is referred to as Information Gain.
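The note above can be checked numerically. In the sense used in many feature-selection papers, the information gain of a term t for a category c is H(C) - H(C | T), which is mathematically identical to the expected mutual information I(T; C) between the term-occurrence indicator and the category indicator; this is why the two names are used interchangeably. The Python sketch below assumes a single binary category and a 2x2 table of document counts (the argument names `n11`, `n10`, `n01`, `n00` are our own notation) and computes the score both ways. Some papers instead use "mutual information" for the pointwise score log P(t, c) / (P(t) P(c)), which is a different quantity.

```python
import math


def entropy(probs):
    """Shannon entropy (in bits) of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_gain(n11, n10, n01, n00):
    """IG of term t for category c, computed as H(C) - H(C | T).

    n11: docs containing t and in c      n10: docs containing t, not in c
    n01: docs without t but in c         n00: docs without t, not in c
    """
    n = n11 + n10 + n01 + n00
    h_c = entropy([(n11 + n01) / n, (n10 + n00) / n])
    h_c_given_t = 0.0
    for in_c, not_c in ((n11, n10), (n01, n00)):   # term present, term absent
        n_t = in_c + not_c
        if n_t:
            h_c_given_t += (n_t / n) * entropy([in_c / n_t, not_c / n_t])
    return h_c - h_c_given_t


def mutual_information(n11, n10, n01, n00):
    """Expected mutual information I(T; C) summed over the 2x2 joint table."""
    n = n11 + n10 + n01 + n00
    row = {1: n11 + n10, 0: n01 + n00}   # term present / absent
    col = {1: n11 + n01, 0: n10 + n00}   # in category / not in category
    cells = {(1, 1): n11, (1, 0): n10, (0, 1): n01, (0, 0): n00}
    total = 0.0
    for (t, c), count in cells.items():
        if count:
            p_tc = count / n
            total += p_tc * math.log2(p_tc / ((row[t] / n) * (col[c] / n)))
    return total


if __name__ == "__main__":
    table = (40, 10, 20, 930)
    print(information_gain(*table), mutual_information(*table))
```

Both functions print the same value up to floating-point rounding, and both return zero when term occurrence and category membership are independent.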

Acknowledgements

The authors would like to thank the anonymous reviewers for their valuable suggestions.

Appendix

Originality and contribution

It is well known that the effectiveness of a text categorization system is not simply a matter of the learning algorithm; text representation factors are also at work. Many text representations other than the bag of words, such as statistical phrase-based and n-gram-based representations, have been examined previously without much success. To the authors' knowledge, however, the variants of the bag of words and their effectiveness have not been studied systematically. Several questions therefore remain unanswered:

- Among the possible variants of the bag-of-words scheme, which are likely to be the best?
- Among the factors that may affect a text representation, which are the most important and should be treated with care?
- Is “stop words removal” an indispensable step in representing a text document?
- Does indexing a text document with term frequencies always outperform indexing it with binary values?
- Is “word stemming” harmful or beneficial for text categorization?
- What is the best way to represent a text document?
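One natural way to set up a study that answers questions like these is a full factorial design, in which every combination of factor settings is built and evaluated. The Python sketch below only enumerates such a grid; the two options listed for each factor (and the function name `representation_schemes`) are hypothetical, and the paper's exact settings may differ.

```python
from itertools import product

# Hypothetical option values for each factor; the paper's settings may differ.
FACTORS = {
    "stop_words_removal": (False, True),
    "word_stemming": (False, True),
    "indexing": ("binary", "tf"),
    "weighting": ("none", "idf"),
    "normalization": ("none", "cosine"),
}


def representation_schemes(factors=FACTORS):
    """Yield one dict per combination of factor settings (a full factorial grid)."""
    names = list(factors)
    for values in product(*(factors[name] for name in names)):
        yield dict(zip(names, values))


if __name__ == "__main__":
    schemes = list(representation_schemes())
    print(len(schemes))       # 2**5 = 32 combinations
    print(schemes[0])         # the scheme with every factor at its first option
```

Each yielded dictionary describes one bag-of-words variant, so the same classifier can be trained and scored once per scheme, and the differences between schemes attributed to individual factors.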

Through extensive experiments on two benchmark datasets, Reuters-21578 and 20 Newsgroups, and thorough statistical analyses of the results, this paper answers all of the above questions with some confidence.
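The appendix does not state which statistical tests were applied. Purely as an illustration of the kind of analysis involved, the Python sketch below runs a two-sided exact sign test on paired scores, for example the per-category F1 of one representation scheme against another. The choice of test and the function name `sign_test` are assumptions, not the paper's procedure; `math.comb` requires Python 3.8 or later.

```python
from math import comb


def sign_test(scores_a, scores_b):
    """Two-sided exact sign test on paired scores (e.g. per-category F1).

    Ties are discarded. Returns (wins_a, n_untied, p_value).
    """
    wins_a = sum(a > b for a, b in zip(scores_a, scores_b))
    wins_b = sum(b > a for a, b in zip(scores_a, scores_b))
    n = wins_a + wins_b
    if n == 0:                      # all pairs tied: no evidence either way
        return wins_a, 0, 1.0
    k = min(wins_a, wins_b)
    # P(X <= k) under Binomial(n, 0.5), doubled for a two-sided test.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return wins_a, n, min(1.0, 2 * tail)


if __name__ == "__main__":
    scheme_a = [0.9] * 9 + [0.1]    # hypothetical per-category scores
    scheme_b = [0.5] * 10
    print(sign_test(scheme_a, scheme_b))   # (9, 10, 0.021484375)
```

A sign test uses only the direction of each paired difference, so it makes no assumption about how the score differences are distributed; the price is lower power than tests that also use the size of the differences.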

The main contribution of this paper is that it clarifies several unclear notions about text representation, so that text can be represented more effectively and efficiently.

Cite this article

Song, F., Liu, S. & Yang, J. A comparative study on text representation schemes in text categorization. Pattern Anal Applic 8, 199–209 (2005). https://doi.org/10.1007/s10044-005-0256-3

DOI: https://doi.org/10.1007/s10044-005-0256-3