Abstract

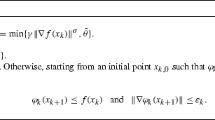

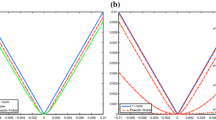

We study a Newton-like method for the minimization of an objective function \(\phi \) that is the sum of a smooth function and an \(\ell _1\) regularization term. This method, which is sometimes referred to in the literature as a proximal Newton method, computes a step by minimizing a piecewise quadratic model \(q_k\) of the objective function \(\phi \). In order to make this approach efficient in practice, it is imperative to perform this inner minimization inexactly. In this paper, we give inexactness conditions that guarantee global convergence and that can be used to control the local rate of convergence of the iteration. Our inexactness conditions are based on a semi-smooth function that represents a (continuous) measure of the optimality conditions of the problem, and that embodies the soft-thresholding iteration. We give careful consideration to the algorithm employed for the inner minimization, and report numerical results on two test sets originating in machine learning.
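As a rough illustration of the ingredients named in the abstract, the sketch below writes the objective as \(\phi (x) = f(x) + \mu \Vert x\Vert _1\) and uses the soft-thresholding (ISTA) fixed-point residual as a continuous optimality measure. The function names, the step length `tau`, the forcing parameter `eta`, and the exact form of the stopping test are assumptions made for this example, chosen by analogy with classical inexact Newton conditions; they are not taken from the paper. The inner solver here is plain ISTA applied to the model \(q_k\), which is only one possible choice.

```python
import numpy as np

def soft_threshold(z, t):
    """Componentwise soft-thresholding: sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def optimality_residual(x, grad, mu, tau=1.0):
    """Assumed optimality measure for f(x) + mu * ||x||_1.

    It vanishes exactly when x is a fixed point of the
    soft-thresholding step with step length tau.
    """
    return (x - soft_threshold(x - tau * grad, tau * mu)) / tau

def inexact_model_step(x, grad, H, mu, eta=0.1, max_inner=500):
    """Approximately minimize the piecewise quadratic model
        q(y) = grad^T (y - x) + 0.5 (y - x)^T H (y - x) + mu * ||y||_1
    with ISTA, stopping once the model residual at y is at most
    eta times the residual at x (a hypothetical inexactness test).
    H is assumed symmetric positive semidefinite and nonzero.
    """
    tau = 1.0 / np.linalg.norm(H, 2)  # 1 / largest eigenvalue of H
    target = eta * np.linalg.norm(optimality_residual(x, grad, mu, tau))
    y = x.copy()
    for _ in range(max_inner):
        model_grad = grad + H @ (y - x)  # gradient of the smooth part of q
        if np.linalg.norm(optimality_residual(y, model_grad, mu, tau)) <= target:
            break
        y = soft_threshold(y - tau * model_grad, tau * mu)
    return y

# Toy problem: f(x) = 0.5 * ||x - c||^2, so grad = x - c and H = I.
c = np.array([3.0, -0.5, -2.0])
x0 = np.zeros(3)
step_point = inexact_model_step(x0, x0 - c, np.eye(3), mu=1.0)
```

On this toy problem the model coincides with the objective, so the trial point is the exact minimizer, `soft_threshold(c, 1.0)`. Smaller values of `eta` demand a more accurate inner solve; in an inexact Newton setting the forcing parameter is the natural knob for trading inner work against the local convergence rate.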

Additional information

To Jong-Shi Pang for his important contributions to optimization and his constant support.

Richard H. Byrd was supported by National Science Foundation Grant DMS-1216554 and Department of Energy Grant DE-SC0001774.

Jorge Nocedal was supported by National Science Foundation Grant DMS-0810213, and by ONR Grant N00014-14-1-0313 P00002.

Figen Oztoprak was supported by US Department of Energy Grant DE-FG02-87ER25047 and by Scientific and Technological Research Council of Turkey Grant Number 113M500. Part of this work was completed while the author was at Istanbul Technical University.

About this article

Cite this article

Byrd, R.H., Nocedal, J. & Oztoprak, F. An inexact successive quadratic approximation method for L-1 regularized optimization. Math. Program. 157, 375–396 (2016). https://doi.org/10.1007/s10107-015-0941-y

DOI: https://doi.org/10.1007/s10107-015-0941-y