Abstract

Voice user interfaces (VUIs) have exploded in popularity over the past three years. However, little research has examined the reply methods that VUIs can adopt when communicating with people. In this paper, we designed two studies with 20 participants to explore how reply methods influence user experience when using a VUI in two kinds of scenarios: application scenarios and giving a command. We compared the performance of three reply methods (fact-only, rational, and emotional) at different times and in different scenarios. We also examined whether participants' evaluations of replies, and their preferences among reply methods, differed by gender. A “Wizard of Oz” method was used in the experiments to simulate realistic communication between the participants and the VUI. We divided a reply into three parts (fact + judgment + strategy) and used them to construct the three reply methods. User experience was measured through quantitative scoring on five aspects (affection, confidence, naturalness, social distance, and satisfaction), preference selection, and an interview. The results indicated that participants tended to prefer the reply methods that offered judgments and strategies in our experiment script (rational and emotional), and the emotional style received the highest evaluation. In addition, male participants in our studies tended to rate the VUI's replies higher than female participants did, for all three reply methods, both in application scenarios and when giving a command. These results may inform the design of VUI replies.
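The reply structure described above (fact + judgment + strategy) can be sketched as a small data model. This is a hypothetical illustration, not the authors' experiment script: the names `ReplyMethod`, `ReplyParts`, `PARTS_USED`, and `compose_reply` and the weather-scenario wording are assumptions. The abstract states only that the fact-only method gives the fact while the rational and emotional methods add a judgment and a strategy, so the sketch assumes those two differ only in the wording of the parts.

```python
from dataclasses import dataclass
from enum import Enum


class ReplyMethod(Enum):
    FACT_ONLY = "fact-only"
    RATIONAL = "rational"
    EMOTIONAL = "emotional"


@dataclass(frozen=True)
class ReplyParts:
    """The three parts a reply is divided into; judgment and strategy are optional."""
    fact: str
    judgment: str = ""
    strategy: str = ""


# Which parts each method includes. Assumption drawn from the abstract:
# fact-only gives the fact alone; rational and emotional add judgment + strategy.
PARTS_USED = {
    ReplyMethod.FACT_ONLY: ("fact",),
    ReplyMethod.RATIONAL: ("fact", "judgment", "strategy"),
    ReplyMethod.EMOTIONAL: ("fact", "judgment", "strategy"),
}


def compose_reply(method: ReplyMethod, parts: ReplyParts) -> str:
    """Join, in order, the non-empty parts that the given method includes."""
    segments = [getattr(parts, name) for name in PARTS_USED[method]]
    return " ".join(s for s in segments if s)


# Hypothetical wording for one scenario; only the tone of judgment/strategy differs.
rational = ReplyParts(
    fact="It will rain this afternoon.",
    judgment="Rain is likely during your commute.",
    strategy="Take an umbrella.",
)
emotional = ReplyParts(
    fact="It will rain this afternoon.",
    judgment="Oh no, your walk home might get soggy!",
    strategy="Don't forget your umbrella and stay dry!",
)

if __name__ == "__main__":
    for method, parts in [(ReplyMethod.FACT_ONLY, rational),
                          (ReplyMethod.RATIONAL, rational),
                          (ReplyMethod.EMOTIONAL, emotional)]:
        print(f"{method.value}: {compose_reply(method, parts)}")
```

Keeping the part-to-method mapping in one table makes it easy to vary which parts a condition presents without touching the wording of each part.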

References

Adiga N, Prasanna SRM (2019) Acoustic features modelling for statistical parametric speech synthesis: a review. IETE Technical Review 36(2):130–149. https://doi.org/10.1080/02564602.2018.1432422

Ameen N, Tarhini A, Reppel A, Anand A (2021) Customer experiences in the age of artificial intelligence. Computers in Human Behavior 114. https://doi.org/10.1016/j.chb.2020.106548

Becker C, Kopp S, Wachsmuth I (2007) Why Emotions should be integrated into conversational agents. In: Nishida T (ed) Wiley series in agent technology. Wiley, New York, pp 49–67. https://doi.org/10.1002/9780470512470.ch3

Bentley F, Luvogt C, Silverman M, Wirasinghe R, White B, Lottridge D (2018) Understanding the long-term use of smart speaker assistants. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 2(3):1–24. https://doi.org/10.1145/3264901

Bernhaupt R, Dalvi G, Joshi A, Balkrishan DK, O'Neill J, Winckler M (Eds) (2017) Human-Computer Interaction-INTERACT 2017: 16th IFIP TC 13 International Conference, Mumbai, India, September 25-29, 2017, Proceedings, Part II (Vol. 10514). Springer. https://doi.org/10.1007/978-3-319-67744-6

Bradley MM, Lang PJ (1994) Measuring emotion: the self-assessment manikin and the semantic differential. Journal of behavior therapy and experimental psychiatry 25(1):49–59. https://doi.org/10.1016/0005-7916(94)90063-9

Brooke J (1996) SUS-A quick and dirty usability scale. Usability evaluation in industry 189(194):4–7

Cohen MH, Cohen MH, Giangola JP, Balogh J (2004) Voice user interface design. Addison-Wesley Professional, San Francisco

Coskun-Setirek A, Mardikyan S (2017) Understanding the adoption of voice activated personal assistants. In: Int J E-Services Mobile Appl (IJESMA) 9(3):1–21. https://doi.org/10.4018/IJESMA.2017070101

Dybala P, Ptaszynski M, Rzepka R, Araki K (2009, May) Humoroids: conversational agents that induce positive emotions with humor. In AAMAS'09 Proceedings of The 8th International Conference on Autonomous Agents and Multiagent Systems (Vol. 2, pp. 1171-1172). ACM, Budapest, Hungary

Eyssel F, De Ruiter L, Kuchenbrandt D, Bobinger S, Hegel F (2012, March) ‘If you sound like me, you must be more human’: On the interplay of robot and user features on human-robot acceptance and anthropomorphism. In: 2012 7th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 125-126). IEEE, Boston, MA, USA. https://doi.org/10.1145/2157689.2157717

Fischer JE, Reeves S, Porcheron M, Sikveland R O (2019, August) Progressivity for voice interface design. In Proceedings of the 1st International Conference on Conversational User Interfaces (pp. 1-8). ACM, New York, NY, USA. https://doi.org/10.1145/3342775.3342788

Green P, Wei-Haas L (1985) The rapid development of user interfaces: Experience with the Wizard of Oz method. In Proceedings of the Human Factors Society Annual Meeting (Vol. 29, No. 5, pp. 470-474). Sage CA, Los Angeles. https://doi.org/10.1177/154193128502900515

Habler F, Peisker M, Henze N (2019) Differences between smart speakers and graphical user interfaces for music search considering gender effects. In Proceedings of the 18th International Conference on Mobile and Ubiquitous Multimedia (pp. 1-7). ACM, New York, NY, USA. https://doi.org/10.1145/3365610.3365627

Hone KS, Graham R (2000) Towards a tool for the subjective assessment of speech system interfaces (SASSI). Natural Language Engineering, 6(3-4), 287-303. https://doi.org/10.1017/S1351324900002497

Jang Y (2020) Exploring User Interaction and Satisfaction with Virtual Personal Assistant Usage through Smart Speakers. Archives of Design Research, 33(3), 127-135. https://doi.org/10.15187/adr.2020.08.33.3.127

Biermann M, Schweiger E, Jentsch M (2019) Talking to stupid?!? improving voice user interfaces. Mensch und Computer 2019-Usability Professionals. https://doi.org/10.18420/MUC2019-UP-0253

Jeong Y, Lee J, Kang Y (2019) Exploring effects of conversational fillers on user perception of conversational agents. In Extended abstracts of the 2019 CHI conference on human factors in computing systems (pp. 1-6). ACM, Glasgow, UK. https://doi.org/10.1145/3290607.3312913

Karsenty L, Botherel V (2005) Transparency strategies to help users handle system errors. Speech Communication, 45(3), 305-324. https://doi.org/10.1016/j.specom.2004.10.018

Kerly A, Bull S (2006) The potential for chatbots in negotiated learner modelling: A wizard-of-oz study. In International Conference on Intelligent Tutoring Systems (pp. 443-452). Springer, Berlin, Heidelberg. https://doi.org/10.1007/11774303_44

Kim Y, Mutlu B (2014) How social distance shapes human–robot interaction. International Journal of Human-Computer Studies, 72(12), 783-795.https://doi.org/10.1016/j.ijhcs.2014.05.005

Klein AM, Hinderks A, Schrepp M, Thomaschewski J (2020) Measuring User Experience Quality of Voice Assistants Voice Communication Scales for the UEQ+ Framework: Voice Communication Scales for the UEQ+ Framework. In 2020 15th Iberian Conference on Information Systems and Technologies (CISTI) (pp. 1-4). IEEE, Seville, Spain. https://doi.org/10.23919/CISTI49556.2020.9140966

Kopp S, Gesellensetter L, Krämer NC, Wachsmuth I (2005) A conversational agent as museum guide–design and evaluation of a real-world application. In International workshop on intelligent virtual agents (pp. 329-343). Springer, Berlin, Heidelberg. https://doi.org/10.1007/11550617_28

Krause AE, North AC (2017) Pleasure, arousal, dominance, and judgments about music in everyday life. Psychology of Music, 45(3), 355-374. https://doi.org/10.1177/0305735616664214

Lee S, Cho M, Lee S (2020) What If Conversational Agents Became Invisible? Comparing Users' Mental Models According to Physical Entity of AI Speaker. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 4(3), 1-24. Athens, Greece. https://doi.org/10.1145/3411840

Maharjan R, Bækgaard P, Bardram JE (2019) " Hear me out" smart speaker based conversational agent to monitor symptoms in mental health. In Adjunct Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers (pp. 929-933). ACM, London, UK. https://doi.org/10.1145/3341162.3346270

McKeown G (2016) Laughter and humour as conversational mind-reading displays. In International Conference on Distributed, Ambient, and Pervasive Interactions (pp. 317-328). Springer, Cham. https://doi.org/10.1007/978-3-319-39862-4_29

Melton M, Fenwick Jr J (2019) Alexa Skill Voice Interface for the Moodle Learning Management System. J Comput Sci Coll, 26

Merrill DW, Reid RH (1981) Personal styles & effective performance. CRC Press, Boca Raton

Mehrabian A (1996) Pleasure-arousal-dominance: A general framework for describing and measuring individual differences in temperament. Current Psychol 14(4):261-292. https://doi.org/10.1007/BF02686918

Miccoli L, Delgado R, Guerra P, Versace F, Rodríguez-Ruiz S, Fernández-Santaella MC (2016) Affective pictures and the open library of affective foods (OLAF): tools to investigate emotions toward food in adults. PLoS One 11(8):e0158991. https://doi.org/10.1371/journal.pone.0158991

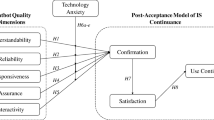

Nguyen Q N, Ta A, Prybutok V (2019) An integrated model of voice-user interface continuance intention: the gender effect. Int J Hum Comput Interact 35(15):1362-1377.https://doi.org/10.1080/10447318.2018.1525023

Niculescu AI, Banchs RE (2019) Humor intelligence for virtual agents. In 9th International Workshop on Spoken Dialogue System Technology (pp. 285-297). Springer, Singapore. https://doi.org/10.1007/978-981-13-9443-0_25

Norton RW (1978) Foundation of a communicator style construct. Hum Commun Res 4(2):99-112.

Park S, Lee Y (2020) User Experience of Smart Speaker Visual Feedback Type: The Moderating Effect of Need for Cognition and Multitasking. Archives of Design Research, 33(2):181-199.https://doi.org/10.15187/adr.2020.05.33.2.181

Pearl C (2016) Designing voice user interfaces: principles of conversational experiences. O’Reilly Media Inc, Newton

Pigliacelli F (2020) Smart speakers’ adoption: technology acceptance model and the role of conversational style. [Unpublished master dissertation]. Libera universtà Internazionale degli Studi Sociali

Polkosky MD, Lewis JR (2003) Expanding the MOS: Development and psychometric evaluation of the MOS-R and MOS-X. International Journal of Speech Technology 6(2):161–182

Radziwill NM, Benton MC (2017) Evaluating quality of chatbots and intelligent conversational agents. arXiv preprint arXiv:1704.04579

Schwind V, Henze N (2018) Gender-and age-related differences in designing the characteristics of stereotypical virtual faces. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play (pp. 463-475). ACM, New York, NY, USA. https://doi.org/10.1145/3242671.3242692

Schwind V, Knierim P, Tasci C, Franczak P, Haas N, Henze N (2017) " These are not my hands!" Effect of Gender on the Perception of Avatar Hands in Virtual Reality. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 1577-1582). ACM, New York, NY, USA. https://doi.org/10.1145/3025453.3025602

Shamekhi A, Czerwinski M, Mark G, Novotny M, Bennett G A (2016) An exploratory study toward the preferred conversational style for compatible virtual agents. In International Conference on Intelligent Virtual Agents (pp. 40-50). Springer, Cham. https://doi.org/10.1007/978-3-319-47665-0_4

Street Jr RL (1982) Evaluation of noncontent speech accommodation. Language & Communication, 2(1): 13-31. https://doi.org/10.1016/0271-5309(82)90032-5

Vanderhaegen F (2021) Weak Signal-Oriented Investigation of Ethical Dissonance Applied to Unsuccessful Mobility Experiences Linked to Human–Machine Interactions. Science and Engineering Ethics, 27(1): 1-25. https://doi.org/10.1007/s11948-021-00284-y

Wang J, Yang H, Shao R, Abdullah S, Sundar SS (2020) Alexa as coach: Leveraging smart speakers to build social agents that reduce public speaking anxiety. In: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-13). ACM, New York, NY, USA. https://doi.org/10.1145/3313831.3376561

Yanyan S, Shiyan Li, Xiantao C (2019) Emotional voice interaction design: human computer interaction research map and design case of baidu AI user experience department. Decoration 11:22–27. https://doi.org/10.16272/j.cnki.cn11-1392/j.2019.11.008

Funding

This study was supported by the National Natural Science Foundation of China (NSFC, 72171015 and 72021001) and the Fundamental Research Funds for the Central Universities (YWF-21-BJ-J-314).

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

About this article

Cite this article

Ma, Q., Zhou, R., Zhang, C. et al. Rationally or emotionally: how should voice user interfaces reply to users of different genders considering user experience? Cogn Tech Work 24, 233–246 (2022). https://doi.org/10.1007/s10111-021-00687-8

DOI: https://doi.org/10.1007/s10111-021-00687-8