Abstract

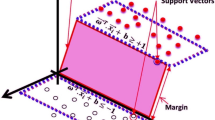

The support vector machine (SVM) is a state-of-the-art classification tool that achieves good accuracy because it can generate nonlinear models. However, these nonlinear models are typically regarded as incomprehensible black boxes. This lack of explanatory ability is a serious problem for practical SVM applications that require comprehensibility. Therefore, this study applies a C5 decision tree (DT) to extract rules from SVM results. Both SVM and the C5 DT are computationally expensive, and applying the two together to high-dimensional data increases the computational cost further. For this reason, a metaheuristic, the artificial bee colony (ABC) optimization algorithm, is employed to select the important features. In the proposed ABC–SVM–DT algorithm, ABC performs feature selection and parameter optimization before SVM–DT extracts comprehensible rules from the SVM. The proposed algorithm is evaluated on eight datasets. The results show that, compared with the genetic algorithm and the particle swarm optimization algorithm, the proposed ABC–SVM–DT algorithm can improve the classification accuracy and the complexity of the final decision tree simultaneously.
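To make the feature-selection step concrete, the following is a minimal sketch of an ABC-style search over binary feature masks, using the employed, onlooker, and scout phases of the standard ABC scheme. It is not the authors' implementation. The function names, the bit-flip neighbourhood, the default parameter values, and the toy fitness function are all assumptions made for illustration; in a setting like the one described above, the fitness would presumably come from training SVM–DT on the selected features, and the SVM parameter optimization is omitted here.

```python
import random


def abc_feature_selection(fitness, n_features, n_sources=10, limit=5,
                          n_iter=50, seed=0):
    """Toy binary ABC search; returns (best_mask, best_score).

    `fitness` maps a list of booleans (one per feature) to a score to maximise.
    All names and defaults here are hypothetical, chosen for illustration.
    """
    rng = random.Random(seed)

    def random_mask():
        return [rng.random() < 0.5 for _ in range(n_features)]

    def neighbour(mask):
        # Assumed neighbourhood: flip one randomly chosen feature bit.
        new = list(mask)
        i = rng.randrange(n_features)
        new[i] = not new[i]
        return new

    sources = [random_mask() for _ in range(n_sources)]
    scores = [fitness(m) for m in sources]
    trials = [0] * n_sources
    best_score = max(scores)
    best_mask = list(sources[scores.index(best_score)])

    def try_improve(k):
        # Greedy replacement: keep the neighbour only if it scores higher.
        nonlocal best_mask, best_score
        cand = neighbour(sources[k])
        s = fitness(cand)
        if s > scores[k]:
            sources[k], scores[k], trials[k] = cand, s, 0
            if s > best_score:
                best_mask, best_score = list(cand), s
        else:
            trials[k] += 1

    for _ in range(n_iter):
        # Employed bees: one local move per food source.
        for k in range(n_sources):
            try_improve(k)
        # Onlooker bees: favour better sources (weights fixed for this phase).
        low = min(scores)
        weights = [s - low + 1e-9 for s in scores]
        for _ in range(n_sources):
            k = rng.choices(range(n_sources), weights=weights)[0]
            try_improve(k)
        # Scout bees: replace sources that failed to improve too many times.
        for k in range(n_sources):
            if trials[k] > limit:
                sources[k] = random_mask()
                scores[k] = fitness(sources[k])
                trials[k] = 0
                if scores[k] > best_score:
                    best_mask, best_score = list(sources[k]), scores[k]
    return best_mask, best_score


# Toy stand-in for classifier quality (an assumption, not SVM accuracy):
# reward three "informative" features and penalise every selected feature.
INFORMATIVE = {0, 2, 5}


def toy_fitness(mask):
    hits = sum(1 for i in INFORMATIVE if mask[i])
    return hits - 0.1 * sum(mask)


mask, score = abc_feature_selection(toy_fitness, n_features=8)
print(mask, score)
```

The size penalty in `toy_fitness` stands in for the trade-off between accuracy and model complexity that the abstract describes; a real fitness function would need to evaluate a trained classifier on held-out data.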

Cite this article

Kuo, R.J., Huang, S.B.L., Zulvia, F.E. et al. Artificial bee colony-based support vector machines with feature selection and parameter optimization for rule extraction. Knowl Inf Syst 55, 253–274 (2018). https://doi.org/10.1007/s10115-017-1083-8

DOI: https://doi.org/10.1007/s10115-017-1083-8