Abstract

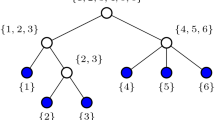

Hierarchical tensors can be regarded as a generalisation, preserving many crucial features, of the singular value decomposition to higher-order tensors. For a given tensor product space, a recursive decomposition of the set of coordinates into a dimension tree gives a hierarchy of nested subspaces and corresponding nested bases. The dimensions of these subspaces yield a notion of multilinear rank. This rank tuple, as well as quasi-optimal low-rank approximations by rank truncation, can be obtained by a hierarchical singular value decomposition. For fixed multilinear ranks, the storage and operation complexity of these hierarchical representations scale only linearly in the order of the tensor. As in the matrix case, the set of hierarchical tensors of a given multilinear rank is not a convex set, but forms an open smooth manifold. A number of techniques for the computation of hierarchical low-rank approximations have been developed, including local optimisation techniques on Riemannian manifolds as well as truncated iteration methods, which can be applied for solving high-dimensional partial differential equations. This article gives a survey of these developments. We also discuss applications to problems in uncertainty quantification, to the solution of the electronic Schrödinger equation in the strongly correlated regime, and to the computation of metastable states in molecular dynamics.

Notes

A contraction variable \(k_\eta \) is called inactive in \({\mathbf {C}}^\nu \) if \({\mathbf {C}}^\nu \) does not depend on this index. The other variables are called active. Later, for special cases, the notation will be adjusted to reflect the dependence on the active variables only.

If \(\mathbf {u} = \sum _{k=1}^r \mathbf {u}^1_k \otimes \mathbf {u}^2_k\), then \({\mathbf {u}} \in {{\mathrm{span}}}\{\mathbf {u}^1_1,\dots ,\mathbf {u}^1_r \} \otimes {{\mathrm{span}}}\{ \mathbf {u}^2_1,\dots ,\mathbf {u}^2_r \}\). Conversely, if \(\mathbf {u}\) is in such a subspace, then there exist \(a_{ij}\) such that \(\mathbf {u} = \sum _{i=1}^r \sum _{j=1}^r a_{ij} \mathbf {u}^1_i \otimes \mathbf {u}^2_j = \sum _{i=1}^r \mathbf {u}^1_i \otimes \left( \sum _{j=1}^r a_{ij} \mathbf {u}^2_j \right) \).
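The two directions of this argument can be checked numerically in the matrix case. The following is a minimal sketch, assuming finite-dimensional real vectors stored as columns of NumPy arrays; the names `U1`, `U2`, `A`, `W` and the sizes are illustrative choices, not notation from the article.

```python
import numpy as np

rng = np.random.default_rng(0)
n1, n2, r = 6, 5, 3  # illustrative sizes (assumptions)

# Columns hold the vectors u^1_1..u^1_r and u^2_1..u^2_r.
U1 = rng.standard_normal((n1, r))
U2 = rng.standard_normal((n2, r))

# Forward direction: a sum of r elementary tensors lies in span(U1) (x) span(U2).
u = sum(np.outer(U1[:, k], U2[:, k]) for k in range(r))
P1 = U1 @ np.linalg.pinv(U1)  # orthogonal projector onto span(U1)
P2 = U2 @ np.linalg.pinv(U2)  # orthogonal projector onto span(U2)
in_subspace = np.allclose(P1 @ u @ P2, u)

# Converse: sum_ij a_ij u^1_i (x) u^2_j regroups into only r terms.
A = rng.standard_normal((r, r))
v = U1 @ A @ U2.T
W = U2 @ A.T  # column i is sum_j a_ij u^2_j
v_regrouped = sum(np.outer(U1[:, i], W[:, i]) for i in range(r))
same = np.allclose(v, v_regrouped)
rank_v = np.linalg.matrix_rank(v)

print(in_subspace, same, rank_v <= r)
```

Here the tensor product of two vectors is represented by their outer product, so membership in the product of the two spans amounts to invariance under the two projectors.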

One can think of \(\pi _{{\mathbb {T}}}(\mathbf {u})\) as a reshape of the tensor \(\mathbf {u}\) which relabels the physical variables according to the permutation \(\varPi \) induced by the order of the tree vertices. Note that this relabeling is not needed in the pointwise formula (3.9).

Even without using the fact that \({\mathscr {H}}_{\mathfrak {r}}\) is an embedded submanifold, these considerations show that \(\tau _{\text {TT}}\) is a smooth map of constant co-rank \(r_1^2 + \dots + r_{d-1}^2\) on \({\mathscr {W}}^*\). This already implies that the image is a locally embedded submanifold [79].
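The number \(r_1^2 + \dots + r_{d-1}^2\) coincides with the dimension of the familiar gauge freedom of tensor train representations: inserting an invertible \(r_i \times r_i\) matrix and its inverse between neighbouring cores leaves the represented tensor unchanged. The following is a minimal sketch of this invariance, assuming that \(\tau _{\text {TT}}\) denotes the map from TT cores to the full tensor (it is not defined in this note); the helper names `tt_to_full` and `apply_gauge` and all sizes are hypothetical.

```python
import numpy as np

def tt_to_full(cores):
    """Contract TT cores of shape (r_{i-1}, n_i, r_i) into the full tensor."""
    full = cores[0]  # shape (1, n_1, r_1)
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))
    return full[0, ..., 0]  # drop the boundary ranks r_0 = r_d = 1

def apply_gauge(cores, gauges):
    """Insert G_i and its inverse between cores i and i+1."""
    new = [c.copy() for c in cores]
    for i, G in enumerate(gauges):
        new[i] = np.tensordot(new[i], G, axes=([2], [0]))
        new[i + 1] = np.tensordot(np.linalg.inv(G), new[i + 1], axes=([1], [0]))
    return new

rng = np.random.default_rng(1)
dims, ranks = [4, 3, 5, 2], [1, 2, 3, 2, 1]  # illustrative sizes (assumptions)
cores = [rng.standard_normal((ranks[i], dims[i], ranks[i + 1]))
         for i in range(len(dims))]
# Well-conditioned invertible gauge matrices, one per interior rank.
gauges = [np.eye(r) + 0.1 * rng.standard_normal((r, r)) for r in ranks[1:-1]]

unchanged = np.allclose(tt_to_full(cores), tt_to_full(apply_gauge(cores, gauges)))
gauge_dim = sum(r * r for r in ranks[1:-1])  # r_1^2 + ... + r_{d-1}^2
print(unchanged, gauge_dim)
```

The check only illustrates that the parametrisation is redundant along these directions; it does not verify the constant-rank statement itself.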

References

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton, NJ (2008)

Andreev, R., Tobler, C.: Multilevel preconditioning and low-rank tensor iteration for space-time simultaneous discretizations of parabolic PDEs. Numer. Linear Algebra Appl. 22(2), 317–337 (2015)

Arad, I., Kitaev, A., Landau, Z., Vazirani, U.: An area law and sub-exponential algorithm for 1D systems. arXiv:1301.1162 (2013)

Arnold, A., Jahnke, T.: On the approximation of high-dimensional differential equations in the hierarchical Tucker format. BIT 54(2), 305–341 (2014)

Bachmayr, M., Cohen, A.: Kolmogorov widths and low-rank approximations of parametric elliptic PDEs. Math. Comp. (2016). In press.

Bachmayr, M., Dahmen, W.: Adaptive low-rank methods for problems on Sobolev spaces with error control in L\(_2\). ESAIM: M2AN (2015). doi:10.1051/m2an/2015071. In press.

Bachmayr, M., Dahmen, W.: Adaptive near-optimal rank tensor approximation for high-dimensional operator equations. Found. Comput. Math. 15(4), 839–898 (2015)

Bachmayr, M., Dahmen, W.: Adaptive low-rank methods: Problems on Sobolev spaces. SIAM J. Numer. Anal. 54, 744–796 (2016)

Bachmayr, M., Schneider, R.: Iterative methods based on soft thresholding of hierarchical tensors. Found. Comput. Math. (2016). In press.

Ballani, J., Grasedyck, L.: A projection method to solve linear systems in tensor format. Numer. Linear Algebra Appl. 20(1), 27–43 (2013)

Ballani, J., Grasedyck, L.: Tree adaptive approximation in the hierarchical tensor format. SIAM J. Sci. Comput. 36(4), A1415–A1431 (2014)

Ballani, J., Grasedyck, L., Kluge, M.: Black box approximation of tensors in hierarchical Tucker format. Linear Algebra Appl. 438(2), 639–657 (2013)

Bazarkhanov, D., Temlyakov, V.: Nonlinear tensor product approximation of functions. J. Complexity 31(6), 867–884 (2015)

Beck, J., Tempone, R., Nobile, F., Tamellini, L.: On the optimal polynomial approximation of stochastic PDEs by Galerkin and collocation methods. Math. Models Methods Appl. Sci. 22(9), 1250023, 33 (2012)

Beck, M. H., Jäckle, A., Worth, G. A., Meyer, H.-D.: The multiconfiguration time-dependent Hartree (MCTDH) method: a highly efficient algorithm for propagating wavepackets. Phys. Rep. 324(1), 1–105 (2000)

Beylkin, G., Mohlenkamp, M. J.: Numerical operator calculus in higher dimensions. Proc. Natl. Acad. Sci. USA 99(16), 10246–10251 (electronic) (2002)

Beylkin, G., Mohlenkamp, M. J.: Algorithms for numerical analysis in high dimensions. SIAM J. Sci. Comput. 26(6), 2133–2159 (electronic) (2005)

Billaud-Friess, M., Nouy, A., Zahm, O.: A tensor approximation method based on ideal minimal residual formulations for the solution of high-dimensional problems. ESAIM Math. Model. Numer. Anal. 48(6), 1777–1806 (2014)

Blumensath, T., Davies, M. E.: Iterative hard thresholding for compressed sensing. Appl. Comput. Harmon. Anal. 27(3), 265–274 (2009)

Braess, D., Hackbusch, W.: On the efficient computation of high-dimensional integrals and the approximation by exponential sums. In: R. DeVore, A. Kunoth (eds.) Multiscale, Nonlinear and Adaptive Approximation, pp. 39–74. Springer Berlin Heidelberg (2009)

Buczyńska, W., Buczyński, J., Michałek, M.: The Hackbusch conjecture on tensor formats. J. Math. Pures Appl. (9) 104(4), 749–761 (2015)

Cai, J.-F., Candès, E. J., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 20(4), 1956–1982 (2010)

Cancès, E., Defranceschi, M., Kutzelnigg, W., Le Bris, C., Maday, Y.: Handbook of Numerical Analysis, vol. X, chap. Computational Chemistry: A Primer. North-Holland (2003)

Cancès, E., Ehrlacher, V., Lelièvre, T.: Convergence of a greedy algorithm for high-dimensional convex nonlinear problems. Math. Models Methods Appl. Sci. 21(12), 2433–2467 (2011)

Carroll, J. D., Chang, J.-J.: Analysis of individual differences in multidimensional scaling via an n-way generalization of “Eckart-Young” decomposition. Psychometrika 35(3), 283–319 (1970)

Chan, G. K.-L., Sharma, S.: The density matrix renormalization group in quantum chemistry. Annu. Rev. Phys. Chem. 62, 465–481 (2011)

Cichocki, A.: Era of big data processing: a new approach via tensor networks and tensor decompositions. arXiv:1403.2048 (2014)

Cichocki, A., Mandic, D., De Lathauwer, L., Zhou, G., Zhao, Q., Caiafa, C., Phan, H. A.: Tensor decompositions for signal processing applications: From two-way to multiway component analysis. IEEE Signal Proc. Mag. 32(2), 145–163 (2015)

Cohen, A., DeVore, R., Schwab, C.: Convergence rates of best \(N\)-term Galerkin approximations for a class of elliptic sPDEs. Found. Comput. Math. 10(6), 615–646 (2010)

Cohen, A., DeVore, R., Schwab, C.: Analytic regularity and polynomial approximation of parametric and stochastic elliptic PDE’s. Anal. Appl. (Singap.) 9(1), 11–47 (2011)

Cohen, N., Sharir, O., Shashua, A.: On the expressive power of Deep Learning: A tensor analysis. arXiv:1509.05009 (2015)

Coifman, R. R., Kevrekidis, I. G., Lafon, S., Maggioni, M., Nadler, B.: Diffusion maps, reduction coordinates, and low dimensional representation of stochastic systems. Multiscale Model. Simul. 7(2), 842–864 (2008)

Combettes, P. L., Wajs, V. R.: Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 4(4), 1168–1200 (electronic) (2005)

Comon, P., Luciani, X., de Almeida, A. L. F.: Tensor decompositions, alternating least squares and other tales. J. Chemometrics 23(7-8), 393–405 (2009)

Da Silva, C., Herrmann, F. J.: Optimization on the hierarchical Tucker manifold—applications to tensor completion. Linear Algebra Appl. 481, 131–173 (2015)

Dahmen, W., DeVore, R., Grasedyck, L., Süli, E.: Tensor-sparsity of solutions to high-dimensional elliptic partial differential equations. Found. Comput. Math. (2015). In press.

Daubechies, I., Defrise, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Comm. Pure Appl. Math. 57(11), 1413–1457 (2004)

De Lathauwer, L., Comon, P., De Moor, B., Vandewalle, J.: High-order power method – Application in Independent Component Analysis. In: Proceedings of the 1995 International Symposium on Nonlinear Theory and its Applications (NOLTA’95), pp. 91–96 (1995)

De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21(4), 1253–1278 (electronic) (2000)

DeVore, R. A.: Nonlinear approximation. Acta Numer. 7, 51–150 (1998)

Dolgov, S., Khoromskij, B.: Tensor-product approach to global time-space parametric discretization of chemical master equation. Preprint 68/2012, MPI MIS Leipzig (2012)

Dolgov, S. V., Khoromskij, B. N., Oseledets, I. V.: Fast solution of parabolic problems in the tensor train/quantized tensor train format with initial application to the Fokker-Planck equation. SIAM J. Sci. Comput. 34(6), A3016–A3038 (2012)

Dolgov, S. V., Khoromskij, B. N., Oseledets, I. V., Savostyanov, D. V.: Computation of extreme eigenvalues in higher dimensions using block tensor train format. Comput. Phys. Commun. 185(4), 1207–1216 (2014)

Dolgov, S. V., Savostyanov, D. V.: Alternating minimal energy methods for linear systems in higher dimensions. SIAM J. Sci. Comput. 36(5), A2248–A2271 (2014)

Eckart, C., Young, G.: The approximation of one matrix by another of lower rank. Psychometrika 1(3), 211–218 (1936)

Edelman, A., Arias, T. A., Smith, S. T.: The geometry of algorithms with orthogonality constraints. SIAM J. Matrix Anal. Appl. 20(2), 303–353 (1999)

Eigel, M., Gittelson, C. J., Schwab, C., Zander, E.: Adaptive stochastic Galerkin FEM. Comput. Methods Appl. Mech. Engrg. 270, 247–269 (2014)

Eigel, M., Pfeffer, M., Schneider, R.: Adaptive stochastic Galerkin FEM with hierarchical tensor representations. Preprint 2153, WIAS Berlin (2015)

Espig, M., Hackbusch, W., Handschuh, S., Schneider, R.: Optimization problems in contracted tensor networks. Comput. Vis. Sci. 14(6), 271–285 (2011)

Espig, M., Hackbusch, W., Khachatryan, A.: On the convergence of alternating least squares optimisation in tensor format representations. arXiv:1506.00062 (2015)

Espig, M., Hackbusch, W., Rohwedder, T., Schneider, R.: Variational calculus with sums of elementary tensors of fixed rank. Numer. Math. 122(3), 469–488 (2012)

Falcó, A., Hackbusch, W.: On minimal subspaces in tensor representations. Found. Comput. Math. 12(6), 765–803 (2012)

Falcó, A., Nouy, A.: Proper generalized decomposition for nonlinear convex problems in tensor Banach spaces. Numer. Math. 121(3), 503–530 (2012)

Falcó, A., Hackbusch, W., Nouy, A.: Geometric structures in tensor representations. Preprint 9/2013, MPI MIS Leipzig (2013)

Fannes, M., Nachtergaele, B., Werner, R. F.: Finitely correlated states on quantum spin chains. Comm. Math. Phys. 144(3), 443–490 (1992)

Foucart, S., Rauhut, H.: A Mathematical Introduction to Compressive Sensing. Birkhäuser/Springer, New York (2013)

Ghanem, R., Spanos, P. D.: Polynomial chaos in stochastic finite elements. J. Appl. Mech. 57(1), 197–202 (1990)

Ghanem, R. G., Spanos, P. D.: Stochastic Finite Elements: A Spectral Approach, second edn. Dover (2007)

Grasedyck, L.: Existence and computation of low Kronecker-rank approximations for large linear systems of tensor product structure. Computing 72(3-4), 247–265 (2004)

Grasedyck, L.: Hierarchical singular value decomposition of tensors. SIAM J. Matrix Anal. Appl. 31(4), 2029–2054 (2009/10)

Grasedyck, L.: Polynomial approximation in hierarchical Tucker format by vector-tensorization. DFG SPP 1324 Preprint 43 (2010)

Grasedyck, L., Hackbusch, W.: An introduction to hierarchical (\({\cal H}\)-) rank and TT-rank of tensors with examples. Comput. Methods Appl. Math. 11(3), 291–304 (2011)

Grasedyck, L., Kressner, D., Tobler, C.: A literature survey of low-rank tensor approximation techniques. GAMM-Mitt. 36(1), 53–78 (2013)

Hackbusch, W.: Tensorisation of vectors and their efficient convolution. Numer. Math. 119(3), 465–488 (2011)

Hackbusch, W.: Tensor Spaces and Numerical Tensor Calculus. Springer, Heidelberg (2012)

Hackbusch, W.: \(L^\infty \) estimation of tensor truncations. Numer. Math. 125(3), 419–440 (2013)

Hackbusch, W.: Numerical tensor calculus. Acta Numer. 23, 651–742 (2014)

Hackbusch, W., Khoromskij, B. N., Tyrtyshnikov, E. E.: Approximate iterations for structured matrices. Numer. Math. 109(3), 365–383 (2008)

Hackbusch, W., Kühn, S.: A new scheme for the tensor representation. J. Fourier Anal. Appl. 15(5), 706–722 (2009)

Hackbusch, W., Schneider, R.: Extraction of Quantifiable Information from Complex Systems, chap. Tensor spaces and hierarchical tensor representations, pp. 237–261. Springer (2014)

Haegeman, J., Osborne, T. J., Verstraete, F.: Post-matrix product state methods: To tangent space and beyond. Phys. Rev. B 88, 075133 (2013)

Harshman, R. A.: Foundations of the PARAFAC procedure: Models and conditions for an “explanatory” multi-modal factor analysis. UCLA Working Papers in Phonetics 16, 1–84 (1970)

Helgaker, T., Jørgensen, P., Olsen, J.: Molecular Electronic-Structure Theory. John Wiley & Sons, Chichester (2000)

Helmke, U., Shayman, M. A.: Critical points of matrix least squares distance functions. Linear Algebra Appl. 215, 1–19 (1995)

Hillar, C. J., Lim, L.-H.: Most tensor problems are NP-hard. J. ACM 60(6), Art. 45, 39 (2013)

Hitchcock, F. L.: The expression of a tensor or a polyadic as a sum of products. Journal of Mathematics and Physics 6, 164–189 (1927)

Hitchcock, F. L.: Multiple invariants and generalized rank of a \(p\)-way matrix or tensor. Journal of Mathematics and Physics 7, 39–79 (1927)

Holtz, S., Rohwedder, T., Schneider, R.: The alternating linear scheme for tensor optimization in the tensor train format. SIAM J. Sci. Comput. 34(2), A683–A713 (2012)

Holtz, S., Rohwedder, T., Schneider, R.: On manifolds of tensors of fixed TT-rank. Numer. Math. 120(4), 701–731 (2012)

Kazeev, V., Schwab, C.: Quantized tensor-structured finite elements for second-order elliptic PDEs in two dimensions. SAM research report 2015-24, ETH Zürich (2015)

Kazeev, V. A.: Quantized tensor structured finite elements for second-order elliptic PDEs in two dimensions. Ph.D. thesis, ETH Zürich (2015)

Khoromskij, B. N.: \(O(d\log N)\)-quantics approximation of \(N\)-\(d\) tensors in high-dimensional numerical modeling. Constr. Approx. 34(2), 257–280 (2011)

Khoromskij, B. N., Miao, S.: Superfast wavelet transform using quantics-TT approximation. I. Application to Haar wavelets. Comput. Methods Appl. Math. 14(4), 537–553 (2014)

Khoromskij, B. N., Oseledets, I. V.: DMRG+QTT approach to computation of the ground state for the molecular Schrödinger operator. Preprint 69/2010, MPI MIS Leipzig (2010)

Khoromskij, B. N., Schwab, C.: Tensor-structured Galerkin approximation of parametric and stochastic elliptic PDEs. SIAM J. Sci. Comput. 33(1), 364–385 (2011)

Koch, O., Lubich, C.: Dynamical tensor approximation. SIAM J. Matrix Anal. Appl. 31(5), 2360–2375 (2010)

Kolda, T. G., Bader, B. W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Kressner, D., Steinlechner, M., Uschmajew, A.: Low-rank tensor methods with subspace correction for symmetric eigenvalue problems. SIAM J. Sci. Comput. 36(5), A2346–A2368 (2014)

Kressner, D., Steinlechner, M., Vandereycken, B.: Low-rank tensor completion by Riemannian optimization. BIT 54(2), 447–468 (2014)

Kressner, D., Tobler, C.: Low-rank tensor Krylov subspace methods for parametrized linear systems. SIAM J. Matrix Anal. Appl. 32(4), 1288–1316 (2011)

Kressner, D., Tobler, C.: Preconditioned low-rank methods for high-dimensional elliptic PDE eigenvalue problems. Comput. Methods Appl. Math. 11(3), 363–381 (2011)

Kressner, D., Uschmajew, A.: On low-rank approximability of solutions to high-dimensional operator equations and eigenvalue problems. Linear Algebra Appl. 493, 556–572 (2016)

Kroonenberg, P. M.: Applied Multiway Data Analysis. Wiley-Interscience [John Wiley & Sons], Hoboken, NJ (2008)

Kruskal, J. B.: Rank, decomposition, and uniqueness for \(3\)-way arrays. In: R. Coppi, S. Bolasco (eds.) Multiway data analysis, pp. 7–18. North-Holland, Amsterdam (1989)

Landsberg, J. M.: Tensors: Geometry and Applications. American Mathematical Society, Providence, RI (2012)

Landsberg, J. M., Qi, Y., Ye, K.: On the geometry of tensor network states. Quantum Inf. Comput. 12(3-4), 346–354 (2012)

Lang, S.: Fundamentals of Differential Geometry. Springer-Verlag, New York (1999)

Le Maître, O. P., Knio, O. M.: Spectral Methods for Uncertainty Quantification. Springer, New York (2010)

Legeza, Ö., Rohwedder, T., Schneider, R., Szalay, S.: Many-Electron Approaches in Physics, Chemistry and Mathematics, chap. Tensor product approximation (DMRG) and coupled cluster method in quantum chemistry, pp. 53–76. Springer (2014)

Lim, L.-H.: Tensors and hypermatrices. In: L. Hogben (ed.) Handbook of Linear Algebra, second edn. CRC Press, Boca Raton, FL (2014)

Lim, L.-H., Comon, P.: Nonnegative approximations of nonnegative tensors. J. Chemometrics 23(7-8), 432–441 (2009)

Lubich, C.: From quantum to classical molecular dynamics: reduced models and numerical analysis. European Mathematical Society (EMS), Zürich (2008)

Lubich, C., Oseledets, I. V.: A projector-splitting integrator for dynamical low-rank approximation. BIT 54(1), 171–188 (2014)

Lubich, C., Oseledets, I. V., Vandereycken, B.: Time integration of tensor trains. SIAM J. Numer. Anal. 53(2), 917–941 (2015)

Lubich, C., Rohwedder, T., Schneider, R., Vandereycken, B.: Dynamical approximation by hierarchical Tucker and tensor-train tensors. SIAM J. Matrix Anal. Appl. 34(2), 470–494 (2013)

Mohlenkamp, M. J.: Musings on multilinear fitting. Linear Algebra Appl. 438(2), 834–852 (2013)

Murg, V., Verstraete, F., Schneider, R., Nagy, P. R., Legeza, Ö.: Tree tensor network state study of the ionic-neutral curve crossing of LiF. arXiv:1403.0981 (2014)

Nüske, F., Schneider, R., Vitalini, F., Noé, F.: Variational tensor approach for approximating the rare-event kinetics of macromolecular systems. J. Chem. Phys. 144, 054105 (2016)

Oseledets, I., Tyrtyshnikov, E.: TT-cross approximation for multidimensional arrays. Linear Algebra Appl. 432(1), 70–88 (2010)

Oseledets, I. V.: On a new tensor decomposition. Dokl. Akad. Nauk 427(2), 168–169 (2009). In Russian; English translation in: Dokl. Math. 80(1), 495–496 (2009)

Oseledets, I. V.: Tensor-train decomposition. SIAM J. Sci. Comput. 33(5), 2295–2317 (2011)

Oseledets, I. V., Dolgov, S. V.: Solution of linear systems and matrix inversion in the TT-format. SIAM J. Sci. Comput. 34(5), A2718–A2739 (2012)

Oseledets, I. V., Tyrtyshnikov, E. E.: Breaking the curse of dimensionality, or how to use SVD in many dimensions. SIAM J. Sci. Comput. 31(5), 3744–3759 (2009)

Oseledets, I. V., Tyrtyshnikov, E. E.: Recursive decomposition of multidimensional tensors. Dokl. Akad. Nauk 427(1), 14–16 (2009). In Russian; English translation in: Dokl. Math. 80(1), 460–462 (2009)

Oseledets, I. V., Tyrtyshnikov, E. E.: Algebraic wavelet transform via quantics tensor train decomposition. SIAM J. Sci. Comput. 33(3), 1315–1328 (2011)

Pavliotis, G. A.: Stochastic Processes and Applications. Diffusion processes, the Fokker-Planck and Langevin equations. Springer, New York (2014)

Rauhut, H., Schneider, R., Stojanac, Z.: Low-rank tensor recovery via iterative hard thresholding. In: 10th international conference on Sampling Theory and Applications (SampTA 2013), pp. 21–24 (2013)

Rohwedder, T., Uschmajew, A.: On local convergence of alternating schemes for optimization of convex problems in the tensor train format. SIAM J. Numer. Anal. 51(2), 1134–1162 (2013)

Rozza, G.: Separated Representations and PGD-based Model Reduction, chap. Fundamentals of reduced basis method for problems governed by parametrized PDEs and applications, pp. 153–227. Springer, Vienna (2014)

Sarich, M., Noé, F., Schütte, C.: On the approximation quality of Markov state models. Multiscale Model. Simul. 8(4), 1154–1177 (2010)

Schmidt, E.: Zur Theorie der linearen und nichtlinearen Integralgleichungen. Math. Ann. 63(4), 433–476 (1907)

Schneider, R., Uschmajew, A.: Approximation rates for the hierarchical tensor format in periodic Sobolev spaces. J. Complexity 30(2), 56–71 (2014)

Schneider, R., Uschmajew, A.: Convergence results for projected line-search methods on varieties of low-rank matrices via Łojasiewicz inequality. SIAM J. Optim. 25(1), 622–646 (2015)

Schollwöck, U.: The density-matrix renormalization group in the age of matrix product states. Ann. Physics 326(1), 96–192 (2011)

Schwab, C., Gittelson, C. J.: Sparse tensor discretizations of high-dimensional parametric and stochastic PDEs. Acta Numer. 20, 291–467 (2011)

Shub, M.: Some remarks on dynamical systems and numerical analysis. In: Dynamical Systems and Partial Differential Equations (Caracas, 1984), pp. 69–91. Univ. Simon Bolivar, Caracas (1986)

de Silva, V., Lim, L.-H.: Tensor rank and the ill-posedness of the best low-rank approximation problem. SIAM J. Matrix Anal. Appl. 30(3), 1084–1127 (2008)

Stewart, G. W.: On the early history of the singular value decomposition. SIAM Rev. 35(4), 551–566 (1993)

Szabo, A., Ostlund, N. S.: Modern Quantum Chemistry. Dover, New York (1996)

Szalay, S., Pfeffer, M., Murg, V., Barcza, G., Verstraete, F., Schneider, R., Legeza, Ö.: Tensor product methods and entanglement optimization for ab initio quantum chemistry. arXiv:1412.5829 (2014)

Tanner, J., Wei, K.: Normalized iterative hard thresholding for matrix completion. SIAM J. Sci. Comput. 35(5), S104–S125 (2013)

Tobler, C.: Low-rank tensor methods for linear systems and eigenvalue problems. Ph.D. thesis, ETH Zürich (2012)

Tucker, L. R.: Some mathematical notes on three-mode factor analysis. Psychometrika 31(3), 279–311 (1966)

Uschmajew, A.: Well-posedness of convex maximization problems on Stiefel manifolds and orthogonal tensor product approximations. Numer. Math. 115(2), 309–331 (2010)

Uschmajew, A.: Local convergence of the alternating least squares algorithm for canonical tensor approximation. SIAM J. Matrix Anal. Appl. 33(2), 639–652 (2012)

Uschmajew, A.: Zur Theorie der Niedrigrangapproximation in Tensorprodukten von Hilberträumen. Ph.D. thesis, Technische Universität Berlin (2013). In German

Uschmajew, A.: A new convergence proof for the higher-order power method and generalizations. Pac. J. Optim. 11(2), 309–321 (2015)

Uschmajew, A., Vandereycken, B.: The geometry of algorithms using hierarchical tensors. Linear Algebra Appl. 439(1), 133–166 (2013)

Vandereycken, B.: Low-rank matrix completion by Riemannian optimization. SIAM J. Optim. 23(2), 1214–1236 (2013)

Vidal, G.: Efficient classical simulation of slightly entangled quantum computations. Phys. Rev. Lett. 91(14), 147902 (2003)

Wang, H., Thoss, M.: Multilayer formulation of the multiconfiguration time-dependent Hartree theory. J. Chem. Phys. 119(3), 1289–1299 (2003)

White, S. R.: Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863–2866 (1992)

White, S. R.: Density matrix renormalization group algorithms with a single center site. Phys. Rev. B 72(18), 180403 (2005)

Wouters, S., Poelmans, W., Ayers, P. W., Van Neck, D.: CheMPS2: a free open-source spin-adapted implementation of the density matrix renormalization group for ab initio quantum chemistry. Comput. Phys. Commun. 185(6), 1501–1514 (2014)

Xiu, D.: Numerical Methods for Stochastic Computations. A Spectral Method Approach. Princeton University Press, Princeton, NJ (2010)

Xu, Y., Yin, W.: A block coordinate descent method for regularized multiconvex optimization with applications to nonnegative tensor factorization and completion. SIAM J. Imaging Sci. 6(3), 1758–1789 (2013)

Zeidler, E.: Nonlinear Functional Analysis and its Applications. III. Springer-Verlag, New York (1985)

Zeidler, E.: Nonlinear Functional Analysis and its Applications. IV. Springer-Verlag, New York (1988)

Zwiernik, P.: Semialgebraic Statistics and Latent Tree Models. Chapman & Hall/CRC, Boca Raton, FL (2016)

Acknowledgments

M.B. was supported by ERC AdG BREAD; R.S. was supported through Matheon by the Einstein Foundation Berlin and DFG project ERA Chemistry.

Additional information

Communicated by W. Dahmen.

Cite this article

Bachmayr, M., Schneider, R. & Uschmajew, A. Tensor Networks and Hierarchical Tensors for the Solution of High-Dimensional Partial Differential Equations. Found Comput Math 16, 1423–1472 (2016). https://doi.org/10.1007/s10208-016-9317-9

DOI: https://doi.org/10.1007/s10208-016-9317-9