Abstract

We consider a queueing system with heterogeneous customers. One class of customers is eager; these customers are impatient and leave the system if service does not commence immediately upon arrival. Customers of the second class are tolerant; these customers have larger service requirements and can wait for service. In this paper, we establish pseudo-conservation laws relating the performance of the eager class (measured in terms of the long run fraction of customers blocked) and the tolerant class (measured in terms of the steady state performance, e.g., sojourn time, number in the system, workload) under a certain partial fluid limit. This fluid limit involves scaling the arrival rate as well as the service rate of the eager class proportionately to infinity, such that the offered load corresponding to the eager class remains constant. The workload of the tolerant class remains unscaled. Interestingly, our pseudo-conservation laws hold for a broad class of admission control and scheduling policies. This means that under the aforementioned fluid limit, the performance of the tolerant class depends only on the blocking probability of the eager class, and not on the specific admission control policy that produced that blocking probability. Our pseudo-conservation laws also characterize the achievable region for our system, which captures the space of feasible tradeoffs between the performance experienced by the two classes. We also provide two families of complete scheduling policies, which span the achievable region over their parameter space. Finally, we show that our pseudo-conservation laws also apply in several scenarios where eager customers have a limited waiting area and exhibit balking and/or reneging behaviour.


Notes

We categorize the eager customers as those that demand immediate service and at least a certain minimum service rate. Since eager customers, once admitted, have to be allocated a certain minimum service capacity, admission control becomes an important aspect of any scheduling policy for the eager class.

Henceforth, we follow the convention that scheduling for the eager class includes admission control.

Such complete families are well known in the context of multiclass queueing systems with different tolerant classes; see, for example, Mitrani and Hine (1977).

Aside from our prior work Kavitha and Sinha (2017a).

We consider the case of partially eager customers with limited patience in Sect. 6.

We consider exceptions to Assumption A.3 in Sect. 6, where we also allow the eager customers to wait in the queue to a limited extent.

However from Sect. 4 onwards, this assumption is implied by B.4.

This is because \(\mathbb {E}[B_{{ \epsilon }, n}^{\mu _{ \epsilon }} ; X_a< n] = \mathbb {E}[B_{{ \epsilon }, n}^{\mu _{ \epsilon }}] P(X_a < n) \).

It is important to note here that we have one \({ \epsilon }\)-US process for each \({\tau }\)-customer, which starts at the instant the nth \({\tau }\)-service starts. This is done to simplify the notation.

Note that the models considered here allow for reneging/abandonment while waiting, but not during service.

To compare two stochastic systems, we use realizations of some random quantities of one system in defining the other system, so as to ensure the required dominance in the almost sure sense. Where required, we use independent copies of some other random quantities.

The \({\tau }\)-customer receives some (respectively zero) service in the original (respectively upper) system during \({\tilde{\varPsi }}\) (respectively during the bigger \(\varTheta ^{\mu _{ \epsilon }}\)), and after \(({\tilde{\varPsi }},\varTheta ^{\mu _{ \epsilon }})\) the \({\tau }\)-service is received in exactly the same way in both systems.

Almost sure dominance given by (24) easily implies the stochastic dominance:

$$\begin{aligned} P(X^U_n \ge x ) \ge P(X^O_n \ge x ) \ge P(X^L_n \ge x ) \hbox { for all } x, n. \end{aligned}$$

Stochastic dominance implies the dominance of expected values of any increasing function (e.g., Shaked and Shanthikumar 2007, p. 4, Eq. (1.A.7)), i.e.:

$$\begin{aligned} \mathbb {E}[\phi (X^U_n) ] \ge \mathbb {E}[\phi (X^O_n) ] \ge \mathbb {E}[\phi (X^L_n) ] \hbox { for any increasing function } \phi . \end{aligned}$$

For the case with assumption A.4(a), the proof follows exactly as in Lemma 6. We provide an alternate proof which also works for assumption A.4(b).

As before this replacement with independent copies does not change the original system stochastically.

References

Ancker, C. J., Jr., & Gafarian, A. V. (1963). Some queuing problems with balking and reneging-II. Operations Research, 11(6), 928–937.

Baxendale, P. H. (2005). Renewal theory and computable convergence rates for geometrically ergodic Markov chains. The Annals of Applied Probability, 15(1B), 700–738.

Bertsimas, D., Paschalidis, I., & Tsitsiklis, J. N. (1994). Optimization of multiclass queueing networks: Polyhedral and nonlinear characterizations of achievable performance. The Annals of Applied Probability, 4, 43–75.

Coffman, E. G., & Mitrani, I. (1979). A characterization of waiting time performance realizable by single server queues. Operations Research, 28, 810–821.

de Haan, R., Boucherie, R. J., & van Ommeren, J.-K. (2009). A polling model with an autonomous server. Queueing Systems, 62(3), 279–308.

Federgruen, A., & Groenevelt, H. (1988). M/G/c queueing systems with multiple agent classes: Characterization and control of achievable performance under nonpre-emptive priority rules. Management Science, 9, 1121–1138.

Feller, W. (1968). An introduction to probability theory and its applications (Vol. 1). Hoboken: Wiley.

Feller, W. (1972). An introduction to probability theory and its applications (Vol. 2). Hoboken: Wiley.

Harchol-Balter, M. (2013). Performance modeling and design of computer systems: queueing theory in action. Cambridge: Cambridge University Press.

Hoel, P. G., Port, S. C., & Stone, C. J. (1986). Introduction to stochastic processes. Long Grove: Waveland Press.

Kavitha, V., & Sinha, R. (2017a). Achievable region with impatient customers. In Proceedings of Valuetools.

Kavitha, V., & Sinha, R. (2017b). Queuing with heterogeneous users: Block probability and Sojourn times. ArXiv preprint http://adsabs.harvard.edu/abs/2017arXiv170906593K.

Kleinrock, L. (1964). A delay dependent queue discipline. Naval Research Logistics Quarterly, 11, 329–341.

Kleinrock, L. (1965). A conservation law for wide class of queue disciplines. Naval Research Logistics Quarterly, 12, 118–192.

Li, B., Li, L., Li, B., Sivalingam, K. M., & Xi-Ren, C. (2004). Call admission control for voice/data integrated cellular networks: Performance analysis and comparative study. IEEE Journal on Selected Areas in Communications, 22(4), 706–718.

Mahabhashyam, S. R., & Gautam, N. (2005). On queues with Markov modulated service rates. Queueing Systems Theory Applications, 51(1–2), 89–113.

Meyn, S. P., & Tweedie, R. L. (1993). Markov chains and stochastic stability, communications and control engineering series. London: Springer.

Mitrani, I., & Hine, J. (1977). Complete parametrized families of job scheduling strategies. Acta Informatica, 8, 61–73.

Sesia, S., Baker, M., & Toufik, I. (2011). LTE-the UMTS long term evolution: From theory to practice. Hoboken: Wiley.

Shaked, M., & Shanthikumar, J. G. (2007). Stochastic orders. Berlin: Springer.

Shanthikumar, J. G., & Yao, D. D. (1992). Multiclass queueing systems: Polymatroidal structure and optimal scheduling control. Operations Research, 40(3–supplement–2), S293–S299.

Sleptchenko, A., van Harten, A., & van der Heijden, M. C. (2003). An exact analysis of the multi-class M/M/k priority queue with partial blocking (pp. 527–548). Milton Park: Taylor & Francis.

Takács, L. (1962). An Introduction to queueing theory.

Tang, S., & Li, W. (2004). A channel allocation model with preemptive priority for integrated voice/data mobile networks. In Proceedings of the 1st international conference on quality of service in heterogeneous wired/wireless networks.

White, H., & Christie, L. S. (1958). Queuing with pre-emptive priorities or with breakdown. Operations Research, 6(1), 79–95.

Whitt, W. (1999). Improving service by informing customers about anticipated delays. Management Science, 45(2), 192–207.

Zhang, Y., Soong, B.-H., & Ma, M. (2006). A dynamic channel assignment scheme for voice/data integration in GPRS networks. Elsevier Computer Communications, 29, 1163–1163.


Additional information

The second author would like to acknowledge the support of DST and CEFIPRA.

Appendices

Appendix A: Proofs related to the pseudo-conservation of the \({ \epsilon }\)-US process

This appendix is devoted to the proof of Theorem 1. The proof requires the following functional version of the RRT.

Theorem 5

(Functional RRT) Let \(\varOmega ^1(t)\) represent a time-monotone non-negative cumulative reward function of the \({ \epsilon }\)-renewal process when \(\mu _{ \epsilon }= 1\), with \(\nu \) as its RRT limit:

Then, for any \(W < \infty \) and irrespective of the initial condition:

Proof

For any \(\delta > 0\) there exists a \(T_{\delta }\) (for any initial state) such that:

Now pick \(s \in [0,W].\) If \(\mu s \ge T_{\delta }\) we have

If \(\mu s < T_{\delta }\),

for large enough \(\mu \), chosen appropriately for any initial condition.

It follows that

for large enough \(\mu .\) This completes the proof. \(\square \)
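As a numerical illustration of Theorem 5, the following Python sketch estimates \(\sup _{s \in [0,W]} |\varOmega ^1(\mu s)/\mu - \nu s|\) for a toy reward process. The process used here is an assumption made purely for illustration and is not the \({ \epsilon }\)-renewal process of the paper: it alternates between exponentially distributed idle and busy periods (hypothetical means `mean_idle` and `mean_busy`), and reward accrues at unit rate during idle periods, so that \(\nu \) equals `mean_idle / (mean_idle + mean_busy)`.

```python
import numpy as np


def sup_deviation(mu, W=1.0, mean_idle=1.0, mean_busy=1.0, seed=0):
    """Return sup over s in [0, W] of |Omega(mu*s)/mu - nu*s| for a toy
    alternating renewal reward process (an illustrative assumption)."""
    rng = np.random.default_rng(seed)
    horizon = mu * W  # unscaled time horizon
    nu = mean_idle / (mean_idle + mean_busy)  # long-run reward rate
    n = int(2 * horizon / (mean_idle + mean_busy)) + 100
    while True:
        idle = rng.exponential(mean_idle, n)
        busy = rng.exponential(mean_busy, n)
        # Segment end times: idle_0, busy_0, idle_1, busy_1, ...
        times = np.concatenate(
            [[0.0], np.cumsum(np.column_stack([idle, busy]).ravel())]
        )
        if times[-1] >= horizon:
            break
        n *= 2  # not enough cycles generated; retry with more
    # Reward grows at rate 1 on idle segments and is flat on busy segments.
    rewards = np.column_stack([idle, np.zeros(n)]).ravel()
    omega = np.concatenate([[0.0], np.cumsum(rewards)])
    # Omega(t) - nu*t is piecewise linear, so its extremes over [0, horizon]
    # occur at segment end points or at the horizon itself.
    grid = np.append(times[times < horizon], horizon)
    omega_grid = np.interp(grid, times, omega)
    return float(np.max(np.abs(omega_grid - nu * grid)) / mu)


if __name__ == "__main__":
    for mu in (10.0, 100.0, 1000.0, 10000.0):
        print(mu, sup_deviation(mu))
```

Under these assumptions the printed deviation should shrink as `mu` grows, in line with the uniform convergence on \([0,W]\) asserted by the theorem.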

Proof of Theorem 1

As in the proof of Lemma 1, we couple the arrival processes corresponding to the eager class for different \(\mu _{{ \epsilon }}\) as per (1). Under this construction, it is easy to see that

Thus by Theorem 5, for any \(W>0,\)

This proves the statement about the asymptotic (in time) growth rate of the \({ \epsilon }\)-US process.

We now prove the claim regarding the time required to obtain B units of service from the \({ \epsilon }\)-US process. By continuity of probability measure, one can assume that (13) is satisfied together for a sequence of \(\{W_n \}\) with \(W_n \rightarrow \infty \), almost surely. Define the event A as follows.

For any outcome \(\omega \in A\), consider a \(W_n > (B (\omega ) + 1)/\nu _{\tau }\). For every \(\delta \in (0,1),\) there exists \(\bar{\mu } > 0\) such that:

Thus \( \nu _{\tau }t - \delta \le \varOmega ^{\mu _{ \epsilon }} (t) \le \nu _{\tau }t + \delta \hbox { for all } t \le W_n. \) Now in particular for \(t = (B (\omega ) + \delta )/\nu _{\tau }< W_n\), we have:

which implies \( {\varUpsilon }^{\mu _{ \epsilon }}\le (B (\omega ) + \delta )/\nu _{\tau }. \) Similarly, for \(t = (B (\omega ) - \delta )/\nu _{\tau }< W_n,\) we have:

Thus, \({\varUpsilon }^{\mu _{ \epsilon }}\ge (B (\omega ) - \delta )/\nu _{\tau }.\) We conclude that

This completes the proof. Note that the above argument applies for any initial condition of the \({ \epsilon }\)-state, as Theorem 5 is true for any initial state. \(\square \)
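The second claim of Theorem 1 can be illustrated with a small simulation. The sketch below is a toy model under stated assumptions, not the paper's \({ \epsilon }\)-US process: the tolerant job is served at rate 1 whenever the eager subsystem is idle and at rate 0 otherwise, and idle and busy periods are exponential with hypothetical means `mean_idle / mu` and `mean_busy / mu`. In this toy model \(\nu _{\tau }\) equals `mean_idle / (mean_idle + mean_busy)`.

```python
import random


def time_to_receive(B, mu, mean_idle=1.0, mean_busy=1.0, seed=1):
    """Time needed to accumulate B units of service from a toy on-off
    service process whose period lengths are scaled by 1/mu."""
    rng = random.Random(seed)
    t = 0.0       # elapsed time
    served = 0.0  # service received so far
    while True:
        idle = rng.expovariate(1.0 / mean_idle) / mu
        if served + idle >= B:
            # The job completes partway through this idle period.
            return t + (B - served)
        served += idle
        t += idle
        # No service is received while the eager subsystem is busy.
        t += rng.expovariate(1.0 / mean_busy) / mu


if __name__ == "__main__":
    B, nu = 1.0, 0.5
    for mu in (1.0, 10.0, 100.0, 10000.0):
        print(mu, time_to_receive(B, mu), "target:", B / nu)
```

For large `mu` the returned time should be close to \(B/\nu _{\tau }\), which is 2 for the default parameters.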

Appendix B: Proof of Theorem 2

This section is devoted to the proof of Theorem 2.

1.1 Stochastic upper bound on residual \({ \epsilon }\)-busy periods

The first step in the proof is the construction of a stochastic upper bound on the residual \({ \epsilon }\)-busy period \({\tilde{\varPsi }}\) starting from any \({ \epsilon }\)-state. Let \(s_{{ \epsilon }}\) denote the state of the \({ \epsilon }\)-subsystem. For the case of bounded eager service requirements, \(s_{{ \epsilon }} = (y,\mathbf{r}) \in \{0,1,\ldots ,K\} \times [0, {\bar{b}}/\mu _{ \epsilon }]^{K}\), for any \(\mu _{ \epsilon }.\) Here, y is the number of \({ \epsilon }\)-jobs present, and the vector \(\mathbf{r}\) holds the residual service requirements of these \({ \epsilon }\)-jobs at the start of \({\tilde{\varPsi }}\). Note that \(\mathbf{r}\) has exactly y positive entries; the remaining \(K-y\) entries are set to zero. For the case of exponential eager service requirements, the \({ \epsilon }\)-state \(s_{{ \epsilon }} = y,\) the number of \({ \epsilon }\)-jobs in the system.

Lemma 6

Assume A.1–3 and B.4. Let \({\tilde{\varPsi }}^{ \mu _{ \epsilon }} (s_{ \epsilon })\) denote the residual \({ \epsilon }\)-busy period starting with \({ \epsilon }\)-state \(s_{{ \epsilon }}.\) One can construct an upper bound \(\varTheta ^{\mu _{ \epsilon }}\), independent of \(s_{{ \epsilon }}\), that almost surely dominates \({\tilde{\varPsi }}^{ \mu _{ \epsilon }},\) i.e.,

uniformly over \(s_{{ \epsilon }}.\) Further, as \(\mu _{{ \epsilon }} \rightarrow \infty \) under the SFJ scaling,

Proof

We begin with the case of bounded service requirements. Let \(\varTheta = \varTheta ^1\) be a random time distributed as the busy period in a fictitious M/G/\(\infty \) system with infinite buffer space (a no-loss system), when started with exactly K users each demanding \({\bar{b}}\) amount of service (see assumption B.4), and seeing the same \({ \epsilon }\)-arrival stream as in our model at scale \(\mu _{{ \epsilon }} = 1.\) Each server in this fictitious system operates at a service rate that equals the lowest possible service rate \(c_{min} > 0\) of \({ \epsilon }\)-jobs under the given \({ \epsilon }\)-scheduling policy. By our special construction, \(\varTheta ^{\mu _{ \epsilon }}:= \varTheta /\mu _{ \epsilon }\) is the busy period of the same system for any arbitrary \(\mu _{ \epsilon },\) with the understanding that the initial residual service requirement of each job equals \({\bar{b}}/\mu _{{ \epsilon }}.\) This immediately implies the statement about almost sure convergence of \(\varTheta ^{\mu _{ \epsilon }}\) under the SFJ limit. The statement about convergence of the kth moment holds so long as \(\mathbb {E}[(\varTheta ^{1})^k] < \infty .\) To show that \(\mathbb {E}[(\varTheta ^{1})^k] < \infty ,\) divide time into blocks of length \(\frac{\bar{b}}{c_{min}}.\) Since \(\frac{\bar{b}}{c_{min}}\) is the maximum time spent by a job in our fictitious system, the busy period ends by the end of the first block in which there is no arrival. Thus, for \(m \ge 2,\)

where \(p := e^{-\lambda _{{ \epsilon }} \frac{\bar{b}}{c_{min}}}\) is the probability of no arrival in any block. Upper bound (16) implies that all moments of \(\varTheta ^{1}\) are bounded.

It is not hard to see that \(\varTheta \) dominates \({\tilde{\varPsi }}^{1};\) indeed, the fictitious system has, at any time, a larger number of \({ \epsilon }\)-jobs, each with a greater residual service requirement, as compared to the original system. While the original system may have losses, the fictitious system does not. One can obtain the required dominance in the almost sure sense when the following coupling rules (see Note 11) are employed: (a) the \({ \epsilon }\)-arrival epochs in the beginning of the \(\varTheta ^{\mu _{ \epsilon }}\) system are exactly the same as those that evolved \({\tilde{\varPsi }}\) in the original system; (b) the job demands of the subsequent customers of the \(\varTheta ^{\mu _{ \epsilon }}\) system are exactly the same as the ones corresponding to the original system; and (c) we complete the construction of \(\varTheta ^{\mu _{ \epsilon }}\) using independent copies of \({ \epsilon }\)-job requirements and inter-arrival times as required. By the above construction, it is clear that \(\varTheta ^{\mu _{ \epsilon }}\) is independent of the initial \({ \epsilon }\)-state \(s_{ \epsilon }= (y, \mathbf{r}).\)

For exponential \({ \epsilon }\)-jobs, similar arguments are applicable, except that we further couple the service times of the first y customers with the (residual) service times of the y customers of the original system. The service times of the remaining \((K-y)\) customers in the upper-bound system are independent copies drawn from the exponential distribution with parameter \(\mu _{ \epsilon }.\) In fact, we replace the (residual) service times of the y customers of the original system with IID copies, and the same realizations are used by the \(\varTheta ^{\mu _{ \epsilon }}\) customers. This does not change the stochastic description of the original system. Thus for exponential \({ \epsilon }\)-jobs, \(\varTheta ^{\mu _{ \epsilon }}\) almost surely upper bounds \({\tilde{\varPsi }}(s_{ \epsilon })\) (for any \(s_{ \epsilon }= y\)) and is independent of the residual service times of the \({ \epsilon }\)-customers present at the start of \({\tilde{\varPsi }}\). \(\square \)
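The block argument used for bounded jobs in the proof of Lemma 6 can be checked sample by sample. The Python sketch below simulates the busy period \(\varTheta \) of the fictitious M/G/\(\infty \) system at scale \(\mu _{ \epsilon }= 1\) and, on the same arrival stream, the end of the first block of length \({\bar{b}}/c_{min}\) that contains no arrival. Several ingredients are assumptions made for illustration only: service requirements of new arrivals are drawn uniformly on \([0, {\bar{b}}]\), every job is served at rate \(c_{min}\), and the names `K`, `b_bar`, `c_min` and `lam` are hypothetical parameters.

```python
import math
import random


def busy_period_and_block_bound(K, b_bar, c_min, lam, rng):
    """Simulate the busy period Theta of a no-loss infinite-server system
    started with K jobs of size b_bar, each served at rate c_min, together
    with the end time of the first arrival-free block of length b_bar/c_min."""
    if K < 1:
        raise ValueError("the fictitious system starts with K >= 1 jobs")
    d = b_bar / c_min       # block length = maximum time a job spends in system
    last_departure = d      # the K initial jobs all depart at time d
    theta = None            # busy period, once determined
    first_empty = None      # index of the first block with no arrival
    next_block = 1          # smallest block index not yet seen to hold an arrival
    t = 0.0
    while theta is None or first_empty is None:
        t += rng.expovariate(lam)  # next Poisson arrival epoch
        if theta is None:
            if t > last_departure:
                theta = last_departure  # system emptied before this arrival
            else:
                size = rng.uniform(0.0, b_bar)  # bounded job size (assumed law)
                last_departure = max(last_departure, t + size / c_min)
        if first_empty is None:
            block = math.floor(t / d) + 1
            if block > next_block:
                first_empty = next_block  # block `next_block` had no arrival
            elif block == next_block:
                next_block += 1
    return theta, first_empty * d


if __name__ == "__main__":
    rng = random.Random(7)
    theta, bound = busy_period_and_block_bound(K=3, b_bar=1.0, c_min=1.0, lam=1.0, rng=rng)
    print("Theta at scale 1:", theta, "block bound:", bound)
    print("Theta at scale mu = 50:", theta / 50.0)
```

Because every job leaves within one block length of its arrival, the simulated busy period never exceeds the end of the first arrival-free block, which is what yields a geometric tail for \(\varTheta ^1\). Dividing the returned busy period by \(\mu _{ \epsilon }\) gives the scaled bound \(\varTheta ^{\mu _{ \epsilon }}\).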

1.2 Ergodicity

The next step in the proof of Theorem 2 is to establish ergodicity of the number of jobs in the tolerant sub-system. For the case of exponential \({ \epsilon }\)-service requirements, ergodicity follows from Mahabhashyam and Gautam (2005). Thus, we focus only on the case of bounded \({ \epsilon }\)-service requirements. As discussed in Sect. 4, we analyse the evolution of the system across departure instants of tolerant jobs, via the Markov process \(Z_n := (X_n, Y_n, \mathbf{R}^s_n).\) The following theorem states that this Markov process is ergodic for \(\mu _{{ \epsilon }}\) large enough.

Theorem 6

Assume A.1–3, B.1–6 and general bounded \({ \epsilon }\)-jobs. There exists a \({\bar{\mu }} < \infty \) such that \(Z_n\) is positive recurrent and aperiodic for every \(\mu _{ \epsilon }\ge {\bar{\mu }}.\) Hence it has a stationary distribution and the state at time n converges to the stationary distribution in total variation norm. Thus we have the convergence of the marginals also, i.e., with \(X_*\) representing the stationary quantity

Proof

Let P(z, .) represent the probability transition kernel of the Markov chain. Consider the Lyapunov function \(V(z) = x\) for all \(z = (x, y, \mathbf{r}) = (x, s_{ \epsilon })\). Note that \(PV(z) = \mathbb {E}[ V(Z_1) | Z_0 = z] \). For any \(x \ge 1\) it is clear from (5) that

By Lemma 8 given below, with \({ \delta } := ( 1- \lambda _{\tau } \mathbb {E}[ B_{\tau }] / \nu _{\tau })/2\) (which is greater than 0 by B.5), there exists a \({\bar{\mu }} < \infty \) such that, for any \(\mu _{ \epsilon }\ge {\bar{\mu }}\):

Thus one can choose a \(\delta > 0\) and a \({\bar{\mu }} < \infty \), such that for all \(\mu _{ \epsilon }\ge {\bar{\mu }}\)

The above negative drift is true for all \(z \in C^c\) where

For any \(z \in C\), i.e., when \(x = 0\) and for any \((s_{ \epsilon })\), again using Lemma 8

By Lemma 7 of “Appendix B”, C is a small set and the chain is aperiodic. Thus, by (Meyn and Tweedie 1993, Theorem 13.0.1, p. 313, Eq. 13.4), we have convergence to the stationary distribution in total variation norm and hence the theorem. \(\square \)

Lemma 7

Set C of (18) is a small set and the Markov chain is aperiodic.

Proof

Let P(z, .) represent the probability transition kernel of the Markov chain. For any event A and any \(z \in C\) (i.e., when \(z = (0, s_{ \epsilon })\)) it is clear that

Starting from any \(z \in C\) one can uniformly lower bound \(Prob ( z, (0,0,\mathbf{0})) \) by the probability of the following event: (a) there are no \({\tau }\) or \({ \epsilon }\) arrivals for a time \(\frac{\bar{b}}{\mu _{ \epsilon }c_{min}}\), by which time all existing \({ \epsilon }\)-customers would have been served (note that \({ \epsilon }\)-jobs have a maximum size of \(\bar{b}/\mu _{ \epsilon },\) and are served at a minimum rate of \(c_{min}\)); (b) the first arrival after time \(\frac{\bar{b}}{\mu _{ \epsilon }c_{min}}\) is a \({\tau }\)-job; (c) there are no \({\tau }\) or \({ \epsilon }\) arrivals for a time \(B_{\tau },\) which is the service requirement of this newly arrived \({\tau }\)-job. Under this event, it is clear that the state of the system after the next \({\tau }\)-departure would be \((0,0,\mathbf{0}).\) Thus for any \(z \in C\),

where \(G_{\tau }\) is the distribution of the \({\tau }\)-service times \(B_{\tau }.\) Thus from (19),

Hence the one-step transition probability measure (19) is lower bounded, uniformly for all \(z \in C\), by a non-trivial measure (not necessarily a probability measure) that concentrates only on \(\{(0,0,\mathbf{0})\}\), and hence C is a small set (see Meyn and Tweedie 1993, p. 109, Eq. (5.14), for the definition of a small set). This also shows that the ‘significant’ atom \(\{(0,0,\mathbf{0})\}\) (see Meyn and Tweedie 1993, p. 103, for the definition of an atom) is aperiodic and hence that the Markov chain is aperiodic. \(\square \)

Lemma 8

Assume A.1-3, B.1-6. Let \(\varUpsilon ^{\mu _{ \epsilon }}\) denote the time required for the tolerant class to receive service \(B_{\tau },\) starting with \({ \epsilon }\)-state \(s_{ \epsilon }.\) There exists a uniform (over initial \({ \epsilon }\)-states) almost sure upper bound \({\bar{\varUpsilon }}^{\mu _{ \epsilon }}\) of \(\varUpsilon ^{\mu _{ \epsilon }},\) such that \({\bar{\varUpsilon }}^{\mu _{ \epsilon }} \rightarrow \frac{B_{{\tau }}}{\nu _{\tau }}\) almost surely as \(\mu _{{ \epsilon }} \rightarrow \infty .\) Moreover,

Proof of Lemma 8

Let \({\varUpsilon }^{\mu _{ \epsilon }} (s_{ \epsilon })\) represent the time taken to serve a \({\tau }\)-customer with job requirement \(B_{\tau }\) (see (6)) when the \({ \epsilon }\)-state at \({\tau }\)-service start equals \(s_{ \epsilon }\). We construct a fictitious upper system such that \({ {\varUpsilon }}^{U, \mu _{ \epsilon }}\), the time taken to serve the same (realization of the) job \(B_{\tau }\) in the upper system, upper bounds \({\varUpsilon }^{\mu _{ \epsilon }} (s_{ \epsilon })\) of the original system uniformly over all \(s_{ \epsilon }\), almost surely. The starting residual \({ \epsilon }\)-busy period \({\tilde{\varPsi }}\) of the original system (for any \(s_{ \epsilon }\)) is replaced by \(\varTheta ^{\mu _{ \epsilon }}\) of Lemma 6 in the upper system, so that,

Zero \({\tau }\)-service is offered during \(\varTheta ^{\mu _{ \epsilon }}\). After \({\tilde{\varPsi }}\) in the original system (respectively \(\varTheta ^{\mu _{ \epsilon }}\) in the upper system), the remaining \(B_{\tau }\) (the entire \(B_{\tau }\) in the upper system) is completed using the server capacity available to the \({\tau }\)-class, with the \({ \epsilon }\)-jobs in both systems driven by the same realizations of random quantities such as further arrival times and \({ \epsilon }\)-job requirements. Further, both systems use the same \({ \epsilon }\)-scheduling policy. This ensures the required almost sure domination (see Note 12).

Thus \({\varUpsilon }^{\mu _{ \epsilon }} ( s_{ \epsilon }) \le {{\varUpsilon }}^{U, \mu _{ \epsilon }} \) for all \(s_{ \epsilon }\) a.s. and hence

It now follows from Theorem 1 and Lemma 6 that \({{\varUpsilon }}^{U, \mu _{ \epsilon }} \rightarrow \frac{B_{{\tau }}}{\nu _{\tau }}\) almost surely as \(\mu _{{ \epsilon }} \rightarrow \infty .\)

Statements about convergence in expectation follow once we show uniform integrability of \(\{{{\varUpsilon }}^{U, \mu _{ \epsilon }} \}_{\mu _{ \epsilon }}\). Define \(\hat{\bar{\varUpsilon }}^{\mu _{ \epsilon }}(\mathbf{0})\) as the time taken to serve \(B_{{\tau }}\) amount of work for the tolerant class, when the \({\tau }\)-customers are served only during \({ \epsilon }\)-idle periods. Note this also implies that the tolerant class service has not started till the first \({ \epsilon }\)-busy period is over in \(\hat{\bar{\varUpsilon }}^{\mu _{ \epsilon }}(\mathbf{0})\). Clearly, \({\varUpsilon }^{\mu _{ \epsilon }} (\mathbf{0}) \le _{\mathrm {a.s.}}\hat{\bar{\varUpsilon }}^{\mu _{ \epsilon }}(\mathbf{0})\) (dominated almost surely). Via exactly the same analysis as in Theorem 1, one can show that

equals the long run fraction of time the \({ \epsilon }\)-queue is idle. Also, because of the simplistic \({\tau }\)-server availability rules and because of memoryless \({ \epsilon }\)-arrivals, one can express

where \(N(B_{{\tau }})\) is the number of \({ \epsilon }\)-arrivals (or \({ \epsilon }\)-interruptions) during a time period of length \(B_{{\tau }}\) and \(\{\varPsi _i \}\) are the resulting \({ \epsilon }\)-busy cycles. Note that these are IID. By the special construction (1) and from Lemma 6 (\(\varPsi _1\) is a partial busy period with \(s_{ \epsilon }= \mathbf{0}\)):

Further by conditioning first on \(B_{{\tau }}\),

Thus, further using (15) of Lemma 6, there exists a \(\bar{\mu }_{ \epsilon }\) large enough such that:

Thus \(\{ {\varUpsilon }^{U, \mu _{ \epsilon }}\}_{{\mu _{ \epsilon }}}\) are uniformly integrable and hence we obtain \(L^1\) convergence. Using analogous arguments, it can be shown that

which implies that \(\{({\varUpsilon }^{U, \mu _{ \epsilon }})^2\}_{{\mu _{ \epsilon }}}\) are uniformly integrable and hence we also obtain \(L^2\) convergence. \(\square \)

1.3 Proof of Theorem 2

We are now ready to prove Theorem 2. The main idea of the proof is that the number of tolerant customers in the system at any time can be bounded from above and below by two M/G/1 systems, such that the performance metrics for the two bounding systems converge to the same value under the SFJ limit.

We begin with construction of the dominating queues for the scenario with bounded \({ \epsilon }\)-jobs. The case with exponential \({ \epsilon }\)-job requirements is considered at the end. The key challenge is to ensure IID service times in the bounding systems. Note that \({\tau }\)-service times in the original system are not in general independent (since successive \({\tau }\)-jobs might begin service within the same \({ \epsilon }\)-busy period).

-

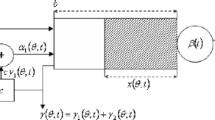

Let \(\varUpsilon ^O\) be defined as the total time period between the service start and the service end of a typical \({\tau }\)-agent (in original system) and we refer this as the effective server time (EST). A typical EST (say \(\varUpsilon ^O_n\)) begins with a residual \({ \epsilon }\)-busy period (call this \({\tilde{\varPsi }}_n\)) and then might span over multiple \({ \epsilon }\)-busy periods, before ending with another partial \({ \epsilon }\)-busy period (call this \({\ddot{\varPsi }}_n\)) (for e.g., see Figure 7). Thus \({\ddot{\varPsi }}_{n}\) and \({\tilde{\varPsi }}_{n+1}\) together form the \({ \epsilon }\)-busy period in between which the nth \({\tau }\)-customer departs (if it does not leave behind an \({\tau }\)-queue empty), which leads to correlations in original system.

- We construct \(\varTheta _n^{\mu _{ \epsilon }}\) (for any arbitrary \(\mu _{ \epsilon }\), n), which almost surely dominates \({\tilde{\varPsi }}_n\) (for any \({ \epsilon }\)-state \(s_{ \epsilon }\) at \({\tau }\)-service start) as described in Lemma 6 of “Appendix B”.

- The \({\tau }\)-service in the original system begins with \({\tilde{\varPsi }}_n\), while that in the two bounding systems begins with \(\varTheta ^{\mu _{ \epsilon }}_n\). During this period the \({\tau }\)-customer in the lower system has access to the complete system capacity (i.e., it is served at rate 1), while in the upper system it has access to zero capacity. After \(\varTheta ^{\mu _{ \epsilon }}_n\) in the bounding systems (respectively after \({\tilde{\varPsi }}_n\) in the original system), the two bounding systems continue with \({\tau }\)-service exactly as in the original system. This continues, at most, over the full \({ \epsilon }\)-busy periods of the original system that the \({\tau }\)-service of the customer under consideration spans completely (Figure 7).

- We continue in the same way for the ‘last’ partial busy period \({\ddot{\varPsi }}_n\), until which the service of the current \({\tau }\)-customer continues (in the original system). From this point onwards, the subsequent \({ \epsilon }\)-inter-arrival times and the service times of the subsequent new \({ \epsilon }\)-arrivals are continued with IID copies. We however couple the departure epochs of the \({ \epsilon }\)-customers (\(Y_{n+1}\) of them) receiving service at the (nth) \({\tau }\)-departure epoch in the original system.

- This ensures that the modified ‘last’ \({ \epsilon }\)-busy period used by the dominating systems is stochastically the same as the usual \({ \epsilon }\)-busy period, because of the memoryless property of the Poisson \({ \epsilon }\)-arrivals. Further, this period is independent of \(\varTheta _{n+1}^{\mu _{ \epsilon }}\) (constructed by coupling the subsequent \({ \epsilon }\)-inter-arrival times and service times in Lemma 6), which dominates \({\tilde{\varPsi }}_{n+1}\) of the next \({\tau }\)-customer.

- The span of the time during which the \({\tau }\)-job is completed, in each system, becomes the service time of the corresponding customer in the respective dominating systems.

- The \({\tilde{\varPsi }}_{n+1}\) of any customer can be correlated with \({\ddot{\varPsi }}_{n}\) of the previous customer in the original system. However, after \({\ddot{\varPsi }}_{n}\), the \({ \epsilon }\)-busy period of the previous customer is continued using independent quantities in the dominating systems, except for the departure epochs of the \({ \epsilon }\)-customers existing at the \({\tau }\)-departure, and \(\varTheta _{n+1}^{\mu _{ \epsilon }}\), which dominates \({\tilde{\varPsi }}_{n+1}\) of the next customer, is independent of these \({ \epsilon }\)-departure epochs. Thus correlations are avoided in the dominating systems and hence they are M/G/1 queues.

- To summarize, by way of the construction, the IID service times in the dominating queues equal:

$$\begin{aligned} \varUpsilon ^U= & {} \varTheta ^{\mu _{ \epsilon }} + \varUpsilon (\mathbf{0}, B_{\tau }) , \\ \varUpsilon ^O= & {} \varUpsilon (s_{ \epsilon }, B_{\tau }) \hbox { and } \\ \varUpsilon ^L= & {} \min \Bigg \{ \varTheta ^{\mu _{ \epsilon }}, B_{\tau }\Bigg \} + \varUpsilon ( \mathbf{0},[(B_{\tau }- \varTheta ^{\mu _{ \epsilon }}) \mathbb {1}_{\{ B_{\tau }> \varTheta ^{\mu _{ \epsilon }} \}}]) , \end{aligned} \tag{22}$$

where \(\varUpsilon (s_{ \epsilon }, b)\) represents the time taken to complete job requirement b, when the \({ \epsilon }\)-state at \({\tau }\)-service start is \(s_{ \epsilon }.\)

- The service time \(\varUpsilon ^L\) in the lower system (with the same realization of \(B_{\tau }\)) is less than or equal to \(\varUpsilon ^O\), because the \({\tau }\)-customer in the lower system receives service at the maximum rate during the (bigger) interval \(\varTheta ^{\mu _{ \epsilon }},\) as compared to the time-varying rate (which is less than or equal to the maximum rate) at which service is offered in the original system during the (smaller) interval \({\tilde{\varPsi }}\). After \({\tilde{\varPsi }}\) and \(\varTheta ^{\mu _{ \epsilon }}\) (respectively), the service rate available to the \({\tau }\)-customer evolves in exactly the same way in both systems. Thus, the customer of the lower system leaves no later than that of the original system.

- Similarly, the service time \(\varUpsilon ^U\) in the upper system is greater than or equal to \(\varUpsilon ^O.\) There is a possibility that service is not completed in the same \({ \epsilon }\)-busy period (which was modified for the bounding system) in the upper system as in the original system. If so, the rest of the service is completed using independent copies of \({ \epsilon }\)-busy periods as required.

Thus the departure instants of \({\tau }\)-customers are delayed in the upper system in comparison with those of the original system almost surely, while they occur sooner in the lower system almost surely. Hence the numbers of customers at time t in the three systems satisfy:

This implies the following dominance at \({\tau }\)-departure epochs

Stationary performance

Let \(X^m_*\) (with \(m = U\) or L or O) be the random variable distributed as the stationary number in the system, if the corresponding stationary distribution exists. The upper system may not be stable for all \(\mu _{ \epsilon }\) because of \(\varTheta ^{\mu _{ \epsilon }}\). By Lemma 9 of “Appendix B” and B.5, the upper system is stable (i.e., \(\lambda _{\tau }\mathbb {E}[\varUpsilon ^U] < 1\)) for all large enough \(\mu _{ \epsilon }\). Consider larger \(\mu _{ \epsilon }\), if required, for which the original system is also stationary as given by Theorem 6. Thus, for large enough \({\mu _{ \epsilon }}\), we have the weak convergence

The three weak convergence results, along with the stochastic domination (see Footnote 13) given by (24), give the following for any large enough \(\mu _{ \epsilon }< \infty \), by Shaked and Shanthikumar (2007, Theorem 1.A.3(c), p. 6):

Convergence in distribution It follows from (25) that the moment generating functions (MGFs) corresponding to \(X_*^L,\) \(X_*^O,\) and \(X_*^U\) satisfy the following relation (see Footnote 14) for \(z \in (0,1).\)

Now, as \(\mu _{{ \epsilon }} \rightarrow \infty ,\) Lemma 9 below implies that \(\varUpsilon ^U, \varUpsilon ^L {\mathop {\rightarrow }\limits ^{d}}\frac{B_{{\tau }}}{\nu _{{\tau }}}.\) It follows from the continuity theorem for Laplace transforms (see Feller 1972, Theorem 2, Chapter 13.1) that the corresponding Laplace transforms satisfy, for \(s > 0,\)

Given the representation of the MGF of the steady state number in system of an M/G/1 queue in terms of the Laplace transform of the job size distribution (see Harchol-Balter 2013, Chap. 26), it follows that

where \(X_*\) denotes the stationary number in system corresponding to an M/G/1 queue with arrival rate \(\lambda _{{\tau }}\) and job sizes \(\frac{B_{{\tau }}}{\nu _{{\tau }}}.\) We now conclude from (26) that

which implies, from the continuity theorem for MGFs (see Feller 1968, Sect. 11.6) that \(X_*^O {\mathop {\rightarrow }\limits ^{d}}X_*.\)
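The transform argument above can be checked numerically. The following is a minimal sketch, not the paper's construction: it uses the standard Pollaczek-Khinchine transform for the number in an M/G/1 queue, and it models the vanishing perturbation of the service time by a hypothetical independent exponential delay with rate `mu` added to an exponential job of rate 2 (standing in for \(B_{\tau }/\nu _{\tau }\)). All function names and parameter values are illustrative assumptions.

```python
def mg1_number_pgf(z, lam, mean_service, service_lst):
    """E[z^X] for the stationary number in an M/G/1 queue
    (standard Pollaczek-Khinchine transform); requires rho < 1."""
    rho = lam * mean_service
    lst = service_lst(lam * (1.0 - z))
    return (1.0 - rho) * (1.0 - z) * lst / (lst - z)


def limit_lst(s):
    # Assumed limit service time: exponential with rate 2.
    return 2.0 / (2.0 + s)


def perturbed_pgf(z, lam, mu):
    # Hypothetical perturbation: limit service time plus an independent
    # Exp(mu) delay, so the Laplace transform is a product.
    mean_service = 0.5 + 1.0 / mu
    return mg1_number_pgf(
        z, lam, mean_service, lambda s: limit_lst(s) * mu / (mu + s)
    )


if __name__ == "__main__":
    lam, z = 1.0, 0.5
    target = mg1_number_pgf(z, lam, 0.5, limit_lst)
    for mu in (10.0, 100.0, 1000.0, 1e6):
        print(mu, abs(perturbed_pgf(z, lam, mu) - target))
```

As `mu` grows, the perturbed Laplace transform converges pointwise to the limit transform, and the transform of the number in system follows, which is the mechanism used in the sandwich argument.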

Convergence in expectation

Similarly, it follows from (25) that

From Lemma 9 below, we have, as \(\mu _{ \epsilon }\rightarrow \infty \), the job requirements of the two bounding queues, satisfy

Thus the stationary expected number in the system with \(\mu _{ \epsilon }\) in the two bounding systems converges to the stationary performance \( \mathbb {E}[X_{*}] \) of the M/G/1 system with the same arrival rate and with service times \(B_{\tau }/\nu _{\tau }\):

Hence the performance of both dominating systems converges to the same constant, and so the sandwiched performance of the original system from (27) also converges to

This completes the proof for bounded \({ \epsilon }\)-jobs, once we observe that the X(t) performance of the M/G/1 system with service times \(B_{\tau }/ \nu _{\tau }\) and a unit-rate server is the same as that of the M/G/1 system with service times \(B_{\tau }\) and a \(\nu _{\tau }\)-rate server.

For exponential \({ \epsilon }\)-jobs, the construction is almost the same, except that we need not couple the departure epochs of \({ \epsilon }\)-customers present at the end of \({\ddot{\varPsi }}\) in the dominating systems. This is because these departure epochs occur after exponentially distributed times (by the memoryless property). More importantly, we replace these departure epochs in \({\tilde{\varPsi }}\) corresponding to the next customer of the original system by an IID copy and further couple the same with the \(\varTheta ^{\mu _{ \epsilon }}\) construction. This does not change the stochastic description of the original system. At the start of each \({\tau }\)-service at state \(s_{{ \epsilon }} = y\) in the original system, we construct \(\varTheta ^{\mu _{ \epsilon }}\) (see Lemma 6) using K (fresh) IID exponential random variables to capture the residual service requirements. The residual service requirements of the y \({ \epsilon }\)-jobs in the original system are taken to be the first y of the same random variables. This coupling ensures that \(\varTheta ^{\mu _{ \epsilon }}\) almost surely dominates the residual \({ \epsilon }\)-busy period in the original system, and is additionally independent of any previous upper bound constructions in the same \({ \epsilon }\)-busy period. This ensures that the service requirements in the two bounding systems remain IID. The rest of the proof follows along exactly similar lines. \(\square \)
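The convergence in expectation can be illustrated with the standard Pollaczek-Khinchine mean-value formula, \(\mathbb {E}[X] = \rho + \lambda ^2 \mathbb {E}[S^2] / (2(1-\rho ))\). The sketch below is illustrative only: the perturbed service time (an exponential job of rate 2 plus an independent exponential delay of rate `mu`) is a hypothetical stand-in for the service times of the bounding systems, and the function names are assumptions.

```python
def mg1_mean_number(lam, m1, m2):
    """Mean stationary number in an M/G/1 queue with arrival rate lam and
    service-time moments m1 = E[S], m2 = E[S^2] (requires lam * m1 < 1)."""
    rho = lam * m1
    return rho + lam ** 2 * m2 / (2.0 * (1.0 - rho))


def perturbed_moments(mu):
    # Hypothetical service time S = B' + D with B' ~ Exp(2), D ~ Exp(mu),
    # independent: E[S] = 1/2 + 1/mu, E[S^2] = 1/2 + 1/mu + 2/mu^2.
    m1 = 0.5 + 1.0 / mu
    m2 = 0.5 + 1.0 / mu + 2.0 / mu ** 2
    return m1, m2


if __name__ == "__main__":
    lam = 1.0
    limit_mean = mg1_mean_number(lam, 0.5, 0.5)  # Exp(2) service time
    for mu in (10.0, 100.0, 1000.0, 1e6):
        m1, m2 = perturbed_moments(mu)
        print(mu, mg1_mean_number(lam, m1, m2), limit_mean)
```

Because the formula is continuous in the first two service-time moments, convergence of those moments is enough for the mean number in system to converge, which is why Lemma 9 tracks exactly these two moments.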

Lemma 9

For the upper and lower bounding system constructed in the proof of Theorem 2, as \(\mu _{{ \epsilon }} \rightarrow \infty ,\)

Proof of Lemma 9

For any \({ \epsilon }\)-state at the start of \({\tau }\)-service, almost sure convergence of \(\varUpsilon ^U, \ \varUpsilon ^L\) under the SFJ limit follows from Theorem 1 and Lemma 6.

Convergence of the first and second moments of \(\varUpsilon ^U, \ \varUpsilon ^L\) under the SFJ limit follows from the uniform integrability arguments in the proof of Lemma 8. \(\square \)

Appendix C: Proofs for Theorem 3, workload pseudo-conservation

Proof of Theorem 3

By PASTA, the workload seen by the arriving customers equals the stationary workload. Hence, to study the \({\tau }\)-workload, we consider an alternate embedded Markov chain of the original system, namely the one observed at \(\tau \)-arrival instants. We also consider an additional Markov chain, the one defined in assumption W.2. This chain is updated at every \({ \epsilon }\)-arrival/departure epoch and hence evolves at a much faster rate than the former chain representing the \({\tau }\)-state. Further, the \(\{\zeta _n\}_n\) chain is defined with \(\mu _{ \epsilon }= 1\); however, by Eqs. (1) and (3), it also represents the Markov chain for any \(\mu _{ \epsilon }\), when the residual \({ \epsilon }\)-job size component of the \({ \epsilon }\)-state is scaled down by \(1/\mu _{ \epsilon }\). With exponential \({ \epsilon }\)-jobs its state space is finite, i.e., \(\zeta \in \{0, 1, \ldots , K\}\), and hence the uniform rate of convergence required by W.2 is immediate. With bounded \({ \epsilon }\)-jobs \(\zeta \in \{0, 1, \ldots , K\} \times [0, {\bar{b}}]^K\), i.e., we have a compact state space.

Theorem 1 is again applicable. We obtain the ergodicity as well as the convergence in distribution/mean, both by establishing geometric ergodicity of the alternate embedded Markov chain, just discussed. Let \(U_n\) represent the workload seen by nth \({\tau }\)-arrival and redefine \( (Y_n, \mathbf{R}^s_n)\) to again represent the \({ \epsilon }\)-number and the \({ \epsilon }\)-residual service times vector respectively, but now at \({\tau }\)-arrival instances and we study the redefined Markov chain \(Z_n = (U_n, Y_n, \mathbf{R}^s_n)\). Firstly

where the start of \(\varOmega _n^{\mu _{ \epsilon }} \) (the total server capacity available to \({\tau }\)-customer for a duration \(A_{{\tau }, n+1} \)) is dictated by \((Y_n, \mathbf{R}^s_n)\), \(B_{{\tau },n}\) is the service time of nth \({\tau }\) customer and \(A_{{\tau }, n+1}\) is the inter-arrival time before the \((n+1)\)th \({\tau }\) arrival.
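The workload chain can be simulated directly in the limit system. The sketch below assumes the Lindley-type update \(U_{n+1} = (U_n + B_{{\tau }, n} - \varOmega )^+\), which is the form suggested by the definitions of \(\xi ^\mu \) and \(\xi ^\infty \) in the proof of Lemma 10, with \(\varOmega = \nu _{\tau }A_{{\tau }, n+1}\) as in the limit system. Exponential job sizes and inter-arrival times, and all names and parameter values, are illustrative assumptions.

```python
import random


def simulate_mean_workload(lam, mean_b, nu, n, seed=0):
    """Average workload seen by tau-arrivals in the (assumed) limit system,
    where a capacity nu * A is available over an inter-arrival time A."""
    rng = random.Random(seed)
    u = 0.0
    total = 0.0
    for _ in range(n):
        total += u                       # workload seen by this arrival
        b = rng.expovariate(1.0 / mean_b)  # job size of this arrival
        a = rng.expovariate(lam)           # time until the next arrival
        u = max(u + b - nu * a, 0.0)       # Lindley-type update
    return total / n


def mean_workload_formula(lam, m1_b, m2_b, nu):
    # Pollaczek-Khinchine mean for an M/G/1 queue with job sizes B served
    # at rate nu, expressed in units of work rather than time.
    rho = lam * m1_b / nu
    return lam * m2_b / (2.0 * nu * (1.0 - rho))


if __name__ == "__main__":
    print(simulate_mean_workload(1.0, 1.0, 2.0, 200_000))
    print(mean_workload_formula(1.0, 1.0, 2.0, 2.0))
```

With Poisson arrivals the arrival averages coincide with time averages (PASTA), so the simulated value should be close to the closed-form mean.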

Lyapunov function By Lemma 10 given below, for any given \(\delta > 0\), one can choose \({\bar{\mu }}, {\bar{u}} \) such that for any \(\mu _{ \epsilon }\ge {\bar{\mu }}\), \(u \ge {\bar{u}}\) and for any \(s_{ \epsilon }\):

The above is true for any \(1 < a \le {\bar{a}}\) by W.1, however \({\bar{u}}\), \({\bar{\mu }}\) can depend upon \(a, \delta \).

Consider \(V(z) = V(u) = a^u\) and we will choose a suitable \({\bar{a}} \ge a > 1\), to obtain the appropriate Lyapunov function. Consider any such a, any \(\delta > 0\), and \(u > {\bar{u}} (a, \delta )\), \(\mu _{ \epsilon }\ge {\bar{\mu }}(a, \delta )\). Then, with \(P(\centerdot , \centerdot )\) being the transition probability kernel and \(PV(z) = \mathbb {E}_z^{\mu _{ \epsilon }}[ V (U_1, Y_1, \mathbf{R}^s_1)]= \mathbb {E}_z^{\mu _{ \epsilon }}[ V (U_1)]\), we have

One can choose an a in a neighbourhood of 1 such that \(g(a) < 1\) for the following reasons:

Basically, the derivative is less than 0 and is continuous in a, and hence remains negative in some neighbourhood of \(a=1.\) Thus \(g(\centerdot )\) is decreasing beyond \(a = 1\) in a neighbourhood of \(a=1.\) Thus choose an \(a \le {\bar{a}}\) for which \( g(a) < 1\), and further choose a \(\delta > 0 \) such that \( g(a) <1 - \delta \). By Lemma 10 choose \({\bar{u}}, {\bar{\mu }}\) for this \((\delta , a)\); then the geometric drift condition is satisfied with \(\beta := g(a) + \delta < 1 \) because,

Note that \(K_\Delta \) and \( \beta \) are the same for every \(\mu _{ \epsilon }\ge {\bar{\mu }}.\)
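The choice of a can be made concrete in a simple case. The definition of \(g\) is not reproduced in this section, so the sketch below assumes \(g(a) = \mathbb {E}[a^{B_{\tau }- \nu _{\tau }A_{\tau }}]\), which is consistent with \(g(1) = 1\) and with a negative derivative at \(a = 1\) whenever \(\mathbb {E}[B_{\tau }] < \nu _{\tau }\mathbb {E}[A_{\tau }]\). Exponential job sizes (rate `m`) and inter-arrival times (rate `lam`) are further illustrative assumptions.

```python
import math


def g(a, lam, m, nu):
    """Assumed drift factor E[a^(B - nu*A)] with B ~ Exp(m), A ~ Exp(lam),
    independent; finite only when ln(a) < m."""
    t = math.log(a)
    if t >= m:
        raise ValueError("E[a^B] is infinite for ln(a) >= m")
    return (m / (m - t)) * (lam / (lam + nu * t))


if __name__ == "__main__":
    lam, m, nu = 1.0, 1.0, 2.0      # load lam / (m * nu) = 0.5
    h = 1e-6
    slope_at_one = (g(1.0 + h, lam, m, nu) - g(1.0, lam, m, nu)) / h
    print("g'(1) ~", slope_at_one)  # close to E[B] - nu * E[A] = -1
    a = 1.1
    delta = (1.0 - g(a, lam, m, nu)) / 2.0
    print("g(a) =", g(a, lam, m, nu), "beta =", g(a, lam, m, nu) + delta)
```

In this example any \(\delta \) strictly below \(1 - g(a)\) gives \(\beta = g(a) + \delta < 1\), matching the way \(\delta \) is selected after a in the argument above.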

Small set We prove that one of the following sets is a small set:

The embedded \({ \epsilon }\)-Markov chain \(\{ \zeta _n \}_n\) changes at every \({ \epsilon }\)-arrival/departure epoch. Thus if the number of arrivals increases, the number of transitions increases to infinity, and the chain then converges to the stationary distribution \(\pi ^*\) given by W.2. Further, as already mentioned, by equations (1) and (3), it also represents the Markov chain for any \(\mu _{ \epsilon }.\) Let \(S^{\mu _{ \epsilon }}\) represent the state of the \({ \epsilon }\)-Markov process immediately after the last \({ \epsilon }\)-change before the \({\tau }\)-arrival instant, \(A_{\tau }\). Note that \(S^{\mu _{ \epsilon }} = (Y_1, \mathbf{R}^s_1).\) As \(\mu _{ \epsilon }\rightarrow \infty \), the number of \({ \epsilon }\)-arrivals within one \({\tau }\)-inter-arrival time, \(N^{ \epsilon }(A_{\tau }) \rightarrow \infty \). Hence by W.2 as \(\mu _{ \epsilon }\rightarrow \infty \) (rate of convergence independent of \(s_{ \epsilon }\)),

Choose further large \({\bar{\mu }}\) such that:

For all such \(\mu _{ \epsilon }\), when \(z = (u, s_{ \epsilon }) \in \mathcal{C}\) (see (29)), i.e., when \(u \le {\bar{u}}\):

As in Lemma 8 one can show that

and hence, if required by choosing even larger \({\bar{\mu }}\), we have

Thus in all, \( \mathcal{C}\) is a small set with uniform lower bound measure as below for all \(\mu _{ \epsilon }\ge {\bar{\mu }}\):

where measure \(\gamma (.)\) is a Dirac measure at \((0, 0, \mathbf{0})\), i.e.,

Without loss of generality start with \(U_0 = 0\) and note \(V(0) = 1\). By (Baxendale 2005, Theorem 1.1), with \(\mathcal{P}\) representing the transition function of \(\{Z_n \}_n\): a) there exists a unique stationary distribution \(\pi ^*_{\tau }\); and b)

where the constants \(r < 1\) and \(C < \infty \) are the same for all \(\mu _{ \epsilon }\ge {\bar{\mu }}\) (because the constants \(\kappa ^\infty \), \(\beta \), \(K_\Delta \) are the same for all such \(\mu _{ \epsilon }\)), and for any function \(\phi \) that is dominated by V:

In particular we are interested in stationary average, i.e., when \(\phi (u) = u\). Consider the function

It is clear that \(h'(u) > 0\) only for \(0 \le u \le {\bar{u}}\), where \(a^{{\bar{u}}} = 1/ \ln (a)\), and \(h'(u) < 0\) for \(u > {\bar{u}}.\) Thus eventually V(u) dominates and hence \(\phi (u) = u\) satisfies (30) and hence we have

Note that the above upper bounds are uniform for all \(\mu _{ \epsilon }\ge {\bar{\mu }}\) and for all \(s_{ \epsilon }\) and we always start with \(u=0\). And then we have for any \(s_{ \epsilon }\) and n

Given an \(\varepsilon >0\) choose \(n_\varepsilon \) large enough such that the sum of the first two terms is less than \(\varepsilon /2\) (for any \(\mu _{ \epsilon }\ge {\bar{\mu }} \) and for any \(s_{ \epsilon }\)), further using ergodicity of the limit system represented by evolution:

Finally for this \(n = n_\varepsilon \),

Using similar arguments to those in the proof of Theorem 1, it can be shown that \(\varOmega ^{\mu _{ \epsilon }} ( A_{{\tau }, k}) \rightarrow \nu _{\tau }A_{{\tau }, k}\) almost surely as \(\mu _{ \epsilon }\rightarrow \infty \), for each k. Moreover, \(\varOmega ^{\mu _{ \epsilon }} (A_{{\tau }, k}) \le A_{{\tau }, k},\) with \(\mathbb {E}\left[ A_{{\tau }, k}\right] < \infty ,\) implying we also have uniform integrability:

It follows that for \(\mu _{{ \epsilon }}\) large enough,

Since \(\varepsilon \) is arbitrary, this proves the convergence in expectation.

Choosing \(\phi (u) := z^u\) (for any \(0< z < 1\)) in (30) we obtain the convergence of MGF and hence the convergence in distribution as in the proof of Theorem 2. \(\square \)

Lemma 10

Consider any \(1< a <{\bar{a}}\) of W.1. Let \(\varOmega ^\mu _{n+1} := \varOmega _n^\mu (A_{{\tau }, n+1} )\), be the server capacity totally available to \({\tau }\)-customer for time duration \(A_{{\tau }, n+1}\). Let \(\varOmega ^\infty _{n+1} := A_{{\tau }, n+1} \nu _{\tau },\) be the total server capacity available to \({\tau }\)-customer in time \(A_{{\tau },n+1}\) for the limit system. For any given \(\varepsilon > 0\), there exists a \({\bar{\mu }} < \infty \) and \({\bar{u}} < \infty \) such that for any \(\mu _{ \epsilon }\ge {\bar{\mu }}\) and for any \(u \ge {\bar{u}}\) we have

Proof

By Theorem 5, Eq. (13) and exactly as in the proof of Theorem 1 one can prove that \( \varOmega ^\mu _{n+1} \rightarrow \varOmega ^\infty _{ n+1} \hbox { almost surely } \hbox { as } \mu \rightarrow \infty .\) Further as in Lemma 8 of “Appendix B”, for any given \(\varepsilon > 0\), there exists a \({\bar{\mu }} < \infty \) such that for any \(\mu \ge {\bar{\mu }}\) (we use shorter notation \(\mu =\mu _{ \epsilon }\) and it is only meant for \({ \epsilon }\)-customers) and any u

Define \(\xi ^\mu := \left( u + B_{{\tau }, n} - \varOmega ^\mu _{ n+1} \right) ^+ \), \(\xi ^\infty := \left( u + B_{{\tau }, n} - \varOmega ^\infty _{ n+1} \right) ^+ \) and then for any \(C < \infty \):

Thus, using Markov's inequality,

if a sufficiently large \({\bar{\mu }}\) is chosen by (32). Now

In the above, the last term is bounded in this way because, as \(u \rightarrow \infty \), by integrability:

\(\square \)

Appendix D: Proofs for eager customers with limited patience

Lemma 11

When A.3 is replaced by A.3\('\) and if additionally one of the assumptions of A.4 is satisfied, one can construct an upper bound \(\varTheta ^{\mu _{ \epsilon }}\) which dominates any partial \({ \epsilon }\)-busy period uniformly over all \({ \epsilon }\)-states \(s_{ \epsilon }= (y_s, y_w, \mathbf{r})\) and converges to zero as \(\mu _{ \epsilon }\rightarrow \infty \) exactly as in Lemma 6.

Proof

The construction of \(\varTheta ^{\mu _{ \epsilon }} \) is the same for the first two conditions and is similar (see Footnote 15) to that in Lemma 6. We first define \(\varTheta ^{1} \) and then set \(\varTheta ^{\mu _{ \epsilon }} = \varTheta ^{1} / \mu _{ \epsilon }.\) Consider a fictitious \(M/G/\infty \) (infinite server) queue started with K initial customers, whose service times are given by: a) \({\tilde{b}}^{1}\), the upper bound on the time spent by any \({ \epsilon }\)-customer in the system (with \(\mu _{ \epsilon }= 1\)), when A.4(a) is satisfied; b) the sum \(\Gamma ^1 + B_{ \epsilon }\), where \(\Gamma ^{\mu _{ \epsilon }}\) is the reneging time, when A.4(b) is satisfied. Then \(\varTheta ^1\) is the busy period of the above fictitious queue. By using appropriate coupling rules as in Lemma 6, \(\varTheta ^{\mu _{ \epsilon }} \) almost surely dominates \({\tilde{\varPsi }}(s_{ \epsilon })\) for any initial state \(s_{ \epsilon }\). This is because the fictitious \(M/G/\infty \) queue again accepts all the arrivals, and any customer accepted in the original system spends a longer time in the fictitious \(M/G/\infty \) queue.

We first observe a few simple facts. The busy period \(\mathcal{B}_K\) of an \(M/G/\infty \) queue started with K customers and with general IID service times \(\{ B_{G, n}\}_n\) is almost surely no larger than the busy period \({\hat{\mathcal{B}}}_1\) of an upper \(M/G/\infty \) queue started with 1 customer, whose IID service times are given by the maximum of K independent service times of the original \(M/G/\infty \) queue, i.e., the service time of the nth customer in the upper \(M/G/\infty \) queue equals \(\hat{B}_{G, n} := \max _{1 \le j \le K } B_{G, (n-1)K+j}\). Therefore (Takács (1962)),

In a similar way

Thus the first two moments of \(\varTheta ^1\) are bounded. Hence the conclusions of Lemma 6 are true under A.4(a) and A.4(b).
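The scaling \(\varTheta ^{\mu _{ \epsilon }} = \varTheta ^{1} / \mu _{ \epsilon }\) can be illustrated with a small simulation of an \(M/G/\infty \) busy period started with a single customer. The sketch is illustrative and is not the coupled construction of the proof: it uses exponential service times and checks the classical mean \((e^{\lambda \mathbb {E}[S]} - 1)/\lambda \), which follows from the idle probability \(e^{-\lambda \mathbb {E}[S]}\) of the infinite-server queue. All names and parameter values are assumptions.

```python
import math
import random


def simulate_busy_period(lam, draw_service, rng):
    """One busy period of an M/G/infinity queue started with one customer."""
    end = draw_service(rng)        # departure time of the initial customer
    t = rng.expovariate(lam)       # first arrival epoch
    while t < end:                 # arrival lands inside the busy period
        end = max(end, t + draw_service(rng))
        t += rng.expovariate(lam)
    return end


def mean_busy_period(lam, mean_service):
    # Classical mean busy period of an M/G/infinity queue.
    return (math.exp(lam * mean_service) - 1.0) / lam


def scaled_mean_busy_period(lam, mean_service, mu):
    # Arrival rate lam * mu and service times S / mu: time is rescaled by
    # 1 / mu, so the mean busy period is the unscaled mean divided by mu.
    return mean_busy_period(lam * mu, mean_service / mu)


if __name__ == "__main__":
    rng = random.Random(0)
    n = 50_000
    est = sum(
        simulate_busy_period(1.0, lambda r: r.expovariate(1.0), rng)
        for _ in range(n)
    ) / n
    print(est, mean_busy_period(1.0, 1.0))
    for mu in (1.0, 10.0, 100.0):
        print(mu, scaled_mean_busy_period(1.0, 1.0, mu))
```

Since the offered load \(\lambda \mathbb {E}[S]\) is unchanged by the scaling, the mean busy period shrinks like \(1/\mu \), in line with the bound converging to zero as \(\mu _{ \epsilon }\rightarrow \infty \).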

When A.4(c) is satisfied Here \(\varTheta ^{\mu _{ \epsilon }} \) is the busy period of a fictitious \(M/G/K_1/2K\) queue started with K customers. We couple the inter-arrival times, job sizes, etc., in both systems as before.

Consider the system with 2K servers (each of the same capacity as before), started with K \({ \epsilon }\)-customers, in which: (a) if \(y_s\) \({ \epsilon }\)-customers are receiving service at the beginning of \({\tilde{\varPsi }}(s_{ \epsilon })\), the service times of those \(y_s\) customers also equal the service time requirements of the first \(y_s\) customers of the 2K system, and these are independent of the residual service times (see Footnote 16); (b) the service times of the remaining \((K_1-y_s)\) \({ \epsilon }\)-customers are independent copies of the exponential random variable with the same parameter; (c) further, the inter-arrival times and service times of all the new \({ \epsilon }\)-customers coincide with those in the original system; and (d) if a customer is not accepted in the original system, we consider an independent service time for that customer. With this construction, an \({ \epsilon }\)-customer departure during \({\tilde{\varPsi }}(s_{ \epsilon })\) in the original system also marks a departure in the 2K system, and any customer accepted in the original system is also accepted in the 2K system (it has double the holding capacity, 2K). Thus the busy period \(\varTheta ^{\mu _{ \epsilon }}\) of the 2K system dominates the residual \({ \epsilon }\)-busy period \({\tilde{\varPsi }}(s_{ \epsilon })\), irrespective of the state \(s_{ \epsilon }= (y_s, y_w)\) of the original system at the start of \({\tilde{\varPsi }}(s_{ \epsilon })\).

The rest of the arguments are as in Lemma 6. \(\square \)


Kavitha, V., Nair, J. & Sinha, R.K. Pseudo conservation for partially fluid, partially lossy queueing systems. Ann Oper Res 277, 255–292 (2019). https://doi.org/10.1007/s10479-018-2780-8
