Abstract

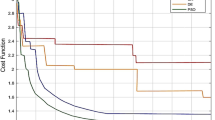

Echo state networks (ESNs), which rely on a very simple, linear learning algorithm, are a new approach to training recurrent neural networks. These networks have recently attracted considerable interest for their ability to model nonlinear dynamical systems. In previous studies, the largest absolute eigenvalue of the reservoir connectivity matrix (the spectral radius) has been used as a predictor of stable network dynamics, but recent evidence shows that the stability criteria of such networks change when the reservoir contains substructures such as clusters. Other studies have shown that small-world and scale-free characteristics improve the approximation ability of ESNs. In this paper, we use three classic clustering algorithms, K-means (C-centroid), Partitioning Around Medoids (PAM), and Ward's algorithm, to cluster the internal neurons. We then refer to the mean node of each cluster as its backbone unit and to the other neurons in the cluster as local neurons. The neurons are connected so that the resulting networks have a small-world topology and their neurons follow a power-law distribution. First, we show that the resulting clustered networks share several characteristics of biological neural systems, such as a power-law distribution, the small-world property, community structure, and a distributed architecture. To investigate the prediction power and the spectral-radius range of the resulting networks, we apply the new ESNs to the Mackey-Glass dynamical system and to the laser time-series prediction problem, and we compare the results with previous work. We then evaluate the echo state property of the proposed networks and their performance in approximating highly complex nonlinear dynamical systems, relative to previous approaches. The results show that the proposed methods outperform previous ones in the prediction accuracy of chaotic time series.
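The abstract describes the reservoir construction only in words. As a rough illustration, the following NumPy sketch builds a clustered reservoir in that spirit and trains a standard ESN on a Mackey-Glass series; it is not the authors' SCESN, SPESN, or SWESN construction. Every detail below is a hypothetical assumption rather than something taken from the paper: neurons are clustered with plain K-means on random 2-D coordinates (PAM or Ward's algorithm would replace the `kmeans` call); each backbone unit is the member nearest its cluster mean; local neurons are linked to their own backbone and sparsely to each other, while backbone units are fully interconnected; the weights are rescaled to a target spectral radius; the Mackey-Glass parameters (tau = 17, beta = 0.2, gamma = 0.1, exponent 10) are common textbook values; and the readout is fitted by ridge regression for one-step-ahead prediction. This wiring rule is not guaranteed to reproduce the small-world and power-law properties reported in the paper.

```python
import numpy as np


def kmeans(points, k, iters=50, rng=None):
    """Plain Lloyd's K-means on the rows of `points`; returns (labels, centroids)."""
    rng = np.random.default_rng() if rng is None else rng
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(iters):
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)  # nearest centroid for every point
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:  # leave a centroid in place if its cluster empties
                centroids[j] = members.mean(axis=0)
    return labels, centroids


def clustered_reservoir(n=200, k=8, p_local=0.1, spectral_radius=0.9, seed=0):
    """Hypothetical clustered reservoir: backbone units link clusters, local neurons stay inside.

    With the update x <- tanh(W x + ...), W[i, j] is the weight from neuron j to neuron i.
    """
    rng = np.random.default_rng(seed)
    positions = rng.random((n, 2))  # ASSUMPTION: random 2-D coordinates are the clustering features
    labels, centroids = kmeans(positions, k, rng=rng)
    mask = np.zeros((n, n), dtype=bool)
    backbones = []
    for j in range(k):
        members = np.flatnonzero(labels == j)
        if members.size == 0:
            continue
        # ASSUMPTION: the backbone unit is the member closest to the cluster mean.
        b = members[np.linalg.norm(positions[members] - centroids[j], axis=1).argmin()]
        backbones.append(b)
        mask[members, b] = True  # backbone -> local neurons
        mask[b, members] = True  # local neurons -> backbone
        # ASSUMPTION: sparse random wiring among neurons of the same cluster.
        mask[np.ix_(members, members)] |= rng.random((members.size, members.size)) < p_local
    mask[np.ix_(backbones, backbones)] = True  # fully connect the backbone units
    np.fill_diagonal(mask, False)  # no self-loops
    W = np.where(mask, rng.uniform(-1.0, 1.0, (n, n)), 0.0)
    # Rescale so the largest absolute eigenvalue equals the target spectral radius.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    return W, labels, np.array(backbones)


def mackey_glass(length, tau=17.0, beta=0.2, gamma=0.1, power=10, dt=0.1, x0=1.2):
    """Mackey-Glass series dx/dt = beta*x(t-tau)/(1+x(t-tau)^power) - gamma*x(t),
    Euler-integrated with step dt and sampled once per time unit (common parameter choice)."""
    delay = int(round(tau / dt))
    per_sample = int(round(1.0 / dt))
    total = length * per_sample
    x = np.full(total + delay, x0)  # constant history x(t) = x0 for t <= 0
    for t in range(delay, total + delay - 1):
        x_tau = x[t - delay]
        x[t + 1] = x[t] + dt * (beta * x_tau / (1.0 + x_tau ** power) - gamma * x[t])
    return x[delay::per_sample]


def run_reservoir(W, w_in, inputs):
    """Collect states of the standard ESN update x(t) = tanh(W x(t-1) + w_in u(t))."""
    x = np.zeros(W.shape[0])
    states = np.empty((len(inputs), W.shape[0]))
    for t, u in enumerate(inputs):
        x = np.tanh(W @ x + w_in * u)
        states[t] = x
    return states


def train_readout(states, targets, ridge=1e-6):
    """Linear readout fitted by ridge regression on [state, 1] (last weight is the bias)."""
    X = np.hstack([states, np.ones((len(states), 1))])
    return np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ targets)


if __name__ == "__main__":
    W, labels, backbones = clustered_reservoir()
    series = mackey_glass(2000)
    u, y = series[:-1], series[1:]  # one-step-ahead prediction task
    w_in = np.random.default_rng(1).uniform(-0.5, 0.5, W.shape[0])
    states = run_reservoir(W, w_in, u)
    washout, split = 100, 1500  # drop the initial transient; train before split, test after
    w_out = train_readout(states[washout:split], y[washout:split])
    pred = np.hstack([states[split:], np.ones((len(u) - split, 1))]) @ w_out
    nrmse = np.sqrt(np.mean((pred - y[split:]) ** 2) / np.var(y[split:]))
    print(f"{len(backbones)} backbone units, test NRMSE = {nrmse:.4f}")
```

Running the script prints the number of backbone units and the test NRMSE. Rebuilding the reservoir with different `spectral_radius` values is one simple way to probe how prediction error and stability vary with the spectral radius, which is the kind of comparison the abstract describes.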

About this article

Cite this article

Najibi, E., Rostami, H. SCESN, SPESN, SWESN: Three recurrent neural echo state networks with clustered reservoirs for prediction of nonlinear and chaotic time series. Appl Intell 43, 460–472 (2015). https://doi.org/10.1007/s10489-015-0652-3

DOI: https://doi.org/10.1007/s10489-015-0652-3