Abstract

Measuring an attribute may consume several types of resources. For example, a blood test incurs a monetary cost and requires waiting time before the result is available. Resource constraints are often imposed on a classification task: in medical diagnosis and marketing campaigns, it is common to have both a deadline and a budget for completing the task. The objective of this paper is to develop an algorithm for inducing a classification tree with minimal misclassification cost under multiple resource constraints. To the best of our knowledge, this problem has not been studied in the literature. To address it, we propose the Cost-Sensitive Associative Tree (CAT) algorithm. The algorithm first extracts from the training data the association classification rules that satisfy the resource constraints, and then uses the retained rules to construct the final decision tree. This approach ensures that the classification task is completed within the specified resource constraints. The experimental results show that the CAT algorithm significantly outperforms the traditional top-down approach and adapts well to the available resources.
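As an illustration of the rule-retention step described in the abstract, the following is a minimal sketch, not the CAT algorithm itself. It assumes that each attribute has a known usage for every resource, that usage adds up across the attributes a rule tests, and that a rule is kept only if it stays within every limit. The names `Rule`, `resource_usage`, `feasible_rules`, and all numbers are hypothetical; the tree-construction stage is not shown.

```python
from typing import Dict, FrozenSet, List, NamedTuple


class Rule(NamedTuple):
    """A hypothetical classification rule."""
    attributes: FrozenSet[str]  # attributes that must be measured to apply the rule
    label: str                  # predicted class
    confidence: float           # share of matching training records with this label


def resource_usage(rule: Rule, usage: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Total consumption per resource, ASSUMING usage is additive over attributes."""
    totals: Dict[str, float] = {}
    for attr in rule.attributes:
        for resource, amount in usage[attr].items():
            totals[resource] = totals.get(resource, 0.0) + amount
    return totals


def feasible_rules(rules: List[Rule],
                   usage: Dict[str, Dict[str, float]],
                   limits: Dict[str, float]) -> List[Rule]:
    """Keep only the rules that satisfy every resource constraint."""
    kept = []
    for rule in rules:
        totals = resource_usage(rule, usage)
        if all(totals.get(res, 0.0) <= limit for res, limit in limits.items()):
            kept.append(rule)
    return kept


# Hypothetical per-attribute usage: monetary cost and waiting time in hours.
usage = {
    "blood_test": {"cost": 50.0, "time": 24.0},
    "x_ray": {"cost": 80.0, "time": 2.0},
    "temperature": {"cost": 1.0, "time": 0.1},
}
limits = {"cost": 100.0, "time": 12.0}  # budget and deadline (assumed values)

rules = [
    Rule(frozenset({"temperature"}), "healthy", 0.70),
    Rule(frozenset({"temperature", "x_ray"}), "sick", 0.85),
    Rule(frozenset({"blood_test", "x_ray"}), "sick", 0.95),
]

kept = feasible_rules(rules, usage, limits)
for rule in kept:
    print(sorted(rule.attributes), rule.label, rule.confidence)
```

In this toy setting the rule that needs both the blood test and the X-ray is discarded because it exceeds the budget and the deadline, even though it has the highest confidence. If tests can run in parallel, the additive treatment of time would need to be replaced, which is outside this sketch.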

References

Han J, Kamber M (2006) Data mining: concepts and techniques. Morgan Kaufmann

Quinlan JR (1986) Induction of decision trees. Mach Learn 1:81–106

Quinlan JR (1993) C4.5: programs for machine learning. Morgan Kaufmann

Breiman L, Friedman JH, Olshen RA, Stone CJ (1984) Classification and regression trees. Wadsworth

Frini A, Guitouni A, Martel JM (2012) A general decomposition approach for multi-criteria decision trees. Expert Syst Appl 220:452–460

Savsek T, Vezjak M, Pavesic N (2006) Fuzzy trees in decision support systems. Eur J Oper Res 174:293–310

Ahmad A (2014) Decision tree ensembles based on kernel features. Appl Intell 41:855–869

Weiss GM, Provost F (2003) Learning when training data are costly: the effect of class distribution on tree induction. J Artif Intell Res 19:315–354

Bahnsen AC, Aouada D, Ottersten B (2015) Example-dependent cost-sensitive decision trees. Expert Systems with Applications 42:6609–6619

Li XJ, Zhao H, Zhu W (2015) A cost sensitive decision tree algorithm with two adaptive mechanisms. Knowl Based Syst 88:24–33

Turney PD (1995) Cost-sensitive classification: empirical evaluation of a hybrid genetic decision tree induction algorithm. J Artif Intell Res 2:369–409

Turney PD (2000) Types of cost in inductive concept learning. Proceedings of workshop on cost-sensitive learning at the seventeenth international conference on machine learning, pp 15–21

Garcia S, Fernandez A, Herrera F (2009) Enhancing the effectiveness and interpretability of decision tree and rule induction classifiers with evolutionary training set selection over imbalanced problems. Appl Soft Comput 9:1304–1314

Zhang X, Song Q, Wang G, Zhang K, He L, Jia X (2015) A dissimilarity-based imbalance data classification algorithm. Appl Intell 42:544–565

Arnt A, Zilberstein S (2005) Learning policies for sequential time and cost sensitive classification. Proceedings of 1st international workshop on utility-based data mining, pp 39–45

Zhao Z, Wang X (2018) Cost-sensitive SVDD models based on a sample selection approach. Appl Intell 48:4247–4266.

Chen YL, Wu CC, Tang K (2009) Building a cost-constrained decision tree with multiple condition attributes. Inf Sci 179:967–979

Min F, Zhu W (2012) Attribute reduction of data with error ranges and test costs. Inf Sci 211:48–67

Núñez M (1991) The use of background knowledge in decision tree induction. Mach Learn 6:231–250

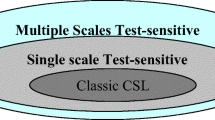

Qiu C, Jiang L, Li C (2017) Randomly selected decision tree for test-cost sensitive learning. Appl Soft Comput 53:27–33

Tan M (1993) Cost-sensitive learning of classification knowledge and its applications in robotics. Mach Learn 13:7–33

Domingos P (1999) MetaCost: A general method for making classifiers cost-sensitive. In: Proceedings of fifth international conference on knowledge discovery and data mining, pp 155–164

Elkan C (2001) The foundations of cost-sensitive learning. In: Proceedings of seventeenth international joint conference on artificial intelligence, pp 973–978

Li F, Zhang X, Zhang X, Du C, Xu Y, Tian YC (2018) Cost-sensitive and hybrid-attribute measure multi-decision tree over imbalanced data sets. Inf Sci 422:242–256

Ting KM (2002) An instance-weighting method to induce cost-sensitive trees. IEEE Trans Knowl Data Eng 14:659–665

Zhao H (2008) Instance weighting versus threshold adjusting for cost-sensitive classification. Knowl Inf Syst 15:321–334

Ling CX, Yang Q, Wang J, Zhang S (2004) Decision trees with minimal costs. In: Proceedings of 2004 international conference on machine learning, p 69

Ling CX, Sheng VS, Yang Q (2006) Test strategies for cost-sensitive decision tree. IEEE Trans Knowl Data Eng 18:1055–1067

Yang Q, Ling C, Chai X, Pan R (2006) Test-cost sensitive classification on data with missing values. IEEE Trans Knowl Data Eng 18:626–638

Zhang S, Qin Z, Ling CX, Sheng S (2005) Missing is useful”: mining values in cost-sensitive decision trees. IEEE Trans Knowl Data Eng 17:1689-1693.

Zhao H, Li X (2017) A cost sensitive decision tree algorithm based on weighted class distribution with batch deleting attribute mechanism. Inf Sci 378:303–316

Zhang S (2010) Cost-sensitive classification with respect to waiting cost. Knowl Based Syst 23:369–378

Chen YL, Wu CC, Tang K (2016) Time-constrained cost-sensitive decision tree induction. Inf Sci 354:140–152

Qin Z, Zhang S, Zhang C (2004) Cost-sensitive decision trees with multiple cost scales. In: Proceedings of Australian joint conference on artificial intelligence, pp 380–390

Wu CC, Chen YL, Liu YH, Yang XY (2016) Decision tree induction with a constrained number of leaf nodes. Appl Intell 45:673–685

Agrawal R, Srikant R (1994) Fast algorithms for mining association rules in large databases. In: Proceedings of the 20th International Conference on Very Large Data Bases, pp 487–499

Han J, Pei J, Yin Y, Mao R (2004) Mining frequent patterns without candidate generation: a frequent-pattern tree approach. Data Min Knowl Disc 8:53–87

Asuncion A, Newman DJ (2007) UCI machine learning repository. http://www.ics.uci.edu/~mlearn/MLRepository.html. Accessed Apr 2010

Czerniak J, Zarzycki H (2003) Application of rough sets in the presumptive diagnosis of urinary system diseases. Proceedings of ACS'2002 9th international conference 41-51.

Fayyad UM, Irani KB (1993) Multi-interval discretization of continuous-valued attributes for classification learning. In: Proceedings of 13th int’l joint conference artificial intelligence, pp 1022–1027

Minaei-Bidgoli B, Barmaki R, Nasiri M (2013) Mining numerical association rules via multi-objective genetic algorithms. Inf Sci 233:15–24

Cite this article

Wu, CC., Chen, YL. & Tang, K. Cost-sensitive decision tree with multiple resource constraints. Appl Intell 49, 3765–3782 (2019). https://doi.org/10.1007/s10489-019-01464-x

DOI: https://doi.org/10.1007/s10489-019-01464-x