Abstract

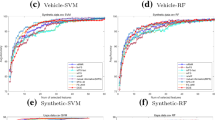

Feature selection plays a critical role in many applications related to machine learning, image processing, and gene expression analysis. Traditional feature selection methods aim to maximize feature dependency while minimizing feature redundancy. In previous information-theoretic feature selection methods, the feature redundancy term is measured either by the mutual information between a candidate feature and each already-selected feature, or by the interaction information among a candidate feature, each already-selected feature, and the class. However, a larger value of the traditional feature redundancy term does not necessarily indicate a worse candidate feature, because a candidate feature can carry a large amount of redundant information while also offering a large amount of new classification information. To address this issue, we design a new feature redundancy term that considers the relevancy between a candidate feature and the class given each already-selected feature, and we propose a novel feature selection method named min-redundancy and max-dependency (MRMD). To verify its effectiveness, we compare MRMD with eight competitive methods on an artificial example and on fifteen real-world data sets. The experimental results show that our method achieves the best classification performance with respect to multiple evaluation criteria.
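The motivating observation can be illustrated with a small numerical sketch. The code below is not the authors' implementation and does not reproduce the MRMD criterion; the toy data, variable names, and helper functions are assumptions made for illustration. It computes the mutual information I(X;Z) between a candidate and an already-selected feature, and the conditional mutual information I(X;Y|Z) between the candidate and the class given the selected feature, for two candidates that are equally redundant in the traditional sense.

```python
from collections import Counter
from math import log2


def entropy(*columns):
    """Joint Shannon entropy (in bits) of one or more discrete columns."""
    joint = list(zip(*columns))
    n = len(joint)
    return -sum((c / n) * log2(c / n) for c in Counter(joint).values())


def mutual_info(x, z):
    """I(X;Z) = H(X) + H(Z) - H(X,Z)."""
    return entropy(x) + entropy(z) - entropy(x, z)


def cond_mutual_info(x, y, z):
    """I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z)."""
    return entropy(x, z) + entropy(y, z) - entropy(x, y, z) - entropy(z)


# Hypothetical toy data: two independent bits a and b; the class is a XOR b.
a = [0, 0, 0, 0, 1, 1, 1, 1]
b = [0, 0, 1, 1, 0, 0, 1, 1]
y = [ai ^ bi for ai, bi in zip(a, b)]

selected = a                                      # already-selected feature
cand_dup = list(a)                                # candidate 1: a pure duplicate
cand_new = [2 * ai + bi for ai, bi in zip(a, b)]  # candidate 2: encodes a and b

for name, cand in [("duplicate", cand_dup), ("informative", cand_new)]:
    redundancy = mutual_info(cand, selected)
    new_info = cond_mutual_info(cand, y, selected)
    print(f"{name}: I(X;Z) = {redundancy:.3f} bits, I(X;Y|Z) = {new_info:.3f} bits")
```

On this toy data both candidates share exactly one bit with the selected feature, so a redundancy term based only on I(X;Z) penalizes them equally. Only the second candidate carries class information that the selected feature lacks, which is the kind of distinction a conditional relevancy term is meant to capture.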

Acknowledgments

This work is funded by the Postdoctoral Innovative Talents Support Program under Grant No. BX20190137; the National Key R&D Plan of China under Grant No. 2017YFA0604500; the National Sci-Tech Support Plan of China under Grant No. 2014BAH02F00; the National Natural Science Foundation of China under Grant No. 61701190; the Youth Science Foundation of Jilin Province of China under Grant Nos. 20160520011JH and 20180520021JH; the Youth Sci-Tech Innovation Leader and Team Project of Jilin Province of China under Grant No. 20170519017JH; the Key Technology Innovation Cooperation Project of Government and University for the whole Industry Demonstration under Grant No. SXGJSF2017-4; the Key scientific and technological R&D Plan of Jilin Province of China under Grant No. 20180201103GX; and the China Postdoctoral Science Foundation under Grant No. 2018M631873.

Cite this article

Gao, W., Hu, L. & Zhang, P. Feature redundancy term variation for mutual information-based feature selection. Appl Intell 50, 1272–1288 (2020). https://doi.org/10.1007/s10489-019-01597-z

DOI: https://doi.org/10.1007/s10489-019-01597-z