Abstract

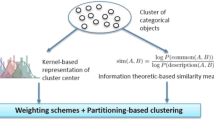

The performance of a partitional clustering algorithm is influenced by the initial random choice of cluster centers. Different runs of the clustering algorithm on the same data set often yield different results. This paper addresses that challenge by proposing an algorithm named k-PbC, which takes advantage of non-random initialization from the view of pattern mining to improve clustering quality. Specifically, k-PbC first performs a maximal frequent itemset mining approach to find a set of initial clusters. It then uses a kernel-based method to form cluster centers and an information-theoretic based dissimilarity measure to estimate the distance between cluster centers and data objects. An extensive experimental study was performed on various real categorical data sets to draw a comparison between k-PbC and state-of-the-art categorical clustering algorithms in terms of clustering quality. Comparative results have revealed that the proposed initialization method can enhance clustering results and k-PbC outperforms compared algorithms for both internal and external validation metrics.

k-PbC algorithm for categorical data clustering

Similar content being viewed by others

References

Aggarwal CC (2013) An introduction to cluster analysis. In: Data clustering: algorithms and applications. Chapman and Hall/CRC, pp 1–28

Agrawal R, Srikant R (1994) Fast algorithms for mining association rules. In: Proceedings of the 20th international conference on very large data bases, VLDB ’94. Morgan Kaufmann Publishers Inc, San Francisco , pp 487–499

Ahmad A, Dey L (2007) A k-mean clustering algorithm for mixed numeric and categorical data. Data Knowl Eng 63(2):503–527

Aitchison J, Aitken CGG (1976) Multivariate binary discrimination by the kernel method. Biometrika 63 (3):413–420

Andreopoulos B (2013) Clustering categorical data. In: Data clustering: algorithms and applications. Chapman and Hall/CRC, pp 277–304

Arthur D, Vassilvitskii S (2007) k-means++: the advantages of careful seeding. In: Proceedings of the eighteenth annual ACM-SIAM symposium on discrete algorithms. http://dl.acm.org/citation.cfm?id=1283383.1283494. Society for Industrial and Applied Mathematics, pp 1027–1035

Bahmani B, Moseley B, Vattani A, Kumar R, Vassilvitskii S (2012) Scalable k-means++. Proc VLDB Endow 5(7):622–633

Bai L, Liang J, Dang C, Cao F (2012) A cluster centers initialization method for clustering categorical data. Expert Syst Appl 39(9):8022–8029. https://doi.org/10.1016/j.eswa.2012.01.131

Boriah S, Chandola V, Kumar V (2008) Similarity measures for categorical data: a comparative evaluation. In: Proceedings of the 2008 SIAM international conference on data mining. SIAM, pp 243–254

Cao F, Liang J, Bai L (2009) A new initialization method for categorical data clustering. Expert Syst Appl 36(7):10223–10228. https://doi.org/10.1016/j.eswa.2009.01.060

Celebi EM, Kingravi HA, Vela PA (2013) A comparative study of efficient initialization methods for the k-means clustering algorithm. Exp Syst Appl 40(1):200–210. https://doi.org/10.1016/j.eswa.2012.07.021

Chen J, Lin X, Xuan Q, Xiang Y (2018) Fgch: a fast and grid based clustering algorithm for hybrid data stream. Appl Intell, 1–17. https://doi.org/10.1007/s10489-018-1324-x

Chen L (2015) A probabilistic framework for optimizing projected clusters with categorical attributes. Sci Chin Inform Sci 58(7):1–15

Chen L, Wang S (2013) Central clustering of categorical data with automated feature weighting. In: IJCAI, pp 1260–1266

Cheung Y-L, Fu AW-C (2004) Mining frequent itemsets without support threshold: with and without item constraints. IEEE Trans Knowl Data Eng 16(9):1052–1069. https://doi.org/10.1109/TKDE.2004.44

Deng T, Ye D, Ma R, Fujita H, Xiong L (2020) Low-rank local tangent space embedding for subspace clustering. Inform Sci 508:1–21. https://doi.org/10.1016/j.ins.2019.08.060

Dinh D-T, Fujinami T, Huynh V-N (2019) Estimating the optimal number of clusters in categorical data clustering by silhouette coefficient. In: KSS 2019: the twentieth international symposium on knowledge and systems sciences. Springer

Dinh D-T, Huynh V-N (2018) K-ccm: a center-based algorithm for clustering categorical data with missing values. In: Torra V, Narukawa Y, Aguiló I, González-Hidalgo M (eds) MDAI 2018: modeling decisions for artificial intelligence. Springer, pp 267–279, DOI https://doi.org/10.1007/978-3-030-00202-2_22, (to appear in print)

Dinh D-T, Le B, Fournier-Viger P, Huynh V-N (2018) An efficient algorithm for mining periodic high-utility sequential patterns. Appl Intell 48(12):4694–4714. https://doi.org/10.1007/s10489-018-1227-x

Dinh T, Huynh V-N, Le B (2017) Mining periodic high utility sequential patterns. In: Nguyen NT, Tojo S, Nguyen LM, Trawiński B (eds) Intelligent information and database systems. Springer, Cham, pp 545–555. https://doi.org/10.1007/978-3-319-54472-4_51

dos Santos TRL, Zárate LE (2015) Categorical data clustering: what similarity measure to recommend? Expert Syst Appl 42(3):1247–1260. https://doi.org/10.1016/j.eswa.2014.09.012

Dua D, Graff C (2019) UCI machine learning repository. http://archive.ics.uci.edu/ml

Fournier-Viger P, Chun-Wei Lin J, Vo B, Truong Chi T, Zhang J, Le HB (2017) A survey of itemset mining. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 7(4):e1207. https://doi.org/10.1002/widm.1207

Grahne G, Zhu J (2003) High performance mining of maximal frequent itemsets. In: 6th International workshop on high performance data mining, vol 16, p 34

Han J, Pei J, Kamber M (2011) Data mining: concepts and techniques. Elsevier

Han J, Pei J, Yin Y (2000) Mining frequent patterns without candidate generation. In: ACM sigmod record, vol 29. ACM, pp 1–12, DOI https://doi.org/10.1145/342009.335372

Huang Z (1997) Clustering large data sets with mixed numeric and categorical values. In: Proceedings of the First Pacific Asia knowledge discovery and data mining conference. World Scientific, Singapore, pp 21–34

Huang Z (1998) Extensions to the k-means algorithm for clustering large data sets with categorical values. Data Mining Knowl Discov 2(3):283–304. https://doi.org/10.1023/A:1009769707641

Hubert L, Arabie P (1985) Comparing partitions. J Classif 2(1):193–218. https://doi.org/10.1007/BF01908075

Izenman AJ (2008) Cluster analysis. In: Modern multivariate statistical techniques: regression, classification, and manifold learning. Springer, New York, pp 407–462

Jiang F, G Liu J D u, Sui Y (2016) Initialization of k-modes clustering using outlier detection techniques. Inform Sci 332:167–183. https://doi.org/10.1016/j.ins.2015.11.005

Kassambara A (2017) Practical guide to cluster analysis in R: unsupervised machine learning, vol 1 STHDA

Kaufman L, Rousseeuw PJ (2009) Finding groups in data: an introduction to cluster analysis, vol. 344. Wiley

Khan SS, Ahmad A (2013) Cluster center initialization algorithm for k-modes clustering. Expert Syst Appl 40(18):7444–7456. https://doi.org/10.1016/j.eswa.2013.07.002

Kim D -W, Lee KY, Lee D, Lee KH (2005) A k-populations algorithm for clustering categorical data. Pattern Recogn 38(7):1131–1134

Ko Y -C, Fujita H (2012) An approach of clustering features for ranked nations of e-government. Acta Polytechnica Hungarica 11(6):2014. https://doi.org/10.12700/aph.11.06.2014.06.1

Kuhn HW (1955) The Hungarian method for the assignment problem. Naval Res Logist Quart 2(1–2):83–97

Le B, Dinh D-T, Huynh V-N, Nguyen Q-M, Fournier-Viger P (2018) An efficient algorithm for hiding high utility sequential patterns. Int J Approx Reason 95:77–92. https://doi.org/10.1016/j.ijar.2018.01.005

Le B, Huynh U, Dinh D-T (2018) A pure array structure and parallel strategy for high-utility sequential pattern mining. Expert Syst Appl 104:107–120. https://doi.org/10.1016/j.eswa.2018.03.019

Lin D (1998) An information-theoretic definition of similarity. In: Proceedings of the Fifteenth international conference on machine learning, pp 296–304. http://dl.acm.org/citation.cfm?id=645527.657297

MacQueen J (1967) Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley symposium on mathematical statistics and probability, vol 1, Oakland, pp 281–297

Manning CD, Raghavan P, Schütze H (2008) Introduction to information retrieval. Cambridge University Press, New York

Mojarad M, Nejatian S, Parvin H, Mohammadpoor M (2019) A fuzzy clustering ensemble based on cluster clustering and iterative fusion of base clusters. Appl Intell, 1–15. https://doi.org/10.1007/s10489-018-01397-x

Ng MK, Li MJ, Huang JZ, He Z (2007) On the impact of dissimilarity measure in k-modes clustering algorithm. IEEE Trans Pattern Anal Mach Intell 29(3):503–507. https://doi.org/10.1109/TPAMI.2007.53

Nguyen HH (2017) Clustering categorical data using community detection techniques. Computational Intelligence and Neuroscience. https://doi.org/10.1155/2017/8986360

Nguyen T-P, Dinh D-T, Huynh V-N (2018) A new context-based clustering framework for categorical data. In: Geng X, Kang B-H (eds) PRICAI 2018: trends in artificial intelligence. Springer, pp 697–709, DOI https://doi.org/10.1007/978-3-319-97304-3_53, (to appear in print)

Nguyen T-HT, Dinh D-T, Sriboonchitta S, Huynh V-N (2019) A method for k-means-like clustering of categorical data. J Ambient Intell Humaniz Comput, 1–11. https://doi.org/10.1007/s12652-019-01445-5

Nguyen T-HT, Huynh V-N (2016) A k-means-like algorithm for clustering categorical data using an information theoretic-based dissimilarity measure. In: Gyssens M, Simari G (eds) FoIKS 2016: international symposium on foundations of information and knowledge systems. Springer, pp 115–130. https://doi.org/10.1007/978-3-319-30024-5_7

Reddy CK, Vinzamuri B (2013) A survey of partitional and hierarchical clustering algorithms. In: Data clustering: algorithms and applications. Chapman and Hall/CRC, pp 87– 110

Rousseeuw PJ (1987) Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. J Comput Appl Math 20:53–65

San OM, Huynh V N, Nakamori Y (2004) An alternative extension of the k-means algorithm for clustering categorical data. Int J Appl Math Comput Sci 14:241–247

Wang H, Yang Y, Liu B, Fujita H (2019) A study of graph-based system for multi-view clustering. Knowl-Based Syst 163:1009–1019. https://doi.org/10.1016/j.knosys.2018.10.022

Wu X, Kumar V, Ross Quinlan J, Ghosh J, Yang Q, Motoda H, McLachlan GJ, Ng A, Liu B, Philip SYu et al (2008) Top 10 algorithms in data mining. Knowl Inform Syst 14(1):1–37. https://doi.org/10.1007/s10115-007-0114-2

Zhang Y, Yang Y, Li T, Fujita H (2019) A multitask multiview clustering algorithm in heterogeneous situations based on lle and le. Knowl-Based Syst 163:776–786. https://doi.org/10.1016/j.knosys.2018.10.001

Acknowledgments

The authors are very grateful to the editor and reviewers for their valuable comments and suggestions. This paper is based upon work supported in part by the Air Force Office of Scientific Research/Asian Office of Aerospace Research and Development (AFOSR/AOARD) under award number FA2386-17-1-4046.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Dinh, DT., Huynh, VN. k-PbC: an improved cluster center initialization for categorical data clustering. Appl Intell 50, 2610–2632 (2020). https://doi.org/10.1007/s10489-020-01677-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-020-01677-5