Abstract

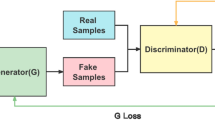

A generative adversarial network (GAN) is an effective method for learning generative models from real data. However, existing GANs suffer from drawbacks such as training instability, mode collapse, and low computational efficiency. In this paper, an attentive evolutionary generative adversarial network (AEGAN) is proposed to address these drawbacks, together with a modified evolutionary algorithm designed for it. In AEGAN, the generator evolves continuously against the discriminator through three independent mutations at every batch, and only the well-performing offspring (i.e., the generators) are preserved for the next batch. Furthermore, a normalized self-attention (SA) mechanism is embedded in both the discriminator and the generator of AEGAN to adaptively assign weights according to the importance of features. Careful regulation of the generators' evolution and effective weight assignment are proposed to improve sample diversity and the modeling of long-range dependencies. A training algorithm for AEGAN is also proposed. With this algorithm, AEGAN overcomes the shortcomings that a single loss function and deep convolution bring to traditional GANs, and it improves training stability and statistical efficiency. Extensive image synthesis experiments on the CIFAR-10, CelebA, and LSUN datasets are presented to validate the performance of AEGAN. Experimental results and comparisons with other GANs show that the proposed model outperforms the existing models it is compared against.
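The per-batch "mutate, evaluate, select" loop described in the abstract can be illustrated with a small toy sketch. This is not the authors' implementation: the abstract does not name the three mutations or the fitness measure, so the sketch assumes the three generator objectives used in the earlier E-GAN work (minimax, heuristic, and least-squares) and a hypothetical fitness equal to the mean discriminator score. The one-parameter generator, the fixed discriminator, and all function names are illustrative assumptions.

```python
import numpy as np

def discriminator(x):
    """Toy fixed discriminator (assumption): probability that x is real."""
    return 1.0 / (1.0 + np.exp(-x))

def generate(theta, z):
    """Toy generator (assumption): shifts the noise z by one parameter theta."""
    return theta + z

# Three generator objectives, each a function of d = D(G(z)).
# Assumed here in the style of E-GAN; the abstract does not specify them.
MUTATIONS = {
    "minimax": lambda d: np.mean(np.log(1.0 - d)),
    "heuristic": lambda d: -np.mean(np.log(d)),
    "least_squares": lambda d: np.mean((d - 1.0) ** 2),
}

def mutate(theta, loss_fn, z, lr=0.5, eps=1e-4):
    """Produce one offspring: a single gradient step on loss_fn.

    The gradient is a central finite difference, which is enough
    for a one-parameter toy generator.
    """
    up = loss_fn(discriminator(generate(theta + eps, z)))
    down = loss_fn(discriminator(generate(theta - eps, z)))
    grad = (up - down) / (2.0 * eps)
    return theta - lr * grad

def fitness(theta, z):
    """Hypothetical fitness: mean discriminator score of generated samples."""
    return float(np.mean(discriminator(generate(theta, z))))

def evolve_step(theta, z, n_survivors=1):
    """Apply all mutations to the parent and keep the fittest offspring."""
    offspring = {name: mutate(theta, fn, z) for name, fn in MUTATIONS.items()}
    ranked = sorted(offspring.items(),
                    key=lambda kv: fitness(kv[1], z),
                    reverse=True)
    return ranked[:n_survivors]

rng = np.random.default_rng(0)
theta = -1.0  # initial generator parameter
for batch in range(5):
    z = rng.normal(size=256)                    # one batch of noise
    winner, theta = evolve_step(theta, z)[0]    # survivor becomes next parent
    print(batch, winner, round(theta, 3))
```

In a full model the discriminator would also be updated at every batch and the fitness would typically include a diversity term; both are omitted here to keep the selection step visible.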

Acknowledgements

This work is supported by the National Natural Science Foundation of China (No. 61402227), the Natural Science Foundation of Hunan Province (No. 2019JJ50618), the Degree & Postgraduate Education Reform Project of Hunan Province (No. 2019JGYB116), and the 2019 Hunan Province Graduate Quality Curriculum Project (Neural Network Theory and Applications). This work is also supported by the key discipline of computer science and technology in Hunan Province, China.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Wu, Z., He, C., Yang, L. et al. Attentive evolutionary generative adversarial network. Appl Intell 51, 1747–1761 (2021). https://doi.org/10.1007/s10489-020-01917-8

DOI: https://doi.org/10.1007/s10489-020-01917-8