Abstract

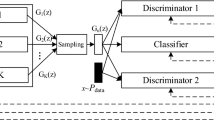

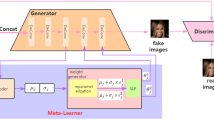

Active learning aims to significantly reduce annotation cost while preserving model performance. In this paper, we propose a novel active learning method that combines pool-based and synthesis-based approaches, named dual generative adversarial active learning (DGAAL), which performs both image generation and representation learning. The method comprises two groups of generative adversarial networks composed of a generator and two discriminators. One group is used for representation learning, and sampling is performed based on the predicted value of its discriminator. The other group is used for image generation: it generates samples similar to those obtained from sampling, so that information-rich samples can be fully utilized. During sampling, the two groups cooperate so that the generated samples take part in the sampling process and the discriminator used for sampling co-evolves. This alleviates the problem that, in the later stages of sampling, pool-based methods have insufficient information for selecting samples. DGAAL is evaluated extensively on three data sets, and the results show that it not only has certain advantages over existing methods in terms of model performance but can also further reduce the annotation cost.
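As a rough illustration of discriminator-based sampling in which generated samples also take part, the following minimal sketch ranks pool and synthesized candidates by a discriminator score and picks the lowest-scoring ones up to an annotation budget. This is not the authors' implementation: the function name `select_for_annotation`, the score lists, and the choice to select the lowest scores are all assumptions made for illustration, since the abstract does not state the scoring direction or the network details.

```python
from typing import List, Sequence, Tuple


def select_for_annotation(
    pool_scores: Sequence[float],
    synth_scores: Sequence[float],
    budget: int,
) -> List[Tuple[str, int]]:
    """Pick `budget` candidates with the lowest discriminator scores.

    Assumption (not from the paper): a low score marks a sample as
    informative. Each result is a (source, index) pair, where source is
    "pool" for unlabeled pool samples and "synthetic" for generated ones.
    """
    # Put both candidate sets into one list so they compete on equal terms.
    candidates = [("pool", i, s) for i, s in enumerate(pool_scores)]
    candidates += [("synthetic", i, s) for i, s in enumerate(synth_scores)]

    # Sort ascending by score; Python's sort is stable, so ties keep
    # their original order (pool first, then synthetic).
    candidates.sort(key=lambda c: c[2])

    return [(source, index) for source, index, _ in candidates[:budget]]


# Hypothetical scores, as if produced by a trained discriminator.
chosen = select_for_annotation([0.9, 0.2, 0.6], [0.1, 0.8], budget=2)
print(chosen)
```

In a full active learning loop, the selected samples would be labeled, the networks retrained, and the scores recomputed before the next round.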

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

This work was supported by the Basic Scientific Research Projects of Central Universities 2572018BH07 and the Natural Science Foundation of Heilongjiang Province under Grant LH2019C003.

About this article

Cite this article

Guo, J., Pang, Z., Bai, M. et al. Dual generative adversarial active learning. Appl Intell 51, 5953–5964 (2021). https://doi.org/10.1007/s10489-020-02121-4

DOI: https://doi.org/10.1007/s10489-020-02121-4