Abstract

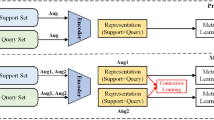

Deep learning has achieved impressive results in many fields. However, its success relies on large amounts of labeled data, and over-fitting occurs when labeled data are insufficient. Moreover, the real world is often so non-stationary that neural networks cannot learn continually as humans do: learning new tasks leads to a significant drop in performance on old tasks. To address these problems, this paper proposes CMLA (Continual Meta-Learning Algorithm), a new algorithm based on meta-learning. CMLA not only extracts the key features of each sample but also optimizes how task gradients are updated by introducing a cosine-similarity judgment mechanism. The algorithm is evaluated on miniImageNet and Fewshot-CIFAR100 (Canadian Institute For Advanced Research), and the results demonstrate its effectiveness and its advantage over other advanced methods. In particular, compared with MAML (Model-Agnostic Meta-Learning) with a standard four-layer convolutional network, 1-shot and 5-shot accuracy in the 5-way setting on miniImageNet improve by 15.4% and 16.91%, respectively. CMLA not only reduces the instability of the adaptation process but also resolves the stability-plasticity dilemma to a certain extent, achieving the goal of continual learning.
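The abstract does not spell out how the cosine-similarity judgment works, so the following is only a minimal sketch under an assumed rule: a task gradient is added to the running meta-gradient when its cosine similarity with that running sum is at least a threshold, and skipped otherwise. The function names `cosine_similarity` and `gated_meta_gradient`, the `threshold` parameter, and the skip rule itself are hypothetical illustrations, not the procedure used by CMLA.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two flat gradient vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # treat a zero gradient as orthogonal to everything
    return dot / (norm_u * norm_v)


def gated_meta_gradient(task_grads, threshold=0.0):
    """Average task gradients, skipping those that conflict with the running sum.

    ASSUMPTION: the gating rule (skip when cosine < threshold) is a
    hypothetical stand-in for the paper's judgment mechanism.
    Returns the averaged gradient and the number of gradients kept.
    """
    if not task_grads:
        raise ValueError("task_grads must contain at least one gradient")
    total = list(task_grads[0])  # the first task gradient seeds the direction
    kept = 1
    for grad in task_grads[1:]:
        if cosine_similarity(total, grad) >= threshold:
            total = [t + g for t, g in zip(total, grad)]
            kept += 1
    return [t / kept for t in total], kept


# Three toy task gradients; the third points against the first.
meta_grad, kept = gated_meta_gradient([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
```

In this toy run the third gradient has negative cosine similarity with the accumulated direction and is left out, so only two gradients contribute to the average. Other rules, such as projecting away the conflicting component instead of skipping the gradient, would fit the same interface.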

Availability of data and material

The datasets used during this study are available upon reasonable request to the authors.

Code availability

The code is publicly available at https://github.com/jiangmengjuan/CMLA

Acknowledgements

We would like to thank Fang Dong, Zhe Wang, Xiaohang Pan, Hui Dong, and Yi Yi for their technical support. We also thank the Machine Learning Laboratory of Soochow University for providing computing resources and other support.

Funding

This work was supported by the National Key R&D Program of China (2018YFA0701700; 2018YFA0701701) and the National Natural Science Foundation of China under Grant Nos. 61672364 and 61902269.

Author information

Contributions

All authors have contributed to the conception and design of the study. Conceptualization: Mengjuan Jiang, Fanzhang Li, and Li Liu; Experimentation: Mengjuan Jiang; Writing-original draft preparation: Mengjuan Jiang; Writing-review and editing: Mengjuan Jiang, Fanzhang Li, and Li Liu; Funding acquisition: Fanzhang Li; Supervision: Fanzhang Li and Li Liu. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of Interests

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Jiang, M., Li, F. & Liu, L. Continual meta-learning algorithm. Appl Intell 52, 4527–4542 (2022). https://doi.org/10.1007/s10489-021-02543-8

DOI: https://doi.org/10.1007/s10489-021-02543-8