Abstract

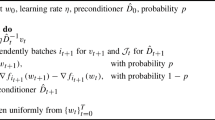

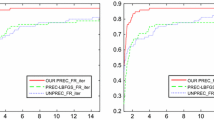

The doubly stochastic functional gradient descent algorithm (DSG) is memory friendly and computationally efficient, and can effectively scale up kernel methods. However, on highly ill-conditioned large-scale nonlinear machine learning problems, DSG converges slowly, because the Hessian matrix of such a problem has a large condition number, which slows down stochastic gradient methods. Gradient preconditioning is a well-established optimization technique for reducing the condition number. We therefore propose a preconditioned doubly stochastic functional gradient descent algorithm (P-DSG) that combines DSG with gradient preconditioning. In each iteration, P-DSG first uses the preconditioner to adaptively scale the individual components of the functional gradient estimated by DSG, and then uses the preconditioned functional gradient as the descent direction. In theory, a suitable preconditioner is the inverse of the Hessian matrix at the optimum, which is expensive to compute. We therefore approximate the Hessian matrix by an empirical covariance matrix of random Fourier features, and then apply a low-rank approximation to that covariance matrix. P-DSG attains a fast \(\mathcal{O}(1/t)\) convergence rate with a smaller constant factor in the bound than DSG, while remaining \(\mathcal{O}(t)\) memory friendly and \(\mathcal{O}(td)\) computationally efficient. Finally, we evaluate P-DSG on kernel ridge regression, kernel support vector machines, and kernel logistic regression. The experimental results show that P-DSG speeds up convergence and achieves better performance.
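The preconditioning idea described above can be illustrated with a small sketch. This is not the authors' P-DSG: it fixes the random Fourier features in advance and uses full-batch gradients for kernel ridge regression, so that only the effect of the low-rank preconditioner is visible. The toy data, the rank `k`, the regularization `lam`, the bandwidth `sigma`, and the particular way of rescaling the top-`k` eigen-directions are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data (hypothetical; not taken from the paper's experiments).
n, d, D = 500, 5, 100            # samples, input dimension, random features
X = rng.normal(size=(n, d))
y = np.sin(X[:, 0]) + 0.1 * rng.normal(size=n)

# Random Fourier features for a Gaussian kernel with bandwidth sigma.
sigma = 1.0
W = rng.normal(scale=1.0 / sigma, size=(d, D))
b = rng.uniform(0.0, 2.0 * np.pi, size=D)
Z = np.sqrt(2.0 / D) * np.cos(X @ W + b)      # feature matrix, shape (n, D)

# Ridge objective in feature space: 1/(2n) ||Z w - y||^2 + lam/2 ||w||^2.
lam = 1e-3
C = Z.T @ Z / n                  # empirical covariance of the features
H = C + lam * np.eye(D)          # Hessian of the ridge objective
g = Z.T @ y / n

# Rank-k approximation of C (eigh returns eigenvalues in ascending order).
k = 20
evals, evecs = np.linalg.eigh(C)
s = evals[::-1][:k]              # top-k eigenvalues, descending
U = evecs[:, ::-1][:, :k]        # matching eigenvectors

# Assumed preconditioner: flatten the top-k curvature down to s[k-1] + lam
# and leave the orthogonal complement untouched.
scale = (s[-1] + lam) / (s + lam)
P = np.eye(D) + U @ np.diag(scale - 1.0) @ U.T

cond_H = np.linalg.cond(H)
cond_PH = np.linalg.cond(P @ H)

def run_gd(precond, step, iters=200):
    """Full-batch gradient descent using precond @ grad as the direction."""
    w = np.zeros(D)
    for _ in range(iters):
        grad = H @ w - g
        w = w - step * (precond @ grad)
    return w

w_star = np.linalg.solve(H, g)   # exact ridge solution, for reference
w_plain = run_gd(np.eye(D), 1.0 / (s[0] + lam))
w_pre = run_gd(P, 1.0 / (s[-1] + lam))

err_plain = np.linalg.norm(w_plain - w_star)
err_pre = np.linalg.norm(w_pre - w_star)
print(f"condition number: {cond_H:.1f} -> {cond_PH:.1f}")
print(f"distance to optimum after 200 steps: {err_plain:.3e} vs {err_pre:.3e}")
```

Because the preconditioner is built from eigenvectors of `C`, it commutes with `H`, and the preconditioned condition number drops from roughly `(s[0] + lam) / (s_min + lam)` to `(s[k-1] + lam) / (s_min + lam)`. A doubly stochastic variant would additionally sample data points and random features at each iteration, which this sketch omits.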

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61772020).

Cite this article

Zhang, Z., Zhou, S., Yang, T. et al. Faster doubly stochastic functional gradient by gradient preconditioning for scalable kernel methods. Appl Intell 52, 7091–7112 (2022). https://doi.org/10.1007/s10489-021-02618-6

DOI: https://doi.org/10.1007/s10489-021-02618-6