Abstract

Deep neural networks are vulnerable to adversarial examples, which are crafted by applying small, human-imperceptible perturbations to the original images so as to mislead the networks into outputting inaccurate predictions. Adversarial attacks can thus be an important method for evaluating and selecting robust models in safety-critical applications. However, under the challenging black-box setting, most existing adversarial attacks achieve relatively low success rates on both normally trained and adversarially trained networks. In this paper, we regard the generation of adversarial examples as an optimization process similar to deep neural network training. From this point of view, we introduce the AdaBelief optimizer and crop invariance into the generation of adversarial examples, and propose the AdaBelief Iterative Fast Gradient Method (ABI-FGM) and the Crop-Invariant attack Method (CIM) to improve the transferability of adversarial examples. By incorporating an adaptive learning rate into iterative attacks, ABI-FGM optimizes the convergence process, resulting in more transferable adversarial examples. CIM is based on our discovery of the crop-invariant property of deep neural networks, which we leverage to optimize the adversarial perturbations over an ensemble of cropped copies, so as to avoid overfitting to the white-box model being attacked and improve transferability. ABI-FGM and CIM can be readily integrated into a strong gradient-based attack that further boosts the success rates of adversarial examples in black-box attacks. Moreover, our method can be naturally combined with other gradient-based attack methods to generate more transferable adversarial examples against defense models. Extensive experiments on the ImageNet dataset demonstrate the method’s effectiveness: on both normally trained and adversarially trained networks, our method achieves higher success rates than state-of-the-art gradient-based attack methods.
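To make the two ideas concrete, the following is a minimal sketch rather than the paper's exact update rule. It assumes the standard AdaBelief moment estimates, a toy linear softmax classifier in place of a deep network, and a hypothetical crop that keeps the image centre and zeroes a border (the paper's crop function may differ). The function names, the toy model and all hyperparameter values are illustrative assumptions.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def loss_and_grad(W, x, label):
    """Cross-entropy loss of a toy linear classifier and its gradient w.r.t. x."""
    p = softmax(W @ x.ravel())
    loss = -np.log(p[label] + 1e-12)
    p[label] -= 1.0  # p - onehot(label)
    return loss, (W.T @ p).reshape(x.shape)

def crop_mask(shape, margin):
    """Hypothetical crop: keep the centre, zero a border of `margin` pixels."""
    h, w = shape
    mask = np.zeros(shape)
    mask[margin:h - margin, margin:w - margin] = 1.0
    return mask

def crop_adabelief_attack(W, x, label, eps=0.1, steps=10, margins=(0, 1, 2),
                          beta1=0.9, beta2=0.999, delta=1e-8):
    """Untargeted L-infinity attack: AdaBelief-style ascent on crop-averaged gradients."""
    alpha = eps / steps
    x_adv = x.copy()
    m = np.zeros_like(x)  # first moment of the gradient
    s = np.zeros_like(x)  # "belief": running variance of the gradient around m
    masks = [crop_mask(x.shape, k) for k in margins]
    for t in range(1, steps + 1):
        # Average the input gradient over cropped copies (chain rule through the mask).
        g = np.mean([mk * loss_and_grad(W, mk * x_adv, label)[1] for mk in masks],
                    axis=0)
        m = beta1 * m + (1 - beta1) * g
        s = beta2 * s + (1 - beta2) * (g - m) ** 2 + delta
        m_hat = m / (1 - beta1 ** t)  # bias correction
        s_hat = s / (1 - beta2 ** t)
        x_adv = x_adv + alpha * m_hat / (np.sqrt(s_hat) + delta)  # ascend the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)  # stay inside the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)          # stay a valid image
    return x_adv

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.normal(scale=0.1, size=(5, 64))   # toy 5-class model on 8x8 inputs
    x = rng.uniform(0.2, 0.8, size=(8, 8))
    label = int(np.argmax(W @ x.ravel()))
    x_adv = crop_adabelief_attack(W, x, label)
    print("clean loss:", loss_and_grad(W, x, label)[0])
    print("adversarial loss:", loss_and_grad(W, x_adv, label)[0])
```

In this sketch the projection onto the eps-ball, not the step size, enforces the perturbation budget. That is a design choice of the sketch, made because the adaptive step can exceed `alpha` per pixel.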

References

Krizhevsky A, Sutskever I, Hinton G E (2017) Imagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90. https://doi.org/10.1145/3065386

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. In: Bengio Y, LeCun Y (eds) 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings. arXiv:1409.1556, San Diego

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S E, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: IEEE conference on computer vision and pattern recognition, CVPR 2015. IEEE Computer Society, Boston, pp 1–9, https://doi.org/10.1109/CVPR.2015.7298594, (to appear in print)

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016. IEEE Computer Society, Las Vegas, pp 770–778, https://doi.org/10.1109/CVPR.2016.90, (to appear in print)

Szegedy C, Zaremba W, Sutskever I, Bruna J, Erhan D, Goodfellow I J, Fergus R (2014) Intriguing properties of neural networks. In: Bengio Y, LeCun Y (eds) 2nd International Conference on Learning Representations, ICLR 2014, Conference Track Proceedings, Banff. arXiv:1312.6199

Goodfellow I J, Shlens J, Szegedy C (2015) Explaining and harnessing adversarial examples. In: Bengio Y, LeCun Y (eds) 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego. arXiv:1412.6572

Kurakin A, Goodfellow I J, Bengio S (2017) Adversarial examples in the physical world. In: 5th International Conference on Learning Representations, ICLR 2017, Workshop Track Proceedings. https://openreview.net/forum?id=HJGU3Rodl. OpenReview.net, Toulon

Dong Y, Liao F, Pang T, Su H, Zhu J, Hu X, Li J (2018) Boosting adversarial attacks with momentum. In: 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018. IEEE Computer Society, Salt Lake City, pp 9185–9193. http://openaccess.thecvf.com/content_cvpr_2018/html/Dong_Boosting_Adversarial_Attacks_CVPR_2018_paper.html

Carlini N, Wagner D A (2017) Towards evaluating the robustness of neural networks. In: 2017 IEEE Symposium on Security and Privacy, SP 2017. IEEE Computer Society, San Jose, pp 39–57, https://doi.org/10.1109/SP.2017.49, (to appear in print)

Xie C, Zhang Z, Zhou Y, Bai S, Wang J, Ren Z, Yuille A L (2019) Improving transferability of adversarial examples with input diversity. In: IEEE conference on computer vision and pattern recognition, CVPR 2019. Computer Vision Foundation / IEEE, long beach, pp 2730–2739. http://openaccess.thecvf.com/content_CVPR_2019/html/Xie_Improving_Transferability_of_Adversarial_Examples_With_Input_Diversity_CVPR_2019_paper.html

Dong Y, Pang T, Su H, Zhu J (2019) Evading defenses to transferable adversarial examples by translation-invariant attacks. In: IEEE conference on computer vision and pattern recognition, CVPR 2019. Computer Vision Foundation / IEEE, Long beach, pp 4312–4321. http://openaccess.thecvf.com/content_CVPR_2019/html/Dong_Evading_Defenses_to_Transferable_Adversarial_Examples_by_Translation-Invariant_Attacks_CVPR_2019_paper.html

Lin J, Song C, He K, Wang L, Hopcroft J E (2020) Nesterov accelerated gradient and scale invariance for adversarial attacks. In: 8th International Conference on Learning Representations, ICLR 2020. https://openreview.net/forum?id=SJlHwkBYDH. OpenReview.net, Addis Ababa

Zhuang J, Tang T, Ding Y, Tatikonda S C, Dvornek N C, Papademetris X, Duncan J S (2020) Adabelief optimizer: Adapting stepsizes by the belief in observed gradients. In: Larochelle H, Ranzato M, Hadsell R, Balcan M-F, Lin H-T (eds) Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020. https://proceedings.neurips.cc/paper/2020/hash/d9d4f495e875a2e075a1a4a6e1b9770f-Abstract.html

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M S, Berg A C, Li F-F (2015) Imagenet large scale visual recognition challenge. Int J Comput Vis 115(3):211–252. https://doi.org/10.1007/s11263-015-0816-y

Biggio B, Corona I, Maiorca D, Nelson B, Srndic N, Laskov P, Giacinto G, Roli F (2013) Evasion attacks against machine learning at test time. In: Blockeel H, Kersting K, Nijssen S, Zelezný F (eds) Machine learning and knowledge discovery in databases - european conference, ECML PKDD 2013, Proceedings, part III, Lecture Notes in Computer Science, vol 8190. Springer, Prague, pp 387–402, https://doi.org/10.1007/978-3-642-40994-3_25

Zou J, Pan Z, Qiu J, Liu X, Rui T, Li W (2020) Improving the transferability of adversarial examples with resized-diverse-inputs, diversity-ensemble and region fitting. In: Vedaldi A, Bischof H, Brox T, Frahm J-M (eds) Computer vision - ECCV 2020 - 16th european conference, proceedings, part XXII, Lecture Notes in Computer Science, vol 12367. Springer, Glasgow, pp 563–579, https://doi.org/10.1007/978-3-030-58542-6_34

Chen C, Huang T (2021) Camdar-adv: Generating adversarial patches on 3d object. Int J Intell Syst 36(3):1441–1453. https://doi.org/10.1002/int.22349

Madry A, Makelov A, Schmidt L, Tsipras D, Vladu A (2018) Towards deep learning models resistant to adversarial attacks. In: 6th International Conference on Learning Representations, ICLR 2018, Conference Track Proceedings. https://openreview.net/forum?id=rJzIBfZAb. OpenReview.net, Vancouver

Papernot N, McDaniel P D, Wu X, Jha S, Swami A (2016) Distillation as a defense to adversarial perturbations against deep neural networks. In: IEEE symposium on security and privacy, SP 2016. IEEE Computer Society, San Jose, pp 582–597, https://doi.org/10.1109/SP.2016.41, (to appear in print)

Xie C, Wang J, Zhang Z, Ren Z, Yuille AL (2018) Mitigating adversarial effects through randomization. In: 6th International Conference on Learning Representations, ICLR 2018, Conference Track Proceedings. https://openreview.net/forum?id=Sk9yuql0Z. OpenReview.net, Vancouver

Guo C, Rana M, Cissé M, van der Maaten L (2018) Countering adversarial images using input transformations. In: 6th International Conference on Learning Representations, ICLR 2018, Conference Track Proceedings. https://openreview.net/forum?id=SyJ7ClWCb. OpenReview.net, Vancouver

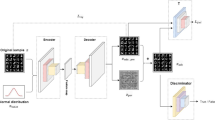

Samangouei P, Kabkab M, Chellappa R (2018) Defense-gan: Protecting classifiers against adversarial attacks using generative models. In: 6th International Conference on Learning Representations, ICLR 2018. https://openreview.net/forum?id=BkJ3ibb0-. OpenReview.net, Vancouver

Liu Z, Liu Q, Liu T, Xu N, Lin X, Wang Y, Wen W (2019) Feature distillation: Dnn-oriented JPEG compression against adversarial examples. In: IEEE conference on computer vision and pattern recognition, CVPR 2019. Computer Vision Foundation / IEEE, Long Beach, pp 860–868. http://openaccess.thecvf.com/content_CVPR_2019/html/Liu_Feature_Distillation_DNN-Oriented_JPEG_Compression_Against_Adversarial_Examples_CVPR_2019_paper.html

Mao C, Gupta A, Nitin V, Ray B, Song S, Yang J, Vondrick C (2020) Multitask learning strengthens adversarial robustness. In: Vedaldi A, Bischof H, Brox T, Frahm J-M (eds) Computer vision - ECCV 2020 - 16th european conference, Proceedings, part II, Lecture Notes in Computer Science, vol 12347. Springer, Glasgow, pp 158–174, https://doi.org/10.1007/978-3-030-58536-5_10

Cohen JM, Rosenfeld E, Kolter JZ (2019) Certified adversarial robustness via randomized smoothing. In: Chaudhuri K, Salakhutdinov R (eds) Proceedings of the 36th international conference on machine learning, ICML 2019, Proceedings of Machine Learning Research, vol 97. PMLR, Long Beach, pp 1310–1320. http://proceedings.mlr.press/v97/cohen19c.html

Tramèr F, Kurakin A, Papernot N, Goodfellow IJ, Boneh D, McDaniel PD (2018) Ensemble adversarial training: Attacks and defenses. In: 6th International Conference on Learning Representations, ICLR 2018, Conference Track Proceedings. https://openreview.net/forum?id=rkZvSe-RZ. OpenReview.net, Vancouver

Liao F, Liang M, Dong Y, Pang T, Hu X, Zhu J (2018) Defense against adversarial attacks using high-level representation guided denoiser. In: 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018. IEEE Computer Society, Salt Lake City, pp 1778–1787. http://openaccess.thecvf.com/content_cvpr_2018/html/Liao_Defense_Against_Adversarial_CVPR_2018_paper.html

Jia X, Wei X, Cao X, Foroosh H (2019) Comdefend: An efficient image compression model to defend adversarial examples. In: IEEE conference on computer vision and pattern recognition, CVPR 2019. Computer Vision Foundation / IEEE, Long Beach , pp 6084–6092. http://openaccess.thecvf.com/content_CVPR_2019/html/Jia_ComDefend_An_Efficient_Image_Compression_Model_to_Defend_Adversarial_Examples_CVPR_2019_paper.html

Naseer M, Khan S, Hayat M, Khan F S, Porikli F (2020) A self-supervised approach for adversarial robustness. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020. IEEE, Seattle, pp 259–268, https://doi.org/10.1109/CVPR42600.2020.00034, (to appear in print)

Kingma DP, Ba J (2015) Adam: A method for stochastic optimization. In: Bengio Y, LeCun Y (eds) 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego. 1412.6980

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016. IEEE Computer Society, Las Vegas, pp 2818–2826, https://doi.org/10.1109/CVPR.2016.308, (to appear in print)

Szegedy C, Ioffe S, Vanhoucke V, Alemi A A (2017) Inception-v4, inception-resnet and the impact of residual connections on learning. In: Singh S P, Markovitch S (eds) Proceedings of the thirty-first AAAI conference on artificial intelligence. AAAI Press, San Francisco, pp 4278–4284. http://aaai.org/ocs/index.php/AAAI/AAAI17/paper/view/14806

He K, Zhang X, Ren S, Sun J (2016) Identity mappings in deep residual networks. In: Leibe B, Matas J, Sebe N, Welling M (eds) Computer vision - ECCV 2016 - 14th european conference, Proceedings, part IV, Lecture Notes in Computer Science, vol 9908. Springer, Amsterdam, pp 630–645. https://doi.org/10.1007/978-3-319-46493-0_38

Liu Y, Chen X, Liu C, Song D (2017) Delving into transferable adversarial examples and black-box attacks. In: 5th International Conference on Learning Representations, ICLR 2017, Conference Track Proceedings. https://openreview.net/forum?id=Sys6GJqxl. OpenReview.net, Toulon

Acknowledgements

This work was supported by the National Key Research and Development Program of China under Grant no. 2017YFB0801900.

Author information

Contributions

Bo Yang and Hengwei Zhang contributed equally to this work.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A. Details of Algorithms

The CI-AB-SI-TI-DIM attack is summarized in Algorithm 3. Removing S(⋅) in step 4 of Algorithm 3 yields the CI-AB-TI-DIM attack, and removing T(⋅; p) in steps 4 and 6 yields the CI-AB-SI-TIM attack. Under different parameter settings, our method can also be related to the family of Fast Gradient Sign Methods, which illustrates the flexibility and convenience of the proposed method.
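As a rough illustration of how such variants can share one code path, the sketch below toggles two input transformations with flags. It is not Algorithm 3. `S` is assumed to produce scale copies x / 2^i, as in scale-invariant attacks. `T` is a simplified stand-in for the stochastic diverse-input transform: a random one-pixel shift applied with probability p. The order of composition is also an assumption, and the crop, AdaBelief and translation-invariant components are omitted. All function and variable names are hypothetical.

```python
import numpy as np

def S(x, n_scales=3):
    """Assumed scale copies x / 2**i, for i = 0 .. n_scales - 1."""
    return [x / 2 ** i for i in range(n_scales)]

def T(x, p, rng):
    """Stand-in stochastic transform: with probability p, shift by up to one pixel."""
    if rng.random() >= p:
        return x
    h, w = x.shape
    dy, dx = (int(v) for v in rng.integers(0, 3, size=2))
    padded = np.pad(x, ((dy, 2 - dy), (dx, 2 - dx)))  # zero padding
    return padded[1:1 + h, 1:1 + w]                   # crop back to the input size

def build_inputs(x, rng, use_scale, use_diverse, p=0.5, n_scales=3):
    """Return the transformed copies whose gradients an attack would average."""
    copies = S(x, n_scales) if use_scale else [x]
    if use_diverse:
        copies = [T(c, p, rng) for c in copies]
    return copies

# Each named variant is the full pipeline with one transformation switched off.
VARIANTS = {
    "CI-AB-SI-TI-DIM": dict(use_scale=True, use_diverse=True),
    "CI-AB-TI-DIM": dict(use_scale=False, use_diverse=True),   # S removed
    "CI-AB-SI-TIM": dict(use_scale=True, use_diverse=False),   # T removed
}

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.arange(16.0).reshape(4, 4)
    for name, flags in VARIANTS.items():
        print(name, "->", len(build_inputs(x, rng, **flags)), "input copies")
```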

About this article

Cite this article

Yang, B., Zhang, H., Li, Z. et al. Adversarial example generation with adabelief optimizer and crop invariance. Appl Intell 53, 2332–2347 (2023). https://doi.org/10.1007/s10489-022-03469-5

DOI: https://doi.org/10.1007/s10489-022-03469-5