Abstract

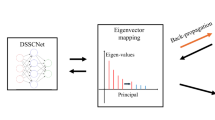

Graph-based dimensionality reduction methods have attracted much attention because they have been applied successfully to many practical problems, such as digital images and information retrieval. These methods face two main challenges: how to choose proper neighbors for graph construction, and how to make use of both global and local information during dimensionality reduction. In this paper, we tackle both challenges by presenting an improved graph optimization approach for unsupervised dimensionality reduction. First, our method performs dimensionality reduction and graph construction simultaneously, so no affinity graph needs to be constructed beforehand. Second, by integrating the advantages of orthogonal locality preserving projections and principal component analysis, our approach takes both the local and the global information of the original data into account. Finally, we learn a sparse affinity graph by considering probabilistic neighbors, which is optimal and suitable for classification. To verify the superiority of our approach, we carry out experiments on several publicly available UCI and image data sets, and the results demonstrate its effectiveness.
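As an illustration of the probabilistic-neighbor idea mentioned above, the following is a minimal sketch and not the authors' implementation. It assumes the closed-form neighbor assignment from the adaptive-neighbors clustering formulation of Nie, Wang and Huang (2014), applied to squared Euclidean distances in the original space. In a joint scheme of the kind the abstract describes, the distances would presumably be recomputed in the projected space at each iteration. The function name `probabilistic_neighbors` and the parameter `k` are hypothetical.

```python
import numpy as np


def probabilistic_neighbors(X, k):
    """Sparse affinity matrix S: each row is a probability distribution
    over the k nearest neighbors of that sample (assumed closed form)."""
    n = X.shape[0]
    if not 1 <= k < n - 1:
        raise ValueError("k must satisfy 1 <= k < n - 1")

    # Pairwise squared Euclidean distances between the rows of X.
    sq = np.sum(X ** 2, axis=1)
    D = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    np.maximum(D, 0.0, out=D)  # clip tiny negatives caused by round-off

    S = np.zeros((n, n))
    for i in range(n):
        d = D[i].copy()
        d[i] = np.inf                   # a sample is not its own neighbor
        idx = np.argsort(d)[: k + 1]    # k nearest plus the (k+1)-th
        d_near = d[idx]
        denom = k * d_near[k] - d_near[:k].sum()
        if denom > 1e-12:
            # Closer neighbors receive larger probabilities; the
            # (k+1)-th neighbor and everything beyond it receive zero.
            S[i, idx[:k]] = (d_near[k] - d_near[:k]) / denom
        else:
            # Degenerate case: all k+1 distances are equal.
            S[i, idx[:k]] = 1.0 / k
    return S


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X_demo = rng.normal(size=(10, 3))   # synthetic data, for illustration only
    S_demo = probabilistic_neighbors(X_demo, k=3)
    print(np.round(S_demo[0], 3))
```

Each row of the returned matrix is non-negative, sums to one, and has at most `k` nonzero entries, which is one way to obtain a sparse affinity graph without a separate thresholding step.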

Acknowledgements

This work was partially supported by the Research Program of Lanzhou University of Finance and Economics (Lzufe2020B-011), the Gansu University Innovation Fund Project (2021B-147), the Open Project of the State Key Laboratory of Public Big Data (GZU-PBD2021-101), the Natural Science Foundation of Gansu Province (21JR11RA132), and the Key R&D Projects in Gansu Province (21YF5FA087).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Yang, Z., Wang, J., Li, Q. et al. Graph optimization for unsupervised dimensionality reduction with probabilistic neighbors. Appl Intell 53, 2348–2361 (2023). https://doi.org/10.1007/s10489-022-03534-z

DOI: https://doi.org/10.1007/s10489-022-03534-z