Abstract

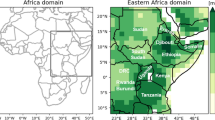

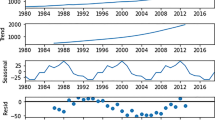

Climate data consist of multiple high-dimensional time series and multi-dimensional spatial series, including unknown series. These unknown series hide complex co-variation relation patterns. Exploring these patterns can further reveal the complex relationships between the time series and the spatial series in climate data. However, it is difficult to determine which relation patterns are present in high-dimensional climate data containing unknown, complicated multiple variables. To address this, we propose a three-layer neural network based on Brenier’s theorem. According to Brenier’s theorem, the data distribution in the background space is consistent, with the greatest probability, with the data distribution in the reconstructed feature space, which ensures that the relation patterns extracted by the proposed model are as close as possible to the original relation patterns. For the three series sets in the climate dataset (i.e., a time series set, a spatial series set containing longitude, and a spatial series set containing latitude), we adopt a compact coding scheme in which each layer encodes one series set, in order to maintain consistency between the time series and the two spatial series. Results on the ECMWF climate datasets show that the proposed method captures deeper relation patterns than competing methods: the patterns it captures outperform those of competitors in terms of regularity (for spatial series) and periodicity (for time series). We demonstrate that, with this compact coding scheme, neural networks capture deeper relation patterns from high-dimensional data containing complicated multiple variables, because the scheme can accurately filter out more non-eigenvalue information from such data.
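For readers unfamiliar with the result, the classical form of Brenier’s theorem for the quadratic transport cost can be stated as below. This is a reference statement only: the symbols $\mu$, $\nu$, $T$, $S$ and $u$ are notation introduced here for illustration, and the abstract does not specify how the proposed network instantiates them.

```latex
% Classical Brenier theorem (quadratic cost); notation is illustrative.
Let $\mu$ and $\nu$ be probability measures on $\mathbb{R}^d$ with finite
second moments, and let $\mu$ be absolutely continuous with respect to
Lebesgue measure. Then there exists a convex function
$u : \mathbb{R}^d \to \mathbb{R} \cup \{+\infty\}$ such that the map
\[
  T = \nabla u
\]
pushes $\mu$ forward to $\nu$, i.e. $T_{\#}\mu = \nu$, and $T$ is the
($\mu$-almost everywhere) unique solution of the Monge problem
\[
  T = \operatorname*{arg\,min}_{S \,:\, S_{\#}\mu = \nu}
      \int_{\mathbb{R}^d} \lvert x - S(x) \rvert^{2} \, d\mu(x).
\]
```

Under the assumption that the background-space distribution plays the role of $\mu$ and the feature-space distribution plays the role of $\nu$, the theorem guarantees a unique optimal map between the two, which is one way to read the consistency claim made above.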

Funding

This research is supported by the Science and Technology Research Program of Chongqing Municipal Education Commission of China under Grants KJQN201903003 and KJQN201903002.

Author information

Contributions

Conceptualization by Jian Zheng and Qingling Wang. Methodology by Jian Zheng and Jianfeng Wang. Experiments by Cong Liu, Hongling Liu, Jiang Li and Yuqin Deng. Original draft written by Jian Zheng.

Ethics declarations

Competing interests

The authors have no conflicts of interest in this article.

Ethics approval and consent to participate

The authors declare that this work does not involve human or animal subjects and does not collect data from human subjects.

Consent for publication

The authors consent to the availability of the data and material in this work.

About this article

Cite this article

Zheng, J., Wang, Q., Liu, C. et al. Relation patterns extraction from high-dimensional climate data with complicated multi-variables using deep neural networks. Appl Intell 53, 3124–3135 (2023). https://doi.org/10.1007/s10489-022-03737-4

DOI: https://doi.org/10.1007/s10489-022-03737-4