Abstract

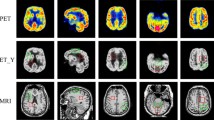

Existing image fusion methods often overlook the complementary features and saliency of the different inputs. To address these limitations, this paper proposes an unsupervised multi-level difference information replenishment fusion network for multi-modal medical image fusion (MMIF). Because some features are lost between layers, we design a multi-layer feature compensation module that makes the fused images richer and more complete. Furthermore, we develop a novel fusion strategy that preserves the perceived sharpness and intuitive features of the original images while adjusting the emphasis of the fusion. On this basis, functional images are fused in the YUV color space to avoid color distortion. In addition, a hybrid loss is introduced to train the network: \( \mathcal{L}_{fid} \) provides structural similarity as the fidelity term, \( \mathcal{L}_{lum} \) serves as the luminance-maintaining term, and \( \mathcal{L}_{sd} \) provides gradient information as the detail-preserving term. Qualitative and quantitative experiments demonstrate that our method outperforms other state-of-the-art methods.
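The YUV step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the usual practice of fusing only the luminance (Y) channel of the color functional image with the grayscale anatomical image and reusing the original chrominance (U, V) channels. The BT.601 conversion matrix, the function names, and the `max_fusion` stand-in for the trained network are all assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

# BT.601 RGB -> YUV matrix (one common convention; assumed here,
# the abstract does not say which YUV variant is used).
RGB2YUV = np.array([
    [0.299, 0.587, 0.114],
    [-0.14713, -0.28886, 0.436],
    [0.615, -0.51499, -0.10001],
])
YUV2RGB = np.linalg.inv(RGB2YUV)


def rgb_to_yuv(rgb):
    """Convert an (H, W, 3) RGB image in [0, 1] to YUV."""
    return rgb @ RGB2YUV.T


def yuv_to_rgb(yuv):
    """Convert an (H, W, 3) YUV image back to RGB."""
    return yuv @ YUV2RGB.T


def fuse_functional(anatomical, functional_rgb, fuse_luma):
    """Fuse a grayscale anatomical image with a color functional image.

    Only the Y channel goes through `fuse_luma`; U and V are copied from
    the functional image, so its color information is carried over.
    """
    yuv = rgb_to_yuv(functional_rgb)
    fused_y = fuse_luma(anatomical, yuv[..., 0])
    fused_yuv = np.concatenate([fused_y[..., None], yuv[..., 1:]], axis=-1)
    return np.clip(yuv_to_rgb(fused_yuv), 0.0, 1.0)


def max_fusion(a, b):
    """Hypothetical placeholder for the fusion network: pixel-wise maximum."""
    return np.maximum(a, b)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mri = rng.random((8, 8))      # stand-in anatomical image
    pet = rng.random((8, 8, 3))   # stand-in functional image
    fused = fuse_functional(mri, pet, max_fusion)
    print(fused.shape)
```

In practice `fuse_luma` would be replaced by the trained fusion network applied to the two single-channel inputs; the color-space handling around it stays the same.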

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grants 61966037, 61833005, and 61463052, the National Key Research and Development Project of China under Grant 2020YFA0714301, the China Postdoctoral Science Foundation under Grant 2017M621586, and the Graduate Research Innovation Project of Yunnan University under Grants 2021Y257 and 2021Z45.

About this article

Cite this article

Chen, L., Wang, X., Zhu, Y. et al. Multi-level difference information replenishment for medical image fusion. Appl Intell 53, 4579–4591 (2023). https://doi.org/10.1007/s10489-022-03819-3

DOI: https://doi.org/10.1007/s10489-022-03819-3