Abstract

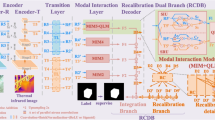

RGB-T salient object detection (SOD) combines thermal infrared and RGB images to overcome the sensitivity of RGB images to illumination in low-light conditions. However, the quality of RGB-T images can be unreliable in complex imaging scenarios, and directly fusing such low-quality images leads to sub-optimal detection results. In this paper, we propose a novel Modal Complementary Fusion Network (MCFNet) to alleviate the contamination effect of low-quality images from both global and local perspectives. Specifically, we design a modal reweight module (MRM) that evaluates the global quality of images and adaptively reweights RGB-T features by explicitly modelling the interdependencies between RGB and thermal images. Furthermore, we propose a spatial complementary fusion module (SCFM) that explores the complementary local regions between RGB-T images and selectively fuses multi-modal features. Finally, multi-scale features are fused to obtain the saliency detection result. Experiments on three RGB-T benchmark datasets demonstrate that MCFNet achieves outstanding performance compared with the latest state-of-the-art methods. It also achieves competitive results on RGB-D SOD tasks, which demonstrates the generalization ability of our method. The source code is released at https://github.com/dotaball/MCFNet.
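To make the global-then-local idea concrete, the following is a minimal NumPy sketch of the two fusion steps. It is not the MCFNet implementation: the function names `modal_reweight` and `spatial_fuse`, the use of a pooled mean as a quality score, and the difference-based sigmoid gate are all assumptions chosen for illustration, whereas the actual MRM and SCFM are learned modules whose details are given in the paper and the released code.

```python
import numpy as np

def modal_reweight(rgb, thermal):
    """Global step: scale each modality by a softmax over pooled descriptors.

    rgb, thermal: feature maps of shape (C, H, W).
    The pooled mean is a hypothetical stand-in for a learned quality score.
    """
    scores = np.array([rgb.mean(), thermal.mean()])
    exp = np.exp(scores - scores.max())       # numerically stable softmax
    w_rgb, w_t = exp / exp.sum()
    return w_rgb * rgb, w_t * thermal, (w_rgb, w_t)

def spatial_fuse(rgb, thermal):
    """Local step: per-pixel gate that blends the two modalities."""
    # Gate from the channel-averaged difference (assumption; a real
    # module would predict this gate with learned convolutions).
    diff = (rgb - thermal).mean(axis=0, keepdims=True)   # shape (1, H, W)
    gate = 1.0 / (1.0 + np.exp(-diff))                    # sigmoid, in (0, 1)
    return gate * rgb + (1.0 - gate) * thermal

# Random features stand in for backbone outputs at a single scale.
rng = np.random.default_rng(0)
rgb_feat = rng.random((8, 4, 4))
thermal_feat = rng.random((8, 4, 4))

rgb_w, thermal_w, weights = modal_reweight(rgb_feat, thermal_feat)
fused = spatial_fuse(rgb_w, thermal_w)
```

Because the two global weights sum to one and the spatial gate lies in (0, 1), the fused map is a convex combination of the reweighted inputs at every position, so a low-scoring modality is attenuated rather than discarded outright.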

Acknowledgements

This work is supported by the National Natural Science Foundation of China (51805078), the Fundamental Research Funds for the Central Universities (N2103011), and the Central Guidance on Local Science and Technology Development Fund (2022JH6/100100023).

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

About this article

Cite this article

Ma, S., Song, K., Dong, H. et al. Modal complementary fusion network for RGB-T salient object detection. Appl Intell 53, 9038–9055 (2023). https://doi.org/10.1007/s10489-022-03950-1

DOI: https://doi.org/10.1007/s10489-022-03950-1