Abstract

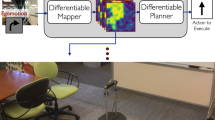

Robots often operate in built environments whose underlying structure can be exploited to help predict future observations. In this work, we present a framework based on convolutional neural networks to predict point-of-interest locations in structured environments. The proposed technique exploits the inherent structure of the environment to train a convolutional neural network, which is then leveraged to facilitate robotic search. We start by investigating environments where the full environmental structure is known, and then we extend the work to unknown environments. Experimental results show that the proposed framework reliably increases the efficiency of current search methods across multiple domains. Finally, we demonstrate that the proposed framework increases the search efficiency of a mobile robot in a real-world office environment.
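The abstract does not specify the network architecture or the search planner, so the following is only a hedged toy illustration of the general idea: a convolution over a map produces a per-cell score, the scores become a probability map over free cells, and a searcher visits cells in order of predicted probability. It is not the authors' method. Everything here is an assumption made for illustration: the 5x5 occupancy grid, the hand-set 3x3 kernel that stands in for learned weights, the softmax over free cells, and the hypothetical helpers `conv2d_same`, `predict_poi_map`, and `expected_cells_visited`.

```python
import math
from typing import Dict, List, Sequence, Tuple

Cell = Tuple[int, int]
Grid = List[List[float]]


def conv2d_same(grid: Grid, kernel: Grid) -> Grid:
    """2-D cross-correlation (what CNN layers compute) with zero padding,
    so the output has the same height and width as the input."""
    h, w = len(grid), len(grid[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(kh):
                for kx in range(kw):
                    yy, xx = y + ky - oy, x + kx - ox
                    if 0 <= yy < h and 0 <= xx < w:  # zero padding outside the map
                        acc += kernel[ky][kx] * grid[yy][xx]
            out[y][x] = acc
    return out


def predict_poi_map(occupancy: Grid, kernel: Grid) -> Dict[Cell, float]:
    """Hypothetical stand-in for a trained CNN: one convolution gives a score
    per cell, and a softmax over free cells turns the scores into the
    probability that the point of interest lies in each free cell."""
    logits = conv2d_same(occupancy, kernel)
    free = [(y, x) for y, row in enumerate(occupancy)
            for x, v in enumerate(row) if v == 0]
    m = max(logits[y][x] for y, x in free)  # subtract max for numerical stability
    exps = {c: math.exp(logits[c[0]][c[1]] - m) for c in free}
    z = sum(exps.values())
    return {c: e / z for c, e in exps.items()}


def expected_cells_visited(order: Sequence[Cell], prob: Dict[Cell, float]) -> float:
    """Expected number of cells examined before finding the target when cells
    are checked in `order` and the target's location follows `prob`."""
    return sum((k + 1) * prob[c] for k, c in enumerate(order))


if __name__ == "__main__":
    # Hypothetical 5x5 map: 1 = wall, 0 = free space.
    occupancy = [
        [1, 1, 1, 1, 1],
        [1, 0, 0, 0, 1],
        [1, 0, 0, 0, 1],
        [1, 0, 0, 0, 1],
        [1, 1, 1, 1, 1],
    ]
    # Hand-set kernel (not learned): counts walls among the 8 neighbours.
    kernel = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
    prob = predict_poi_map(occupancy, kernel)
    guided = sorted(prob, key=prob.get, reverse=True)  # most likely cells first
    raster = sorted(prob)  # row-major sweep that ignores the prediction
    print(f"guided: {expected_cells_visited(guided, prob):.3f}")
    print(f"raster: {expected_cells_visited(raster, prob):.3f}")
```

Running the script prints a lower expected count for the guided order than for the raster sweep. Visiting cells in descending order of probability minimizes the expected number of cells examined when the predicted distribution is accurate (a rearrangement-inequality argument). The sketch ignores travel distance between cells, which a real robot planner would also have to weigh.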

Additional information

Geoffrey A. Hollinger: This work has been funded in part by the Office of Naval Research Grants N00014-17-1-2581 and N00014-14-1-0509.

About this article

Cite this article

Caley, J.A., Lawrance, N.R.J. & Hollinger, G.A. Deep learning of structured environments for robot search. Auton Robot 43, 1695–1714 (2019). https://doi.org/10.1007/s10514-018-09821-4

DOI: https://doi.org/10.1007/s10514-018-09821-4