Abstract

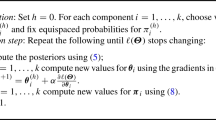

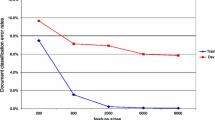

The generalized Dirichlet distribution has been shown to be a more appropriate prior than the Dirichlet distribution for naïve Bayesian classifiers. When the dimension of a generalized Dirichlet random vector is large, however, calculating the expected values of its random variables can be computationally expensive. In document classification, the dimension of the prior for a naïve Bayesian classifier equals the number of distinct words, which generally exceeds ten thousand. From the viewpoint of computational efficiency, generalized Dirichlet priors may therefore be impractical for document classification. In this paper, several properties of the generalized Dirichlet distribution are established that accelerate the calculation of the expected values of its random variables. These properties are then used to construct noninformative generalized Dirichlet priors for naïve Bayesian classifiers with multinomial models. Our experimental results on two document sets show that generalized Dirichlet priors can achieve significantly higher prediction accuracy while preserving the computational efficiency of naïve Bayesian classifiers.
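
To illustrate why computing expected values can be expensive and how the cost can be kept linear in the vocabulary size, here is a minimal sketch. It is not the paper's method. It assumes the Connor and Mosimann construction, in which X_j = Z_j (1 - Z_1) ... (1 - Z_{j-1}) with independent Z_j ~ Beta(alpha_j, beta_j), so that E[X_j] = alpha_j/(alpha_j + beta_j) multiplied by the product of beta_m/(alpha_m + beta_m) over m < j. Evaluating each product from scratch costs O(k^2) for k words, whereas a running product costs O(k). The sketch also assumes the standard conjugate update for multinomial counts: alpha_j + x_j and beta_j + (x_{j+1} + ... + x_{k+1}). The function names are hypothetical. The uniform Dirichlet prior (Laplace smoothing) is used only as an example and is not the noninformative generalized Dirichlet prior constructed in the paper.

```python
from typing import List, Sequence, Tuple


def dirichlet_as_gd(a: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Hypothetical helper: rewrite Dirichlet(a_1..a_{k+1}) as GD(alpha, beta).

    alpha_j = a_j and beta_j = a_{j+1} + ... + a_{k+1} for j = 1..k.
    """
    k = len(a) - 1
    alpha = [float(x) for x in a[:k]]
    beta: List[float] = []
    tail = float(sum(a))
    for x in a[:k]:
        tail -= x          # tail is now a_{j+1} + ... + a_{k+1}
        beta.append(tail)
    return alpha, beta


def gd_posterior(alpha: Sequence[float], beta: Sequence[float],
                 counts: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Conjugate update of a GD prior with k+1 multinomial word counts (assumed form)."""
    if len(alpha) != len(beta) or len(counts) != len(alpha) + 1:
        raise ValueError("need k alphas, k betas and k+1 counts")
    post_alpha: List[float] = []
    post_beta: List[float] = []
    tail = float(sum(counts))
    for a, b, x in zip(alpha, beta, counts):
        tail -= x          # counts of all categories after j, including k+1
        post_alpha.append(a + x)
        post_beta.append(b + tail)
    return post_alpha, post_beta


def gd_expected_values(alpha: Sequence[float], beta: Sequence[float]) -> List[float]:
    """E[X_1], ..., E[X_{k+1}] in O(k) time using a running product."""
    means: List[float] = []
    remaining = 1.0        # product of beta_m / (alpha_m + beta_m) for m < j
    for a, b in zip(alpha, beta):
        means.append(remaining * a / (a + b))
        remaining *= b / (a + b)
    means.append(remaining)  # E[X_{k+1}] = 1 - sum of the others
    return means


if __name__ == "__main__":
    # Three-word vocabulary, uniform prior, observed counts 3, 0, 1.
    alpha, beta = dirichlet_as_gd([1.0, 1.0, 1.0])
    pa, pb = gd_posterior(alpha, beta, [3, 0, 1])
    print(gd_expected_values(pa, pb))  # approximately [4/7, 1/7, 2/7]
```

Because a Dirichlet prior is a special case of the generalized Dirichlet, the posterior means in the example match the familiar Laplace estimates (x_j + 1)/(n + k + 1). A general generalized Dirichlet prior is handled by the same single pass, which keeps the per-class cost linear in the number of distinct words.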




Additional information

Responsible editor: Dr. Bing Liu.


About this article

Cite this article

Wong, TT. Generalized Dirichlet priors for Naïve Bayesian classifiers with multinomial models in document classification. Data Min Knowl Disc 28, 123–144 (2014). https://doi.org/10.1007/s10618-012-0296-4
