Abstract

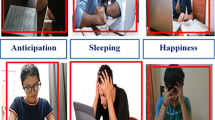

Autism spectrum disorder (ASD) is a heterogeneous mental disorder that is notoriously difficult to diagnose, especially in children. Current diagnosis relies purely on behavioural observation of symptoms, which is prone to misdiagnosis. Several hybrid methods have been explored, but they still need improvement in prediction and diagnosis before the field can move towards intelligent and accurate diagnosis. The main objective of this research was to develop new diagnosis software that integrates a novel fuzzy hybrid deep convolutional neural network with the fusion of facial expressions and human gait features extracted from input video sequences. The algorithm was trained and validated on datasets such as Kaggle FER2013 and Karolinska Directed Emotional Faces (KDEF), together with real-time test scenarios, and evaluated in terms of accuracy, recall and F1-score. The proposed deep learning model outperforms other state-of-the-art methods, improving prediction accuracy by up to 30% and reaching a maximum accuracy of 95%. The model also offers advantages in prediction time. Speech therapists, teachers, caretakers, and parents can use the software as a technological tool when working with children with ASD.
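As an illustration of the evaluation metrics named in the abstract, the following minimal sketch computes accuracy, recall and F1-score for a binary classifier from true and predicted labels. It is not the authors' evaluation code; the function name `classification_metrics` and the label convention (1 for the positive class) are assumptions made for this example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for binary labels.

    Hypothetical helper for illustration only; `positive` marks the
    label treated as the positive class.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    # Count the four cells of the confusion matrix.
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)

    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Toy labels, not data from the paper.
    y_true = [1, 1, 1, 0, 0, 0, 1, 0]
    y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
    print(classification_metrics(y_true, y_pred))
```

Reporting recall and F1 alongside accuracy matters in screening settings, because accuracy alone can look high when one class dominates the data.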

About this article

Cite this article

Saranya, A., Anandan, R. FIGS-DEAF: an novel implementation of hybrid deep learning algorithm to predict autism spectrum disorders using facial fused gait features. Distrib Parallel Databases 40, 753–778 (2022). https://doi.org/10.1007/s10619-021-07361-y

DOI: https://doi.org/10.1007/s10619-021-07361-y