Abstract

Evidence from empirical studies suggests that mobile applications are not as thoroughly tested as their desktop counterparts. In particular, GUI testing is generally limited. Like web-based applications, mobile apps suffer from GUI testing fragility, i.e., GUI test classes failing or needing interventions because of modifications in the application under test (AUT) or in its GUI arrangement and definition. The objective of our study is to examine the diffusion of test classes created with a set of popular GUI Automation Frameworks for Android apps, the amount of changes required to keep test classes up to date, and the amount of code churn in existing test suites, along with the underlying modifications in the AUT that caused such changes. We defined 12 metrics to characterize the evolution of test classes and test methods, and a taxonomy of 28 possible causes for changes to test code. To perform our experiments, we selected six widely used open-source GUI Automation Frameworks for Android apps. We evaluated the diffusion of the tools by mining the GitHub repositories featuring them, and computed our set of metrics on the projects. Applying the Grounded Theory technique, we then manually analyzed diff files of test classes written with the selected tools, to build from the ground up a taxonomy of causes for modifications of test code. We found that none of the considered GUI automation frameworks achieved a major diffusion among open-source Android projects available on GitHub. For projects featuring tests created with the selected frameworks, we found that test suites had to be modified often: about 8% of developers’ modified LOCs belonged to test code, and a relevant portion (around 50% on average) of those modifications was induced by modifications in GUI definition and arrangement. Test code written with GUI automation frameworks proved to need significant interventions during the lifespan of a typical Android open-source project. This can be seen as an obstacle for developers to adopt this kind of test automation. The evaluations and measurements of the maintenance needed by test code written with GUI automation frameworks, and the taxonomy of modification causes, can serve as a benchmark for developers, and as the basis for the formulation of actionable guidelines and the development of automated tools to help mitigate the issue.

Notes

The sample and the evaluations based on the examined Java classes are available at the following URL: http://softeng.polito.it/coppola/precision_evaluations.csv

Additional information

Communicated by: David Bowes, Emad Shihab, and Burak Turhan

Appendices

Appendix A: Absolute Number of Modification Causes

Table 8 shows the absolute number of occurrences of the categories of modification causes among the examined diff files; each column shows the number of occurrences for the set of diff files associated with a given GUI Automation Framework.

Appendix B: Running Sample of Metric Computations

To provide a sample of the metric computations, we report all the intermediate and final measures for a small project from the sample that we considered, namely WheresMyBus/android. The project features test classes that are attributable to the Espresso GUI Automation Framework. During the lifespan of the app, four different test classes are identified. The GitHub repository has a history of six distinct tagged releases, including master.

Table 9 shows all the measures computed for the six distinct releases of the project. As detailed in the Procedure section, all those metrics are obtained through (i) searches in the .java source files that are associated with the considered GUI Automation Framework (in this case, all .java files containing the keyword “Espresso”); (ii) examination of the differences between the same files in consecutive releases of the project; (iii) examination of the methods that are featured by each test class in all releases of the project. In the table, the symbol “-” is used when a metric is not defined for a given release. This happens, for instance, in the transition between release 1.4.0 and master, where no modifications are performed in the whole project (hence, Pdiff = 0). In this case, the MRTL metric is not defined. All the derived metrics that require a comparison with the amount of code, classes or methods of the previous release are not defined for the first tagged release of the project.
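The keyword search of step (i) and the handling of undefined metrics can be sketched as follows. This is a minimal illustration, not the tooling used in the study: the function names are hypothetical, the sources are passed in as an in-memory mapping rather than read from a repository, and the treatment of MRTL as a ratio whose denominator is Pdiff is an assumption drawn from the remark that the metric is undefined when Pdiff = 0.

```python
from typing import Dict, List, Optional


def find_test_classes(sources: Dict[str, str], keyword: str = "Espresso") -> List[str]:
    """Return the paths of .java files whose text contains the framework keyword.

    `sources` maps a file path to its content (hypothetical input format).
    """
    return sorted(
        path
        for path, text in sources.items()
        if path.endswith(".java") and keyword in text
    )


def ratio_or_undefined(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator / denominator, or None when the ratio is not defined.

    Assumption: a relative metric such as MRTL has Pdiff as its denominator,
    so it is undefined for a release transition in which Pdiff = 0.
    """
    if denominator == 0:
        return None
    return numerator / denominator


def show(value: Optional[float]) -> str:
    """Render a metric the way the table does: '-' when it is not defined."""
    return "-" if value is None else f"{value:.2f}"
```

Returning `None` instead of 0 keeps an undefined metric distinguishable from one that is defined and equal to zero, which matters when averaging over releases.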

Table 10 shows statistics about the test classes that are featured by the examined project during its lifespan. The table columns show, for each class, the absolute path, the versions in which the class is present, the contained methods, the total and modified LOCs, and the total, added, modified and deleted methods. The project features four distinct test classes during its lifespan. The statistics collected for the classes are finally used to compute the Test Suite Volatility, i.e., the percentage of classes with at least one modification during their lifespan over the total number of classes (in the case of this project, 100%).
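The Test Suite Volatility defined above reduces to a simple percentage. The sketch below assumes that the per-class modified LOCs over the project lifespan have already been collected; the function name and the input format are hypothetical.

```python
from typing import Dict, Optional


def compute_volatility(modified_locs_per_class: Dict[str, int]) -> Optional[float]:
    """Percentage of test classes with at least one modification in their lifespan.

    `modified_locs_per_class` maps a test class path to the number of LOCs
    modified in it across all release transitions (hypothetical input format).
    Returns None when the project has no test classes.
    """
    total = len(modified_locs_per_class)
    if total == 0:
        return None
    modified = sum(1 for locs in modified_locs_per_class.values() if locs > 0)
    return 100.0 * modified / total
```

With four classes that were all modified at least once, as in the project discussed here, the function returns 100.0 regardless of how many lines changed in each class.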

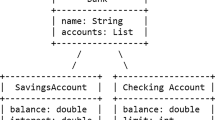

The metrics NTC, AC, DC and MC, respectively the total, added, deleted and modified test classes, are computed by a raw count of the number of .java files that are associated with the testing tool under examination. The metrics NTM, AM, DM and MM, respectively the total, added, deleted and modified test methods, are computed (i) in the case of AM and DM only, by counting the methods in added or deleted test classes; (ii) by applying the JavaParser tool on the individual test classes before and after the release transition, and examining the differences in the lists of methods. Diff files are also examined to identify the position of modified lines in test classes, in order to compute MCMM (i.e., the number of Modified Classes with Modified Methods). As an example, we report in Fig. 2 the modifications in the test class TestAlertForumActivity.java between release 1.3.0 and release 1.4.0. It is evident from the diff file that a single test method is modified in the release transition, and that of the 7 modified test LOCs are outside test methods. Since one of its methods is modified, the class counts toward the MCMM metric.
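The class-level and method-level counts for one release transition can be sketched with set operations. This is a simplified illustration under stated assumptions: method lists are taken as already extracted (the study uses JavaParser for that step), a method is considered modified when its body text differs between releases (the study instead locates modified lines through diff files), and all function names and input formats are hypothetical.

```python
from typing import Dict

# Hypothetical input formats:
#   Sources: test class path -> full file text
#   Methods: test class path -> {method name: method body}
Sources = Dict[str, str]
Methods = Dict[str, Dict[str, str]]


def class_metrics(prev: Sources, curr: Sources) -> Dict[str, int]:
    """Count total, added, deleted and modified test classes for a transition."""
    common = prev.keys() & curr.keys()
    return {
        "NTC": len(curr),
        "AC": len(curr.keys() - prev.keys()),
        "DC": len(prev.keys() - curr.keys()),
        "MC": sum(1 for path in common if prev[path] != curr[path]),
    }


def method_metrics(prev: Methods, curr: Methods) -> Dict[str, int]:
    """Count total, added, deleted and modified test methods, plus MCMM."""
    # Flatten to (class path, method name) -> body so methods in added or
    # deleted classes are counted as added or deleted methods as well.
    prev_m = {(c, m): body for c, ms in prev.items() for m, body in ms.items()}
    curr_m = {(c, m): body for c, ms in curr.items() for m, body in ms.items()}
    modified = {k for k in prev_m.keys() & curr_m.keys() if prev_m[k] != curr_m[k]}
    return {
        "NTM": len(curr_m),
        "AM": len(curr_m.keys() - prev_m.keys()),
        "DM": len(prev_m.keys() - curr_m.keys()),
        "MM": len(modified),
        # Classes that contain at least one modified method.
        "MCMM": len({class_path for class_path, _ in modified}),
    }
```

Keeping the two functions separate reflects the distinction drawn in the text: a class can be modified outside its test methods, so MC is computed on whole files while MM and MCMM depend on the method lists.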

Appendix C: Filtering Procedure

Cite this article

Coppola, R., Morisio, M., Torchiano, M. et al. Scripted GUI testing of Android open-source apps: evolution of test code and fragility causes. Empir Software Eng 24, 3205–3248 (2019). https://doi.org/10.1007/s10664-019-09722-9

DOI: https://doi.org/10.1007/s10664-019-09722-9