Abstract

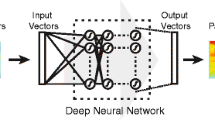

Voice conversion is an important problem in audio signal processing. Its goal is to transform the speech signal of a source speaker so that it sounds as if it had been uttered by a target speaker. This paper contributes a new methodology for modeling the relationship between two sets of spectral envelopes. The proposed systems work by: (1) cascading deep neural networks (DNNs) and Gaussian mixture models (GMMs) to construct DNN–GMM and GMM–DNN–GMM models that learn a global mapping between the cepstral vectors of the two speakers; and (2) using a new spectral synthesis process that combines the cascaded cepstrum predictors with excitation and phase extracted from the target training space, which is encoded as a KD-tree. In the experiments, the proposed methods markedly improve the intelligibility, quality, and naturalness of the converted speech compared with stimuli obtained by baseline conversion methods. Extracting the excitation and phase from the target training space preserves the target speaker's identity.
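To make the KD-tree step concrete, here is a minimal sketch of a nearest-neighbour lookup over target training frames. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not say how the tree is keyed or queried, so the sketch assumes that each target frame is indexed by its cepstral vector, that the query is a predicted cepstral vector, and that closeness is Euclidean. The names `build`, `nearest`, `target_cepstra`, and `target_excitation_phase` are hypothetical, and the two-dimensional vectors are toy data.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Node:
    point: Sequence[float]          # stored target cepstral vector (assumed key)
    index: int                      # index of the training frame it came from
    axis: int                       # dimension used to split at this node
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def build(points, indices=None, depth=0):
    """Recursively build a KD-tree, splitting on the median along each axis in turn."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return None
    axis = depth % len(points[0])
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = len(indices) // 2
    i = indices[mid]
    return Node(points[i], i, axis,
                build(points, indices[:mid], depth + 1),
                build(points, indices[mid + 1:], depth + 1))


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(node, query, best: Optional[Tuple[float, int]] = None):
    """Return (squared_distance, frame_index) of the stored vector closest to query."""
    if node is None:
        return best
    d = sq_dist(node.point, query)
    if best is None or d < best[0]:
        best = (d, node.index)
    diff = query[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, query, best)
    # Visit the far side only if the splitting plane is closer than the best match.
    if diff * diff < best[0]:
        best = nearest(far, query, best)
    return best


# Toy data (hypothetical): four target frames with 2-D "cepstral" keys and
# placeholder labels standing in for the stored excitation and phase.
target_cepstra = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.5), (-1.0, 2.0)]
target_excitation_phase = ["frame0", "frame1", "frame2", "frame3"]

tree = build(target_cepstra)
predicted_cepstrum = (1.8, 0.6)     # stands in for a cepstrum predictor output
dist2, frame = nearest(tree, predicted_cepstrum)
selected = target_excitation_phase[frame]
```

In this toy setup the lookup selects the frame whose key is `(2.0, 0.5)`. A tree of this kind answers each query without scanning every training frame, which is the usual reason for preferring it to a linear search when the target training space is large.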

Cite this article

Ben Othmane, I., Di Martino, J. & Ouni, K. A novel voice conversion approach using cascaded powerful cepstrum predictors with excitation and phase extracted from the target training space encoded as a KD-tree. Int J Speech Technol 22, 1007–1019 (2019). https://doi.org/10.1007/s10772-019-09643-4

DOI: https://doi.org/10.1007/s10772-019-09643-4