Abstract

In this article we present Supervised Semantic Indexing, which defines a class of nonlinear (quadratic) models that are discriminatively trained to directly map from the word content in a query-document or document-document pair to a ranking score. Like Latent Semantic Indexing (LSI), our models take account of correlations between words (synonymy, polysemy). However, unlike LSI, our models are trained from a supervised signal directly on the ranking task of interest, which we argue is the reason for our superior results. As the query and target texts are modeled separately, our approach is easily generalized to different retrieval tasks, such as cross-language retrieval or online advertising placement. Dealing with models on features for all pairs of words is computationally challenging. We propose several improvements to our basic model for addressing this issue, including low rank (but diagonal preserving) representations, correlated feature hashing and sparsification. We provide an empirical study of all these methods on retrieval tasks based on Wikipedia documents as well as an Internet advertisement task. We obtain state-of-the-art performance while providing realistically scalable methods.

1 Introduction

Ranking text documents given a text-based query is one of the key tasks in information retrieval. A typical solution is to: (a) embed the problem in a feature space, e.g. model queries and target documents using a vector representation; and then (b) choose (or learn) a similarity metric that operates in this vector space. Ranking is then performed by sorting the documents based on their similarity score with the query.

For example, a classical vector space model, see e.g. (Baeza-Yates et al. 1999), uses weighted word counts (e.g. via tf-idf) as the feature space, and the cosine similarity for ranking. In this case, the model is chosen by hand and no machine learning is involved. This type of model often performs remarkably well, but suffers from the fact that only exact matches of words between query and target texts contribute to the similarity score. That is, words are considered to be independent, which is clearly a false assumption.

Latent Semantic Indexing (Deerwester et al. 1990), and related methods such as pLSA and LDA (Hofmann 1999; Blei et al. 2003), are unsupervised methods that choose a low dimensional feature representation of “latent concepts” where words are no longer independent. They are trained with reconstruction objectives, either based on mean squared error (LSI) or likelihood (pLSA, LDA). These models, being unsupervised, are still agnostic to the particular task of interest. Supervised LDA (sLDA) (Blei et al. 2007) has been proposed where a set of auxiliary labels are trained on jointly with the unsupervised task. However, the supervised task is not a task of learning to rank because the supervised signal is at the document level and is query independent.

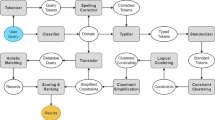

In this article we propose Supervised Semantic Indexing (SSI), which defines a class of models that can be trained on a supervised signal (i.e., labeled data) to provide a ranking of a database of documents given a query. This signal is defined at the (query, documents) level and can either be point-wise (for instance, the relevance of the document to the query) or pairwise (a given document is better than another for a given query). In this work, we focus on pairwise preferences. For example, if one has click-through data yielding query-target relationships, one can use this to train these models to perform well on this task (Joachims 2002). Or, if one is interested in finding documents related to a given query document, one can use known hyperlinks to learn a model that performs well on this task (Grangier et al. 2005). Moreover, our approach can model queries and documents separately, which can accommodate differing word distributions between documents and queries. This is essential in the case of cross-language retrieval (Grefenstette 1998), where queries and documents are in different languages. The approach can also be applied to other problems, for instance matching questions and answers (Voorhees et al. 2005).

Learning to rank as a supervised task is not a new subject. However, most methods and models have typically relied on optimizing over only a few hand-constructed features, e.g. based on existing vector space models such as tf-idf, the title, URL, PageRank and other information, see e.g. (Joachims 2002; Burges et al. 2005). Our work is orthogonal to those works, as it presents a way of learning a model for query and target texts by considering features generated by all pairs of words between the two texts (Footnote 1). The difficulty here is that such feature spaces are very large, and we present several models that deal with memory, speed and capacity control issues. In particular we propose constraints on our model that are diagonal preserving but otherwise low rank, a direct sparsity constraint on the model, and a technique of hashing features (sharing weights) based on their correlation, called correlated feature hashing (CFH). In fact, all of our proposed methods can be used in conjunction with other features and methods explored in previous work for further gains.

We show experimentally on retrieval tasks derived from Wikipedia that our method strongly outperforms word-feature based models such as tf-idf vector space models, LSI and other baselines on document-document, query-document and cross-language retrieval tasks. Finally, we give results on an Internet advertising task using proprietary data from an online advertising company.

The rest of this article is organized as follows. In Sect. 2 we describe our method, Sect. 3 discusses prior work, Sect. 4 describes the experimental study of our method, and Sect. 5 concludes.

2 Supervised semantic indexing

The goal of our work is to learn a similarity function f(q, d) between a query q and a document d, which will be used in ranking. Without loss of generality, we take the ranking framework of “pairwise preference” (Herbrich et al. 2000). That is, given a set of tuples \({\mathcal R}\) (labeled data), where each tuple contains a query q, a relevant document d + and an irrelevant (or lower ranked) document d −, we would like to choose function f(q, d) such that f(q, d +) > f(q, d −), expressing that d + should be ranked higher than d −.

This section is organized as follows. In Sect. 2.1 we present the basic model of SSI, which is essentially a perceptron on quadratic word features. To the best of our knowledge, we are the first to consider such features using this model. For scalability and model capacity control, we propose in Sect. 2.2 several improvements to this model. Namely, in Sect. 2.2.1 we propose a novel asymmetric low rank approximation, which enables separate modeling of query and documents, yielding improved scalability and testing performance. In Sect. 2.2.2 we study a sparsified version of the basic model. In addition, we propose a novel feature hashing scheme in Sect. 2.2.3 to reduce the number of features in a way more meaningful than “Hash Kernels” (Shi et al. 2009). Section 2.3 discusses the training of all models. Section 2.4 presents several applications of SSI, which will be further investigated in experiments (Sect. 4).

2.1 Basic model

Let us denote the set of documents in the corpus as \(\{ d_t\}_{t=1}^\ell \subset {{\mathbb{R}}}^{\mathcal D} \) and a query text as \(q \in {{\mathbb{R}}}^{\mathcal D},\) where \({\mathcal D}\) is the dictionary size (Footnote 2), and the jth dimension of a vector indicates the frequency of occurrence of the jth word, e.g. using the tf-idf weighting and then normalizing to unit length (Baeza-Yates et al. 1999).

The models we propose are all special cases of the following type of model:

\[f(q,d) = q^\top W d = \sum_{i,j=1}^{\mathcal D} q_i W_{ij} d_j \qquad (1)\]

where f(q, d) is the score between a query q and a given document d, and \(W \in {{\mathbb{R}}}^{{\mathcal D} \times {\mathcal D}} \) is the weight matrix, which will be learned from a supervised signal. This model can capture synonymy and polysemy as it looks at all possible cross terms, and can be tuned directly for the task of interest. We do not use stemming since our model can already match words with common stems (if it is useful for the task). Note that negative correlations via negative values in the weight matrix W can also be encoded.
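As a minimal sketch of how such a score can be evaluated on sparse inputs, assuming the bilinear form \(q^\top W d\) that Sect. 2.3.1 uses for ranking: the dictionary-based sparse storage, the three-word vocabulary and the weight values below are all hypothetical and chosen only for illustration.

```python
from typing import Dict, Tuple

SparseVec = Dict[int, float]                # word index -> weight (e.g. tf-idf)
SparseMat = Dict[Tuple[int, int], float]    # (i, j) -> W_ij; absent entries are 0


def score(q: SparseVec, W: SparseMat, d: SparseVec) -> float:
    """Bilinear score sum_{i,j} q_i * W_ij * d_j over non-zero entries only."""
    total = 0.0
    for i, q_i in q.items():
        for j, d_j in d.items():
            total += q_i * W.get((i, j), 0.0) * d_j
    return total


# Hypothetical 3-word vocabulary: 0 = "car", 1 = "automobile", 2 = "banana".
W: SparseMat = {
    (0, 0): 1.0, (1, 1): 1.0, (2, 2): 1.0,  # diagonal: exact word matches
    (0, 1): 0.8, (1, 0): 0.8,               # made-up cross terms for a synonym pair
}
query = {0: 1.0}          # "car"
doc_synonym = {1: 1.0}    # "automobile"
doc_unrelated = {2: 1.0}  # "banana"

print(score(query, W, doc_synonym))    # 0.8
print(score(query, W, doc_unrelated))  # 0.0
```

With W = I alone, both documents would score 0 for this query; the off-diagonal entries are what let a document match through a related word.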

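Expressed in another way, given the pair q, d we are constructing the joint feature map:

\[\Upphi_{((i-1){\mathcal D}+j)}(q,d) = (q d^\top)_{ij} \qquad (2)\]

where \(\Upphi_s(\cdot)\) is the sth dimension in our feature space, and choosing the set of models:

\[f(q,d) = w \cdot \Upphi(q,d) \qquad (3)\]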

Note that a model taking pairs of words as features is essential here: a simple approach concatenating (q, d) into a single vector and using f(q, d) = w·[q, d] is not a viable option, as it would result in the same document ordering for any query.

We could train any standard method such as a ranking perceptron or a ranking SVM using our choice of features. However, without further modifications, this basic approach has a number of problems in terms of speed, storage space and capacity as we will now discuss.

2.1.1 Efficiency of a dense W matrix

We analyze both memory and speed considerations. Firstly, this method so far assumes that W fits in memory (unless sparsity is somehow enforced). If the dictionary size \(\mathcal D=30,000,\) then this requires 3.4 GB of RAM (assuming floats), and if the dictionary size is 2.5 Million (as it will be in our experiments in Sect. 4) this amounts to 14.5 Terabytes. The vectors q and d are sparse, so computing the score of a single query-document pair involves mn computations \(q_i W_{ij} d_j,\) where q and d have m and n non-zero terms, respectively. We have found this is reasonable for training, but may be an issue at test time (Footnote 3). Alternatively, one can compute \(v = q^\top W\) once, and then compute \(v d\) for each document. This is the same speed as a classical vector space model where the query contains \(\mathcal D\) terms, assuming W is dense. The capacity of this model is also obviously rather large. As every pair of words between query and target is modeled separately, any pair not seen during the training phase will not have its weight trained. Regularizing the weights so that unseen pairs have \(W_{ij} = 0\) is thus essential (this is discussed in Sect. 2.3). However, this is still not ideal and clearly a huge number of training examples will be necessary to train so many weights, most of which are not used for any given training pair (q, d).
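The test-time shortcut of computing \(v = q^\top W\) once per query can be sketched as follows. This is only an illustration under assumed data structures: W is stored row by row in nested dictionaries, and the function names `project_query` and `rank` are hypothetical.

```python
from typing import Dict, List

SparseVec = Dict[int, float]
RowMat = Dict[int, Dict[int, float]]   # row i -> {column j: W_ij}


def project_query(q: SparseVec, W: RowMat) -> SparseVec:
    """Compute v = q^T W once, touching only the rows where q is non-zero."""
    v: SparseVec = {}
    for i, q_i in q.items():
        for j, w_ij in W.get(i, {}).items():
            v[j] = v.get(j, 0.0) + q_i * w_ij
    return v


def rank(q: SparseVec, W: RowMat, docs: Dict[str, SparseVec]) -> List[str]:
    """Sort document names by decreasing score v . d, reusing v for every document."""
    v = project_query(q, W)

    def v_dot(d: SparseVec) -> float:
        return sum(v.get(j, 0.0) * d_j for j, d_j in d.items())

    return sorted(docs, key=lambda name: -v_dot(docs[name]))


# Toy data (made up): word 0 and word 1 are related through W.
W = {0: {0: 1.0, 1: 0.8}, 1: {0: 0.8, 1: 1.0}, 2: {2: 1.0}}
docs = {"a": {1: 1.0}, "b": {2: 1.0}, "c": {0: 0.5, 1: 0.5}}
print(rank({0: 1.0}, W, docs))  # ['c', 'a', 'b']
```

After the projection, each document costs only a sparse dot product, which is why the per-document cost matches that of a classical vector space model whose query has as many terms as v has non-zeros.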

Overall, a dense matrix W is challenging in terms of memory footprint, computation time and controlling its capacity for good generalization. In the next section we describe ways of improving over this basic approach.

2.2 Improved models

We now describe several special cases by constraining the form (1) that lead to proposals for improved models in terms of accuracy and efficiency.

2.2.1 Low rank (but diagonal preserving) W matrices

An efficient scheme is to constrain W in the following way:

\[W = U^\top V + I \qquad (4)\]

Here, U and V are \(N \times {\mathcal D} \) matrices. This induces an N-dimensional “latent concept” space in a similar way to LSI. This similarity is the main inspiration for naming this class of models “Supervised Semantic Indexing”. However, in this paper we use the name to refer to any method that performs supervised learning to rank using word-word relationships based on their semantics related to the task, whether low rank or not.

Its differences from LSI should nevertheless be highlighted:

- Most importantly, it is trained from a supervised signal using preference relations (ranking constraints).

- Further, U and V differ, so it does not assume the query and target document should be embedded in the latent space in the same way. This can be suitable when the query text distribution is very different from the document text distribution, such as the typical case of brief queries and longer documents. The extreme case of cross-language retrieval, with queries and target texts in different languages, is naturally modeled in this setup.

- Finally, the addition of the identity term means this model automatically learns the tradeoff between using the low dimensional space and a classical vector space model. This is important because the diagonal of the W matrix gives the specificity of picking out when a word co-occurs in both documents (indeed, setting W = I is equivalent to cosine similarity using tf-idf, see below). The matrix I is full rank and therefore cannot be approximated with the low rank model \(U^\top V,\) so our model combines both. Note that the weights of U and V are learnt, so one does not need a weighting parameter multiplied by I.

At the same time, the efficiency and memory footprint are as favorable as those of LSI. Typically, one caches the N-dimensional representation for each document to use at query time. Moreover, the low rank induces a form of capacity control (we have far fewer parameters to learn), which we observe leads to improved generalization ability.
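The caching idea can be illustrated with a small sketch, assuming the constraint \(W = U^\top V + I\) so that the score splits into a latent part \((Uq)\cdot(Vd)\) plus the exact-match part \(q \cdot d\). The dense list-of-rows storage, the helper names and the toy matrices are assumptions made for this example only.

```python
from typing import Dict, List

SparseVec = Dict[int, float]
Matrix = List[List[float]]   # N rows (latent dimensions) by D columns (words)


def embed(M: Matrix, x: SparseVec) -> List[float]:
    """Return the N-dimensional vector M x, using only the non-zeros of x."""
    return [sum(row[i] * x_i for i, x_i in x.items()) for row in M]


def low_rank_score(q: SparseVec, q_emb: List[float],
                   d: SparseVec, d_emb: List[float]) -> float:
    """Score q^T (U^T V + I) d from the two embeddings plus the sparse overlap."""
    latent = sum(a * b for a, b in zip(q_emb, d_emb))           # (U q) . (V d)
    exact = sum(q_i * d.get(i, 0.0) for i, q_i in q.items())    # q . d (identity term)
    return latent + exact


# Toy setup (made up): one latent concept shared by words 0 and 1.
U = [[1.0, 1.0, 0.0]]
V = [[1.0, 1.0, 0.0]]
docs = {"same": {0: 1.0}, "synonym": {1: 1.0}, "other": {2: 1.0}}
doc_cache = {name: embed(V, d) for name, d in docs.items()}   # computed once, offline

q = {0: 1.0}
q_emb = embed(U, q)                                           # computed once per query
for name, d in docs.items():
    print(name, low_rank_score(q, q_emb, d, doc_cache[name]))
# same 2.0
# synonym 1.0
# other 0.0
```

Only the N-dimensional document vectors need to be stored alongside the usual sparse index, which is where the LSI-like memory footprint comes from.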

We also highlight several other regularization variants, which are further possible ways of constraining W:

- W = I: if q and d are normalized tf-idf vectors this is equivalent to using the standard cosine similarity with no learning (and no synonymy or polysemy).

- W = D, where D is a diagonal matrix: one learns a re-weighting of tf-idf using labeled data (still no synonymy or polysemy). This is similar to a method proposed in Grangier et al. (2005).

- \({ W = U^\top U + I}:\) we constrain the model to be symmetric; the query and target document are treated in the same way.

- \({ W = U^\top V + D}:\) where D is a diagonal matrix, to be learnt. Here, we can learn a re-weighting of tf-idf at the same time as a latent concept space.

2.2.2 Sparse W matrices

If W were itself a sparse matrix, then computation of f(·) would be considerably faster, not to mention the memory savings. If the query has m non-zero terms, and any given row of W has p non-zero terms, then there are at most mp terms in \(v=q^\top W\) (compared to \(m \mathcal D\) terms for a dense W, and m terms for a classical vector space cosine).

The simplest way to do this is to apply a regularizer such as minimizing the \(L_1\)-norm of W (see, e.g. Guyon et al. 2006):

where L(·) is a function that computes the loss of the current model given a query \(q \in {\mathcal Q}\) and a labeling Y(q,d) of the relevance of the documents d with respect to q. However, in general any sparsity promoting regularizer or feature selection technique could be used.

2.2.3 Correlated feature hashing

Another way to both lower the capacity of our model and decrease its storage requirements is to share weights among features.

Hash Kernels (Random Hashing of Words). In Shi et al. (2009) the authors proposed a general technique called “Hash Kernels” where they approximate the feature representation \(\Upphi(x)\) with:

\[\bar{\Upphi}_j(x) = \sum_{i \in {\mathcal W} :\, h(i) = j} \Upphi_i(x) \qquad (5)\]

where \(h : {\mathcal W} \rightarrow \{1,\ldots,{\mathcal H}\}\) is a hash function that reduces the feature space down to \({\mathcal H}\) dimensions, while maintaining sparsity, and \({\mathcal W}\) is the set of initial feature indices. The software Vowpal Wabbit (Footnote 4) implements this idea (as a regression task) for joint feature spaces on pairs of objects, e.g. documents. In this case, the hash function used for a pair of words (s,t) is \(h(s,t) = \hbox{mod}(sP+t,{\mathcal H})\) where P is a large prime. This yields

\[\bar{\Upphi}_j(q,d) = \sum_{(s,t) \in \{1,\ldots,{\mathcal D}\}^2 :\, h(s,t) = j} \Upphi_{s,t}(q,d)\]

where \(\Upphi_{s,t}(\cdot)\) indexes the feature on the word pair (s,t), e.g. \(\Upphi_{s,t}(\cdot) = \Upphi_{((s-1){\mathcal D}+t)}(\cdot).\) This technique is equivalent to sharing weights, i.e. constraining \(W_{st} = W_{kl}\) when h(s,t) = h(k,l). In this case, the sharing is done pseudo-randomly, and collisions in the hash table generally result in sharing weights between term pairs that share no meaning in common.

Correlated Feature Hashing. We thus suggest a technique to share weights (or equivalently hash features) so that collisions actually happen for terms with close meaning. For that purpose, we first sort the words in our dictionary in frequency order, so that i = 1 is the most frequent, and \(i={\mathcal D}\) is the least frequent. For each word \(i=1, \ldots, {\mathcal D},\) we calculate its DICE coefficient (Smadja et al. 1996) with respect to each word \(j = 1, \ldots, {\mathcal F}\) among the top \({\mathcal F}\) most frequent words:

\[\hbox{DICE}(i,j) = \frac{2 \cdot \hbox{cooccur}(i,j)}{\hbox{occur}(i) + \hbox{occur}(j)}\]

where cooccur(i, j) counts the number of co-occurrences of i and j at the document or sentence level, and occur(i) is the total number of occurrences of word i. Note that these scores can be calculated from a large corpus of completely unlabeled documents. For each i, we sort the \({\mathcal F}\) scores (largest first) so that \(S_p(i) \in \{1,\ldots,{\mathcal F}\}\) corresponds to the index of the pth largest DICE score \(\hbox{DICE}(i,S_p(i)).\) We can then use the Hash Kernel approximation \(\bar{\Upphi}(\cdot)\) given in Eq. 5 relying on the “hashing” function:

\[h(i,j) = (S_1(i) - 1){\mathcal F} + S_1(j)\]

This strategy is equivalent to pre-processing our documents and replacing all the words indexed by i with \(S_1(i).\) Note that we have reduced our feature space from \({\mathcal D}^2\) features to \({\mathcal H} = {\mathcal F}^2\) features. This reduction can be important as shown in our experiments, see Sect. 4: e.g. for our Wikipedia experiments, we have \({\mathcal F} = 30,000\) as opposed to \({\mathcal D}=2.5\) Million. Typical examples of the top k matches to a word using the DICE score are given in Table 1.
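The pre-processing view of correlated feature hashing can be sketched as below. Several choices here are assumptions rather than details confirmed by the text: the DICE score is taken in its standard form 2·cooccur(i, j) / (occur(i) + occur(j)), counts are made at the document level with each word counted once per document, a word's score with itself is set to 1 so that frequent words map to themselves, and ties are broken alphabetically. The function names and the toy corpus are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple


def build_dice_ranking(docs: List[List[str]],
                       top_f: int) -> Tuple[List[str], Dict[str, List[str]]]:
    """Return the top_f most frequent words and, for every word w, those
    frequent words sorted by decreasing DICE(w, .), i.e. S_1(w), S_2(w), ..."""
    occur: Counter = Counter()
    cooccur: Counter = Counter()
    for doc in docs:
        words = sorted(set(doc))                 # document-level presence (assumption)
        occur.update(words)
        cooccur.update(combinations(words, 2))   # unordered pairs (a, b) with a < b

    frequent = sorted(occur, key=lambda w: (-occur[w], w))[:top_f]

    def dice(a: str, b: str) -> float:
        if a == b:
            return 1.0                           # a word always matches itself
        pair = (a, b) if a < b else (b, a)
        return 2.0 * cooccur[pair] / (occur[a] + occur[b])

    ranking = {w: sorted(frequent, key=lambda f: (-dice(w, f), f)) for w in occur}
    return frequent, ranking


def hash_words(doc: List[str], ranking: Dict[str, List[str]]) -> List[str]:
    """Replace every word by its most correlated frequent word S_1(word)."""
    return [ranking[w][0] for w in doc]


corpus = [
    ["car", "engine", "road"], ["automobile", "engine", "road"], ["car", "road"],
    ["banana", "fruit"], ["apple", "fruit"], ["car", "engine"],
]
frequent, ranking = build_dice_ranking(corpus, top_f=3)
print(frequent)                                      # ['car', 'engine', 'road']
print(hash_words(["automobile", "road"], ranking))   # ['engine', 'road']
```

In this toy corpus the rare word "automobile" is folded into "engine", a frequent word it co-occurs with, rather than into an arbitrary bucket as pseudo-random hashing would do.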

Moreover, we can also combine correlated feature hashing with the low rank W matrix constraint described in Sect. 2.2.1. In that case U and V become \(N \times {\mathcal F}\) matrices instead of \(N \times {\mathcal D}\) matrices, because the set of features is no longer the entire dictionary, but the first \({\mathcal F}\) words.

Correlated Feature Hashing by Multiple Binning. It is also suggested in Shi et al. (2009) to hash a feature \(\Upphi_i(\cdot)\) so that it contributes to multiple features \(\bar{\Upphi}_j(\cdot)\) in the reduced feature space. This strategy theoretically lessens the consequence of collisions. In our case, we can construct multiple hash functions from the values \(S_p(\cdot), p=1,\ldots,k,\) i.e. the top k correlated words according to their DICE scores:

\[\bar{\Upphi}_j(q,d) = \frac{1}{k} \sum_{p=1}^{k} \; \sum_{(s,t) \in \{1,\ldots,{\mathcal D}\}^2 :\, h_p(s,t) = j} \Upphi_{s,t}(q,d) \qquad (6)\]

where

\[h_p(s,t) = (S_p(s) - 1){\mathcal F} + S_p(t) \qquad (7)\]

Pseudocode for constructing the feature map \(\bar{\Upphi}(q,d)\) is given in Algorithm 1.

| Algorithm 1 Construction of the correlated feature hashing (6) |
|---|
| Initialize the \(\mathcal F\)-dimensional vectors a and b to 0. |
| for \(p=1,\ldots,k\) do |
| for all \(i=1,\ldots,\mathcal D\) such that \(q_i > 0\) or \(d_i > 0\) do |
| \(j \leftarrow S_p(i)\) |
| \(a_j \leftarrow a_j + q_i\) |
| \(b_j \leftarrow b_j + d_i\) |
| end for |
| end for |
| \(\forall i,j\in [1\ldots {\mathcal F}], \bar{\Upphi}_{((i-1){\mathcal F}+j)}(q,d) \leftarrow {\frac{1}{k}}(a b^\top)_{ij} \) |

Equation 6 defines the reduced feature space as the mean of k feature maps which are built using hashing functions based on the \(p=1,\ldots,k\) most correlated words. Equation 7 defines the hash function for a pair of words i and j using the pth most correlated words \(S_p(i)\) and \(S_p(j).\) That is, the new feature space consists of, for each word in the original document, the top k most correlated words from the set of size \({\mathcal F}\) of the most frequently occurring words. Hence, as before, there are never more than \({\mathcal H}={\mathcal F}^2\) possible features. Overall, this is in fact equivalent to pre-processing our documents and replacing all the words indexed by i with \(S_1(i),\ldots,S_k(i),\) with appropriate weights.
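A direct transcription of the steps of Algorithm 1 into Python might look as follows. It is a sketch under a few assumptions: indices are 0-based, the table S is a plain dictionary mapping each word index to its list of top-k frequent-word indices, and the resulting feature map is returned sparsely, keyed by the pair (i, j) instead of the flattened index. The name `cfh_feature_map` is hypothetical.

```python
from typing import Dict, List, Tuple

SparseVec = Dict[int, float]


def cfh_feature_map(q: SparseVec, d: SparseVec,
                    S: Dict[int, List[int]], k: int) -> Dict[Tuple[int, int], float]:
    """Follow Algorithm 1: accumulate a and b, then return (1/k) * a b^T sparsely."""
    a: SparseVec = {}
    b: SparseVec = {}
    for p in range(k):
        for i in set(q) | set(d):             # words present in q or in d
            j = S[i][p]                       # (p+1)-th most correlated frequent word
            a[j] = a.get(j, 0.0) + q.get(i, 0.0)
            b[j] = b.get(j, 0.0) + d.get(i, 0.0)
    return {
        (i, j): a_i * b_j / k
        for i, a_i in a.items() if a_i
        for j, b_j in b.items() if b_j
    }


# Made-up table: word 5 maps to frequent words 1 then 0, word 7 to 1 then 2.
S = {5: [1, 0], 7: [1, 2]}
phi = cfh_feature_map({5: 1.0}, {7: 2.0}, S, k=2)
print(sorted(phi.items()))
# [((0, 1), 1.0), ((0, 2), 1.0), ((1, 1), 1.0), ((1, 2), 1.0)]
```

Because a and b are accumulated over all k bins before the outer product is taken, a query word hashed to bin p is also paired with document words hashed to other bins, exactly as the listing's final step produces.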

Hashing n-grams. One can also use these techniques to incorporate n-gram features into the model without requiring a huge feature representation that would have no way of fitting in memory. We simply use the DICE coefficient between an n-gram i and the first \({\mathcal F}\) words \(j=1,\ldots,{\mathcal F},\) and proceed as before. In fact, our feature space size does not increase at all, and we are free to use any value of n. Typical examples of the top k matches for a 2-gram using the DICE score are given in Table 2.

2.3 Training methods

We now discuss how to train the models we have described in the previous section.

2.3.1 Learning the basic model

Given the similarity function Eq. 1, we would like to choose W such that \( q^{\top} W d^{+} > q^{\top} W d^{-},\) expressing that d + should be ranked higher than d −.

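For that purpose, we employ the margin ranking loss (Herbrich et al. 2000), which has already been used in several IR methods (Joachims 2002; Burges et al. 2005; Grangier et al. 2005), and minimize:

\[\sum_{(q,d^+,d^-) \in {\mathcal R}} \max(0, 1 - q^\top W d^+ + q^\top W d^-)\]

This optimization problem is solved through stochastic gradient descent (see, e.g. Burges et al. 2005): iteratively, one picks a random tuple and makes a gradient step for that tuple:

\[W \leftarrow W + \lambda \left(q (d^+)^\top - q (d^-)^\top\right), \quad \hbox{if } 1 - q^\top W d^+ + q^\top W d^- > 0\]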

Obviously, one should exploit the sparsity of q and d when calculating these updates. To train our model, we choose the (fixed) learning rate \(\lambda\) which minimizes the training error. We also suggest initializing the training with W = I, as this initializes the model to the same solution as a cosine similarity score. This introduces a prior expressing that the weight matrix should be close to I, considering term correlation only when it is necessary to increase the score of a relevant document, or conversely to decrease the score of a non-relevant document. Training is terminated when the error on a validation set is no longer improving (i.e. using early stopping).
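The training loop described here can be sketched as follows, assuming the standard margin ranking loss max(0, 1 − f(q, d⁺) + f(q, d⁻)) with f(q, d) = qᵀWd, whose subgradient step adds λ·q(d⁺ − d⁻)ᵀ to W whenever the margin is violated. The sparse storage that keeps only deviations from the identity, the learning rate, the number of steps and all function names are illustrative assumptions; validation-based early stopping is left out for brevity.

```python
import random
from typing import Dict, List, Tuple

SparseVec = Dict[int, float]
Weights = Dict[Tuple[int, int], float]   # stores only entries that differ from I


def w_get(W: Weights, i: int, j: int) -> float:
    """Entry W_ij, with the identity matrix as the implicit initial value."""
    return W.get((i, j), 1.0 if i == j else 0.0)


def score(q: SparseVec, W: Weights, d: SparseVec) -> float:
    return sum(q_i * w_get(W, i, j) * d_j
               for i, q_i in q.items() for j, d_j in d.items())


def sgd_step(W: Weights, q: SparseVec, d_pos: SparseVec,
             d_neg: SparseVec, lr: float) -> bool:
    """One update for one tuple; returns True if the margin was violated."""
    if 1.0 - score(q, W, d_pos) + score(q, W, d_neg) <= 0.0:
        return False
    for i, q_i in q.items():                 # only non-zero rows and columns change
        for j, d_j in d_pos.items():
            W[(i, j)] = w_get(W, i, j) + lr * q_i * d_j
        for j, d_j in d_neg.items():
            W[(i, j)] = w_get(W, i, j) - lr * q_i * d_j
    return True


def train(tuples: List[Tuple[SparseVec, SparseVec, SparseVec]],
          lr: float, steps: int, seed: int = 0) -> Weights:
    rng = random.Random(seed)
    W: Weights = {}                          # empty dict means W = I
    for _ in range(steps):
        q, d_pos, d_neg = rng.choice(tuples)  # pick a random tuple
        sgd_step(W, q, d_pos, d_neg, lr)
    return W


# Toy tuple (made up): the query word 0 should prefer word 1 over word 2.
tuples = [({0: 1.0}, {1: 1.0}, {2: 1.0})]
W = train(tuples, lr=0.1, steps=20)
print(score(tuples[0][0], W, tuples[0][1]) > score(tuples[0][0], W, tuples[0][2]))  # True
```

Each step touches at most m·(n⁺ + n⁻) entries of W, where m, n⁺ and n⁻ are the numbers of non-zeros in q, d⁺ and d⁻.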

Our method thus far is a margin ranking perceptron (Collins et al. 2001) with a particular choice of features (2). The margin perceptron has been shown to be equivalent to SVM (Collobert et al. 2004), although possessing a different training algorithm (SVM typically uses quadratic programming, while the margin perceptron uses stochastic gradient descent). The problems we study (see Sect. 4) include millions of training examples, making classical SVM training intractable. Stochastic training is highly scalable and is easy to implement for our model. The regularizing effect of the large margin term \(w^\top w\) in SVMs can be achieved by weight decay or early stopping in stochastic learning for margin perceptrons (Collobert et al. 2004). In this work, we rely on early stopping: we monitor the performance on held-out validation data during training and stop learning when validation performance stops improving. This strategy hence regularizes the model by expressing preference for weights close to the initial weights (Caruana et al. 2000; Collobert et al. 2004). Empirically we did not experience severe overfitting problems in most of our experiments, as we worked with large-sized training sets; more details can be found in Sect. 4.5.

However, we note that such optimization cannot be easily applied to probabilistic methods such as pLSA because of their normalization constraints. Recent methods like LDA (Blei et al. 2003) also suffer from scalability issues.

Researchers have also explored optimizing various alternative loss functions other than the ranking loss including optimizing normalized discounted cumulative gain (NDCG) and mean average precision (MAP) (Burges et al. 2005; Burges et al. 2006; Cao et al. 2007; Yue et al. 2007). In fact, one could use those optimization strategies to train our models instead of optimizing the ranking loss as well.

2.3.2 Learning a low rank W matrix

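When the W matrix is constrained, e.g. \(W=U^\top V + I,\) training is done in a similar way as before. In this case, the gradient steps to optimize the parameters U and V are:

\[U \leftarrow U + \lambda V (d^+ - d^-) q^\top, \quad \hbox{if } 1 - f(q,d^+) + f(q,d^-) > 0\]

\[V \leftarrow V + \lambda U q (d^+ - d^-)^\top, \quad \hbox{if } 1 - f(q,d^+) + f(q,d^-) > 0\]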

Note this is no longer a convex optimization problem. In our experiments we initialized the matrices U and V randomly using a normal distribution with mean zero and standard deviation one.
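For the low rank case, one stochastic step can be sketched as below, assuming \(f(q,d) = q^\top (U^\top V + I) d\) and the margin ranking loss, so that the steps are U ← U + λ·V(d⁺ − d⁻)qᵀ and V ← V + λ·Uq(d⁺ − d⁻)ᵀ when the margin is violated. The dense list-of-rows storage, the helper names and the learning rate are assumptions for illustration; `init_normal` follows the random initialization described above.

```python
import random
from typing import Dict, List

SparseVec = Dict[int, float]
Matrix = List[List[float]]   # N rows (latent dimensions) by D columns (words)


def init_normal(n: int, dim: int, rng: random.Random) -> Matrix:
    """Random N x D matrix with entries drawn from a normal(0, 1) distribution."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]


def embed(M: Matrix, x: SparseVec) -> List[float]:
    return [sum(row[i] * x_i for i, x_i in x.items()) for row in M]


def score(U: Matrix, V: Matrix, q: SparseVec, d: SparseVec) -> float:
    latent = sum(a * b for a, b in zip(embed(U, q), embed(V, d)))
    return latent + sum(q_i * d.get(i, 0.0) for i, q_i in q.items())


def sgd_step(U: Matrix, V: Matrix, q: SparseVec, d_pos: SparseVec,
             d_neg: SparseVec, lr: float) -> bool:
    """Update U and V in place for one tuple; True if the margin was violated."""
    if 1.0 - score(U, V, q, d_pos) + score(U, V, q, d_neg) <= 0.0:
        return False
    diff = dict(d_pos)                       # sparse d+ - d-
    for j, x in d_neg.items():
        diff[j] = diff.get(j, 0.0) - x
    u_q = embed(U, q)                        # U q, computed before U changes
    v_diff = embed(V, diff)                  # V (d+ - d-), computed before V changes
    for n in range(len(U)):
        for i, q_i in q.items():
            U[n][i] += lr * v_diff[n] * q_i
        for j, x in diff.items():
            V[n][j] += lr * u_q[n] * x
    return True


# Hand-set toy matrices (made up) so the arithmetic is easy to follow.
U = [[1.0, 0.0, 0.0]]
V = [[0.0, 0.0, 0.0]]
q, d_pos, d_neg = {0: 1.0}, {1: 1.0}, {2: 1.0}
while sgd_step(U, V, q, d_pos, d_neg, lr=0.25):
    pass
print(U, V)  # [[1.125, 0.0, 0.0]] [[0.0, 0.5, -0.5]]
```

Only the columns of U indexed by the non-zeros of q and the columns of V indexed by the non-zeros of d⁺ and d⁻ are touched, so a step costs on the order of N times the number of non-zero terms.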

2.3.3 Learning a sparse W matrix

Directly employing an \(L_1\)-norm regularizer is known to be difficult to optimize online (see, e.g. Langford et al. (2009) for an explanation and ways to deal with this problem). The use of the \(L_1\) regularizer is also hampered by the excessive cost of its validation on large scale problems, such as the ones addressed in Sect. 4. In this work, therefore, we enforce sparsity through feature selection. Potentially we could appeal to any feature selection method (see Guyon et al. 2006 for a review). Here, we used a simple, intuitive “projection” method that can be seen as a generalization of the Recursive Feature Elimination (RFE) feature selection algorithm (see Guyon et al. 2006, Chapter 5). Algorithm 2 gives the pseudo-code of this technique.

| Algorithm 2 Sparse Model Learning |
|---|
| 1. Train the model with a dense matrix W as before. |
| 2. For each row i of W, find the k active elements with the smallest values of \(\lvert W_{ij}\rvert\). Constrain these elements to equal zero, i.e. make them inactive. (Rows are chosen because, if the query has m non-zero terms and any given row of W has p non-zero terms, then there are at most mp terms in \(v = q^\top W\), compared to the m terms in a classical vector space model, as mentioned before.) |
| 3. Train the model with the constrained W matrix. |
| 4. If W contains more than p non-zero terms in each row, go back to 2. |

This algorithm is motivated by the removal of the smallest weights being linked to the smallest change in the objective function (Guyon et al. 2006) and has been shown to work well in several experimental setups. Overall, we found this scheme to be simple and efficient, while yielding good results.
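The prune-and-retrain loop of Algorithm 2 can be sketched as follows. This is an illustration under assumptions: W is stored row by row in nested dictionaries, training is abstracted as a caller-supplied `retrain` function that must only update the surviving entries, and a row is never pruned below p entries so that the loop ends with at most p non-zeros per row. The function names are hypothetical.

```python
from typing import Callable, Dict

RowMat = Dict[int, Dict[int, float]]   # row i -> {column j: W_ij}, active entries only


def prune_step(W: RowMat, k: int, p: int) -> None:
    """Deactivate up to k smallest-magnitude entries in every row that has more than p."""
    for row in W.values():
        excess = len(row) - p
        if excess <= 0:
            continue
        smallest = sorted(row, key=lambda j: abs(row[j]))[:min(k, excess)]
        for j in smallest:
            del row[j]                 # constrained to zero from now on


def sparsify(W: RowMat, k: int, p: int,
             retrain: Callable[[RowMat], RowMat]) -> RowMat:
    """Alternate pruning (step 2) and constrained retraining (step 3) until
    every row has at most p active entries (step 4)."""
    while any(len(row) > p for row in W.values()):
        prune_step(W, k, p)
        W = retrain(W)
    return W


# Toy dense model (made up); retraining is replaced by a no-op for the demo.
W = {0: {0: 1.0, 1: 0.5, 2: -0.1, 3: 0.05}, 1: {0: 0.2, 1: 1.0}}
W = sparsify(W, k=1, p=2, retrain=lambda M: M)
print(W)  # {0: {0: 1.0, 1: 0.5}, 1: {0: 0.2, 1: 1.0}}
```

In practice `retrain` would run the stochastic updates of Sect. 2.3.1 while skipping any (i, j) pair that is no longer present in the row dictionaries.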

2.3.4 Learning with feature hashing

Feature hashing simply corresponds to a different choice of feature map, depending on the hashing technique chosen. Hence the training techniques described above can be used for training with feature hashing.

2.4 Applications

2.4.1 Standard retrieval

We consider two standard retrieval models: returning relevant documents given a keyword-based query, and finding related documents with respect to a given query document, which we call the query-document and document-document tasks.

Our models naturally can be trained to solve these tasks. We note here that so far our models have only included features based on the bag-of-words model, but there is nothing stopping us adding other kinds of features as well. Typical choices include: features based on the title, body, words in bold font, the popularity of a page, its PageRank, the URL, and so on, see e.g. (Baeza-Yates et al. 1999). However, for clarity and simplicity, our experiments use a setup where the raw words are used.

2.4.2 Cross language retrieval

Cross Language Retrieval (Grefenstette 1998) is the task of retrieving documents in a target language E given a query in a different source language F (Footnote 5). Our model can naturally deal with this task without the need for machine translation because it directly learns the correspondence between the two languages using labeled data in the form of tuples \({\mathcal R}.\) The use of a non-symmetric low rank model like (4) naturally suits this task (in this case adding the identity does not make sense):

\[f(q_F,d_E) = q_F^\top (U_F^\top V_E)\, d_E \qquad (9)\]

Given additional supervised data for a standard monolingual retrieval problem, which will typically be more abundant than for the cross-lingual problem, it is also possible to regularize the solution we find by training on this task at the same time (multi-tasking) using the model:

Alternatively, it is also possible to use the model \( f(q_E,d_E) = q_E^\top (U_E^\top V_E) d_E,\) where only \(V_E\) is shared between tasks. Multi-tasking is achieved by adding the objective functions together. For stochastic gradient descent training, this amounts to performing a gradient step for one of the objectives followed by a gradient step for the other. Training (10) will act as a regularizer when training the model (9) because the two functions share the parameters \(V_E,\) so both tasks aim to find a good latent space for target documents in language E. Similarly, one can also regularize \(U_F.\)

2.4.3 Content matching

Our models can also be applied to identify two differing types of text as a matching pair, for example a sequence of text (which could be a web page or an email or a chat log) with a targeted advertisement. In this case, click-through data can provide supervision. Here, again for simplicity, we assume both text and advert are represented as words. In practice, however, other types of engineered features could be added for optimal performance.

3 Prior work

A tf-idf vector space model and LSI (Deerwester et al. 1990) are the two main baselines we will compare to. We already mentioned that pLSA (Hofmann 1999) and LDA (Blei et al. 2003) both have scalability problems; they are also not reported to generally outperform LSA and TF-IDF (Gehler et al. 2006). Moreover, in the introduction we discussed how sLDA (Blei et al. 2007) provides supervision at the document level (via a class label or regression value) and is not a task of learning to rank, whereas here we study supervision at the (query, documents) level. Wang et al. (2006) use co-occurrences of multiple types of objects to build better latent concept vectors. However, their method still falls into the “unsupervised” category.

In Grangier et al. (2005) the authors learned the weights of an orthogonal vector space model on Wikipedia links, improving over the OKAPI method. Joachims (2002) trained an SVM with hand-designed features based on the title, body, search engine rankings and the URL. Burges et al. (2005) proposed a neural network method using a similar set of features (569 in total). In contrast, we limited ourselves to body text (not using title, URL, etc.) and train on at most \({\mathcal D}^2=900\) million features.

Query Expansion, often referred to as blind relevance feedback, is another way to deal with synonyms, but requires manual tuning and does not always yield a consistent improvement (Zighelnic et al. 2008).

The authors of Grangier et al. (2008) used a model related to the ones we describe, but for the task of image retrieval, and Goel et al. (2009) also used a related (regression-based) method for advert placement. They both use the cross product space of features in the perceptron algorithm as in Eq. 2, which is implemented in the software related to these two publications, PAMIR (Footnote 6) and Vowpal Wabbit (Footnote 7). The task of document retrieval, and the use of sparse or low rank matrices or of correlated feature hashing (cf. Sect. 2.2), are not studied there. Earlier work (Berger et al. 1999) models the probability of a query given a document based on “translation probabilities”, which are the conditional probabilities of a query word given a document word. Although this algorithm shares the same intuition with our work, its lack of problem size control could lead to intractability for a large vocabulary, due to the exploding number of query-document word pairs.

Several authors (Salakhutdinov et al. 2007; Keller et al. 2005) have proposed interesting nonlinear versions of (unsupervised) LSI using neural networks and showed they outperform LSI or pLSA. However, in the case of Salakhutdinov et al. (2007) we note their method is rather slow, and a dictionary size of only 2000 was used. Finally, Sun et al. (2004) propose a “supervised” LSI for classification. This has a similar sounding title to ours, but is quite different because it is based on applying LSI to document classification rather than improving ranking via known preference relations. The authors of Gabrilovich et al. (2007) proposed “Explicit Semantic Analysis”, which represents the meaning of texts in a high-dimensional space of concepts by building a feature space derived from the human-organized knowledge of an encyclopedia, e.g. Wikipedia. In the new space, cosine similarity is applied. SSI could be applied to such feature representations as well, so that they are not agnostic to a particular supervised task.

Another related area of research is distance metric learning (Weinberger et al. 2008; Jain et al. 2008; Globerson et al. 2007). Methods like Large Margin Nearest Neighbor (LMNN) (Weinberger et al. 2008) also learn a model similar to the basic model (2.1) with the full matrix W (but not with our improvements to this model). LMNN further constrains W to be a positive semidefinite matrix. This makes the computational cost of training considerable, e.g. even after significant optimization of the learning algorithm it still takes 3.5 h to train on 60,000 examples and 169 features on a handwritten digit recognition problem. This would hence not be scalable for large scale text ranking experiments. Nevertheless, Chechik et al. compared LMNN (Weinberger et al. 2008), LEGO (Jain et al. 2008) and MCML (Globerson et al. 2007) to a stochastic gradient method with a full matrix W (the basic model (2.1)) on a small image ranking task and report in fact that the stochastic method provides both improved results and efficiency (Footnote 8).

Methods for cross-language retrieval range from first applying machine translation and then a conventional retrieval method such as LSI (Grefenstette 1998), to a direct method of applying LSI to this task called CL-LSI (Dumais et al. 1997), to using Kernel Canonical Correlation Analysis, KCCA (Vinokourov et al. 2003). While the latter is a strongly performing method, it also suffers from scalability problems and requires translated text pairs for training.

4 Experimental study

Learning a model of term correlations over a large vocabulary is a considerable challenge that requires a large amount of training data. Standard retrieval datasets like TREC (footnote 9) or LETOR (Liu et al. 2007) contain only a few hundred training queries, and are hence too small for that purpose. Moreover, some datasets only provide a few pre-processed features such as PageRank or BM25, and not the actual words. Click-through data from web search engines could provide valuable supervision. However, such data is not publicly available, and hence experiments on such data are not reproducible.

We hence conducted most experiments on Wikipedia and used links within Wikipedia to build a large scale ranking task. Thanks to its abundant, high-quality labeling and structuring, Wikipedia has been exploited in a number of applications such as disambiguation (Bunescu et al. 2006; Cucerzan 2007), text categorization (Minier et al. 2007; Hu et al. 2008), relationship extraction (Ruiz-casado et al. 2005; Chernov et al. 2006), and searching (Milne et al. 2007). Specifically, Wikipedia link structures were also used in Minier et al. (2007) and Ruiz-casado et al. (2005).

We considered several tasks: document-document retrieval described in Sect. 4.1, query-document retrieval described in Sect. 4.2, and cross-language document-document retrieval described in Sect. 4.3. In Sect. 4.4 we also give results on an Internet advertising task using proprietary data from an online advertising company.

In these experiments we compare our approach, Supervised Semantic Indexing (SSI), to the following methods: tf-idf with cosine similarity (TFIDF), Query Expansion (QE), LSI (footnote 10) and α LSI + (1 − α) TFIDF. Moreover, SSI with an “unconstrained W” is just a margin ranking perceptron with a particular choice of feature map, and SSI using hash kernels is the approach of Shi et al. (2009) employing a ranking loss. For LSI we report the best value of α and embedding dimension (50, 100, 200, 500, 750 or 1000), optimized on the training set ranking loss. We then report the low rank version of SSI using the same choice of dimension. Query Expansion involves applying TFIDF, adding to the query the mean vector of the top \({\mathcal E}\) retrieved documents multiplied by a weighting β, i.e. \(\beta \sum_{i=1}^{\mathcal E} d_{r_i}\), and then applying TFIDF again. We report the error rate where β and \({\mathcal E}\) are optimized using the training set ranking loss.
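The Query Expansion baseline can be sketched as follows. This is only an illustrative reading of the description above, not the code used in the experiments: sparse vectors are plain dictionaries, the helper names `cosine`, `rank` and `expand_query` are hypothetical, and the sketch takes “mean vector” literally by dividing the sum by the number of retrieved documents, a normalization that the inline formula does not show.

```python
import math

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def rank(query, docs):
    """Return document ids sorted by decreasing cosine similarity."""
    return sorted(docs, key=lambda i: cosine(query, docs[i]), reverse=True)

def expand_query(query, docs, E, beta):
    """Add beta times the mean of the top-E retrieved documents to the query."""
    top = rank(query, docs)[:E]
    expanded = dict(query)
    for i in top:
        for t, w in docs[i].items():
            # Assumption: divide by the number of retrieved documents (mean).
            expanded[t] = expanded.get(t, 0.0) + beta * w / len(top)
    return expanded

docs = {
    "a": {"cat": 1.0, "pet": 1.0},
    "b": {"dog": 1.0, "pet": 1.0},
    "c": {"car": 1.0},
}
query = {"cat": 1.0}
expanded = expand_query(query, docs, E=1, beta=0.5)
reranked = rank(expanded, docs)  # second TFIDF pass with the expanded query
```

In this toy example the original query shares no word with document "b", whereas the expanded query picks up "pet" from the top retrieved document and therefore gives "b" a non-zero score.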

For each method, we measure the ranking loss (the percentage of tuples in \({\mathcal R}\) that are incorrectly ordered), precision P(n) at position n = 10 (P@10) and the mean average precision (MAP), as well as their standard errors. For computational reasons, MAP and P@10 were measured by averaging over a fixed set of 1000 test queries, where for each query the linked test set documents plus random subsets of 10,000 documents were used as the database, rather than the whole testing set. The ranking loss is measured using 100,000 testing tuples (i.e. 100,000 queries, and for each query one random positive and one random negative target document were selected).
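The three measures can be written down compactly. The following is a minimal sketch using the standard definitions, with hypothetical function names; counting ties between the positive and the negative document as errors is an assumption of the sketch, since the text does not say how ties are handled.

```python
def ranking_loss(tuples, score):
    """Fraction of (q, d_pos, d_neg) tuples in which d_pos is not scored above d_neg."""
    # Assumption: a tie counts as an incorrectly ordered tuple.
    wrong = sum(1 for q, dp, dn in tuples if score(q, dp) <= score(q, dn))
    return wrong / len(tuples)

def precision_at(ranked, relevant, n=10):
    """P@n: share of the top n ranked documents that are relevant."""
    return sum(1 for d in ranked[:n] if d in relevant) / n

def average_precision(ranked, relevant):
    """Mean of P@k over the ranks k at which a relevant document appears."""
    hits, total = 0, 0.0
    for k, d in enumerate(ranked, start=1):
        if d in relevant:
            hits += 1
            total += hits / k
    return total / len(relevant) if relevant else 0.0

ranked = ["d1", "d2", "d3", "d4"]
relevant = {"d1", "d3"}
p_at_2 = precision_at(ranked, relevant, n=2)
ap = average_precision(ranked, relevant)
```

MAP is then the mean of `average_precision` over the test queries. Under this definition a query with only three relevant documents cannot exceed P@10 = 0.3, however good the ranking is.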

4.1 Document-document retrieval

We considered a set of 1,828,645 English Wikipedia documents as a database, and split the 24,667,286 links (footnote 11) randomly into two portions, 70% for training and 30% for testing. We then considered the following task: given a query document q, rank the other documents such that if q links to d then d should be highly ranked.

Limited Dictionary Size In our first experiments, we used only the top 30,000 most frequent words. This allowed us to compare all methods with the proposed approach, Supervised Semantic Indexing (SSI), using a completely unconstrained W matrix as in Eq. 1. LSI is also feasible to compute in this setting. We compare several variants of our approach, as detailed in Sect. 2.2.

Results on the test set are given in Table 3. All the variants of our method SSI strongly outperform the existing techniques TFIDF, LSI and QE. SSI with unconstrained W performs worse than the low rank counterparts, probably because it has too much capacity given the training set size. Non-symmetric low-rank SSI \(W = U^\top V + I\) slightly outperforms its symmetric counterpart \(W = U^\top U + I\) (see also Table 6 for other choices of N). SSI with sparse W degrades in performance with increased sparsity (from 1000 non-zero elements per column to 10 non-zero elements) but still outperforms our baselines. Diagonal SSI W = D is only a learned re-weighting of word weights, but still slightly outperforms TFIDF. In terms of our baselines, LSI is slightly better than TFIDF, but QE in this case does not improve much over TFIDF, perhaps because of the difficulty of this task, i.e. there may too often be many irrelevant documents in the top \({\mathcal E}\) documents initially retrieved for QE to help.

Unlimited Dictionary Size In our second experiment we no longer constrained methods to a fixed dictionary size, so all 2.5 million words are used. Due to being unable to compute LSI for the full dictionary size, we used the LSI computed in the previous experiment on 30,000 words and combined it with TFIDF using the entire dictionary. In this setting we compared our baselines with the low rank SSI method \(W=(U^\top V)_n + I,\) where n means that we constrained the rows of U and V for infrequent words (i.e. all words apart from the most frequent n) to equal zero. The reason for this constraint is that it can stop the method overfitting: if a word is used in one document only then its embedding can take on any value independent of its content. Infrequent words are still used in the diagonal of the matrix (via the + I term). The results, given in Table 4, show that using this constraint outperforms an unconstrained choice of n = 2.5M. Figure 1 shows scatter plots where SSI outperforms the baselines TFIDF and LSI in terms of average precision.

Overall, compared to the baselines the same trends are observed as in the limited dictionary case, indicating that the restriction in the previous experiment did not bias the results in favor of any one algorithm. Note also that as a page has on average just over 3 test set links to other pages, the maximum P@10 one can achieve in this case is 0.31, while our best model reaches 0.263 for this measure.

Hash Kernels and Correlated Feature Hashing On the full dictionary size experiments in Table 4 we also compare Hash Kernels (Shi et al. 2009) with our Correlated Feature Hashing method described in Sect. 2.2.3. For Hash Kernels we tried several sizes of hash table \({\mathcal H}\) (1M, 3M and 6M). We also tried adding a diagonal to the learned matrix, in a similar way to what is done for LSI. We note that if the hash table is big enough this method is equivalent to SSI with an unconstrained W; however, for the hash sizes we tried, Hash Kernels did not perform as well. For correlated feature hashing, we simply used the SSI model \(W=(U^\top V)_{30k} + I\) from the 7th row in the table to model the most frequent 30,000 words and trained a second model using Eq. 6 with k = 5 to model all other words, and combined the two models with a mixing factor (which was also learned). The result “SSI: CFH (1-grams)” is the best performing method we have found. Doing the same trick but with 2-grams instead also improved over Low Rank SSI, but not by as much. Combining both 1-grams and 2-grams, however, did not improve over 1-grams alone.

Training and Testing Splits One might be worried that our experimental setup has split training and testing data only by partitioning the links, but not the documents, so that the performance of our model when new unseen documents are added to the database might be in question. We therefore also tested an experimental setup where the test set of documents is completely separate from the training set of documents, by removing all training set links between training and testing documents. In fact, this does not alter the performance significantly, as shown in Table 5. This suggests that our model can accommodate a growing corpus without frequent re-training.

Importance of the Latent Concept Dimension In the above experiments we simply chose the dimension N of the low rank matrices to be the same as the best latent concept dimension for LSI. However, we also tried some experiments varying N and found that the error rates are fairly invariant to this parameter. For example, using a limited dictionary size of 30,000 words we achieve a ranking loss of 0.39%, 0.30% or 0.31% for N = 100, 200 or 500, respectively, using a \(W=U^\top V + I\) type model. More detailed results are given in Table 6.

Importance of the Identity matrix for Low Rank representations The addition of the identity term in our model \(W=U^\top V + I\) allows this model to automatically learn the tradeoff between using the low dimensional space and a classical vector space model. The diagonal elements count when there are exact matches (co-occurrences) of words between the documents. The off-diagonal part (approximated with a low rank representation) captures topics and synonyms. Using only W = I yields the inferior TFIDF model. Using only \(W=U^\top V\) also does not work as well as \(W=U^\top V + I.\) Indeed, we obtain a mean average precision of 0.42 with the former, and 0.51 with the latter. Similar results can be seen with the error rate of LSI with or without adding the (1 − α)TFIDF term; for LSI, however, this modification seems rather ad hoc, whereas in our method it is a natural constraint on the general form of W.
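To make the role of the identity term concrete, the score under \(W=U^\top V + I\) splits into an embedding-space dot product plus an exact-match term: \(q^\top (U^\top V + I) d = (Uq)\cdot(Vd) + q\cdot d\). The sketch below checks this identity numerically on toy values. It assumes the bilinear form \(f(q,d)=q^\top W d\) for the basic model and stores U and V as N × D lists of rows; all function names are hypothetical.

```python
def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def score_low_rank(U, V, q, d):
    """(Uq).(Vd) + q.d, without ever forming the D x D matrix W."""
    return dot(matvec(U, q), matvec(V, d)) + dot(q, d)

def build_W(U, V):
    """Explicit W = U^T V + I, only feasible for small D."""
    D = len(U[0])
    return [[sum(U[k][i] * V[k][j] for k in range(len(U))) + (1.0 if i == j else 0.0)
             for j in range(D)] for i in range(D)]

def score_full(W, q, d):
    """q^T W d with an explicit matrix."""
    return dot(q, matvec(W, d))

# Toy example with N = 1 latent dimension and D = 3 words.
U = [[1.0, 0.0, 2.0]]
V = [[0.0, 1.0, 1.0]]
q = [1.0, 0.0, 0.0]  # query contains word 0 only
d = [0.0, 1.0, 0.0]  # document contains word 1 only
low_rank = score_low_rank(U, V, q, d)
full = score_full(build_W(U, V), q, d)
exact_match_only = dot(q, d)
```

Here the query and the document share no word, so the exact-match term is zero and the whole score comes from the low rank part, which is the kind of match between different but related words that W = I alone cannot express.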

Ignoring the Diagonal On the other hand, for some tasks it is not possible to use the identity matrix at all, e.g. for cross-language retrieval. Out of curiosity, we thus also tested our method SSI training a dense matrix W where the diagonal is constrained to be zero (footnote 12), so only synonyms can be used. This obtained a test ranking loss of 0.69% (limited dictionary size case), compared to 0.41% with the diagonal, indicating this could be a very good cross-language retrieval model, which we explore further in Sect. 4.3.

4.2 Query-document retrieval

We also tested our approach in a query-document setup. We used the same setup as before but we constructed queries by keeping only k random words from query documents in an attempt to mimic a “keyword search”. First, using the same setup as in the previous section with a limited dictionary size of 30,000 words we present results for keyword queries of length k = 5, 10 and 20 in Table 7. SSI yields similar improvements over the baselines as in the document-document retrieval case. Here, we do not report full results for Query Expansion; however, it did not give large improvements over TFIDF, e.g. for the k = 10 case we obtain 0.084 MAP and 0.0376 P@10 for QE at best. Results for k = 10 using an unconstrained dictionary are given in Table 8. Again, SSI yields similar improvements. Overall, non-symmetric SSI gives a slight but consistent improvement over symmetric SSI. Changing the embedding dimension N (capacity) did not appear to affect this: for example, for k = 10 and N = 100 we obtain 3.11% / 0.215 / 0.097 for Rank Loss / MAP / P@10 using SSI \(W=U^\top U + I\) and 2.93% / 0.235 / 0.102 using SSI \(W=U^\top V + I\) (results in Table 7 are for N = 200). Finally, correlated feature hashing again improved over models without hashing.
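The keyword query construction can be illustrated with a few lines of code. This is a minimal sketch with a hypothetical helper name; sampling without replacement from the distinct words of the document is an assumption, as the text only states that k random words are kept.

```python
import random

def keyword_query(doc_words, k, rng=random):
    """Keep k random distinct words of a document to mimic a keyword search."""
    # Assumption: sample without replacement among distinct words.
    words = sorted(set(doc_words))
    return rng.sample(words, min(k, len(words)))

doc = "the cat sat on the mat near the door".split()
query = keyword_query(doc, 5, random.Random(0))
```

Passing a seeded `random.Random` instance makes the sampled queries reproducible across runs.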

4.3 Cross language document-document retrieval

We considered the same set of 1,828,645 English Wikipedia documents and a set of 846,582 Japanese Wikipedia documents, where 135,737 of the documents are known to be about the same concept as a corresponding English page (this information can be found in the Wiki markup provided in a Wikipedia dump). For example, the page about “Microsoft” can be found in both English and Japanese, and they are cross-referenced. These pairs are referred to as “mates” in the literature, see e.g. (Dumais et al. 1997).

We then consider a cross language retrieval task that is analogous to the task in Sect. 4.1: given a Japanese query document \(q_{Jap}\) with English mate \(q_{Eng}\), rank the English documents so that a document \(d_{Eng}\) linked from \(q_{Eng}\) appears above the unlinked ones. For this task, the document \(q_{Eng}\) is removed and not considered available during training or testing. There are in total 8.1M such \(q_{Jap}\) − \(d_{Eng}\) links. The dataset is split into train and test as before.

The first type of baseline we considered is based on machine translation. We use a machine translation tool on the Japanese query, and then apply TFIDF or LSI. We consider three methods of machine translation: Google’s API (footnote 13) or Fujitsu’s ATLAS (footnote 14) is used to translate each query document, or we translate each word in the Japanese dictionary using ATLAS and then apply this word-based translation to a query. We also compared to CL-LSI (Dumais et al. 1997) trained on all 90,000 Jap-Eng mates from the training set, with a feasible limited vocabulary of 30,000 words in both languages.

For SSI, we consider two cases: (a) apply the ATLAS machine translation tool to a document first, and then use SSI trained on the monolingual task of Sect. 4.1, which we call \(\text{SSI}_{EngEng}\), or (b) train SSI directly with Japanese queries and English target documents using the model (9), which we call \(\text{SSI}_{JapEng}\).

The results are given in Table 9 where the dictionary size was once again limited to 30,000 words in both languages. Results for an unrestricted dictionary size are given in Table 10.

TFIDF using the three translation methods gives relatively similar results. Using LSI or CL-LSI slightly improves these results, depending on the metric. Machine translation followed by \(\text{SSI}_{EngEng}\) outperforms all these methods; however, the direct \(\text{SSI}_{JapEng}\), which requires no machine translation tool at all, improves results even further. We conjecture this is because translation mistakes generate noisy features, which \(\text{SSI}_{JapEng}\) circumvents.

We also considered combining \(\text{SSI}_{JapEng}\) with TFIDF or \(\text{SSI}_{EngEng}\) using a mixing parameter α, and this provided further gains, at the expense of requiring a machine translation tool once more.

Regularizing using Monolingual Retrieval The reported experiments do not include the regularization approach based on multi-tasking introduced in Sect. 2.4.2, Eq. 10. In fact, we did not find that this strategy helped much unless we reduced the amount of Japanese-English training data, while using all the English-English and Japanese-Japanese data. For example taking only 1% (81,000 pairs) of the Japanese-English data gives a ranking loss of 2.43% with regularization, compared to 3.02% without.

Mate Finding Note that many cross-lingual experiments, e.g. (Dumais et al. 1997), typically measure the performance of finding a “mate”, the same document in another language, whereas our experiment tries to model a query-based retrieval task. However, we also performed an experiment in the mate-finding setting. In this case, SSI achieves a ranking test error of 0.53%, and CL-LSI achieves 0.81%.

4.4 Content matching

We present results on matching adverts to web pages, a problem closely related to document retrieval. We obtained proprietary data from an online advertising company in the form of pairs of web pages and adverts that were clicked while displayed on that page. We only considered clicks in position 1 and discarded the sessions in which none of the ads was clicked. This is a way to circumvent the well known position bias problem, i.e. the fact that links appearing in lower positions are less likely to be clicked even if they are relevant. Indeed, by construction, every negative example comes from a slate of adverts in which there was a click in a lower position; it is thus likely that the user examined that negative example but deemed it irrelevant (as opposed to the user not clicking because they did not even look at the advert).

We consider these (webpage, clicked-on-ad) pairs as positive examples (q, d +), and any other randomly chosen ad is considered as a negative example d − for that query page. 1.9M pairs were used for training and 100,000 pairs for testing. The web pages contained 87 features (words) on average, while the ads contained 19 features on average. The two classes (clicks and no-clicks) are roughly balanced. From the way we construct the dataset, this means that when a user clicks on an advert, he/she clicks about half of the time on the one in the first position.

We compared TFIDF, Hash Kernels and Low Rank SSI on this task. The results are given in Table 11. In this case TFIDF performs very poorly: often the positive (page, ad) pairs share very few, if any, features, and even if they do this does not appear to be very discriminative. Hash Kernels and Low Rank SSI appear to perform rather similarly, both strongly outperforming TFIDF. The rank loss on this dataset is two orders of magnitude higher than on the Wikipedia experiments described in the previous sections. This is probably due to a combination of two factors: first, the positive and negative classes are balanced, whereas there were only a few positive documents in the Wikipedia experiments; and second, click data are much noisier.

We might add, however, that at test time, Low Rank SSI has a considerable advantage over Hash Kernels in terms of speed. As the vectors Uq and Vd can be cached for each page and ad, a matching operation only requires N multiplications (a dot product in the “embedding” space). However, for hash kernels |q||d| hashing operations and multiplications have to be performed, where |·| means the number of non-zero elements. For values such as |q| = 100, |d| = 100 and N = 100 that would mean Hash Kernels would be around 100 times slower than Low Rank SSI at test time, and this difference gets larger if more features are used.
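The operation counts behind this comparison can be reproduced with a small sketch. It is illustrative only: the pair-hashing function is a hypothetical stand-in rather than the hash used by Hash Kernels, the identity term of the low rank model is left out (as in the count given in the text), and the function names are made up for the example.

```python
def hash_kernel_score(w, q, d):
    """Score with one hashed weight per (query word, document word) pair.

    q and d are sparse dicts {word_id: weight}; w is the hash table.
    Returns the score and the number of hash-and-multiply operations.
    """
    H = len(w)
    total, ops = 0.0, 0
    for i, qi in q.items():
        for j, dj in d.items():
            # Hypothetical hash of the word pair (i, j) into the table.
            total += w[(i * 1000003 + j) % H] * qi * dj
            ops += 1
    return total, ops

def embedded_score(uq, vd):
    """Dot product of cached embeddings uq = Uq and vd = Vd (N multiplications)."""
    return sum(a * b for a, b in zip(uq, vd)), len(uq)

q = {i: 1.0 for i in range(100)}  # |q| = 100 non-zero features
d = {j: 1.0 for j in range(100)}  # |d| = 100 non-zero features
_, hash_ops = hash_kernel_score([0.0] * 1000, q, d)
score, embed_ops = embedded_score([0.5] * 100, [2.0] * 100)  # N = 100
ratio = hash_ops // embed_ops
```

With these values the hashed model performs 10,000 operations per match against 100 for the cached embeddings, which is the factor of about 100 quoted above.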

4.5 Notes on overfitting and regularization

As stated earlier, our low rank model provides two mechanisms for capacity control: the selection of the latent dimension N and early stopping. Reducing the latent dimension N limits the rank of the matrix W and hence reduces the capacity of the model. Early stopping stops training when the performance over a validation set stops increasing. It hence stops learning before the training loss is minimized, expressing preference for weights close to the random initialization (Caruana et al. 2000).
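Early stopping as described here can be sketched as a generic training loop. This is a minimal illustration rather than the procedure used in the experiments: the `patience` parameter (how many iterations without improvement are tolerated) is an assumption, and `step` and `validate` are hypothetical callbacks standing for one pass of online learning and one evaluation on the validation set.

```python
def train_with_early_stopping(step, validate, max_iters, patience):
    """Run training steps until the validation loss stops improving.

    Returns the iteration with the best validation loss and that loss.
    """
    best_loss, best_iter = float("inf"), 0
    for it in range(1, max_iters + 1):
        step()
        loss = validate()
        if loss < best_loss:
            best_loss, best_iter = loss, it
        elif it - best_iter >= patience:
            # Assumption: stop after `patience` iterations without improvement.
            break
    return best_iter, best_loss

# Toy validation curve that improves, then degrades (overfitting).
curve = iter([5.0, 4.0, 3.0, 3.5, 3.6, 3.7, 2.0])
steps_taken = []
best_iter, best_loss = train_with_early_stopping(
    step=lambda: steps_taken.append(1),
    validate=lambda: next(curve),
    max_iters=7,
    patience=2,
)
```

In practice one would also keep a copy of the model parameters at the best iteration, which this sketch omits for brevity.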

Figure 2 illustrates the effect of these two regularization strategies over cross-language experiments with different training set sizes. It appears that overfitting occurs when training data size is very small (1 and 4%). The gap between training and testing error reduces with increased training data size, as expected. However, especially for larger training set sizes, one can see that we did not experience a divergence between train and test error as the number of iterations of online learning increased.

In Fig. 2a, b, we also display the training curve for models with different capacity (N = 50 and N = 100). One can see that in both cases, the model with N = 100 yields the best performance. One can further note the advantage of early stopping: e.g. in the first figure, it takes longer for N = 50 than for N = 100 before the test error starts increasing. Early stopping can stop training in such cases and hence yield better generalization performance. For larger training sets, one can notice that early stopping is less important as the generalization error does not increase. However, it is still useful to stop training when the generalization performance stalls, as this reduces the computational cost of training.

5 Conclusion

We have described a versatile, powerful set of discriminatively trained models for document ranking. Many “learning to rank” papers have focused on the problem of selecting the objective to optimize (given a fixed class of functions) and typically use a relatively small number of hand-engineered features as input. This work is orthogonal to those works as it studies models with large feature sets generated by all pairs of words between the query and target texts. The challenge here is that such feature spaces are very large, and we thus presented several models that deal with the memory, speed and capacity control issues. In particular we proposed low rank and sparse learning and a technique of hashing correlated features. In fact, all of our proposed methods can be used in conjunction with other features and objective functions explored in previous work for further gains.

Our empirical study covered query and document retrieval, cross-language retrieval and ad-placement. Our main conclusions were: (a) we found that the low rank model outperforms the full rank margin ranking perceptron with the same features as well as its sparsified version. We also outperform classical methods such as TFIDF, LSI or query expansion. Finally, it is also better than or comparable to “Hash Kernel”, another new supervised technique, in terms of accuracy, while having advantages in terms of efficiency; and (b) using correlated feature hashing improves results even further. Both the low rank idea from (a) and correlated feature hashing from (b) prove to be effective ways to reduce the feature space size.

Many generalizations of our work are possible: adding more features into our models as we just mentioned, generalizing to other kinds of nonlinear models, and exploring the use of the same models for other tasks such as question answering. In general, web search and other standard retrieval tasks currently often depend on entering query keywords which are likely to be contained in the target document, rather than the user directly describing what they want to find. Our models are capable of learning to rank using either the former or the latter.

Notes

This work is an expanded version of a poster paper (Bai et al. 2009) with further algorithmic proposals, applications and experiments.

In fact in our resulting methods there is no need to restrict that both q and d have the same dimensionality \(\mathcal D\) but we will make this assumption for simplicity of exposition.

Of course, any method can be sped up by applying it to only a subset of pre-filtered documents, filtering using some faster method.

For example, Google provides such a service at http://translate.google.com/translate_s.

Oral presentation at the (Snowbird) Machine Learning Workshop, see http://snowbird.djvuzone.org/abstracts/119.pdf.

We use the SVDLIBC software http://tedlab.mit.edu/~dr/svdlibc/ and the cosine distance in the latent concept space.

We removed links to calendar years as they provide little information while being very frequent.

Note that the model \(W=U^\top V\) without the identity achieved a ranking loss of 0.56%; however, this model can represent at least some of the diagonal.

References

Baeza-Yates, R., & Ribeiro-Neto, B. (1999). Modern information retrieval. Harlow, England: Addison-Wesley.

Bai, B., Weston, J., Collobert, R., & Grangier, D. (2009). Supervised semantic indexing. In European conference on information retrieval.

Berger, A., & Lafferty, J. (1999). Information retrieval as statistical translation. In ACM SIGIR ’99 (pp. 222–229).

Blei, D. M., & McAuliffe, J. D. (2007). Supervised topic models. In Advances in neural information processing systems (NIPS).

Blei, D. M., Ng, A., & Jordan, M. I. (2003). Latent dirichlet allocation. The Journal of Machine Learning Research, 3, 993–1022.

Bunescu, R., & Pasca, M. (2006). Using encyclopedic knowledge for named entity disambiguation. In EACL (pp. 9–16).

Burges, C., Ragno, R., & Le, Q. V. (2006). Learning to rank with nonsmooth cost functions. In Advances in neural information processing systems: Proceedings of the 2006 conference. Cambridge, MA: MIT Press.

Burges, C., Shaked, T., Renshaw, E., Lazier, A., Deeds, M., Hamilton, N., et al. (2005). Learning to rank using gradient descent. In ICML 2005 (pp. 89–96). New York: ACM Press.

Cao, Z., Qin, T., Liu, T. Y., Tsai, M. F., & Li, H. (2007). Learning to rank: From pairwise approach to listwise approach. In Proceedings of the 24th international conference on Machine learning (pp. 129–136). New York: ACM Press.

Caruana, R., Lawrence, S., & Giles, L. (2000). Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. In Advances in neural information processing systems, NIPS 13 (pp. 402–408).

Chernov, S., Iofciu, T., Nejdl, W., & Zhou, X. (2006). Extracting semantic relationships between wikipedia categories. In 1st international workshop: SemWiki2006 - From Wiki to semantics (SemWiki 2006), co-located with the ESWC2006 in Budva.

Collins, M., & Duffy, N. (2001). New ranking algorithms for parsing and tagging: Kernels over discrete structures, and the voted perceptron. In Proceedings of the 40th annual meeting on association for computational linguistics (pp. 263–270). Morristown, NJ: Association for Computational Linguistics.

Collobert, R., & Bengio, S. (2004). Links between perceptrons, mlps and svms. In ICML 2004.

Cucerzan, S. (2007). Large-scale named entity disambiguation based on wikipedia data. In Proceedings of the 2007 joint conference on empirical methods in natural language processing and computational natural language learning (pp. 708–716). Prague: Association for Computational Linguistics.

Deerwester, S., Dumais, S. T., Furnas, G. W., Landauer, T. K., & Harshman, R. (1990). Indexing by latent semantic analysis. JASIS, 41(6), 391–407.

Dumais, S. T., Letsche, T. A., Littman, M. L., & Landauer, T. K. (1997). Automatic cross-language retrieval using latent semantic indexing. In AAAI spring symposium on cross-language text and speech retrieval.

Gabrilovich, E. & Markovitch, S. (2007). Computing semantic relatedness using wikipedia-based explicit semantic analysis. In International joint conference on artificial intelligence.

Gehler, P., Holub, A., & Welling, M. (2006). The rate adapting poisson (rap) model for information retrieval and object recognition. In Proceedings of the 23rd international conference on machine learning.

Globerson, A., & Roweis, S. (2007). Visualizing pairwise similarity via semidefinite programming. In AISTATS.

Goel, S., Langford, J., & Strehl, A. (2009). Predictive indexing for fast search. In Advances in neural information processing systems 21.

Grangier, D., & Bengio, S. (2005). Inferring document similarity from hyperlinks. In CIKM ’05 (pp. 359–360). New York: ACM.

Grangier, D., & Bengio, S. (2008). A discriminative kernel-based approach to rank images from text queries. IEEE Transactions on PAMI, 30(8), 1371–1384.

Grefenstette, G. (1998). Cross-language information retrieval. Norwell, MA: Kluwer Academic Publishers.

Guyon, I. M., Gunn, S. R., Nikravesh, M., & Zadeh, L. (Eds). (2006). Feature extraction: Foundations and applications. Berlin: Springer.

Herbrich, R., Graepel, T., & Obermayer, K. (2000). Large margin rank boundaries for ordinal regression. Cambridge, MA: MIT Press.

Hofmann, T. (1999). Probabilistic latent semantic indexing. In SIGIR 1999 (pp. 50–57). New York: ACM Press.

Hu, J., Fang, L., Cao, Y., Zeng, H., Li, H., Yang, Q., et al. (2008). Enhancing text clustering by leveraging wikipedia semantics. In SIGIR ’08: Proceedings of the 31st annual international ACM SIGIR conference on research and development in information retrieval (pp. 179–186). New York: ACM.

Jain, P., Kulis, B., Dhillon, I. S., & Grauman, K. (2008). Online metric learning and fast similarity search. In Advances in neural information processing systems (NIPS).

Joachims, T. (2002). Optimizing search engines using clickthrough data. In ACM SIGKDD (pp. 133–142).

Keller, M., & Bengio, S. (2005). A neural network for text representation. In International conference on artificial neural networks, ICANN, IDIAP-RR 05-12.

Langford, J., Li, L., & Zhang, T. (2009). Sparse online learning via truncated gradient. In Advances in neural information processing systems 21.

Liu, T. Y., Xu, J., Qin, T., Xiong, W., & Li, H. (2007). Letor: Benchmark dataset for research on learning to rank for information retrieval. In Proceedings of SIGIR 2007 workshop on learning to rank for information retrieval.

Milne, D. N., Witten, I. H., & Nichols, D. M. (2007). A knowledge-based search engine powered by wikipedia. In CIKM ’07: Proceedings of the sixteenth ACM conference on information and knowledge management (pp. 445–454). New York: ACM.

Minier, Z., Bodo, Z., & Csato, L. (2007). Wikipedia-based kernels for text categorization. In 9th international symposium on symbolic and numeric algorithms for scientific computing (pp. 157–164).

Ruiz-casado, M., Alfonseca, E., & Castells, P. (2005). Automatic extraction of semantic relationships for wordnet by means of pattern learning from wikipedia. In NLDB (pp. 67–79). Berlin: Springer.

Salakhutdinov, R., & Hinton, G. (2007). Semantic hashing. Proceedings of the SIGIR workshop on information retrieval and applications of graphical models, Amsterdam.

Shi, Q., Petterson, J., Dror, G., Langford, J., Smola, A., Strehl, A., & Vishwanathan, V. (2009). Hash kernels. In Twelfth international conference on artificial intelligence and statistics.

Smadja, F., McKeown, K. R., & Hatzivassiloglou, V. (1996). Translating collocations for bilingual lexicons: A statistical approach. Computational Linguistics, 22(1), 1–38.

Sun, J., Chen, Z., Zeng, H., Lu, Y., Shi, C., & Ma, W. (2004). Supervised latent semantic indexing for document categorization. In ICDM 2004 (pp. 535–538). Washington, DC: IEEE Computer Society.

Vinokourov, A., Shawe-Taylor, J., & Cristianini, N. (2003). Inferring a semantic representation of text via cross-language correlation analysis. NIPS (pp. 1497–1504).

Voorhees, E. M., & Dang, H. T. (2005). Overview of the trec 2005 question answering track. In TREC 2005.

Wang, X., Sun, J., Chen, Z., & Zhai, C. (2006). Latent semantic analysis for multiple-type interrelated data objects. In SIGIR’06.

Weinberger, K., & Saul, L. (2008). Fast solvers and efficient implementations for distance metric learning. In International conference on machine learning.

Yue, Y., Finley, T., Radlinski, F., & Joachims, T. (2007). A support vector method for optimizing average precision. In SIGIR (pp. 271–278).

Zighelnic, L., & Kurland, O. (2008). Query-drift prevention for robust query expansion. In SIGIR 2008 (pp. 825–826). New York: ACM.

Cite this article

Bai, B., Weston, J., Grangier, D. et al. Learning to rank with (a lot of) word features. Inf Retrieval 13, 291–314 (2010). https://doi.org/10.1007/s10791-009-9117-9

DOI: https://doi.org/10.1007/s10791-009-9117-9