Abstract

In this study, we show that individual users’ preferences for the level of diversity, popularity, and serendipity in recommendation lists cannot be inferred from their ratings alone. We demonstrate that we can extract strong signals about individual preferences for recommendation diversity, popularity and serendipity by measuring their personality traits. We conducted an online experiment with over 1,800 users for six months on a live recommendation system. In this experiment, we asked users to evaluate a list of movie recommendations with different levels of diversity, popularity, and serendipity. Then, we assessed users’ personality traits using the Ten-item Personality Inventory (TIPI). We found that ratings-based recommender systems may often fail to deliver preferred levels of diversity, popularity, and serendipity for their users (e.g. users with high-serendipity preferences). We also found that users with different personalities have different preferences for these three recommendation properties. Our work suggests that we can improve user satisfaction when we integrate users’ personality traits into the process of generating recommendations.

Notes

Using the polr function (ordered logistic regression, from the MASS package) in R.

Although our user experiment has three categorical levels for diversity, popularity, and serendipity, we decided to analyze the data using continuous variables representing the diversity, popularity, and serendipity of recommendation lists. This is because the distributions of the three levels are either skewed or heavily overlapping, as shown in Appendix A. Using continuous variables therefore makes our analyses independent of the level distributions and easy to reproduce.

The statistical significance test reported is of the interaction effect only, i.e., of the difference between the odds ratios of high- and low-introversion users.

The model is reported in Appendix B, Table 6.

This and the subsequent visualizations are generated as follows. First, we bucket the corresponding continuous score (in this case, the diversity score). Then, for each bucket, we compute the percentage of users who answered "Just Right" to the corresponding question (in this case, the question assessing user preference for diversity). Each dot therefore represents, for one personality-trait group and one bucket, the percentage of users who answered "Just Right". The line, however, is fitted on the continuous score, not on the bucketed percentages.
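The bucketing procedure described in this note can be sketched as follows. This is a minimal illustration, not the authors' plotting code: the function names, the use of equal-width buckets, and the default of five buckets are all assumptions. The note also does not say how the line is fitted, so a least-squares straight line on the raw (unbucketed) data is used here purely as a stand-in.

```python
import numpy as np

def bucket_percentages(scores, just_right, n_buckets=5):
    """Percentage of "Just Right" answers per equal-width score bucket.

    Equal-width buckets and n_buckets=5 are assumptions for illustration.
    """
    scores = np.asarray(scores, dtype=float)
    just_right = np.asarray(just_right, dtype=bool)
    edges = np.linspace(scores.min(), scores.max(), n_buckets + 1)
    # Use interior edges only, so bucket indices run from 0 to n_buckets - 1
    # and the maximum score falls into the last bucket.
    idx = np.digitize(scores, edges[1:-1])
    centers, pct = [], []
    for b in range(n_buckets):
        mask = idx == b
        if mask.any():  # skip empty buckets
            centers.append((edges[b] + edges[b + 1]) / 2)
            pct.append(100.0 * just_right[mask].mean())
    return np.array(centers), np.array(pct)

def fit_line(scores, just_right):
    """Least-squares line fitted on the continuous scores, not the buckets.

    A stand-in for whatever model the original figures used.
    """
    x = np.asarray(scores, dtype=float)
    y = 100.0 * np.asarray(just_right, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    return slope, intercept

# Toy data for one hypothetical personality-trait group.
centers, pct = bucket_percentages([0.0, 0.1, 0.9, 1.0], [1, 0, 1, 1], n_buckets=2)
slope, intercept = fit_line([0.0, 0.1, 0.9, 1.0], [1, 0, 1, 1])
```

In a plot, `centers` and `pct` would give the dots for one trait group, while `slope` and `intercept` describe a line fitted independently of the bucket boundaries.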

The model is reported in Appendix B, Table 7.

The model is reported in Appendix B, Table 11.

The model is reported in Appendix B, Table 7.

The model is reported in Appendix B, Table 8.

Appendices

Appendix A Reason to exclude some users from the Analyses

Fig. 7 Distribution of popularity scores. The score ranges from 0 to 1. Red indicates the high level, blue the medium level, and green the low level. The three curves show the peaks of the high, medium, and low levels of popularity.

Before explaining why we exclude some users from our main analyses, we discuss the results of our manipulation-check analyses, which reveal reasons for the exclusion.

Our manipulation-check analyses show that:

- For the diversity metric, users perceived the differences between recommendations at the high and low levels (p-value < 0.001) and at the medium and low levels (p-value < 0.001). Users did not perceive the differences between recommendations at the medium and high levels (p-value = 0.255).

- For the popularity metric, users perceived the differences between recommendations at the high and low levels (p-value < 0.001) and at the medium and high levels (p-value < 0.001). Users also perceived the differences between recommendations at the medium and low levels, although only marginally (p-value = 0.057).

- For the serendipity metric, users perceived the differences between recommendations at the high and low levels (p-value = 0.035) and at the medium and low levels (p-value = 0.021). Users did not perceive the differences between recommendations at the medium and high levels (p-value = 0.956).

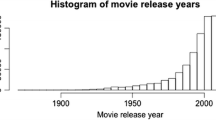

We plotted the distributions of diversity, popularity, and serendipity to investigate why users did not perceive the differences in some level comparisons (e.g., high vs. medium diversity). Figures 7 and 8 show these distributions. In each distribution, the high, medium, and low levels overlap. These overlaps explain why users did not perceive differences between some recommendations assigned to different levels.

Fig. 8 Distributions of diversity scores (left) and serendipity scores (right). Both scores range from 0 to 1. Red indicates high levels, blue medium levels, and green low levels. In each figure, the three curves show the peaks of the high, medium, and low levels of diversity (left) or of serendipity (right).

In this study, we want to examine the recommendation experience of users who perceived the differences in diversity, popularity, or serendipity between levels. Thus, we remove from our analyses the users whose recommendations did not deliver the quantity of diversity, popularity, or serendipity intended for their assigned level. Our process for removing users from the analyses is as follows.

Diversity

From Fig. 8 (left), there are clear cut-off points between the low, medium, and high levels of diversity at 0.24 and 0.41. Thus, we analyze 150 of the 169 users assigned to the low level (diversity scores less than 0.24), 147 of the 176 users assigned to the medium level (scores from 0.24 to 0.41), and 171 of the 176 users assigned to the high level (scores greater than 0.41).

Popularity

From Fig. 7, there are clear cut-off points between the low, medium, and high levels of popularity at 0.6% and 5.0%. Thus, we analyze 151 of the 205 users assigned to the low level (popularity scores from 0% to less than 0.6%), 135 of the 195 users assigned to the medium level (scores from 0.6% to 5.0%), and 158 of the 182 users assigned to the high level (scores greater than 5.0%).

Serendipity

From Fig. 8 (right), there are clear cut-off points between the low, medium, and high levels of serendipity at 0.46 and 0.80. Thus, we analyze 114 of the 162 users assigned to the low level (serendipity scores less than 0.46), 102 of the 171 users assigned to the medium level (scores from 0.46 to 0.80), and 92 of the 199 users assigned to the high level (scores greater than 0.80).
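The exclusion rule for the three metrics can be summarized in a short sketch. The cut-offs and user counts below are taken from the text above; the function names, the dictionary layout, and the choice to treat both medium-level boundaries as inclusive are assumptions made for illustration. Popularity cut-offs are kept in percent, as stated in the text.

```python
# Cut-off points between low/medium and medium/high levels (from the text).
CUTOFFS = {
    "diversity": (0.24, 0.41),
    "popularity": (0.6, 5.0),    # in percent, as reported in the text
    "serendipity": (0.46, 0.80),
}

# (users analyzed, users assigned) for the low, medium, and high levels.
COUNTS = {
    "diversity": [(150, 169), (147, 176), (171, 176)],
    "popularity": [(151, 205), (135, 195), (158, 182)],
    "serendipity": [(114, 162), (102, 171), (92, 199)],
}

def keep_user(metric, level, score):
    """Return True if a user's list score matches the assigned level.

    Treating the medium range as inclusive at both ends is an assumption.
    """
    lo, hi = CUTOFFS[metric]
    if level == "low":
        return score < lo
    if level == "medium":
        return lo <= score <= hi
    if level == "high":
        return score > hi
    raise ValueError(f"unknown level: {level}")

def retention(metric):
    """Total users analyzed and total users assigned for one metric."""
    kept = sum(k for k, _ in COUNTS[metric])
    total = sum(t for _, t in COUNTS[metric])
    return kept, total

for metric in CUTOFFS:
    kept, total = retention(metric)
    print(f"{metric}: {kept}/{total} users analyzed ({100 * kept / total:.1f}%)")
```

Summing the reported counts gives 468 of 521 users for diversity, 444 of 582 for popularity, and 308 of 532 for serendipity, which shows that the serendipity condition loses the largest share of users to this filter.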

Appendix B Tables

Cite this article

Nguyen, T.T., Maxwell Harper, F., Terveen, L. et al. User Personality and User Satisfaction with Recommender Systems. Inf Syst Front 20, 1173–1189 (2018). https://doi.org/10.1007/s10796-017-9782-y

DOI: https://doi.org/10.1007/s10796-017-9782-y