Abstract

The influence of unreliable synapses on the dynamic properties of a neural network is investigated for a homogeneous integrate-and-fire network with delayed inhibitory synapses. Numerical and analytical calculations show that the network relaxes to a state with dynamic clusters of identical size which permanently exchange neurons. We present analytical results for the number of clusters and their distribution of firing times, both of which are determined by the synaptic properties. The number of possible configurations increases exponentially with network size. In addition to states with a maximal number of clusters, metastable states with a smaller number of clusters survive on an exponentially large time scale. An externally excited cluster also survives for some time; thus clusters may encode information.

Acknowledgements

It is a pleasure for us to acknowledge stimulating and fruitful discussions with Ido Kanter and Moshe Abeles.

Additional information

Action Editor: Nicolas Brunel

Appendices

Appendix A: Distribution of firing times

Although the inputs triggered by the spike volley of all neurons do not arrive at exactly the same time, the approximation that they do is a good one, leaving us with

where K is the number of afferent synapses of each neuron. The random variable J is defined as the normalized sum of the inputs, \(J = \sum_{j=1}^K h_j \frac{g}{K}\), and its values are denoted by \(\tilde{J}\). J is binomially distributed and, for large K, can be approximated by a Gaussian distributed total pulse strength with mean and variance
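A quick numerical check of the binomial-to-Gaussian step. This sketch assumes that each \(h_j\) is a Bernoulli variable that equals 1 with the transmission probability p (so the mean is gp, matching the constant c used below), and it takes g < 0 for inhibition; all parameter values are hypothetical. The exact moments of J are \(gp\) and \(g^2p(1-p)/K\), and the sketch draws the number of transmitting synapses from a binomial distribution.

```python
import numpy as np


def pulse_strength_moments(g, p, K):
    """Exact mean and variance of J = (g/K) * sum_j h_j with h_j ~ Bernoulli(p)."""
    return g * p, g**2 * p * (1 - p) / K


def sample_pulse_strengths(g, p, K, n_samples, rng):
    """Sample J: the number of transmitting synapses is Binomial(K, p)."""
    return g * rng.binomial(K, p, size=n_samples) / K


# Hypothetical parameters; g < 0 makes the pulses inhibitory
g, p, K = -0.5, 0.5, 100
mean_J, var_J = pulse_strength_moments(g, p, K)
J = sample_pulse_strengths(g, p, K, 200_000, np.random.default_rng(0))
print(f"exact: mean {mean_J:.4f}, var {var_J:.2e}; "
      f"sampled: mean {J.mean():.4f}, var {J.var():.2e}")
```

For large K the histogram of J approaches a Gaussian with these two moments; the relative width \(\sigma_J/|\mu_J| = \sqrt{(1-p)/(pK)}\) shrinks as \(1/\sqrt{K}\).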

The pulse has the shape of a δ-function that is fixed in time, but its amplitude varies. It can be shown that the error due to this approximation is much smaller than this variation, which justifies it.

Now we consider how the potential of a neuron with \(V(0^-)=V_0\), which receives a pulse of strength \(\tilde{J}\) at time t = 0, evolves over the period T.

According to the last line, V(T) is linear in \(\tilde{J}\). Since \(\tilde{J}\) is a value of the Gaussian distributed random variable J, V(T) is also Gaussian distributed for a given \(V_0\). Thus the transition probability density for the potential to be V(T) at time T, given that it was \(V_0\) at time t = 0, is

Integrating over the initial distribution of \(V_0\), denoted as \(\mathcal{P}_V(V_0)\), yields the distribution of potentials at time T, which must be identical to the initial one since T is the period. This leads to the following linear homogeneous Fredholm integral equation of the second kind, where m and s are as defined above in Eqs. (20) and (21).
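For concreteness, the steps above can be written out as follows. This is a sketch under our own assumptions, chosen to agree with \(\mu = 1-e^{-\tau}\), with \(t_\theta = \ln\frac{1-V}{\vartheta}\) and with the constant c defined below: between pulses the potential obeys \(\dot V = 1 - V\), the neuron fires once per period and is reset to 0 at the threshold \(\theta = 1-\vartheta\), and a pulse shifts the potential by \(+\tilde J\) (so g < 0 for inhibition). Here m and s play the role of the quantities in Eqs. (20) and (21), and \(\sigma_J^2 = g^2p(1-p)/K\) is the variance of J for Bernoulli(p) transmission.

```latex
\begin{aligned}
V(0^+) &= V_0 + \tilde{J}, \qquad
t_\theta = \ln\frac{1 - V_0 - \tilde{J}}{\vartheta}, \\
V(T) &= 1 - e^{-(T - t_\theta)} = 1 - \frac{1 - V_0 - \tilde{J}}{a}, \qquad a := \vartheta e^{T}, \\
\mathcal{P}(V \mid V_0) &= \frac{1}{\sqrt{2\pi s^2}}
  \exp\!\left[-\frac{(V - m)^2}{2 s^2}\right], \qquad
  m = c + \frac{V_0}{a}, \quad s = \frac{\sigma_J}{a}, \\
\mathcal{P}_V(V) &= \int \mathcal{P}(V \mid V_0)\,\mathcal{P}_V(V_0)\,dV_0 .
\end{aligned}
```

Averaging V(T) over \(\tilde J\) gives \(m = 1 - (1 - V_0 - gp)/a = c + V_0/a\), which is how the constant \(c = 1 - e^{-T}\frac{1-gp}{\vartheta}\) enters.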

The Fourier-transformed equation reads

with \(a := \vartheta e^{T}\) and \(c := 1-e^{-T}\frac{1-g p}{\vartheta}\). It can be solved using the ansatz \(\tilde{\mathcal{P}}(k) = \exp\left(\sum_i \alpha_i k^i\right)\) and a comparison of coefficients. Up to a free complex constant, the result is
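The coefficient comparison can be sketched as follows, assuming the convention \(\tilde{\mathcal{P}}(k)=\int e^{-ikV}\mathcal{P}_V(V)\,dV\) and a Gaussian transition density with mean \(c + V_0/a\) and standard deviation s (our reading of the kernel, consistent with the definitions of a and c):

```latex
\begin{aligned}
\tilde{\mathcal{P}}(k) &= e^{-ikc - s^2k^2/2}\,\tilde{\mathcal{P}}(k/a)
\;\Longrightarrow\;
\sum_i \alpha_i k^i = -ikc - \frac{s^2k^2}{2} + \sum_i \alpha_i \frac{k^i}{a^i}, \\
\alpha_1\Bigl(1 - \tfrac{1}{a}\Bigr) &= -ic, \qquad
\alpha_2\Bigl(1 - \tfrac{1}{a^2}\Bigr) = -\frac{s^2}{2}, \qquad
\alpha_i\Bigl(1 - \tfrac{1}{a^i}\Bigr) = 0 \quad (i \ge 3), \\
\tilde{\mathcal{P}}(k) &= e^{\alpha_0}\exp\!\left(-ik\,\frac{ac}{a-1} - \frac{k^2}{2}\,\frac{a^2 s^2}{a^2-1}\right).
\end{aligned}
```

For \(a \neq 1\) all coefficients with \(i \ge 3\) vanish, and \(\alpha_0\) is the free constant. The remaining exponent is the characteristic function of a Gaussian with the mean μ and variance σ² quoted below.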

Transforming back and choosing the constant such that \(\mathcal{P}_V\) has the properties required of a pdf, namely \(\mathcal{P}_V \in \mathbb{R}\) and \(\int \mathcal{P}_V(V)\,dV=1\), yields a Gaussian distribution of \(V_0\) with mean \( \mu = \frac{a c}{a-1}\) and variance \(\sigma^2 = \frac{a^2}{a^2-1}s^2\). The potential evolves from its reset value 0 over a time τ until the input arrives, thus \(\mu = 1 - e^{-\tau}\). Eliminating T yields the result in Eq. (10). Note that τ has to be smaller than \(T_0\), because \(0 < \mu < \theta\), which is biologically well founded.
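The stationary moments can be checked by iterating the one-period map directly. This sketch assumes the map \(V(T) = 1 - (1 - V_0 - \tilde J)/a\) with Gaussian \(\tilde J\) of mean gp and variance \(g^2p(1-p)/K\), so that \(s = \sigma_J/a\). The relation \(a = 1 - gp\,e^{\tau}\), i.e. \(T = \ln\frac{1 - gp\,e^{\tau}}{\vartheta}\), is our own rearrangement of \(\mu = \frac{ac}{a-1} = 1 - e^{-\tau}\) and is not quoted from Eq. (10). Parameter values are hypothetical.

```python
import numpy as np


def synchronous_state(g, p, K, tau, theta_v):
    """Period T and stationary moments of V_0 for the synchronous state (sketch)."""
    a = 1.0 - g * p * np.exp(tau)            # solves mu = a c / (a - 1) = 1 - e^{-tau}
    T = np.log(a / theta_v)                  # since a = theta_v * e^T
    c = 1.0 - (1.0 - g * p) / a
    s2 = g**2 * p * (1 - p) / K / a**2       # variance of the transition density
    mu = a * c / (a - 1.0)
    sigma2 = a**2 / (a**2 - 1.0) * s2
    return T, a, c, mu, sigma2


def simulate_map(a, g, p, K, n_periods, n_neurons, rng):
    """Iterate V -> 1 - (1 - V - J) / a with Gaussian pulse strengths J."""
    sigma_J = np.sqrt(g**2 * p * (1 - p) / K)
    V = np.zeros(n_neurons)
    for _ in range(n_periods):
        J = g * p + sigma_J * rng.standard_normal(n_neurons)
        V = 1.0 - (1.0 - V - J) / a
    return V


g, p, K, tau, theta_v = -0.5, 0.5, 100, 0.2, 0.4    # hypothetical values
T, a, c, mu, sigma2 = synchronous_state(g, p, K, tau, theta_v)
V = simulate_map(a, g, p, K, 50, 200_000, np.random.default_rng(1))
print(f"T = {T:.4f}; mu = {mu:.4f} (simulated {V.mean():.4f}); "
      f"sigma^2 = {sigma2:.2e} (simulated {V.var():.2e})")
```

With inhibitory coupling (g < 0) the period T exceeds \(\ln(1/\vartheta)\), the time a free neuron needs from reset to threshold, and the stationary variance grows as a approaches 1, i.e. as the inhibition becomes weak.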

The pdf of the potential is transformed into a pdf of the spike times using \(\mathcal{P}_V\,|dV|=\mathcal{P}_{t_\theta}\,dt_{\theta}\) and the definition of the time to threshold, \(t_\theta:=\ln\frac{1-V}{\vartheta}\), resulting in Eq. (11).
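A minimal sketch of this change of variables, assuming the Gaussian \(\mathcal{P}_V\) obtained above (the values of μ, σ² and ϑ are hypothetical). Inverting \(t_\theta = \ln\frac{1-V}{\vartheta}\) gives \(V = 1 - \vartheta e^{t}\) and \(|dV/dt| = \vartheta e^{t}\), so \(\mathcal{P}_{t_\theta}(t) = \vartheta e^{t}\,\mathcal{P}_V(1 - \vartheta e^{t})\).

```python
import numpy as np


def gaussian(x, mean, var):
    return np.exp(-(x - mean)**2 / (2 * var)) / np.sqrt(2 * np.pi * var)


def time_to_threshold(V, theta_v):
    """t_theta = ln((1 - V) / theta_v), as defined in the text."""
    return np.log((1 - V) / theta_v)


def spike_time_pdf(t, mu, sigma2, theta_v):
    """P_t(t) = P_V(V(t)) * |dV/dt| with V(t) = 1 - theta_v * e^t."""
    V = 1 - theta_v * np.exp(t)                  # inverse of time_to_threshold
    return gaussian(V, mu, sigma2) * theta_v * np.exp(t)


theta_v, mu, sigma2 = 0.4, 0.18, 9e-4            # hypothetical values
t = np.linspace(0.0, 1.5, 20_001)
pdf = spike_time_pdf(t, mu, sigma2, theta_v)
dt = t[1] - t[0]
print(f"normalization = {pdf.sum() * dt:.6f}, mode near t = {t[np.argmax(pdf)]:.3f}")
```

The result is not exactly Gaussian, because the map from V to \(t_\theta\) is nonlinear; for a narrow \(\mathcal{P}_V\) it is close to a Gaussian centred at \(\ln\frac{1-\mu}{\vartheta}\).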

Appendix B: Identical clusters

B.1 Cluster size

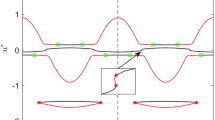

We study the pdf for \(n_c\) clusters in the phase description. For simplicity we do not remove the self-coupling. Analogous to the study of the synchronous state, we consider the evolution of the phase over one period, cf. Table 1. We present the procedure here for two clusters; it works analogously for higher \(n_c\).

The inhibitory pulse of strength \(\tilde{J}_i\), triggered by the firing of cluster i, results in a phase jump Δϕ that depends on the present phase. Using the relationship in Eq. (3), we get:

The Δϕ are determined by the present phase and the pulse strength.

Between these jumps the phase increases linearly by δϕ. We again use the approximation that the inputs due to the spike volley of one cluster arrive at the same time. For the derivation of δϕ, the dynamics of whole clusters, not of single neurons, is relevant. Therefore, in a first step, the calculation is carried out using mean values only, i.e. the deterministic case is considered. Each cluster i is described by one phase \(\phi_i\), and the pulse strengths \(\tilde{J}_i\) are fixed to \(\mu_{J_i}\) instead of being drawn from a Gaussian distribution. The mean values of the pulse strengths are

where \(N_i\) is the number of neurons in cluster i and \(x=\frac{N_1}{N}\) is the relative size of cluster 1.

In the time interval \([0^+;t_{\theta_2}+\tau^-]\), which corresponds to \(\delta\phi_a\), the phase of cluster 2 evolves from \(\phi_2 + \Delta\phi_{2a}\) towards 1 and then from 0 to \(\phi_1\). Here the parameter τ comes into play, because

This yields the following equation for \(\delta\phi_a\):

After one period T, both phases must return to their initial values:

Reinserting the \(\Delta\phi_{ij}\) into the last equation leads, after some transformations, to another equation for \(\delta\phi_a\).

Setting Eq. (29) equal to Eq. (33) and substituting

results in a quadratic equation for b. One of its two solutions is ruled out because b has to be positive.

Reinserting the definitions of a and b and using Eq. (28), we finally obtain an equation for \(\phi_2\) as a function of the given parameters τ, θ, \(\mu_{J_1}\) and \(\mu_{J_2}\). The \(\Delta\phi_{ij}\) can be calculated from their definitions (26), and \(\delta\phi_a\) and \(\delta\phi_b\) from Eqs. (29) and (30), respectively, resulting in

with δϕ(x) as defined below in Eq. (37).

\(\delta\phi_a\) and \(\delta\phi_b\) turn out to be equal for \(x=\frac{1}{2}\), i.e. for clusters of the same size. If two clusters of the same size emerge, the interval between them in the spike train is \(\frac{T}{2}\): each cluster fires exactly in the middle of the other cluster's period.

Now we turn to the actual distribution of the phases. Each cluster i is described by a phase distribution \(\mathcal{P}_{\phi_i}(\phi)\), and we derive a set of \(n_c\) integral equations, one for each cluster. Firing of cluster i results in a pulse of strength \(\tilde{J}\) drawn from the Gaussian distribution \(\mathcal{P}_{J_i}(\tilde{J})\), with means as defined in Eq. (27) and variances

A pulse of strength \(\tilde{J}\) shifts the phase according to Eq. (25) from \(\phi_0\) to \(\phi=\phi_0+\Delta\phi(\phi_0,\tilde{J})=\log_\vartheta(\vartheta^{\phi_0}-\tilde{J})\). Solving this for \(\tilde{J}\) yields

The probability that a pulse triggered by cluster i shifts the phase from ϕ 0 to ϕ is thus
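The phase jump of Eq. (25), its inverse, and the resulting shift density can be sketched as follows. The density follows from the change of variables \(\tilde J = \vartheta^{\phi_0} - \vartheta^{\phi}\) with \(|d\tilde J/d\phi| = |\ln\vartheta|\,\vartheta^{\phi}\); this form, the Gaussian \(\mathcal{P}_{J_i}\), and all parameter values (g < 0 for inhibition, so \(\mu_J < 0\)) are our assumptions for illustration.

```python
import numpy as np


def phase_after_pulse(phi0, J, theta_v):
    """Eq. (25): phi = log_theta_v(theta_v**phi0 - J)."""
    return np.log(theta_v**phi0 - J) / np.log(theta_v)


def pulse_for_shift(phi0, phi, theta_v):
    """Inverse of Eq. (25): pulse strength J that moves the phase from phi0 to phi."""
    return theta_v**phi0 - theta_v**phi


def shift_density(phi, phi0, mu_J, var_J, theta_v):
    """P_{dphi,J}(phi, phi0) = P_J(J(phi0, phi)) * |dJ/dphi| for a Gaussian P_J."""
    J = pulse_for_shift(phi0, phi, theta_v)
    jacobian = abs(np.log(theta_v)) * theta_v**phi
    gauss = np.exp(-(J - mu_J)**2 / (2 * var_J)) / np.sqrt(2 * np.pi * var_J)
    return gauss * jacobian


theta_v, phi0, mu_J, var_J = 0.4, 0.5, -0.25, 6.25e-4   # hypothetical values
phi = np.linspace(-1.0, 1.5, 25_001)
density = shift_density(phi, phi0, mu_J, var_J, theta_v)
dphi = phi[1] - phi[0]
print(f"phase after mean pulse = {phase_after_pulse(phi0, mu_J, theta_v):.4f}, "
      f"normalization = {density.sum() * dphi:.6f}")
```

An inhibitory pulse always lowers the phase, and the same \(\tilde J\) causes a larger jump the closer \(\phi_0\) is to threshold, since \(d\phi/d\phi_0 = \vartheta^{\phi_0}/(\vartheta^{\phi_0} - \tilde J) < 1\) for \(\tilde J < 0\).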

The self-consistent equation for cluster 1 in a 2-cluster state reads

Each term can be understood by considering Table 1. We start with an initial value ϕ′′ drawn from the distribution \(\mathcal{P}_{\phi_1}(\phi'')\). The pulse due to firing of cluster 1 shifts the phase from ϕ′′ to \(\phi'-\delta\phi_a\), corresponding to \(\mathcal{P}_{\Delta\phi,J_1}(\phi'-\delta\phi_a,\phi'')\). Then the phase evolves linearly to ϕ′ until the pulse due to firing of cluster 2 shifts it from ϕ′ to \(\phi+1-\delta\phi_b\), corresponding to \(\mathcal{P}_{\Delta\phi,J_2}(\phi+1-\delta\phi_b,\phi')\). The phase then evolves linearly, is reset, and finally evolves to ϕ. The integration runs over all initial and intermediate values ϕ′′ and ϕ′, respectively. The integral equation for \(\mathcal{P}_{\phi_2}\) is obtained by the replacement \(x \leftrightarrow (1-x)\), and both equations have to hold simultaneously.
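Written out, the verbal description above corresponds to the following self-consistent equation for cluster 1. This is our transcription of that description, with \(\mathcal{P}_{\Delta\phi,J_i}(\phi_{\rm new},\phi_{\rm old})\) the density of a jump from \(\phi_{\rm old}\) to \(\phi_{\rm new}\) caused by the pulse of cluster i:

```latex
\mathcal{P}_{\phi_1}(\phi) = \int d\phi' \int d\phi''\;
\mathcal{P}_{\Delta\phi,J_2}\bigl(\phi + 1 - \delta\phi_b,\ \phi'\bigr)\,
\mathcal{P}_{\Delta\phi,J_1}\bigl(\phi' - \delta\phi_a,\ \phi''\bigr)\,
\mathcal{P}_{\phi_1}(\phi'')
```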

The equations can be solved numerically by discretizing the phase into \(n_{bins}\) bins. The integrals are replaced by sums and the pdf by a vector:

Here \(n_{bins}\) characterizes the quality of the discretization; in the limit \(n_{bins} \to \infty\) the original integral equation is restored. \(n_{bins}\) is also the dimension of the vectors \(\mathbf{P}_i\), and \(P_{i,k}\) denotes the kth component of \(\mathbf{P}_i\). For example, for cluster 1 of the 2-cluster state:

With the introduced \(A_{ij}\) and \(B_{ki}\), this can be written as a matrix equation
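One consistent way to write the discretized equation for cluster 1, assuming bins of width \(\Delta_{\rm bin}\) (a symbol introduced here) centred at phases \(\phi_k\), and reading both integrals of the self-consistent equation as Riemann sums:

```latex
\begin{aligned}
A_{ij} &= \mathcal{P}_{\Delta\phi,J_1}\bigl(\phi_i - \delta\phi_a,\ \phi_j\bigr)\,\Delta_{\rm bin}, \qquad
B_{ki} = \mathcal{P}_{\Delta\phi,J_2}\bigl(\phi_k + 1 - \delta\phi_b,\ \phi_i\bigr)\,\Delta_{\rm bin}, \\
P_{1,k} &= \sum_{i,j} B_{ki}\,A_{ij}\,P_{1,j}
\quad\Longleftrightarrow\quad
\mathbf{P}_1 = \underline{B}\,\underline{A}\,\mathbf{P}_1 .
\end{aligned}
```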

In general, the \(n_c\) integral equations can be transformed into \(n_c\) matrix equations of the form \(\mathbf{P}_i = \underline{M}_i \mathbf{P}_i\). We thus end up with eigenvalue equations for \(\underline{M}_i\) with eigenvalue 1. A solution to the integral equations exists only if every \(\underline{M}_i\) has an eigenvalue equal to 1; the corresponding eigenvector is the discretized pdf. The theory of transfer operators tells us that the eigenvalue corresponding to the stationary state is the leading one, \(\lambda_1\). By construction, the matrices \(\underline{M}_i\) have only positive entries, \((\underline{M}_i)_{kl}>0\). Hence the Perron-Frobenius theorem applies: the largest eigenvalue is simple, and the corresponding eigenvector, i.e. the distribution, is unique if \(\lambda_1 = 1\). We find that a solution exists only for identical clusters; e.g. for \(n_c = 2\), the largest eigenvalue of each matrix equals 1 only if \(x=\frac{1}{2}\). Thus cluster states are stationary only for clusters of the same size.
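The eigenvalue approach can be illustrated on the simpler single-step kernel of Appendix A instead of the cluster matrices \(\underline{M}_i\). This minimal sketch assumes a Gaussian transition kernel with mean \(c + V_0/a\) and standard deviation \(s = \sigma_J/a\), hypothetical parameter values, and a grid spanning ±10 stationary standard deviations. The discretized matrix is strictly positive, its leading eigenvalue should be close to 1, and the normalized eigenvector should reproduce the Gaussian with \(\mu = \frac{ac}{a-1}\) and \(\sigma^2 = \frac{a^2}{a^2-1}s^2\).

```python
import numpy as np


def discretized_kernel(grid, a, c, s):
    """M[k, l] = Gaussian(V_k; mean c + V_l / a, std s) * bin width."""
    dV = grid[1] - grid[0]
    diff = grid[:, None] - (c + grid[None, :] / a)   # row k: end value, column l: start value
    return np.exp(-diff**2 / (2 * s**2)) / np.sqrt(2 * np.pi * s**2) * dV


def leading_eigenpair(M):
    """Eigenvalue of largest modulus and its eigenvector (real parts)."""
    w, v = np.linalg.eig(M)
    idx = np.argmax(np.abs(w))
    return w[idx].real, v[:, idx].real


g, p, K, tau = -0.5, 0.5, 100, 0.2          # hypothetical values, g < 0
a = 1.0 - g * p * np.exp(tau)               # a = theta_v * e^T for the synchronous state
c = 1.0 - (1.0 - g * p) / a
s = np.sqrt(g**2 * p * (1 - p) / K) / a
mu, sigma = a * c / (a - 1.0), np.sqrt(a**2 / (a**2 - 1.0)) * s

grid = np.linspace(mu - 10 * sigma, mu + 10 * sigma, 400)   # n_bins = 400
dV = grid[1] - grid[0]
M = discretized_kernel(grid, a, c, s)
lam, vec = leading_eigenpair(M)
P = vec / (vec.sum() * dV)                  # normalize to a pdf; also fixes the sign
P_exact = np.exp(-(grid - mu)**2 / (2 * sigma**2)) / np.sqrt(2 * np.pi * sigma**2)
print(f"lambda_1 = {lam:.6f}, max |P - P_exact| = {np.max(np.abs(P - P_exact)):.2e}")
```

Increasing the number of bins tightens the agreement, mirroring the limit \(n_{bins} \to \infty\) in which the integral equation is restored.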

B.2 Stability

The matrix equations for these states with identical clusters read in general:

with \(\underline{M}:=\underline{B}\ \underline{A}\cdots\underline{A}\) and \(\underline{A}\), \(\underline{B}\) defined as:

where \(\mathcal{P}_{J}(J)\) is a Gaussian distribution with mean and variance

δϕ denotes the normalized time elapsed between consecutive cluster firings. We define \(\Phi(\phi)\) as the map from the phase ϕ at one firing time to the phase at the next firing time. After each firing, an input arrives with delay τ; thus, with Eq. (25),

Because \(n_c\) firings occur during one period, δϕ is the solution of the nested equation

where \(\Phi(\phi)\) is nested \(n_c\) times.
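The nested condition can be solved numerically by bisection. This minimal sketch rests on assumptions of ours: the phase advances by 1 per \(\ln(1/\vartheta)\) (consistent with \(\phi_{n_c}(0)=1+\frac{\tau_{max}}{\ln\vartheta}\) in Appendix C); Φ advances the phase by the normalized delay, applies the mean pulse through Eq. (25), and then advances by the remaining δϕ minus the delay; the nested condition is read as \(\Phi^{n_c}(0) = 1\), i.e. a cluster that has just fired reaches threshold after \(n_c\) firings; and the mean pulse from one of \(n_c\) identical clusters is taken as \(gp/n_c\). As a cross-check, for \(n_c = 1\) the result should equal \(T = \ln\frac{1 - gp\,e^{\tau}}{\vartheta}\), which follows from solving \(\mu = \frac{ac}{a-1} = 1 - e^{-\tau}\) in Appendix A for T.

```python
import numpy as np


def next_firing_phase(phi, dphi, mu_J, tau_n, theta_v):
    """Phi(phi): advance by the normalized delay tau_n, apply the mean pulse via
    Eq. (25), then advance by the rest of the inter-firing interval dphi - tau_n.
    Assumes the delayed input arrives before the next cluster fires (tau_n < dphi)."""
    phi_at_input = phi + tau_n
    return np.log(theta_v**phi_at_input - mu_J) / np.log(theta_v) + dphi - tau_n


def solve_dphi(n_c, mu_J, tau, theta_v, tol=1e-13):
    """Bisection for dphi such that Phi applied n_c times to 0 gives 1."""
    tau_n = tau / np.log(1.0 / theta_v)    # phase advances by 1 per ln(1/theta_v)

    def residual(dphi):
        phi = 0.0
        for _ in range(n_c):
            phi = next_firing_phase(phi, dphi, mu_J, tau_n, theta_v)
        return phi - 1.0                   # increasing in dphi

    lo, hi = tau_n, 10.0
    if residual(lo) >= 0 or residual(hi) <= 0:
        raise ValueError("no solution with tau_n < dphi for these parameters")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if residual(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


g, p, tau, theta_v = -0.5, 0.5, 0.2, 0.4   # hypothetical values
T0 = np.log(1.0 / theta_v)
for n_c in (1, 2, 3):
    dphi = solve_dphi(n_c, g * p / n_c, tau, theta_v)   # assumed mean pulse g p / n_c
    print(f"n_c = {n_c}: dphi = {dphi:.5f}, period T = {n_c * dphi * T0:.5f}")
```

Bisection is used because the composed map is monotonically increasing in δϕ, so the root is unique and bracketing is guaranteed once the two end points have opposite signs.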

A perturbation \(\delta\mathbf{P}_0\) evolves over one period according to \(\delta\mathbf{P}_{n+1} = \underline{M}\,\delta\mathbf{P}_n\). Powers of \(\underline{M}\) converge to a (right) stochastic matrix

Thus, for n → ∞, any perturbation converges to \(\mathbf P\) up to a constant factor.

The total variance \(\mathrm{Var}_{tot}\) of a matrix is defined as the sum of the variances of its rows:
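The convergence of \(\underline{M}^n\) and the total variance can be illustrated with a small random matrix. This sketch assumes column normalization (each column sums to 1, so that \(\mathbf{P}_{n+1} = \underline{M}\,\mathbf{P}_n\) conserves probability) and reads "variance of a row" as the population variance of its entries (NumPy's default for `np.var`); the matrix itself is hypothetical. In the limit every column of \(\underline{M}^n\) equals the stationary vector, so each row becomes constant and \(\mathrm{Var}_{tot}\) decays to zero.

```python
import numpy as np


def total_variance(M):
    """Var_tot: sum over rows of the (population) variance of each row's entries."""
    return np.var(M, axis=1).sum()


rng = np.random.default_rng(2)
M = rng.random((6, 6)) + 0.1               # strictly positive entries (hypothetical)
M /= M.sum(axis=0, keepdims=True)          # columns sum to 1

Mn = np.eye(6)
for n in range(1, 201):
    Mn = M @ Mn                            # Mn = M^n
    if n in (1, 10, 200):
        print(f"n = {n:3d}: Var_tot = {total_variance(Mn):.3e}")

# Stationary vector: eigenvector of the leading (Perron) eigenvalue, normalized to sum 1
w, v = np.linalg.eig(M)
P = v[:, np.argmax(np.abs(w))].real
P /= P.sum()
```

The decay rate of \(\mathrm{Var}_{tot}\) is set by the second-largest eigenvalue modulus of \(\underline{M}\), which makes it a convenient scalar measure of how fast perturbations die out.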

Appendix C: Number of clusters

Numerical simulations show that clusters of identical size emerge, and we showed this explicitly for two clusters in Appendix B. The resulting symmetry simplifies the algebraic treatment. In Eq. (46) we already defined the function \(\Phi(\phi)\) that maps the phase from one firing event to the next, which occurs a normalized time δϕ later. Because of this symmetry, the overall phase distribution is the same at all spiking times; only the labels have changed:

Into the last equation we insert the upper bound \(\tau_{max}\) of Eq. (13), \(\phi_{n_c}(0)=1+\frac{\tau_{max}}{\ln(\vartheta)}\), and solve for δϕ and \(\Phi(\phi)\), respectively.

Inserting this into Eq. (12) and changing variables \(\phi \to \vartheta^{\phi}\) results in the final Eq. (14).

Cite this article

Friedrich, J., Kinzel, W. Dynamics of recurrent neural networks with delayed unreliable synapses: metastable clustering. J Comput Neurosci 27, 65–80 (2009). https://doi.org/10.1007/s10827-008-0127-1
