Abstract

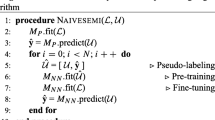

This paper investigates the problem of augmenting labeled data with unlabeled data to improve classification accuracy. This is significant for many applications such as image classification where obtaining classification labels is expensive, while large unlabeled examples are easily available. We investigate an Expectation Maximization (EM) algorithm for learning from labeled and unlabeled data. The reason why unlabeled data boosts learning accuracy is because it provides the information about the joint probability distribution. A theoretical argument shows that the more unlabeled examples are combined in learning, the more accurate the result. We then introduce B-EM algorithm, based on the combination of EM with bootstrap method, to exploit the large unlabeled data while avoiding prohibitive I/O cost. Experimental results over both synthetic and real data sets show that the proposed approach has a satisfactory performance.

Similar content being viewed by others

References

Babu, S., & Widom, J. (2001). Continuous queries over data streams. SIGMOD Record, 30(3), 109–120.

Blum, A., & Mitchell, T. (1998). Combining labeled and unlabeled data with co-training. In Proceedings of the Eleventh Annual Conference on Computational Learning Theory (pp. 92–100), Madison, Wisconsin.

Bradley, P. S., Fayyad, U. M., & Reina, C. A. (1998, August). Scaling clustering algorithms to large databases. In Proceedings of the Fourth ACM KDD International Conference on Knowledge Discovery and Data Mining (pp. 9–15), New York.

Carreira-Perpinan, M. (1996). A review of dimension reduction techniques. In Technique Report, CS-96-09. Department of Computer Science, University of Sheffield.

Castelli, V., & Cover, T. (1995). On the exponential value of labeled samples. Pattern Recognition Letters, 6(1), 105–111.

Castelli, V., & Cover, T. (1996). The relative value of labeled and unlabeled samples in pattern recognition with an unknown mixing parameters. IEEE Transaction on Information Theory, 42(6), 2102–2117.

Chakrabarti, S., Dom, B., Agrawal, R., & Raghavan, P. (1997). Using taxonomy, discriminants, and signatures for navigating in text databases. In Proceedings of the 23rd Very Large Data Bases Conference (pp. 446–455), Athens, Greece.

Chapmann, B., & Tibshirani, R. (1993). An introduction to the bootstrap. monograph on statistics and applied probability. Chapman and Hall.

Cheeseman, P., & Stutz, J. (1996). Bayesian classification (autoclass): Theory and results. Advances in Knowledge Discovery and Data Mining.

Dempster, A., Laird, N., & Rubin, D. (1977). Maximum likelihood from incomplete data via the em algorithm. Journal of the Royal Statistical Society, 39(1),1–38.

Gehrke, J., Ganti, V., Ramakrishnan, R., & Loh, W.-Y. (1999). Boat-optimistic decision tree construction. In Proceedings of the ACM SIGMOD International Conference on Management of Data (pp. 169–180) Philadelphia, Pennsylvania.

Gehrke, J., Ramakrishnan, R., & Ganti, V. (1998). Rainforest—a framework for fast decision tree construction of large datasets. In Proceedings of the 24th Very Large Data Bases Conference (pp. 416–427), New York.

Ghaharmani, Z., & Jordan, M. (1994). Supervised learning from incomplete data via an em approach. In Proceedings of Advances in Neural Information Processing Systems (pp. 120–127), Denver, Colorado.

Goldberg, D. (1999). Genetic algorithms in search, optimization and machine learning. Reading, Massachussetts: Morgan Kaufmann.

James, M. (1985). Classification algorithms. New York: Wiley & Sons.

Lippmann, R. (1987). An introduction to computing with neural nets. IEEE ASSP Magazine, 4(22), 4–22.

Mehta, M., Agrawal, R., & Risanen, J. (1996). Sliq: A fast scalable classifier for data mining. In Proceedings of the Fifth International Conference on Extending Database Technology, (pp. 18–32), Avignon, France.

Neal, R., & Hinton, G. (1999). A view of the em algorithm that justifies incremental, sparse, and other variants. In M. I. Jordan (Ed.), Learning in graphical models (pp. 355–368). Norwell, Massachussetts: Kluwer Academic Publishers.

Nigam, K., Mccallum, A. Thrun, S., & Mitchel, T. (2000). Text classification from labeled and unlabeled documents. Machine Learning, 39(2/3), 103–134.

Ortega-Binderberger, M. (1999) Corel image features. http://kdd.ics.uci.edu/databases/CorelFeatures/CorelFeatures.html.

Quinlan, J. (1986). Induction of decision trees. Machine Learning, 1, 81–106.

Quinlan, J. (1989). Unknown attribute values in induction. In Proceedings of the Sixth International Machine Learning Workshop (pp. 164–168), Ithaca, New York.

Shafer, J., Agrawal, R., & Mehta, M. (1996). Sprint: A scalable parallel classifier for data mining. In Proceedings of the 22nd Very Large Data Bases Conference (pp. 544–555), Bombay, India.

Shanhshanhani, B., & Landgrebe, D. (1994). The effect of unlabeled samples in reducing the small sample size problem and mitigating the hughes phenomenon. IEEE Transactions on Geoscience and Remote Sensing, 32(5), 1087–1095.

Tian, Q., Wu, Y., & Huang, T. S. (2000). Incorporate discriminant analysis with em algorithm in image retrieval. In Proceedings of IEEE International Conference on Multimedia and Expo(I), New York City, New York (pp. 299–302).

Toutanova, K., Chen, F., Popat, K., & Hifmann, T. (2001). Text classification in a hierarchical mixture model for small training sets. In Proceedings of ACM International Conference on Information and Knowledge Management (pp. 105–112), Atlanta, Georgia.

Wu, X., Fan, J., & Subramanian, K. R. (2002). B-EM: A classifier incorporating bootstrap with em approach for data mining. In Proceedings of ACM International Conference on Knowledge Discovery and Data Mining. (pp. 670–675), Edmonton, Canada.

Author information

Authors and Affiliations

Corresponding author

Additional information

A short version of this paper (Wu, Fan, & Subramaian, 2002) was published in the ACM-SIGKDD Conference in Knowledge Discovery and Data Mining, 2002. This manuscript is a much extended version that describes in detail the algorithms, techniques, and contains more experimental results.

Rights and permissions

About this article

Cite this article

Wu, X. Incorporating large unlabeled data to enhance EM classification. J Intell Inf Syst 26, 211–226 (2006). https://doi.org/10.1007/s10844-006-0865-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10844-006-0865-3