Abstract

Nowadays, data scientists prefer “easy” high-level languages such as R and Python, which accomplish complex mathematical tasks with a few lines of code but suffer from memory and speed limitations. Data summarization has long been a fundamental technique in data mining, and it holds promise for more demanding data science applications. Unfortunately, most summarization approaches still work the old-fashioned way: they read the entire data set before computing any machine learning (ML) model. Moreover, it is hard to learn models when data samples are added or removed. With these motivations in mind, we present incremental algorithms that smartly compute the summarization matrix, previously used in parallel DBMSs, in order to compute ML models incrementally in data science languages. Unlike previous approaches, our new smart algorithms periodically interleave model computation while the data set is being summarized. A salient feature is scalability to large data sets, provided the summarization matrix fits in RAM, which is a reasonable assumption in most cases. We show that our incremental approach is intelligent and works for a wide spectrum of ML models. Our experimental evaluation shows that models become increasingly accurate, reaching full accuracy once the data set has been completely scanned. In addition, we show that our incremental algorithms are as fast as a Python ML library and much faster than built-in R routines.
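The core idea above, a summarization matrix that is updated one point at a time (and downdated when a point is removed) while a model is recomputed from it periodically, can be illustrated with a small sketch. It assumes a Gamma-style layout, Γ = Σᵢ zᵢzᵢᵀ with zᵢ = [1, xᵢ, yᵢ], so that Γ holds the point count, the per-feature sums, and all cross-product sums with the target. The class `IncrementalSummary`, its methods, the pivot tolerance, the recomputation period, and the choice of linear regression as the example model are hypothetical illustrations, not the authors' implementation. Pure Python is used so the example has no dependencies.

```python
# A minimal sketch (assumption: NOT the authors' implementation) of incremental
# and decremental maintenance of a Gamma-style summarization matrix, with a
# linear regression model recomputed from it periodically during the scan.
from typing import List, Sequence


class IncrementalSummary:
    """Maintains Gamma = sum_i z_i z_i^T, with z_i = [1, x_i1, ..., x_id, y_i]."""

    def __init__(self, d: int) -> None:
        self.k = d + 2  # one row of ones, d feature rows, one target row
        self.gamma = [[0.0] * self.k for _ in range(self.k)]

    def _update(self, x: Sequence[float], y: float, sign: float) -> None:
        # Rank-one update (sign=+1) or downdate (sign=-1) of Gamma.
        z = [1.0, *x, y]
        for a in range(self.k):
            for b in range(self.k):
                self.gamma[a][b] += sign * z[a] * z[b]

    def add(self, x: Sequence[float], y: float) -> None:
        self._update(x, y, 1.0)

    def remove(self, x: Sequence[float], y: float) -> None:
        self._update(x, y, -1.0)

    @property
    def n(self) -> float:
        # Gamma[0][0] = sum of 1*1 = number of points currently summarized.
        return self.gamma[0][0]

    def linear_regression(self) -> List[float]:
        """Solve the normal equations (X X^T) beta = X Y^T using only Gamma.

        X includes the row of ones, so beta[0] is the intercept.
        """
        m = self.k - 1  # intercept + d coefficients
        # Augmented system [A | b]: A = top-left m x m block, b = column m.
        aug = [self.gamma[r][:m] + [self.gamma[r][m]] for r in range(m)]
        # Gauss-Jordan elimination with partial pivoting.
        for col in range(m):
            piv = max(range(col, m), key=lambda r: abs(aug[r][col]))
            if abs(aug[piv][col]) < 1e-12:  # hypothetical tolerance
                raise ValueError("singular system: not enough independent points yet")
            aug[col], aug[piv] = aug[piv], aug[col]
            for r in range(m):
                if r != col:
                    f = aug[r][col] / aug[col][col]
                    for c in range(col, m + 1):
                        aug[r][c] -= f * aug[col][c]
        return [aug[r][m] / aug[r][r] for r in range(m)]


if __name__ == "__main__":
    # Hypothetical noise-free data: y = 2 + 3*x1 - x2.
    stream = [((float(i), float(i * i % 7)), 2.0 + 3.0 * i - (i * i % 7))
              for i in range(1, 21)]
    summary = IncrementalSummary(d=2)
    for t, (x, y) in enumerate(stream, start=1):
        summary.add(x, y)
        if t % 5 == 0:  # recompute the model every 5 points (arbitrary period)
            print(t, [round(b, 6) for b in summary.linear_regression()])
    summary.remove(*stream[0])  # decremental update: forget the first point
    print(int(summary.n), [round(b, 6) for b in summary.linear_regression()])
```

Because every entry of Γ is a plain sum over points, adding and removing a point are symmetric O(d²) updates, and the model solve depends only on d, not on n. This is why recomputing the model every few points stays cheap as long as Γ fits in RAM.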


References

Ahmed, M. (2019). Data summarization: A survey. Knowledge and Information Systems, 58(2), 249–273.

Al-Amin, S.T., Chebolu, S.U.S., & Ordonez, C. (2020). Extending the R language with a scalable matrix summarization operator. In IEEE International conference on big data, big data 2020 (pp. 399–405).

Altiparmak, F., Tuncel, E., & Ferhatosmanoglu, H. (2008). Incremental maintenance of online summaries over multiple streams. IEEE Transactions on Knowledge and Data Engineering, 20(2), 216–229.

Beazley, D.M. (1996). SWIG: An easy to use tool for integrating scripting languages with C and C++. In Fourth annual USENIX tcl/tk workshop.

Bradley, P., Fayyad, U., & Reina, C. (1998). Scaling clustering algorithms to large databases. In Proc. ACM KDD conference (pp. 9–15).

Cauwenberghs, G., & Poggio, T.A. (2000). Incremental and decremental support vector machine learning. In Advances in neural information processing systems 13, papers from neural information processing systems (NIPS) 2000 (pp. 409–415). Denver: MIT Press.

Chebolu, S.U.S., Ordonez, C., & Al-Amin, S.T. (2019). Scalable machine learning in the R language using a summarization matrix. In Database and expert systems applications DEXA (pp. 247–262).

Chen, Y., Xiong, J., Xu, W., & Zuo, J. (2019). A novel online incremental and decremental learning algorithm based on variable support vector machine. Cluster Computing, 22(Supplement), 7435–7445.

Das, S., Sismanis, Y., Beyer, K., Gemulla, R., Haas, P., & McPherson, J. (2010). RICARDO: Integrating R and hadoop. In Proc. ACM SIGMOD conference (pp. 987–998).

David, J., Pessemier, T.D., Dekoninck, L., Coensel, B.D., Joseph, W., Botteldooren, D., & Martens, L. (2020). Detection of road pavement quality using statistical clustering methods. Journal of Intelligent Information System, 54, 483–499.

Dua, D., & Graff, C. (2017). UCI machine learning repository. http://archive.ics.uci.edu/ml.

Eddelbuettel, D. (2013). Seamless r and c++ integration with rcpp. New York: Springer.

Gepperth, A., & Hammer, B. (2016). Incremental learning algorithms and applications. In 24Th european symposium on artificial neural networks, ESANN.

Hastie, T., Tibshirani, R., & Friedman, J. (2001). The Elements of Statistical Learning, 1st edn. New York: Springer.

He, H., Chen, S., Li, K., & Xu, X. (2011). Incremental learning from stream data. IEEE Transactions on Neural Networks, 22(12), 1901–1914.

James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An introduction to statistical learning Vol. 112. Berlin: Springer.

Karasuyama, M., & Takeuchi, I. (2009). Multiple incremental decremental learning of support vector machines. In 23Rd annual conference on neural information processing systems 2009 (pp. 907–915).

LeCun, Y., Bengio, Y., & Hinton, G.E. (2015). Deep learning. Nature, 521(7553), 436–444.

Levatic, J., Ceci, M., Kocev, D., & Dzeroski, S. (2017). Semi-supervised classification trees. Journal of Intelligent Information System, 49, 461–486.

Ordonez, C., Zhang, Y., & Cabrera, W. (2016). The Gamma matrix to summarize dense and sparse data sets for big data analytics. IEEE Transactions on Knowledge and Data Engineering (TKDE), 28(7), 1906–1918.

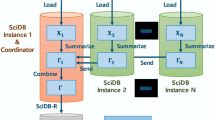

Ordonez, C., Zhang, Y., & Johnsson, S.L. (2019). Scalable machine learning computing a data summarization matrix with a parallel array DBMS. Distributed and Parallel Databases, 37(3), 329–350.

Osojnik, A., Panov, P., & Dzeroski, S. (2018). Tree-based methods for online multi-target regression. Journal of Intelligent Information System, 50, 315–339.

Patra, B.K., & Nandi, S. (2015). Effective data summarization for hierarchical clustering in large datasets. Knowledge and Information Systems, 42(1), 1–20.

Pedregosa, F., Varoquaux, G., Gramfort, A., & Michel, V. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Polikar, R., Upda, L., Upda, S.S., & Honavar, V.G. (2001). Learn++: an incremental learning algorithm for supervised neural networks. IEEE Trans. Syst. Man Cybern. Part C, 31(4), 497–508.

Ross, D.A., Lim, J., Lin, R., & Yang, M. (2008). Incremental learning for robust visual tracking. International Journal of Computer Vision, 77, 125–141.

Rumsey, D. (2011). Statistics For Dummies. –For dummies. Hoboken: Wiley.

Spokoiny, A., & Shahar, Y. (2008). Incremental application of knowledge to continuously arriving time-oriented data. Journal of Intelligent Information System 1–33.

Tari, L., Tu, P.H., Hakenberg, J., Chen, Y., Son, T.C., Gonzalez, G., & Baral, C. (2012). Incremental information extraction using relational databases. IEEE Transactions on Knowledge and Data Engineering, 24(1), 86–99.

Totad, S.G., Geeta, R.B., & Reddy, P.V.G.D.P. (2012). Batch incremental processing for fp-tree construction using fp-growth algorithm. Knowledge and Information Systems, 33(2), 475–490.

Zakai, A. (2011). Emscripten: an llvm-to-javascript compiler. In C.V. Lopes K. Fisher (Eds.) Companion to the 26th annual ACM SIGPLAN conference on object-oriented programming, systems, languages, and applications, OOPSLA (pp. 301–312). ACM.

Zhang, T., Ramakrishnan, R., & Livny, M. (1996). BIRCH: An efficient data clustering method for very large databases. In Proc. ACM SIGMOD conference (pp. 103–114).

Zhang, Y., Zhang, W., & Yang, J. (2010). I/o-efficient statistical computing with RIOT. In Proc. ICDE.

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Al-Amin, S.T., Ordonez, C. Incremental and accurate computation of machine learning models with smart data summarization. J Intell Inf Syst 59, 149–172 (2022). https://doi.org/10.1007/s10844-021-00690-5
