Abstract

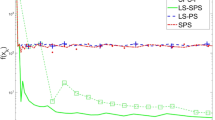

We propose a novel stochastic Nesterov’s smoothing accelerated method for general nonsmooth, constrained, stochastic composite convex optimization, the nonsmooth component of which may be not easy to compute its proximal operator. The proposed method combines Nesterov’s smoothing accelerated method (Nesterov in Math Program 103(1):127–152, 2005) for deterministic problems and stochastic approximation for stochastic problems, which allows three variants: single sample and two different mini-batch sizes per iteration, respectively. We prove that all the three variants achieve the best-known complexity bounds in terms of stochastic oracle. Numerical results on a robust linear regression problem, as well as a support vector machine problem show that the proposed method compares favorably with other state-of-the-art first-order methods, and the variants with mini-batch sizes outperform the variant with single sample.

Similar content being viewed by others

References

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Chapelle, O., Sindhwani, V., Keerthi, S.S.: Optimization techniques for semi-supervised support vector machines. J. Mach. Learn. Res. 9, 203–233 (2008)

Shivaswamy, P.K., Jebara, T.: Relative margin machines. NIPS 19, 1481–1488 (2008)

Li, J., Chen, C., So, A.M.C.: Fast epigraphical projection-based incremental algorithms for Wasserstein distributionally robust support vector machine. NIPS 33, 4029–4039 (2020)

Crammer, K., Singer, Y.: On the algorithmic implementation of multiclass kernel-based vector machines. J. Mach. Learn. Res. 2, 265–292 (2001)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22(3), 400–407 (1951)

Polyak, B.: New stochastic approximation type procedures. Automat. i Telemekh. 7(2), 98–107 (1990). ((English translation: Automation and Remote Control))

Polyak, B., Juditsky, A.: Acceleration of stochastic approximation by averaging. SIAM J. Control. Optim. 30(4), 838–855 (2006)

Nemirovski, A., Juditsky, A., Lan, G., Shapiro, A.: Robust stochastic approximation approach to stochastic programming. SIAM J. Optim. 19(4), 1574–1609 (2009)

Nemirovsky, A., Yudin, D.B.: Problem Complexity and Method Efficiency in Optimization. Wiley, New York (1983)

Lan, G., Nemirovski, A., Shapiro, A.: Validation analysis of mirror descent stochastic approximation method. Math. Program. 134(2), 425–458 (2012)

Ghadimi, S., Lan, G., Zhang, H.: Mini-batch stochastic approximation methods for nonconvex stochastic composite optimization. Math. Program. 155(1–2), 267–305 (2016)

Wang, X., Wang, X., Yuan, Y.-X.: Stochastic proximal quasi-Newton methods for non-convex composite optimization. Optim. Methods Softw. 34(5), 922–948 (2019)

Chen, S., Ma, S., So, A.M.-C., Zhang, T.: Proximal gradient method for nonsmooth optimization over the Stiefel manifold. SIAM J. Optim. 30(1), 210–239 (2020)

Xiao, X.: A unified convergence analysis of stochastic Bregman proximal gradient and extragradient method. J. Optim. Theory Appl. 188(3), 605–627 (2021)

Bai, J., Hager, W.W., Zhang, H.: An inexact accelerated stochastic ADMM for separable convex optimization. Comput. Optim. Appl. 81, 479–518 (2022)

Bai, J., Han, D., Sun, H., Zhang, H.: Convergence on a symmetric accelerated stochastic ADMM with larger stepsizes. CSIAM-AM. (2022). https://doi.org/10.4208/csiam-am.SO-2021-0021

Xiao, L., Zhang, T.: A proximal stochastic gradient method with progressive variance reduction. SIAM J. Optim. 24(4), 2057–2075 (2014)

Nitanda, A.: Stochastic proximal gradient descent with acceleration techniques. NIPS 27, 1574–1582 (2014)

Wang, X., Wang, S., Zhang, H.: Inexact proximal stochastic gradient method for convex composite optimization. Comput. Optim. Appl. 68(3), 579–618 (2017)

Allen-Zhu, Z.: Katyusha: the first direct acceleration of stochastic gradient methods. J. Mach. Learn. Res. 18(1), 8194–8244 (2017)

Reddi, S., Sra, S., Poczos, B., Smola, A.J.: Proximal stochastic methods for nonsmooth nonconvex finite-sum optimization. NIPS 29, 1145–1153 (2016)

Pham, N.H., Nguyen, L.M., Phan, D.T., Tran-Dinh, Q.: ProxSARAH: an efficient algorithmic framework for stochastic composite nonconvex optimization. J. Mach. Learn. Res. 21(110), 1–48 (2020)

Shivaswamy, P.K., Jebara, T.: Maximum relative margin and data-dependent regularization. J. Mach. Learn. Res. 11(2), 747–788 (2010)

Zhang, T., Zhou, Z.H.: Optimal margin distribution machine. IEEE Trans. Knowl. Data Eng. 32(6), 1143–1156 (2019)

Crammer, K., Dredze, M., Pereira, F.: Confidence-weighted linear classification for text categorization. J. Mach. Learn. Res. 13(1), 1891–1926 (2012)

Bertsimas, D., Gupta, V., Kallus, N.: Robust sample average approximation. Math. Program. 171(1), 217–282 (2018)

Chen, X.: Smoothing methods for nonsmooth, nonconvex minimization. Math. Program. 134(1), 71–99 (2012)

Chen, X.: Smoothing methods for complementarity problems and their applications: a survey. J. Oper. Res. Soc. Jpn. 43(1), 32–47 (2000)

Zhang, C., Chen, X.: A smoothing active set method for linearly constrained non-Lipschitz nonconvex optimization. SIAM J. Optim. 30, 1–30 (2020)

Zhang, C., Chen, X.: Smoothing projected gradient method and its application to stochastic linear complementarity problems. SIAM J. Optim. 20, 627–649 (2009)

Polyak, B.: Introduction to Optimization. Optimization Software Inc., New York (1987)

Ouyang, H., Gray, A.G.: Stochastic smoothing for nonsmooth minimizations: accelerating SGD by exploiting structure. ICML 2, 1523–1530 (2012)

Devolder, O., Glineur, F., Nesterov, Y.: Double smoothing technique for large-scale linearly constrained convex optimization. SIAM J. Optim. 22(2), 702–727 (2012)

Quoc, T.: Adaptive smoothing algorithms for nonsmooth composite convex minimization. Comput. Optim. Appl. 66(3), 425–451 (2017)

Duchi, J.C., Bartlett, P.L., Wainwright, M.J.: Randomized smoothing for stochastic optimization. SIAM J. Optim. 22(2), 674–701 (2012)

Tseng, P.: On accelerated proximal gradient methods for convex-concave optimization, http://www.math.washington.edu/~tseng/papers/apgm.pdf (2008)

Li, Z., Li, J.: A simple proximal stochastic gradient method for nonsmooth nonconvex optimization. NIPS 31, 5569–5579 (2018)

Lan, G.: An optimal method for stochastic composite optimization. Math. Program. 133(1), 365–397 (2012)

Defazio, A., Bach, F., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. NIPS 27, 422 (2014)

Reddi, S.J., Hefny, A., Sra, S., Póczos, B., Smola, A.: Stochastic variance reduction for nonconvex optimization. ICML. 2, 314–323 (2016)

Nguyen, L.M., Liu, J., Scheinberg, K., Takác, M.: SARAH: a novel method for machine learning problems using stochastic recursive gradient. ICML. 5, 2613–2621 (2017)

Zhou, K., Jin, Y., Ding, Q., Cheng, J.: Amortized Nesterov’s momentum: a robust momentum and its application to deep learning. UAI. 7, 211–220 (2020)

Beck, A.: First-Order Methods in Optimization. SIAM, Philadelphia (2017)

Noble, W.S.: What is a support vector machine? Nat. Biotechnol. 24(12), 1565–1567 (2006)

Huang, S., Cai, N., Pacheco, P.P., Narrandes, S., Wang, Y., Xu, W.: Applications of support vector machine (SVM) learning in cancer genomics. Cancer Genom. Proteom. 15(1), 41–51 (2018)

Rodriguez, R., Vogt, M., Bajorath, J.: Support vector machine classification and regression prioritize different structural features for binary compound activity and potency value prediction. ACS Omega 2(10), 6371–6379 (2017)

Chang, C., Lin, C.: LIBSVM: a library for support vector machines. ACM Trans. Intel. Syst. Tecnol. 2(3), 1–27 (2011)

Acknowledgements

The work is supported in part by “the Natural Science Foundation of Beijing, China” (Grant No. 1202021) and “the Natural Science Foundation of China” (Grant No. 12171027).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, R., Zhang, C., Wang, L. et al. A Stochastic Nesterov’s Smoothing Accelerated Method for General Nonsmooth Constrained Stochastic Composite Convex Optimization. J Sci Comput 93, 52 (2022). https://doi.org/10.1007/s10915-022-02016-1

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-022-02016-1