Abstract

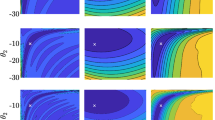

A known approach to optimization is the cyclic (or alternating or block coordinate) method, where the full parameter vector is divided into two or more subvectors and the process proceeds by sequentially optimizing each of the subvectors, while holding the remaining parameters at their most recent values. One advantage of such a scheme is the preservation of potentially large investments in software, while allowing for an extension of capability to include new parameters for estimation. A specific case of interest involves cross-sectional data modeled in state–space form, where there is interest in estimating the mean vector and covariance matrix of the initial state vector as well as certain parameters associated with the dynamics of the underlying differential equations (e.g., power spectral density parameters). This paper shows that, under reasonable conditions, the cyclic scheme leads to parameter estimates that converge to the optimal joint value for the full vector of unknown parameters. The convergence conditions here differ from others in the literature. Further, relative to standard search methods on the full vector, the numerical results here suggest a more general property of faster convergence for the cyclic (seesaw) scheme as a consequence of the more “aggressive” (larger) gain coefficient (step size) possible.
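The cyclic idea described above can be illustrated with a minimal sketch. It is not the algorithm analyzed in the paper: it assumes a strictly convex quadratic loss, splits the parameter vector into two blocks, and minimizes exactly over one block at a time while the other stays at its most recent value. The function name `seesaw_quadratic`, the `split` argument, and the test problem are hypothetical choices made only for illustration.

```python
import numpy as np

def seesaw_quadratic(H, g, split, n_cycles=200):
    """Cyclic minimization of f(t) = 0.5 t'Ht - g't over two blocks.

    Assumes H is symmetric positive definite, so each block subproblem
    has a unique minimizer (an assumption of this sketch only).
    """
    n = H.shape[0]
    block1, block2 = np.arange(split), np.arange(split, n)
    theta = np.zeros(n)
    for _ in range(n_cycles):
        for own, other in ((block1, block2), (block2, block1)):
            # Exact minimization over the active block with the other
            # block held fixed: H_oo t_o = g_o - H_ox t_x.
            rhs = g[own] - H[np.ix_(own, other)] @ theta[other]
            theta[own] = np.linalg.solve(H[np.ix_(own, own)], rhs)
    return theta

# Hypothetical test problem: a random symmetric positive definite H.
rng = np.random.default_rng(0)
M = rng.standard_normal((5, 5))
H = M @ M.T + 5.0 * np.eye(5)
g = rng.standard_normal(5)

theta_seesaw = seesaw_quadratic(H, g, split=2)
theta_joint = np.linalg.solve(H, g)  # joint minimizer of the full problem
```

In this simple setting the cyclic iterates should approach the joint minimizer `theta_joint`, which mirrors the type of convergence claimed in the abstract; the general conditions under which that holds are the subject of the paper and are not captured by this example.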

Acknowledgements

This work was partially supported by US Navy Contract N00024-03-D-6606 and a JHU/APL Sabbatical Professorship. I appreciate comments from Dr. Steve Corley (JHU/APL) on a key aspect of Theorem 3.1 and assistance from former student, John Rumbavage, and current student, Qi Wang, with the numerical study in Sect. 5. Preliminary versions of parts of this paper were presented at the 2006 American Control Conference, the 2011 Conference on Information Sciences and Systems, and the 2011 IEEE Conference on Decision and Control; these conference versions included neither the complete theory of this paper nor the numerical study here.

Additional information

Communicated by Johannes O. Royset.

About this article

Cite this article

Spall, J.C. Cyclic Seesaw Process for Optimization and Identification. J Optim Theory Appl 154, 187–208 (2012). https://doi.org/10.1007/s10957-012-0001-1

DOI: https://doi.org/10.1007/s10957-012-0001-1