Abstract

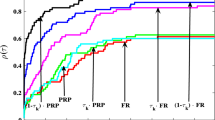

The Barzilai–Borwein conjugate gradient methods, first proposed by Dai and Kou (Sci China Math 59(8):1511–1524, 2016), are highly efficient for strictly convex quadratic minimization. In this paper, we present an efficient Barzilai–Borwein conjugate gradient method for unconstrained optimization. Motivated by the Barzilai–Borwein method and the linear conjugate gradient method, we derive a new search direction that satisfies the sufficient descent condition, based on a quadratic model in a two-dimensional subspace, and we design a new strategy for choosing the initial stepsize. We also propose a generalized Wolfe line search, which is nonmonotone and avoids a numerical drawback of the original Wolfe line search. Under mild conditions, we establish the global convergence and the R-linear convergence of the proposed method, and we analyze its convergence for convex functions in particular. Numerical results on the CUTEr library and on the test problem collection given by Andrei show that the proposed method is superior to two well-known conjugate gradient methods, proposed by Dai and Kou (SIAM J Optim 23(1):296–320, 2013) and by Hager and Zhang (SIAM J Optim 16(1):170–192, 2005), respectively.
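For readers unfamiliar with the Barzilai–Borwein (BB) stepsize that motivates the method, the following is a minimal Python sketch of the classical BB gradient iteration of Barzilai and Borwein (1988) on a strictly convex quadratic. It is not the algorithm proposed in this paper: it omits the two-dimensional subspace model, the conjugate gradient direction, and the generalized Wolfe line search. The function name `bb_gradient`, the test matrix, and the tolerances are illustrative assumptions.

```python
import numpy as np


def bb_gradient(A, b, x0, tol=1e-8, max_iter=10000):
    """Minimize f(x) = 0.5 * x^T A x - b^T x for symmetric positive definite A.

    Uses the first Barzilai-Borwein stepsize alpha_k = (s^T s) / (s^T y),
    where s = x_k - x_{k-1} and y = g_k - g_{k-1}.
    Returns the final iterate and the number of iterations performed.
    """
    x = np.asarray(x0, dtype=float)
    g = A @ x - b  # gradient of the quadratic at x
    # Illustrative choice for the first step: the exact steepest descent stepsize.
    alpha = (g @ g) / (g @ (A @ g)) if g @ g > 0 else 1.0
    for k in range(max_iter):
        if np.linalg.norm(g) <= tol:
            return x, k
        x_new = x - alpha * g
        g_new = A @ x_new - b
        s = x_new - x
        y = g_new - g
        # For SPD A we have s^T y = s^T A s > 0 whenever s != 0.
        alpha = (s @ s) / (s @ y)
        x, g = x_new, g_new
    return x, max_iter


if __name__ == "__main__":
    A = np.diag([1.0, 10.0, 100.0])  # hypothetical test problem
    b = np.ones(3)
    x, iters = bb_gradient(A, b, np.zeros(3))
    print("solution:", x, "iterations:", iters)
```

Note that no line search is used here, so the objective values need not decrease monotonically. This nonmonotone behaviour is characteristic of the BB method.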

References

Yuan, Y.X.: A review on subspace methods for nonlinear optimization. In: Proceedings of the International Congress of Mathematicians 2014, Seoul, Korea, pp. 807–827 (2014)

Yuan, Y.X., Stoer, J.: A subspace study on conjugate gradient algorithms. Z. Angew. Math. Mech. 75(1), 69–77 (1995)

Andrei, N.: An accelerated subspace minimization three-term conjugate gradient algorithm for unconstrained optimization. Numer. Algorithms 65(4), 859–874 (2014)

Yang, Y.T., Chen, Y.T., Lu, Y.L.: A subspace conjugate gradient algorithm for large-scale unconstrained optimization. Numer. Algorithms 76(3), 813–828 (2017)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Dai, Y.H., Kou, C.X.: A Barzilai–Borwein conjugate gradient method. Sci. China Math. 59(8), 1511–1524 (2016)

Dai, Y.H., Kou, C.X.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23(1), 296–320 (2013)

Hager, W.W., Zhang, H.C.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005)

Gould, N.I.M., Orban, D., Toint, Ph.L.: CUTEr and SifDec: a constrained and unconstrained testing environment, revisited. ACM Trans. Math. Softw. 29(4), 373–394 (2003)

Andrei, N.: An unconstrained optimization test functions collection. Adv. Model. Optim. 10, 147–161 (2008)

Fletcher, R., Reeves, C.: Function minimization by conjugate gradients. Comput. J. 7(2), 149–154 (1964)

Hestenes, M.R., Stiefel, E.L.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49(6), 409–436 (1952)

Polyak, B.T.: The conjugate gradient method in extreme problems. USSR Comput. Math. Math. Phys. 9, 94–112 (1969)

Dai, Y.H., Yuan, Y.X.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999)

Dai, Y.H., Yuan, Y.X.: Nonlinear Conjugate Gradient Methods. Shanghai Scientific and Technical Publishers, Shanghai (2000)

Hager, W.W., Zhang, H.C.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2(1), 35–58 (2006)

Dong, X.L., Liu, H.W., He, Y.B.: A self-adjusting conjugate gradient method with sufficient descent condition and conjugacy condition. J. Optim. Theory Appl. 165(1), 225–241 (2015)

Zhang, L., Zhou, W.J., Li, D.H.: Global convergence of a modified Fletcher–Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104(4), 561–572 (2006)

Dong, X.L., Liu, H.W., He, Y.B.: A modified Hestenes–Stiefel conjugate gradient method with sufficient descent condition and conjugacy condition. J. Comput. Appl. Math. 281, 239–249 (2015)

Babaie-Kafaki, S., Ghanbari, R.: The Dai–Liao nonlinear conjugate gradient method with optimal parameter choices. Eur. J. Oper. Res. 234(3), 625–630 (2014)

Andrei, N.: Another conjugate gradient algorithm with guaranteed descent and the conjugacy conditions for large-scale unconstrained optimization. J. Optim. Theory Appl. 159(3), 159–182 (2013)

Raydan, M.: On the Barzilai and Borwein choice of steplength for the gradient method. IMA J. Numer. Anal. 13(3), 321–326 (1993)

Dai, Y.H., Liao, L.Z.: R-linear convergence of the Barzilai and Borwein gradient method. IMA J. Numer. Anal. 22(1), 1–10 (2002)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7(1), 26–33 (1997)

Liu, Z.X., Liu, H.W., Dong, X.L.: An efficient gradient method with approximate optimal stepsize for the strictly convex quadratic minimization problem. Optimization 67(3), 427–440 (2018)

Biglari, F., Solimanpur, M.: Scaling on the spectral gradient method. J. Optim. Theory Appl. 158(2), 626–635 (2013)

Liu, Z.X., Liu, H.W., Dong, X.L.: A new adaptive Barzilai and Borwein method for unconstrained optimization. Optim. Lett. 12(4), 845–873 (2018)

Dai, Y.H., Yuan, J.Y., Yuan, Y.X.: Modified two-point stepsize gradient methods for unconstrained optimization problems. Comput. Optim. Appl. 22(1), 103–109 (2002)

Liu, Z.X., Liu, H.W.: An efficient gradient method with approximate optimal stepsize for large-scale unconstrained optimization. Numer. Algorithms 78(1), 21–39 (2018)

Liu, Z.X., Liu, H.W.: Several efficient gradient methods with approximate optimal stepsizes for large scale unconstrained optimization. J. Comput. Appl. Math. 328, 400–413 (2018)

Yuan, Y.X.: A modified BFGS algorithm for unconstrained optimization. IMA J. Numer. Anal. 11(3), 325–332 (1991)

Andrei, N.: Open problems in nonlinear conjugate gradient algorithms for unconstrained optimization. Bull. Malays. Math. Sci. Soc. 34(2), 319–330 (2011)

Zhou, B., Gao, L., Dai, Y.H.: Gradient methods with adaptive stepsizes. Comput. Optim. Appl. 35(1), 69–86 (2006)

Zhang, H.C., Hager, W.W.: A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 14(4), 1043–105 (2004)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Hager, W.W., Zhang, H.C.: The limited memory conjugate gradient method. SIAM J. Optim. 23(4), 2150–2168 (2013)

Hager, W.W., Zhang, H.C.: Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 32(1), 113–137 (2006)

Acknowledgements

We would like to thank the anonymous referees and the editor for their valuable comments. We would also like to thank Professor Dai, Y.H., Dr. Kou Caixia and Dr. Chen Weikun for their help with the numerical experiments, and Professors Hager, W.W. and Zhang, H.C. for their C code of CG_DESCENT (5.3). This research is supported by the National Science Foundation of China (No. 11461021), the Guangxi Science Foundation (Nos. 2015GXNSFAA139011, 2017GXNSFBA198031), the Shaanxi Science Foundation (No. 2017JM1014), the Scientific Research Project of Hezhou University (Nos. 2014YBZK06, 2016HZXYSX03), and the Guangxi Colleges and Universities Key Laboratory of Symbolic Computation and Engineering Data Processing.

About this article

Cite this article

Liu, H., Liu, Z. An Efficient Barzilai–Borwein Conjugate Gradient Method for Unconstrained Optimization. J Optim Theory Appl 180, 879–906 (2019). https://doi.org/10.1007/s10957-018-1393-3

DOI: https://doi.org/10.1007/s10957-018-1393-3

Keywords

- Barzilai–Borwein method

- Barzilai–Borwein conjugate gradient method

- Subspace minimization

- R-linear convergence

- Nonmonotone Wolfe line search