Abstract

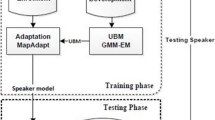

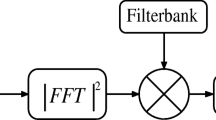

A human speaker recognition expert often inspects the speech spectrogram at several different scales when identifying a speaker, especially when the utterance is short. Inspired by this practice, this paper proposes a novel multi-resolution time frequency (MRTF) feature extraction method. The feature is obtained by performing a 2-dimensional discrete cosine transform (DCT) at multiple scales on the time-frequency spectrogram matrix, and then selecting and combining the multi-scale transformed elements into the final feature. Compared to traditional Mel-Frequency Cepstral Coefficient (MFCC) feature extraction, the proposed method makes better use of multi-resolution temporal-frequency information. Beyond this, we also propose three complementary strategies for combining MFCC and MRTF: at the feature level, at the i-vector level and at the score level. Comparing their performance, we find that the best results are obtained by combination at the i-vector level. On the three NIST 2008 Speaker Recognition Evaluation datasets, the proposed method improves performance more under the short utterance condition than under the long utterance condition. After the combination, we achieve an EER of 11.32 % and a MinDCF of 0.054 in the 10sec-10sec trials on the male dataset, which is an absolute EER improvement of 3 % over the best previously reported result in this field.
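The multi-scale 2-D DCT idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not give the scales, the element selection rule or the input representation, so the function name `mrtf_frame`, the block widths `(2, 4, 8)`, and the choice to keep the low-order `keep_f` × `keep_t` coefficients are all assumptions made for illustration. The input `spec` is assumed to be a time-frequency matrix with one row per frequency band and one column per frame.

```python
import math


def dct2_1d(x):
    """Unnormalized DCT-II of a sequence of numbers."""
    n = len(x)
    return [
        sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        for k in range(n)
    ]


def dct2_2d(block):
    """Separable 2-D DCT-II of block[freq][time]; returns out[k_freq][k_time]."""
    along_time = [dct2_1d(row) for row in block]               # [freq][k_time]
    along_freq = [dct2_1d(list(c)) for c in zip(*along_time)]  # [k_time][k_freq]
    return [list(r) for r in zip(*along_freq)]                 # [k_freq][k_time]


def mrtf_frame(spec, t, widths=(2, 4, 8), keep_f=3, keep_t=2):
    """Hypothetical multi-resolution feature for frame t.

    For each assumed block width, cut a block of frames around t, apply a
    2-D DCT, and keep the low-order keep_f x keep_t coefficients. The
    per-scale coefficients are concatenated into one feature vector.
    Requires len(spec) >= keep_f, min(widths) >= keep_t, and at least
    max(widths) frames in spec.
    """
    n_frames = len(spec[0])
    feature = []
    for w in widths:
        # Clamp the block so it stays inside the spectrogram.
        start = min(max(t - w // 2, 0), n_frames - w)
        block = [row[start:start + w] for row in spec]
        coeffs = dct2_2d(block)
        feature.extend(
            coeffs[kf][kt] for kf in range(keep_f) for kt in range(keep_t)
        )
    return feature


# Toy input: 4 frequency bands x 8 frames of constant energy.
toy_spec = [[1.0] * 8 for _ in range(4)]
print(len(mrtf_frame(toy_spec, t=3)))  # 3 scales x 3 x 2 coefficients = 18
```

The pure-Python DCT keeps the sketch dependency-free; in practice a library routine such as `scipy.fft.dctn` would normally replace `dct2_2d`. Wider blocks capture slower temporal structure and narrower blocks capture finer detail, which is the multi-resolution intuition the abstract describes.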



Acknowledgements

This work is supported by the National Natural Science Foundation of China (Projects 61370034, 61273268, 61005019, 90920302) and by the Beijing Natural Science Foundation Program (Project KZ201110005005).

Cite this article

Li, ZY., Zhang, WQ. & Liu, J. Multi-resolution time frequency feature and complementary combination for short utterance speaker recognition. Multimed Tools Appl 74, 937–953 (2015). https://doi.org/10.1007/s11042-013-1705-4
