Abstract

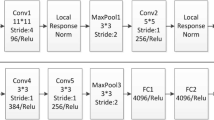

Automatic image annotation aims to assign relevant keywords to images and has become a research focus. Many techniques have been proposed for this problem over the last decade, with promising performance on standard datasets. In this paper we propose a novel automatic image annotation technique. Our method uses a label transfer mechanism to recommend promising tags for each image automatically, using the category information of the images. Because image representation is one of the key techniques in image annotation, we use sparse-coding-based spatial pyramid matching and deep convolutional neural networks to model image features. A metric learning technique is then used to combine these features into a more effective image representation. Experimental results show that the proposed method achieves results similar to or better than those of state-of-the-art methods on three standard image datasets.
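As a rough illustration of the idea in the abstract, the sketch below combines two feature types with a weighted distance and transfers tags from the nearest training images that share the query image's category. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the fixed `weights` (standing in for what a metric learning step would produce), the Euclidean per-feature distance, the simple vote counting, and the toy data are all hypothetical.

```python
import numpy as np
from collections import Counter


def combined_distance(query_feats, train_feats, weights):
    """Weighted sum of per-feature-type Euclidean distances.

    query_feats: list of 1-D arrays, one per feature type.
    train_feats: list of 2-D arrays of shape (n_train, dim), one per type.
    weights: one weight per feature type (assumed given here; a metric
             learning step would normally estimate them).
    """
    total = np.zeros(train_feats[0].shape[0])
    for w, q, t in zip(weights, query_feats, train_feats):
        total += w * np.linalg.norm(t - q, axis=1)
    return total


def transfer_labels(query_feats, query_category, train_feats,
                    train_categories, train_tags, weights, k=2, n_tags=2):
    """Recommend tags by voting among the k nearest same-category images."""
    dist = combined_distance(query_feats, train_feats, weights)
    # Restrict candidates to training images in the query's category.
    same = np.flatnonzero(np.asarray(train_categories) == query_category)
    if same.size == 0:
        # Fall back to all training images if the category is unseen.
        same = np.arange(len(train_categories))
    nearest = same[np.argsort(dist[same], kind="stable")[:k]]
    votes = Counter()
    for idx in nearest:
        votes.update(train_tags[idx])
    return [tag for tag, _ in votes.most_common(n_tags)]


# Toy data: two feature types per image (stand-ins for sparse-coding
# and CNN descriptors), one category and a tag list per training image.
sparse_train = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [0.0, 0.2]])
cnn_train = np.array([[1.0], [1.1], [9.0], [1.0]])
categories = ["beach", "beach", "city", "city"]
tags = [["sea", "sand"], ["sea", "sky"], ["building", "road"], ["road", "car"]]

suggested = transfer_labels(
    [np.array([0.0, 0.05]), np.array([1.0])], "beach",
    [sparse_train, cnn_train], categories, tags, weights=[0.5, 0.5])
print(suggested)
```

In this toy setup the fourth training image is visually close to the query but belongs to a different category, so its tags are never considered; that is the effect the category restriction is meant to illustrate.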

Notes

The source code of this algorithm can be downloaded from http://www.eecs.umich.edu/~honglak/softwares/nips06-sparsecoding.htm

MSRC images can be downloaded from http://research.microsoft.com/en-us/projects/objectclassrecognition/

Acknowledgements

This work is supported by the Natural Science Foundation of China (No. 61572162) and the Zhejiang Provincial Key Science and Technology Project Foundation (No. 2018C01012).

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Zhang, W., Hu, H., Hu, H. et al. Automatic image annotation via category labels. Multimed Tools Appl 79, 11421–11435 (2020). https://doi.org/10.1007/s11042-019-07929-y

DOI: https://doi.org/10.1007/s11042-019-07929-y