Abstract

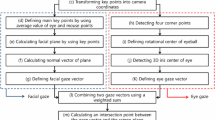

This paper proposes a method for tracking the gaze of a person at a distance of about 2 m using a single pan-tilt-zoom (PTZ) camera. In the proposed method, images for gaze tracking are acquired by switching the camera between a wide-angle mode and a narrow-angle mode, depending on the location of the person. In the wide-angle mode, the camera detects a face present in its field of view (FOV). Once the location of the face has been calculated, the camera switches to the narrow-angle mode. The images acquired in the narrow-angle mode contain the information about the gaze direction of the distant person. Calculating the gaze direction consists of two steps: head pose estimation and gaze direction calculation. Head pose is estimated from the locations of the eyes and nose in the face. The gaze direction is then obtained by segmenting the pupil with a deformable template and locating the eye center from the end points of both eyes and the head pose information. This paper shows that the proposed gaze tracking algorithm can effectively track the direction of a person's gaze at varying distances.
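Switching from the wide-angle to the narrow-angle mode requires pan and tilt offsets that bring the detected face to the image center, plus a zoom factor that enlarges it. The sketch below is a minimal illustration under a pinhole-camera assumption with the principal point at the image center; the focal length in pixels, the target face width, and the helper name `ptz_command` are hypothetical and not taken from the paper.

```python
import math

def ptz_command(face_box, image_size, focal_px, target_face_width_px):
    """Hypothetical helper: pan/tilt (degrees) and zoom factor for a detected face.

    face_box: (x, y, w, h) of the face in the wide-angle image, in pixels.
    image_size: (width, height) of the wide-angle image.
    focal_px: wide-angle focal length in pixels (assumed known from calibration).
    target_face_width_px: desired face width in the narrow-angle image (assumed).
    """
    x, y, w, h = face_box
    # Assumption: principal point at the image center.
    cx, cy = image_size[0] / 2.0, image_size[1] / 2.0
    du = (x + w / 2.0) - cx  # horizontal offset of the face center
    dv = (y + h / 2.0) - cy  # vertical offset (image y grows downward)
    # Pinhole model: angle subtended by the pixel offset at the focal length.
    pan_deg = math.degrees(math.atan2(du, focal_px))
    tilt_deg = math.degrees(math.atan2(dv, focal_px))  # positive = tilt down
    # Image size scales roughly linearly with zoom; never zoom out below 1x.
    zoom = max(1.0, target_face_width_px / float(w))
    return pan_deg, tilt_deg, zoom
```

For a 640x480 wide-angle frame with a 100-pixel face already centered, `ptz_command((270, 190, 100, 100), (640, 480), 500.0, 400.0)` asks for no pan or tilt and a 4x zoom. A real PTZ unit would also need its own mapping from these angles to motor commands, which this sketch does not model.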
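The abstract states that head pose is estimated from the eye and nose locations but does not give the model. One simple possibility, shown here only as an illustration and not as the authors' method, is a weak-perspective yaw estimate. Assume the eyes lie `2e` apart on a line and the nose tip sits a depth `h` in front of that line. Rotating the head by yaw θ projects the eyes to `∓e·cos θ` and the nose tip to `h·sin θ`, so the nose-to-eye distances are `d_l = e·cos θ + h·sin θ` and `d_r = e·cos θ − h·sin θ`, giving `(d_l − d_r)/(d_l + d_r) = (h/e)·tan θ`. The ratio `h/e` (`depth_ratio` below) is an assumed anthropometric constant.

```python
import math

def estimate_yaw(eye_left_x, eye_right_x, nose_x, depth_ratio=0.6):
    """Weak-perspective yaw estimate (radians) from horizontal image positions.

    eye_left_x / eye_right_x: x-coordinates of the eyes on the image's left / right.
    nose_x: x-coordinate of the nose tip.
    depth_ratio: assumed h/e, the nose-tip depth over half the inter-eye
    distance (illustrative value, not from the paper).
    Positive yaw means the nose has moved toward the eye on the image's right.
    """
    d_l = nose_x - eye_left_x      # nose-to-left-eye distance
    d_r = eye_right_x - nose_x     # nose-to-right-eye distance
    r = (d_l - d_r) / (d_l + d_r)  # equals depth_ratio * tan(yaw)
    return math.atan(r / depth_ratio)
```

A frontal face (nose midway between the eyes) yields zero yaw. Pitch could be handled analogously with vertical distances, and a full 3-D pose would more commonly be solved from several landmarks with a perspective-n-point method.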
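The abstract names a deformable template for segmenting the pupil without giving its energy function. A minimal circular version is sketched below as an assumption: it searches over center and radius for the circle whose interior is darkest relative to a thin ring just outside it. The exhaustive grid search, the ring width, and the name `fit_pupil_template` are illustrative choices, not the paper's formulation.

```python
import numpy as np

def fit_pupil_template(eye, radii, ring=2):
    """Fit a circular template (cx, cy, r) to a dark pupil in a grayscale eye patch.

    Energy = mean intensity in a ring just outside the circle minus the mean
    intensity inside it; the best fit maximizes this contrast.
    eye: 2-D array of intensities; radii: candidate radii in pixels;
    ring: width of the outer ring in pixels (illustrative).
    Returns None if no candidate circle fits inside the patch.
    """
    eye = np.asarray(eye, dtype=float)
    h, w = eye.shape
    yy, xx = np.mgrid[0:h, 0:w]
    best, best_params = -np.inf, None
    for r in radii:
        # Only consider centers whose outer ring stays inside the patch.
        for cy in range(r + ring, h - r - ring):
            for cx in range(r + ring, w - r - ring):
                d2 = (xx - cx) ** 2 + (yy - cy) ** 2
                inside = d2 <= r * r
                outer = (d2 > r * r) & (d2 <= (r + ring) ** 2)
                energy = eye[outer].mean() - eye[inside].mean()
                if energy > best:
                    best, best_params = energy, (cx, cy, r)
    return best_params
```

On a synthetic 40x40 patch of intensity 200 with a disk of intensity 30 and radius 5 centered at (22, 18), searching `radii=range(3, 9)` recovers `(22, 18, 5)`. A practical tracker would refine the template by gradient steps from the previous frame rather than re-searching the whole patch.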

Acknowledgements

This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2018-0-01419) supervised by the IITP (Institute for Information & communications Technology Promotion).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Lee, GJ., Jang, SW. & Kim, GY. Pupil detection and gaze tracking using a deformable template. Multimed Tools Appl 79, 12939–12958 (2020). https://doi.org/10.1007/s11042-020-08638-7

DOI: https://doi.org/10.1007/s11042-020-08638-7