Abstract

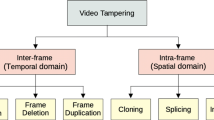

Forgers tamper with videos to modify or remove their content for malicious purposes, and many video authentication algorithms have been developed to detect such tampering. At present, however, very few standardized and diverse tampered video datasets are publicly available for the reliable verification and authentication of forensic algorithms. In this paper, we develop a Temporal Domain Tampered Video Dataset (TDTVD) of 210 videos created using frame deletion, frame duplication and frame insertion. Of these 210 videos, 120 are based on Event/Object/Person (EOP) removal or modification, and the remaining 90 are based on Smart Tampering (ST), i.e. multiple tampering. Sixteen original videos from SULFA and 24 original videos from YouTube (VTD dataset) are used to create the tampered videos. The EOP-based videos comprise 40 videos for each tampering type: frame deletion, frame insertion and frame duplication. Each ST-based video contains multiple tampering operations in a single video, developed in three categories: (1) 10 frames tampered (by frame deletion, frame duplication or frame insertion) at 3 different locations, (2) 20 frames tampered at 3 different locations, and (3) 30 frames tampered at 3 different locations in the video. The proposed TDTVD dataset thus covers all temporal-domain tampering types, including videos with multiple tampering. The resulting tampered videos range from 6 s to 18 s in length, with a resolution of 320 × 240 or 640 × 360 pixels. The database comprises static and dynamic videos of various activities, such as traffic, sports, news, a rolling ball, an airport, a garden, highways, and zooming in and out. The entire dataset is publicly accessible, which should be especially valuable to researchers testing their algorithms on a large and varied collection.
Detailed ground truth information, such as the tampering type, the tampered frames and the location of tampering, is also provided for each tampered video to support the verification of tampering detection algorithms. The dataset is compared with state-of-the-art datasets and validated with two video tampering detection methods.
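To illustrate how an ST-style video with several tampered blocks can be produced while keeping its ground truth consistent, here is a minimal Python sketch for the frame-deletion case. It is not the authors' MATLAB procedure: frames are modeled as list elements (video decoding and encoding are omitted), the function name `delete_at_locations`, the 300-frame video and the start positions are hypothetical, and it assumes each of the three blocks is 10 frames long. Frame numbers are 1-based and inclusive, as in the Appendix.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def delete_at_locations(
    frames: Sequence[T], starts: Sequence[int], length: int
) -> Tuple[List[T], List[Tuple[int, int]]]:
    """Delete `length` frames at each 1-based start position in `starts`.

    Returns the tampered frame list and the ground-truth (first, last)
    ranges, given as 1-based, inclusive frame numbers of the ORIGINAL video.
    """
    if length < 1:
        raise ValueError("length must be positive")
    ranges = sorted((s, s + length - 1) for s in starts)
    # Reject overlapping blocks so the ground truth stays unambiguous.
    for (_, last_prev), (first_next, _) in zip(ranges, ranges[1:]):
        if first_next <= last_prev:
            raise ValueError("tampered blocks overlap")
    if ranges and (ranges[0][0] < 1 or ranges[-1][1] > len(frames)):
        raise ValueError("block lies outside the video")
    out = list(frames)
    # Delete from the last block backwards so earlier frame numbers do not shift.
    for first, last in reversed(ranges):
        del out[first - 1 : last]
    return out, ranges


# Hypothetical 300-frame video with hypothetical start positions.
original = list(range(1, 301))
tampered, truth = delete_at_locations(original, starts=[40, 120, 200], length=10)
print(len(tampered), truth)  # 270 [(40, 49), (120, 129), (200, 209)]
```

Deleting the blocks in reverse order is the design choice that matters here: removing the first block first would shift every later block's frame numbers, so the recorded ground truth would no longer match the original video.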


References

Amerini I, Ballan L, Caldelli R, Del Bimbo A, Serra G (2011) A SIFT-based forensic method for copy-move attack detection and transformation recovery. IEEE Trans Inf Forensics Secur 6:1099–1110. https://doi.org/10.1109/TIFS.2011.2129512

Ardizzone E, Mazzola G (2015) Image analysis and processing – ICIAP 2015, vol 9279, pp 665–675. https://doi.org/10.1007/978-3-319-23231-7

Ardizzone E, Bruno A, Mazzola G (2015) Copy-move forgery detection by matching triangles of keypoints. IEEE Trans Inf Forensics Secur 10:2084–2094

Bestagini P, Milani S, Tagliasacchi M, Tubaro S (2013) Local tampering detection in video sequences. In: 2013 IEEE 15th Int. Work. Multimed. Signal Process., pp 488–493

Chen S, Tan S, Li B, Huang J (2016) Automatic detection of object-based forgery in advanced video. IEEE Trans Circuits Syst Video Technol 26:2138–2151

Christlein V, Riess C, Jordan J, Riess C, Angelopoulou E (2012) An evaluation of popular copy-move forgery detection approaches. IEEE Trans Inf Forensics Secur 7:1841–1854. https://doi.org/10.1109/TIFS.2012.2218597

COVERAGE – a novel database for copy-move forgery detection (2016). Advanced Digital Sciences Center, University of Illinois at Urbana-Champaign, Singapore; Electrical and Computer Engineering and C.S.L., University of Illinois at Urbana-Champaign, IL, USA

D’Avino D, Cozzolino D, Poggi G, Verdoliva L (2017) Autoencoder with recurrent neural networks for video forgery detection. Electron Imaging 2017:92–99

Dong J, Wang W, Tan T (2013) CASIA image tampering detection evaluation database. In: 2013 IEEE China Summit Int. Conf. Signal Inf. Process., pp 422–426

Gloe T, Böhme R (2010) The 'Dresden Image Database' for benchmarking digital image forensics. In: Proc. 2010 ACM Symp. Appl. Comput., pp 1584–1590

Gupta P, Raj A, Singh S, Yadav S (2019) Emerging trends in expert applications and security. Springer Singapore. https://doi.org/10.1007/978-981-13-2285-3

Ismael Al-Sanjary O, Ahmed AA, Sulong G (2016) Development of a video tampering dataset for forensic investigation. Forensic Sci Int 266:565–572. https://doi.org/10.1016/j.forsciint.2016.07.013

Johnston P, Elyan E (2019) A review of digital video tampering: From simple editing to full synthesis. Digit Investig. https://doi.org/10.1016/j.diin.2019.03.006

Le TT, Almansa A, Gousseau Y, Masnou S (2017) Motion-consistent video inpainting. In: 2017 IEEE Int. Conf. Image Process. (ICIP), pp 2094–2098

Lo Presti L, La Cascia M (2014) Concurrent photo sequence organization. Multimed Tools Appl 68:777–803

Qadir G, Yahaya S, Ho ATS (2012) Surrey University Library for Forensic Analysis (SULFA) of video content, pp 121–121. https://doi.org/10.1049/cp.2012.0422

Singh RD, Aggarwal N (2018) Video content authentication techniques: a comprehensive survey. Multimed Syst 24:211–240. https://doi.org/10.1007/s00530-017-0538-9

Sitara K, Mehtre BM (2016) Digital video tampering detection: An overview of passive techniques. Digit Investig 18:8–22. https://doi.org/10.1016/j.diin.2016.06.003

Sitara K, Mehtre BM (2018) Detection of inter-frame forgeries in digital videos. Forensic Sci Int 289:186–206. https://doi.org/10.1016/j.forsciint.2018.04.056

REWIND project: Video copy-move forgeries dataset (n.d.)

Wang W, Jiang X, Wang S, Wan M, Sun T (2013) Identifying video forgery process using optical flow. In: Int. Work. Digit. Watermarking, pp 244–257

Wang Q, Li Z, Zhang Z, Ma Q (2014) Video inter-frame forgery identification based on consistency of correlation coefficients of gray values. J Comput Commun 2:51–57

Zampoglou M, Papadopoulos S, Kompatsiaris Y (2015) Detecting image splicing in the wild (web). In: 2015 IEEE Int. Conf. Multimed. Expo Work, pp 1–6

Zampoglou M, Markatopoulou F, Mercier G, Touska D, Apostolidis E, Papadopoulos S, Cozien R, Patras I, Mezaris V, Kompatsiaris I (2019) Detecting tampered videos with multimedia forensics and deep learning. In: Lecture Notes in Computer Science, vol 11295, pp 374–386. https://doi.org/10.1007/978-3-030-05710-7_31


Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix


This appendix summarizes the steps used to implement temporal-domain tampering based on Event/Object/Person (EOP) removal or modification.

1. Frame Deletion (EOP)

   1. The original input video is converted into frames using MATLAB.
   2. The frames containing the event/object/person to be deleted are selected manually from the original video.
   3. The start frame number del1 and the end frame number del2 of the selected frames are recorded.
   4. Frames del1 to del2 are deleted.
   5. The remaining frames are converted back into a video using a MATLAB program.
   6. An actual example of frame deletion is shown in Fig. 3. In the original video “02_original.avi”, frames del1 = 57 to del2 = 106 (50 frames), containing the event “ball rolling on the table”, are selected.
   7. To eliminate this event, frames del1 = 57 to del2 = 106 are deleted from the original video frames.
   8. The remaining frames are converted into a video to produce the tampered video “Tampered_EOP_02_original_framedel.avi”.
   9. Table 2 lists all frame-deleted tampered videos.
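The core of steps 3 to 5 is an index operation on the frame sequence. The following is a minimal Python sketch of that operation, not the authors' MATLAB program: frames are modeled as list elements, reading and writing the AVI file is omitted, `delete_frames` is a hypothetical name, and the 300-frame length is a stand-in because the length of “02_original.avi” is not given here. Only del1 = 57 and del2 = 106 come from the Fig. 3 example.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def delete_frames(frames: Sequence[T], del1: int, del2: int) -> List[T]:
    """Return a copy of `frames` with frames del1..del2 removed.

    del1 and del2 are 1-based, inclusive frame numbers, as in the steps above.
    """
    if not 1 <= del1 <= del2 <= len(frames):
        raise ValueError("need 1 <= del1 <= del2 <= number of frames")
    # Python slices are 0-based and end-exclusive, hence the "- 1" on del1.
    return list(frames[: del1 - 1]) + list(frames[del2:])


# Frame numbers from the Fig. 3 example; the 300-frame length is hypothetical.
frames = list(range(1, 301))
tampered = delete_frames(frames, del1=57, del2=106)
print(len(frames) - len(tampered))  # 50
print(tampered[55], tampered[56])  # 56 107
```

The second line of output shows the splice point: original frame 56 is now directly followed by original frame 107, which is the temporal discontinuity a detection method would need to find.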

2. Frame Duplication (EOP)

   1. The original input video is converted into frames using MATLAB.
   2. The frames containing the event/object/person to be duplicated (copied) are selected manually from the original video.
   3. The start frame number dup1 and the end frame number dup2 of the selected frames are recorded.
   4. Frames dup1 to dup2 are copied and pasted into the original video at another location.
   5. All frames are converted into a video using a MATLAB program.
   6. An actual example of frame duplication is shown in Fig. 4. In the original video “02_original.avi”, frames dup1 = 55 to dup2 = 105 (51 frames), containing the event “ball rolling on the table”, are selected.
   7. To duplicate this event, frames dup1 = 55 to dup2 = 105 are copied and pasted into the original video frames at frame numbers 145 to 195.
   8. As a result, this event is seen twice in the frame-duplicated video.
   9. All frames are converted into a video to produce the tampered video “Tampered_EOP_02_original_framedup.avi”.
   10. Table 3 lists all frame-duplicated tampered videos.
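The copy-and-paste in steps 4 and 7 can be sketched in Python as below. This is an illustration under assumptions, not the authors' MATLAB code: frames are list elements, file input/output is omitted, `duplicate_frames` is a hypothetical name, and the 300-frame length is a stand-in. The text does not say whether the pasted frames are inserted or overwrite frames 145 to 195; this sketch assumes insertion, so the tampered video grows by 51 frames and the copied frames occupy frame numbers 145 to 195.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def duplicate_frames(frames: Sequence[T], dup1: int, dup2: int, at: int) -> List[T]:
    """Copy frames dup1..dup2 and insert the copy so it begins at frame `at`.

    All numbers are 1-based and inclusive. `at` is the frame number of the
    first copied frame in the tampered video; original frames from `at`
    onwards are shifted later (assumption: insertion, not overwriting).
    """
    if not 1 <= dup1 <= dup2 <= len(frames):
        raise ValueError("need 1 <= dup1 <= dup2 <= number of frames")
    if not 1 <= at <= len(frames) + 1:
        raise ValueError("insertion point outside the video")
    copy = list(frames[dup1 - 1 : dup2])
    return list(frames[: at - 1]) + copy + list(frames[at - 1 :])


# Fig. 4 example: frames 55..105 reappear at frame numbers 145..195.
frames = list(range(1, 301))  # hypothetical 300-frame video
tampered = duplicate_frames(frames, dup1=55, dup2=105, at=145)
print(len(tampered))  # 351
print(tampered[144:195] == frames[54:105])  # True
```

If the dataset instead overwrote frames 145 to 195, the video length would stay unchanged; only the slice that follows `copy` would differ (`frames[at - 1 + len(copy):]`).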

3. Frame Insertion (EOP)

   1. The original video and the insertion video are converted into frames using MATLAB.
   2. The frames containing the event/object/person to be inserted into the original video are selected manually from the insertion video frames.
   3. The start frame number ins1 and the end frame number ins2 of the selected insertion-video frames are recorded.
   4. Frames ins1 to ins2 are inserted into the original video frames.
   5. All frames are converted into a video using a MATLAB program.
   6. An actual example of frame insertion is shown in Fig. 5. As seen in Fig. 5b, 70 frames (frames ins1 to ins2) containing the event “ball rolling on the table” are selected from the video “04_original.avi”.
   7. These frames are inserted into the original video “02_original.avi” starting at frame number 41.
   8. The frames are inserted in such a way that the tampered video looks continuous.
   9. All frames are converted into a video to produce the tampered video “Tampered_EOP_02_original_frameins.avi”.
   10. Table 4 lists all frame-inserted tampered videos.
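Frame insertion differs from duplication only in that the pasted frames come from a second video. A minimal Python sketch of steps 4 and 7 follows; it is not the authors' MATLAB code. Frames are tagged with their source video so their origin stays visible, file input/output is omitted, and `insert_frames`, the 200-frame lengths and the values ins1 = 11 and ins2 = 80 are hypothetical: the text only states that 70 frames are inserted starting at frame 41.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def insert_frames(
    original: Sequence[T], donor: Sequence[T], ins1: int, ins2: int, at: int
) -> List[T]:
    """Insert donor frames ins1..ins2 so they start at frame `at` of the result.

    All frame numbers are 1-based and inclusive.
    """
    if not 1 <= ins1 <= ins2 <= len(donor):
        raise ValueError("need 1 <= ins1 <= ins2 <= number of donor frames")
    if not 1 <= at <= len(original) + 1:
        raise ValueError("insertion point outside the original video")
    clip = list(donor[ins1 - 1 : ins2])
    return list(original[: at - 1]) + clip + list(original[at - 1 :])


# Each frame is a (source video, frame number) tag; lengths are hypothetical.
original: List[Tuple[str, int]] = [("02_original", n) for n in range(1, 201)]
donor: List[Tuple[str, int]] = [("04_original", n) for n in range(1, 201)]
tampered = insert_frames(original, donor, ins1=11, ins2=80, at=41)
print(len(tampered))  # 270
print(tampered[39], tampered[40], tampered[110])
# ('02_original', 40) ('04_original', 11) ('02_original', 41)
```

Step 8, making the tampered video look continuous, is a manual visual choice of ins1, ins2 and the insertion point; the sketch does not check it.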



About this article

Cite this article

Panchal, H.D., Shah, H.B. Video tampering dataset development in temporal domain for video forgery authentication. Multimed Tools Appl 79, 24553–24577 (2020). https://doi.org/10.1007/s11042-020-09205-w


DOI: https://doi.org/10.1007/s11042-020-09205-w