Abstract

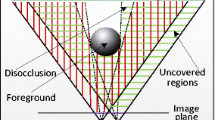

Depth-image-based rendering (DIBR) is an efficient way to produce content for 3D video and free viewpoint video. However, background regions that are occluded by foreground objects in the reference view may become visible in the synthesized view, which produces disocclusions. This paper proposes a disocclusion-type aware hole filling method to handle them. Disocclusions are divided into two types according to the depth values of their boundary pixels: foreground-background (FG-BG) disocclusions and background-background (BG-BG) disocclusions. For FG-BG disocclusions, the depth values of the associated pixels are optimized in the reference image to ensure that ghosts are removed and to adaptively divide the disocclusion into several small holes. For BG-BG disocclusions, a foreground removal method removes the corresponding foreground objects, and the removed regions are filled with the surrounding background textures so that the BG-BG disocclusions in the synthesized image are eliminated. Experimental results indicate that the proposed method outperforms the compared methods in both objective and subjective evaluations.
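The classification by boundary depth can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not give the decision rule, so the row-wise scan, the `None` hole marker, the `threshold` value, and the function name `classify_row_holes` are all assumptions made for illustration. The idea shown is only that a hole whose two boundary pixels have very different depths lies between foreground and background, while a hole whose boundary pixels have similar depths lies between two background regions.

```python
from typing import List, Optional, Tuple

HOLE = None  # hypothetical marker for pixels that received no depth after warping


def classify_row_holes(
    depth_row: List[Optional[int]], threshold: int = 20
) -> List[Tuple[int, int, str]]:
    """Label each run of hole pixels in one row of a warped depth map.

    Returns (start, end_exclusive, label) tuples. The label is "FG-BG" when
    the depths on the two sides of the hole differ by more than `threshold`
    (an assumed value), "BG-BG" when they are similar, and "border" when the
    hole touches the image edge and so has only one boundary pixel.
    """
    results = []
    i, n = 0, len(depth_row)
    while i < n:
        if depth_row[i] is not HOLE:
            i += 1
            continue
        start = i
        # Advance to the first valid pixel after the hole.
        while i < n and depth_row[i] is HOLE:
            i += 1
        left = depth_row[start - 1] if start > 0 else None
        right = depth_row[i] if i < n else None
        if left is None or right is None:
            label = "border"
        elif abs(left - right) > threshold:
            label = "FG-BG"
        else:
            label = "BG-BG"
        results.append((start, i, label))
    return results


row = [200, 200, None, None, 40, 40, None, 45, 45]
print(classify_row_holes(row))
```

For the sample row, the first hole is bounded by depths 200 and 40 and is labelled FG-BG, while the second is bounded by 40 and 45 and is labelled BG-BG. A real pipeline would operate on full 2D depth maps and would likely need a more robust boundary test than a single fixed threshold.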

Acknowledgments

The authors would like to thank the Interactive Visual Media Group at Microsoft Research for making the MSR 3D Video Dataset publicly available.

Funding

This work was funded by the National Major Project of Scientific and Technical Supporting Programs of China during the 13th Five-year Plan Period (grant numbers 2017YFC0109702, 2017YFC0109901, and 2018YFC0116202).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Chen, X., Liang, H., Xu, H. et al. Disocclusion-type aware hole filling method for view synthesis. Multimed Tools Appl 80, 11557–11581 (2021). https://doi.org/10.1007/s11042-020-10196-x

DOI: https://doi.org/10.1007/s11042-020-10196-x