Abstract

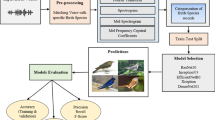

The classification of birdsong is important for monitoring bird populations in their habitats. To handle birdsong datasets with complex and diverse audio backgrounds, this paper introduces Chroma, an acoustic feature used in speech and music analysis. Chroma is concatenated and fused with the features commonly used for birdsong, the Log-Mel Spectrogram (LM) and Mel Frequency Cepstral Coefficients (MFCC), to enrich the representational capacity of any single feature. In addition, because birdsong changes continuously and dynamically over time, a combined 3DCNN-LSTM model is proposed as the classifier to make the network more sensitive to time-varying birdsong information. We selected four sets of bird audio data from the Xeno-Canto website to evaluate which fusion of LM, MFCC and Chroma captures the most birdsong audio information. The experimental results show that the LM-MFCC-C feature combination achieves the best result, a mean average precision (mAP) of 97.9%.
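
The abstract reports results as mean average precision (mAP) but does not spell out the computation. A common definition for multi-class clip classification, assumed here rather than taken from the paper, ranks all clips by their score for each class, computes the average precision (AP) of that ranking one-vs-rest, and then averages AP over classes. The sketch below implements that assumption in plain Python; the function names `average_precision` and `mean_average_precision` are illustrative, not from the paper.

```python
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AP for one class: mean of precision@k over the ranks k of the positives.

    `scores` are classifier scores for this class; `labels` are 1 for clips
    that belong to the class and 0 otherwise. Tied scores keep input order
    (Python's sort is stable even with reverse=True).
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precision_sum += hits / rank  # precision at this positive's rank
    if hits == 0:
        raise ValueError("class has no positive examples")
    return precision_sum / hits


def mean_average_precision(
    score_matrix: Sequence[Sequence[float]], true_classes: Sequence[int]
) -> float:
    """Assumed mAP: macro-average of one-vs-rest AP across all classes.

    score_matrix[i][c] is the score of clip i for class c;
    true_classes[i] is the index of clip i's true class.
    """
    n_classes = len(score_matrix[0])
    aps = []
    for c in range(n_classes):
        scores = [row[c] for row in score_matrix]
        labels = [1 if t == c else 0 for t in true_classes]
        aps.append(average_precision(scores, labels))
    return sum(aps) / n_classes


if __name__ == "__main__":
    # Four hypothetical clips, two classes; each class has one clip ranked
    # below a negative, so each per-class AP is (1 + 2/3) / 2.
    scores = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]
    truth = [0, 1, 1, 0]
    print(round(mean_average_precision(scores, truth), 4))
```

When no scores are tied, this non-interpolated AP matches the step-wise definition used by common toolkits; with ties, implementations that treat tied scores as a single threshold can give slightly different values.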

Funding

This work was supported in part by the Scientific Innovation Fund for Post-graduates of Central South University of Forestry and Technology (CX20192014) and the Hunan Key Laboratory of Intelligent Logistics Technology (2019TP1015).

Cite this article

Yan, N., Chen, A., Zhou, G. et al. Birdsong classification based on multi-feature fusion. Multimed Tools Appl 80, 36529–36547 (2021). https://doi.org/10.1007/s11042-021-11396-9

DOI: https://doi.org/10.1007/s11042-021-11396-9