Abstract

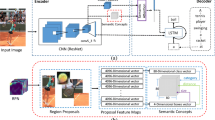

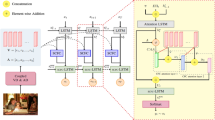

Transformer-based architectures have shown encouraging results in image captioning. They typically use self-attention to establish the semantic association between objects in an image when predicting a caption. However, when the appearance features of a candidate object and a query object show only weak dependence, self-attention struggles to capture the semantic association between them. This paper proposes a Semantic Association Enhancement Transformer to address this challenge. First, an Appearance-Geometry Multi-Head Attention is introduced to model a visual relationship by integrating the geometry and appearance features of the objects. The visual relationship characterizes the semantic association and relative position among the objects. Second, a Visual Relationship Improving module weighs the importance of the query object's appearance and geometry features to the modeled visual relationship. The visual relationship among objects is then adaptively refined according to these importance weights, particularly for objects with weak dependence on appearance features, thereby enhancing their semantic association. Extensive experiments on the MS COCO dataset demonstrate that the proposed method outperforms state-of-the-art methods.
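To make the idea in the abstract more concrete, the sketch below combines an appearance-based attention score with a score derived from relative box geometry, and lets a per-query gate balance the two. It is a minimal single-head NumPy sketch under stated assumptions, not the formulation used in the paper: the relative-geometry encoding (log-scaled center offsets and size ratios), the randomly initialized weights `W_q`, `W_k`, `w_geo`, and `w_gate`, the sigmoid gate, and the function names are all hypothetical choices made for illustration.

```python
import numpy as np


def relative_geometry(boxes):
    """Pairwise relative-position features for boxes given as (cx, cy, w, h).

    Returns an array of shape (n, n, 4). The log-scaled encoding is an
    assumption of this sketch, not taken from the paper.
    """
    cx, cy, w, h = (boxes[:, i] for i in range(4))
    eps = 1e-6  # keeps log() finite when two centers coincide
    dx = np.log(np.abs(cx[:, None] - cx[None, :]) / w[:, None] + eps)
    dy = np.log(np.abs(cy[:, None] - cy[None, :]) / h[:, None] + eps)
    dw = np.log(w[None, :] / w[:, None])
    dh = np.log(h[None, :] / h[:, None])
    return np.stack([dx, dy, dw, dh], axis=-1)


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def appearance_geometry_attention(feats, boxes, rng):
    """Single-head attention mixing appearance and geometry scores.

    feats: (n, d) appearance features, boxes: (n, 4) as (cx, cy, w, h).
    All weights are random stand-ins for learned parameters (hypothetical).
    """
    n, d = feats.shape
    W_q = rng.standard_normal((d, d)) / np.sqrt(d)
    W_k = rng.standard_normal((d, d)) / np.sqrt(d)
    w_geo = rng.standard_normal(4)
    w_gate = rng.standard_normal(d) / np.sqrt(d)

    q = feats @ W_q
    k = feats @ W_k
    app_scores = q @ k.T / np.sqrt(d)               # (n, n) appearance term
    geo_scores = relative_geometry(boxes) @ w_geo   # (n, n) geometry term

    # Per-query gate in (0, 1): how much this query relies on appearance
    # versus geometry (an assumed stand-in for the importance weighting).
    gate = 1.0 / (1.0 + np.exp(-(q @ w_gate)))      # (n,)
    scores = gate[:, None] * app_scores + (1.0 - gate[:, None]) * geo_scores

    weights = softmax(scores, axis=-1)              # each row sums to 1
    return weights @ feats, weights


# Example with three hypothetical detected regions.
rng = np.random.default_rng(0)
feats = rng.standard_normal((3, 8))
boxes = np.array([[0.2, 0.3, 0.1, 0.2],
                  [0.6, 0.5, 0.3, 0.3],
                  [0.4, 0.8, 0.2, 0.1]])
out, weights = appearance_geometry_attention(feats, boxes, rng)
print(out.shape, weights.shape)
```

With a gate close to zero, a query whose appearance features are only weakly related to the other objects would still attend according to relative position, which is the intuition behind adding a geometry term; how the paper actually constructs and applies its importance weights may differ from this gate.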

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants (61925201, 62132001, U21B2025, 62020106004, and 92048301) and the National Key R&D Program of China (2018YFB1305200).

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest.

Additional information

Human participants or animals

This article does not contain any studies with human participants or animals performed by any of the authors.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Jia, X., Wang, Y., Peng, Y. et al. Semantic association enhancement transformer with relative position for image captioning. Multimed Tools Appl 81, 21349–21367 (2022). https://doi.org/10.1007/s11042-022-12776-5

DOI: https://doi.org/10.1007/s11042-022-12776-5