Abstract

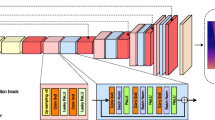

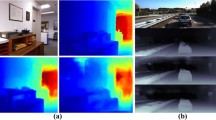

Monocular 360° depth estimation is an important and challenging task in computer vision and 3D vision. Recently, deep neural networks have shown a strong ability to estimate depth maps from a single 360° image. However, existing 360° depth estimation networks are typically supervised only with well-exposed 360° images and their corresponding depth maps. Because they ignore under-exposed and over-exposed images, they produce low-quality depth maps and generalize poorly. In this paper, we train an improved convolutional neural network, URectNet, with a distortion-weighted loss function to learn high-quality depth maps from multi-exposure 360° images. First, we insert skip connections into a baseline 360° depth estimation network to improve its performance. Then, we design a distortion-weighted loss function to counteract the distortion of 360° images during network training. Owing to the scarcity of 360° multi-exposure images, we render a 360° multi-exposure dataset from Matterport3D for network training; increasing the dynamic range of the training images in this way enhances the generalization capability of the network. Finally, extensive experiments and ablation studies compare our method with existing state-of-the-art algorithms and show that it achieves favorable performance.
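
The abstract does not give the form of the distortion-weighted loss. One common way to compensate for equirectangular distortion, assumed here purely for illustration, is to weight each image row by the cosine of its latitude, because that is proportional to the solid angle a pixel in that row covers on the sphere; the stretched rows near the poles then count for less. The following is a minimal NumPy sketch of that idea applied to an L1 depth error. The function names, the L1 base loss, the equirectangular layout and the convention that zero marks missing ground-truth depth are all hypothetical choices, not URectNet's published loss.

```python
import numpy as np


def latitude_weights(height: int) -> np.ndarray:
    """Per-row weights for an equirectangular image with `height` rows.

    Row i is centred at latitude phi_i = pi/2 - (i + 0.5) * pi / height.
    The solid angle covered by a pixel in that row is proportional to
    cos(phi_i), so heavily stretched rows near the poles get small weights.
    """
    rows = np.arange(height)
    phi = np.pi / 2 - (rows + 0.5) * np.pi / height
    return np.cos(phi)


def distortion_weighted_l1(pred: np.ndarray, gt: np.ndarray) -> float:
    """Latitude-weighted mean absolute depth error (hypothetical loss).

    pred, gt: (H, W) depth maps in equirectangular layout. Pixels with
    gt <= 0 are treated as missing ground truth and ignored (assumed convention).
    """
    if pred.shape != gt.shape:
        raise ValueError("pred and gt must have the same shape")
    h, w = gt.shape
    # Broadcast the per-row weights across every column of the map.
    weights = np.broadcast_to(latitude_weights(h)[:, None], (h, w))
    valid = gt > 0
    if not valid.any():
        return 0.0
    err = np.abs(pred - gt)
    # Normalise by the total weight so the loss stays on the scale of a mean.
    return float((weights[valid] * err[valid]).sum() / weights[valid].sum())
```

For example, on a 4 × 8 map whose only error is a +1 offset along the top (polar) row, this weighted loss is (2 − √2)/4 ≈ 0.146, whereas the plain mean absolute error is 0.25: the polar row, which covers little of the sphere, is down-weighted.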

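The abstract states that the multi-exposure dataset is rendered from Matterport3D but does not describe the rendering step. As a hedged illustration only, the sketch below derives under- and over-exposed 8-bit frames from a linear-radiance image with a simple camera model: scale by 2^EV, clip at the white level, then apply a gamma curve. The stop values, the gamma of 2.2, the function name and the assumption that a linear-radiance input is available are hypothetical, not the authors' pipeline.

```python
import numpy as np


def simulate_exposures(radiance, stops=(-2.0, -1.0, 0.0, 1.0, 2.0), gamma=2.2):
    """Render one 8-bit LDR image per exposure stop from linear radiance.

    radiance: float array (H, W, 3) of non-negative linear values, where
    1.0 is taken as the white level at 0 EV (a hypothetical convention).
    Returns a dict mapping each stop to a uint8 image of the same shape.
    """
    radiance = np.asarray(radiance, dtype=np.float64)
    if (radiance < 0).any():
        raise ValueError("radiance must be non-negative")
    images = {}
    for ev in stops:
        exposed = radiance * (2.0 ** ev)      # +1 EV doubles the light, -1 EV halves it
        clipped = np.clip(exposed, 0.0, 1.0)  # saturate at the white level
        encoded = clipped ** (1.0 / gamma)    # simple gamma in place of a real camera response
        images[ev] = np.round(encoded * 255.0).astype(np.uint8)
    return images
```

Each frame would be paired with the same ground-truth depth map, so the network sees one scene under several exposures. Raising the stop saturates more pixels to 255, and lowering it pushes more of them toward black.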

References

Chang A, Dai A, Funkhouser T, et al (2017) Matterport3D: learning from RGB-D data in indoor environments. arXiv preprint arXiv:1709.06158

de La Garanderie GP, Abarghouei AA, Breckon TP (2018) Eliminating the blind spot: adapting 3D object detection and monocular depth estimation to 360 panoramic imagery. In: Proceedings of the European Conference on Computer Vision (ECCV), pp 789–807

Eder M, Moulon P, Guan L (2019) Pano Popups: indoor 3D reconstruction with a plane-aware network. In: 2019 International Conference on 3D Vision (3DV). IEEE, pp 76–84

Eigen D, Fergus R (2015) Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In: Proceedings of the IEEE International Conference on Computer Vision, pp 2650–2658

Eigen D, Puhrsch C, Fergus R (2014) Depth map prediction from a single image using a multi-scale deep network. arXiv preprint arXiv:1406.2283

Endo Y, Kanamori Y, Mitani J (2017) Deep reverse tone mapping. ACM Trans Graph 36(6), Article 177

Fernandez-Labrador C, Facil JM, Perez-Yus A, Demonceaux C, Civera J, Guerrero JJ (2020) Corners for layout: end-to-end layout recovery from 360 images. IEEE Robot Autom Lett 5(2):1255–1262

Garg R, Bg VK, Carneiro G, et al (2016) Unsupervised CNN for single view depth estimation: geometry to the rescue. In: European Conference on Computer Vision. Springer, Cham, pp 740–756

Glorot X, Bengio Y (2010) Understanding the difficulty of training deep feedforward neural networks. In: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics. JMLR Workshop and Conference Proceedings, pp 249–256

Godard C, Mac Aodha O, Brostow GJ (2017) Unsupervised monocular depth estimation with left-right consistency. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 270–279

Hartley R, Zisserman A (2000) Multiple view geometry in computer vision, 2nd edn. Cambridge University Press

He K, Zhang X, Ren S, et al (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 770–778

Huang J, Chen Z, Ceylan D, et al (2017) 6-DOF VR videos with a single 360-camera. In: 2017 IEEE Virtual Reality (VR). IEEE, pp 37–44

Im S, Ha H, Rameau F, et al (2016) All-around depth from small motion with a spherical panoramic camera. In: European Conference on Computer Vision. Springer, Cham, pp 156–172

Jin L, Xu Y, Zheng J, et al (2020) Geometric structure based and regularized depth estimation from 360 indoor imagery. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 889–898

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980

Kuznietsov Y, Stuckler J, Leibe B (2017) Semi-supervised deep learning for monocular depth map prediction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 6647–6655

Laina I, Rupprecht C, Belagiannis V, et al (2016) Deeper depth prediction with fully convolutional residual networks. In: 2016 Fourth International Conference on 3D Vision (3DV). IEEE, pp 239–248

Liao K, Lin C, Zhao Y, Xu M (2020) Model-free distortion rectification framework bridged by distortion distribution map. IEEE Trans Image Process 29:3707–3718

Liao K, Lin C, Zhao Y (2021) A deep ordinal distortion estimation approach for distortion rectification. IEEE Trans Image Process 30:3362–3375

Mahjourian R, Wicke M, Angelova A (2018) Unsupervised learning of depth and ego-motion from monocular video using 3D geometric constraints. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 5667–5675

Pagani A, Stricker D (2011) Structure from motion using full spherical panoramic cameras. In: 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops). IEEE, pp 375–382

Ronneberger O, Fischer P, Brox T (2015) U-Net: convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Cham, pp 234–241

Saxena A, Sun M, Ng AY (2008) Make3D: learning 3D scene structure from a single still image. IEEE Trans Pattern Anal Mach Intell 31(5):824–840

Su YC, Grauman K (2017) Learning spherical convolution for fast features from 360° imagery. In: Advances in Neural Information Processing Systems (NIPS) 30

Su YC, Grauman K (2019) Kernel transformer networks for compact spherical convolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 9442–9451

Tateno K, Navab N, Tombari F (2018) Distortion-aware convolutional filters for dense prediction in panoramic images. In: Proceedings of the European Conference on Computer Vision (ECCV), pp 707–722

Wang C, Buenaposada JM, Zhu R, et al (2018) Learning depth from monocular videos using direct methods. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 2022–2030

Wang FE, Hu HN, Cheng HT, et al (2018) Self-supervised learning of depth and camera motion from 360° videos. In: Asian Conference on Computer Vision. Springer, Cham, pp 53–68

Wang FE, Yeh YH, Sun M, et al (2020) BiFuse: monocular 360 depth estimation via bi-projection fusion. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 462–471

Wang NH, Solarte B, Tsai YH, et al (2020) 360SD-Net: 360° stereo depth estimation with learnable cost volume. In: 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, pp 582–588

Yin Z, Shi J (2018) GeoNet: unsupervised learning of dense depth, optical flow and camera pose. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 1983–1992

Zeng W, Karaoglu S, Gevers T (2020) Joint 3D layout and depth prediction from a single indoor panorama image. In: European Conference on Computer Vision. Springer, Cham, pp 666–682

Zhou T, Brown M, Snavely N, et al (2017) Unsupervised learning of depth and ego-motion from video. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 1851–1858

Zioulis N, Karakottas A, Zarpalas D, et al (2018) OmniDepth: dense depth estimation for indoors spherical panoramas. In: Proceedings of the European Conference on Computer Vision (ECCV), pp 448–465

Zioulis N, Karakottas A, Zarpalas D, et al (2019) Spherical view synthesis for self-supervised 360 depth estimation. In: 2019 International Conference on 3D Vision (3DV). IEEE, pp 690–699

Acknowledgements

This work was supported by the Natural Science Foundation of Jilin of China (20190201255JC) and the Key Science and Technology Project of Jilin Province (20180201069GX).

Ethics declarations

Conflict of interests

The authors declare no conflict of interest.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xu, C., Yang, H., Han, C. et al. Learning high-quality depth map from 360° multi-exposure imagery. Multimed Tools Appl 81, 35965–35980 (2022). https://doi.org/10.1007/s11042-022-13340-x

DOI: https://doi.org/10.1007/s11042-022-13340-x