Abstract

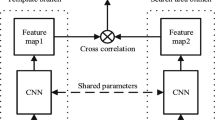

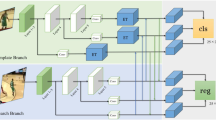

Existing Siamese-based trackers handle target deformation and occlusion by introducing online updates. However, these trackers still suffer from model drift, caused by cumulative errors in the tracking results and the lack of a suitable model update strategy. To solve this problem, we propose an online bionic visual Siamese tracking framework based on a mixed time-event triggering mechanism. In this framework, the bionic vision network introduces a receptive field block and BlurPool, which improve the quality of feature extraction while maintaining the translation invariance of the convolutional neural network. The former uses dilated convolution kernels with different dilation rates to fuse deep features, which effectively enlarges the receptive field of the network. The latter applies low-pass filtering for anti-aliasing before downsampling, reducing the negative impact of the downsampling operation on the generalization ability of the network. In addition, to enable the model to capture target appearance variations effectively, we design a template update strategy driven by the mixed time-event triggering mechanism. The strategy evaluates the quality of tracking results with a quality assessment model and, guided by the mixed time-event triggering mechanism, adaptively performs a weighted fusion of the fixed and mutative templates. Extensive experiments on the OTB100, VOT2016, VOT2018, UAV123, and GOT-10k benchmarks show that the proposed tracker outperforms the baseline tracker and achieves state-of-the-art performance.
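As a rough illustration of two ideas named in the abstract, the following minimal NumPy sketch shows (a) low-pass filtering before downsampling and (b) a template update gated by a combined time and event trigger. It is not the authors' implementation. The function names, the `[1, 2, 1] / 4` blur kernel, the update interval, both thresholds, the choice of a high quality score as the "event", and the use of the quality score as the fusion weight are all assumptions made for illustration only.

```python
import numpy as np


def blur_pool_1d(x, stride=2):
    """Low-pass filter a 1-D signal with a [1, 2, 1] / 4 kernel, then subsample."""
    kernel = np.array([1.0, 2.0, 1.0]) / 4.0  # assumed binomial blur kernel
    padded = np.pad(np.asarray(x, dtype=float), 1, mode="reflect")
    blurred = np.convolve(padded, kernel, mode="valid")  # same length as x
    return blurred[::stride]


def should_update(frame_idx, quality, interval=5, event_thresh=0.9, min_quality=0.6):
    """Hypothetical mixed trigger (all thresholds are illustrative assumptions).

    Time trigger: every `interval` frames, if the result is at least acceptable.
    Event trigger: at any frame, if the quality score is very high.
    """
    time_trigger = frame_idx % interval == 0 and quality >= min_quality
    event_trigger = quality >= event_thresh
    return time_trigger or event_trigger


def fuse_templates(fixed, mutative, quality):
    """Weighted fusion; the mutative template's weight is the clipped quality score."""
    w = float(np.clip(quality, 0.0, 1.0))
    return (1.0 - w) * fixed + w * mutative


# Anti-aliasing demo: an impulse and the same impulse shifted by one sample.
impulse = np.array([0.0, 0.0, 4.0, 0.0, 0.0, 0.0])
shifted = np.roll(impulse, 1)
naive = (impulse[::2].sum(), shifted[::2].sum())  # plain stride-2 subsampling
smooth = (blur_pool_1d(impulse).sum(), blur_pool_1d(shifted).sum())
```

In this toy case, plain stride-2 subsampling keeps the impulse for one input and drops it entirely for the shifted input, whereas the blurred version retains the same total response for both. That is the sense in which filtering before downsampling makes the output less sensitive to small translations. The trigger and fusion functions only show how a quality score could gate and weight a template update; the actual quality assessment model and fusion rule are defined in the paper itself.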

Data Availability

The raw/processed data required to reproduce these findings cannot be shared at this time as the data also form part of an ongoing study.

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grants 61873246, 62072416, 62006213, and 62102373; the Program for Science & Technology Innovation Talents in Universities of Henan Province (21HASTIT028); the Natural Science Foundation of Henan (202300410495); and the Key Scientific Research Projects of Colleges and Universities in Henan Province (21A120010).

Ethics declarations

Conflict of Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, H., Zhang, Z., Zhang, J. et al. Online bionic visual siamese tracking based on mixed time-event triggering mechanism. Multimed Tools Appl 82, 15199–15222 (2023). https://doi.org/10.1007/s11042-022-13930-9

DOI: https://doi.org/10.1007/s11042-022-13930-9